A. van Ooyen & B. Nienhuis

Biological Cybernetics (1993) 70: 47-53

A. van Ooyen: Netherlands Institute for Brain Research,

Meibergdreef 33, 1105 AZ Amsterdam, The Netherlands.

B. Nienhuis: Institute for Theoretical Physics, University of Amsterdam,

Valckenierstraat 65, 1018 XE Amsterdam, The Netherlands.

We demonstrate that equipping the neurons of Fukushima's Neocognitron with the phenomenon that a neuron decreases its activity when repeatedly stimulated (adaptation) markedly improves the pattern discriminatory power of the network. By means of adaptation, circuits for extracting discriminating features develop preferentially. In the original neocognitron, in contrast, features shared by different patterns are preferentially learned, as connections required for extracting them are more frequently reinforced.

In most neural network models, the individual units (neurons) are over-simplified compared with biotic neurons. Usually, only the very basic input-output properties are considered. In the simplest case, each unit can be in two states only: either it is at rest or it fires at its maximum frequency.

The other extreme is formed by network models in which one attempts to model the constituent neurons as realistically as possible, even down to the level of ionic conductances in different neuron compartments (e.g., Traub, 1982). Because of the complexity of such models, it is usually not attempted to pinpoint the role the various neuronal properties play in the behaviour of the network. In general, such models are too complicated to be of much help in answering the question of how various neuronal properties might contribute to the information processing power of neural networks. In order to establish this, one should work with series of models of varying complexity. The importance of particular properties can then be established by adding (or removing) them from the model (see De Boer, 1989).

In this paper, we focus on one such neuronal property, namely adaptation, and study what role it could play in pattern recognition. The term adaptation is used here to describe the phenomenon that a neuron becomes gradually less responsive when repeatedly stimulated, reflected by a decrease in its firing frequency. For example, neurons in the inferior temporal cortex in monkeys give strong responses to visual stimuli that are new or that have not recently been seen (Rolls et al., 1989; Miller et al., 1991). Possible mechanisms for adaptation include an increasing potassium conductance as a result of calcium influx during sustained depolarization (Meech, 1978; Connors et al., 1982). In Desimone (1992) adaptation or adaptive filtering is mentioned as one of the neuronal mechanisms that may play a role in the formation of memory traces.

In order to study how adaptation could contribute to pattern recognition, we have incorporated it into the neocognitron, which is a multi-layered feedforward neural network model for visual pattern recognition (Fukushima, 1980; Fukushima and Miyake, 1982; Miyake and Fukushima, 1984; Fukushima, 1988; Johnson et al., 1988; Menon and Heinemann, 1988; Fukushima, 1989). The neocognitron has the capacity to carry out translation and size invariant pattern recognition (but see Barnard and Casasent, 1990), and is trained by non-supervised learning. The learning mechanism employed is a type of competitive learning (networks based on competitive learning can be found in e.g., Kohonen, 1984; Carpenter and Grossberg, 1987; and Reilly et al., 1982). After the learning process is completed, the model has a hierarchical structure in which simple features are combined step by step into more complicated features. The tuning of the network can be complicated because of the large number of parameters. Recently it has been shown that the model can be greatly simplified while preserving its interesting properties of self-organization and tolerance of image deformations (Trotin et al., 1991). The neocognitron can be used for various practical applications (e.g., Fukushima, 1988; Fukushima and Imagawa, 1993).

The organization of this article is as follows. First, the original neocognitron will be described. Next, the modified version will be given in which adaptation is incorporated. The effects of adaptation on the development of feature detecting circuits will then be described. Subsequently, the performance of the network, with and without adaptation,

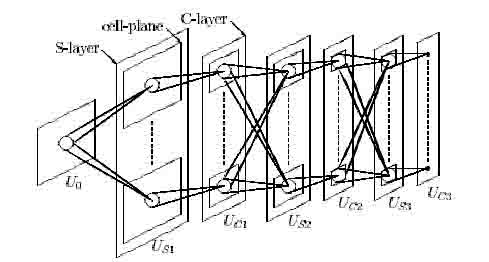

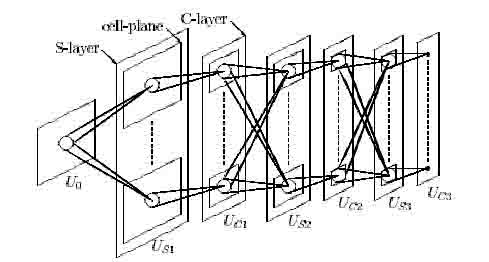

Figure 1: Schematic diagram illustrating the structure of the Neocognitron. After Fukushima (1980).

will be compared in simulation experiments.

In this Section we will briefly describe the structure of the network (after learning is completed) and the self-organization (learning) of the network. For a more detailed description the reader is referred to Fukushima and Miyake (1982) and the Appendix.

After completion of the learning process, the neocognitron has a structure in which cells in the lowest level extract local features of the input pattern, while cells in each succeeding level respond to specific combinations of the features detected in the preceding level. In the highest level, each cell will respond to only one input pattern, i.e., the pattern is recognized. This structure is similar to the hierarchical model of the visual system proposed by Hubel and Wiesel (1962, 1965).

The input layer is composed of a two-dimensional array of receptor cells. Each of the succeeding levels consists of a layer of excitatory S-cells (S-layer) followed by a layer of excitatory C-cells (C-layer; see Fig. 1). Each C-layer also contains inhibitory V-cells (not shown in the figure). An S-cell receives excitatory connections from a certain group of C-cells in the preceding level. An S-cell also receives an inhibitory connection from a V-cell, which in turn receives fixed excitatory connections from the same group of C-cells as does the S-cell to which it projects. After the learning stage is finished, S-cells extract features from the input patterns. Within their respective layers, S-cells and C-cells are divided into cell-planes. All the cells in such a cell-plane extract the same feature but from different positions of the input layer, while different cell-planes extract different features. Each C-cell receives signals from a group of preceding S-cells, all of which extract identical features but from slightly different positions. A C-cell will be activated if at least one of these S-cells is active, and is therefore less sensitive to positional shifts of the input pattern than is an S-cell. The size of cell-planes in both S- and C-layer decreases with

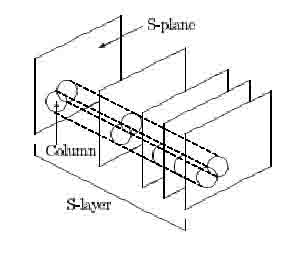

Figure 2: Hypercolumns within an S-layer. After Fukushima (1980).

the order of the level, and in the highest level each cell-plane in the C-layer has only one C-cell which responds, if the learning has been successful, to only one particular input pattern.

Only the excitatory and inhibitory connections to S-cells are variable. To modify them, maximum-output cells (MOCs) are chosen in the following way. They are selected from each S-layer every time a stimulus pattern is presented. In an S-layer, from a group of cells that extract features within a small area on the input layer (such a group is called a hypercolumn, see Fig. 2), the cell with the largest positive response is chosen as a candidate for the MOC. This is repeated for different such hypercolumns, each of which receives signals from different areas of the input layer. If more than one candidate has been chosen in the same cell-plane, the one with the largest response is selected. All the MOCs from different hypercolumns have their input connections, both excitatory and inhibitory, reinforced. The amount of reinforcement is proportional to the signal strength through a connection, so that the excitatory connections will grow to match the response in the preceding layer (i.e., the S-cell will detect the feature that has been presented). All the other S-cells in the cell-plane from which the MOC has been selected have their connections reinforced in the same way as the MOC, so that they all come to respond to the same feature but extracted from different positions of the input layer. When a novel feature is presented to the same S-cell, the inhibitory input from the V-cell is stronger than the excitatory input, as the excitatory connections do not optimally match this feature. Usually, an S-cell in another cell-plane is now MOC and has its connections reinforced. Thus, S-cells in different cell-planes come to respond to different features. This process of selecting MOCs and reinforcing connections is repeated for each level of the network and for each time an input pattern is presented.
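The selection of maximum-output cells described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used for the simulations: the function name `select_mocs`, the nested-list layout of the responses, and the tiling of each cell-plane into non-overlapping square hypercolumns of side `column_size` are all assumptions made for the example.

```python
def select_mocs(responses, column_size):
    """Pick at most one maximum-output cell (MOC) per cell-plane.

    responses   -- responses[k][r][c] is the output of the S-cell at
                   position (r, c) in cell-plane k (nested lists of floats)
    column_size -- side of a hypercolumn (assumed square, non-overlapping)
    Returns a dict {plane index: (row, col, response)}.
    """
    n_planes = len(responses)
    height = len(responses[0])
    width = len(responses[0][0])
    mocs = {}
    for r0 in range(0, height, column_size):
        for c0 in range(0, width, column_size):
            # Candidate: the strongest positive response in this hypercolumn,
            # searched over all cell-planes and all positions it covers.
            best = None
            for k in range(n_planes):
                for r in range(r0, min(r0 + column_size, height)):
                    for c in range(c0, min(c0 + column_size, width)):
                        value = responses[k][r][c]
                        if value > 0 and (best is None or value > best[3]):
                            best = (k, r, c, value)
            if best is None:
                continue  # no cell in this hypercolumn responded
            k, r, c, value = best
            # Several candidates in the same cell-plane: keep the strongest.
            if k not in mocs or value > mocs[k][2]:
                mocs[k] = (r, c, value)
    return mocs
```

Each returned cell would then have its input connections reinforced, and the same reinforcement would be copied to all other cells of its cell-plane.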

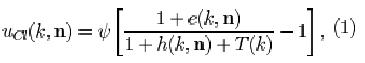

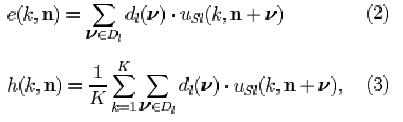

In the original neocognitron, circuits detecting features common among a set of input patterns develop in preference to those detecting rare or unique features. S-cells responding to common features will have their input connections more often reinforced than other S-cells, and will therefore get higher responses. Cells in succeeding levels will preferentially have those input connections reinforced that come from cells which are frequently activated and which have high levels of activity. Hence, strong connections will develop to such cells, which detect, however, the least discriminating features for pattern recognition. Consequently, the information passed on to higher levels will not always be specific enough for the C-cells in the highest level to respond selectively to only one particular input pattern. In what follows, we will show that neuronal adaptation, i.e., a cell becoming less responsive when frequently stimulated, improves pattern selectivity. Adaptation is implemented by modifying the response characteristic of the C-cells (Eq. 8 in Appendix), which by adding only one term to the denominator becomes

where e(k, n) and h(k, n) are defined as the excitatory and inhibitory effect, respectively (for the explanation of the other symbols, see Appendix):

and $T(k)$ is a variable controlling the degree of adaptation. At a given time, all the C-cells of the $k$-th cell-plane of the $l$-th level have the same degree of adaptation. The greater the value of $T$, the more adapted a C-cell is and the weaker its response to the feature which it detects via the S-cells in the preceding layer. The first term in the argument of $\psi$ (Eq. 1) can be thought of as the membrane potential of the neuron, which is raised by excitatory inputs and shunted by lateral inhibition and adaptation. The term $u_{Cl}$ represents the firing frequency; it is proportional to the difference between membrane potential and resting potential, the latter being represented by the second term in the argument of $\psi$.
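The description above can be made concrete with a short Python sketch. The exact form of the response is an assumption inferred from the text (a shunting ratio with one extra term $T$ in the denominator, minus a resting level of 1, passed through the saturating function $\psi$ of the Appendix); the function names are hypothetical.

```python
def psi(x, alpha):
    """Saturating output function: x / (alpha + x) for x > 0, otherwise 0."""
    return x / (alpha + x) if x > 0 else 0.0


def c_cell_response(e, h, T, alpha):
    """Assumed form of the adapted C-cell response.

    e -- excitatory effect, h -- inhibitory effect,
    T -- degree of adaptation, alpha -- degree of saturation.
    """
    membrane = (1.0 + e) / (1.0 + h + T)  # raised by e, shunted by h and T
    resting = 1.0                          # second term in the argument of psi
    return psi(membrane - resting, alpha)
```

Under this assumed form, the response falls as $T$ grows and vanishes once $T$ reaches $e - h$.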

Before giving the update rule for $T$, we rewrite Eq. 1 as

Each time an input pattern is presented to the network, T(k) is updated by the following amount:

where $T(k)$ is the degree of adaptation of all the C-cells in the $k$-th cell-plane of the $l$-th level; $g_l$ controls the speed of adaptation in the $l$-th level; $p_l$ determines the maximum value of $T(k)$ under sustained stimulation; $e_m(k)$ is the maximum value of $e(k, n)$ found among the C-cells of the $k$-th cell-plane; $h_m(k)$ is the maximum value of $h(k, n)$; $\varphi[x] = x$ if $x > 0$ and $\varphi[x] = 0$ otherwise.

Eqs. 4 and 5 have the following implications. Consider the first level of the network (i.e., $l = 1$), which extracts simple features from the input pattern. Suppose that the feature detected by cell-plane $k$ is present in all input patterns, and that at least one of the C-cells in the $k$-th cell-plane is activated each time an input pattern is presented, i.e., $\varphi[e_m(k) - h_m(k)]$ is always greater than zero ($T(k) = 0$ initially). Further assume that $\varphi[e_m(k) - h_m(k)]$ is the same for each input pattern. Then, the value of $T(k)$ will increase until it equals $p_l\,\varphi[e_m(k) - h_m(k)]$, and the response of all C-cells in the $k$-th cell-plane becomes strongly depressed. If $p_l = 1$, the response will become zero.
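The convergence argument can be checked numerically. The sketch below assumes that the update has the form $\Delta T = g\,(p\,\varphi[e_m - h_m] - T)$, which is inferred from the surrounding description rather than copied from the equation; the function names and the drive values 0.8 and 0.2 are made up for the example, while $g = 0.05$ and $p = 1.0$ are the first-level values quoted in the simulation section.

```python
def phi(x):
    """Half-wave rectification: x for x > 0, otherwise 0."""
    return x if x > 0 else 0.0


def update_adaptation(T, e_max, h_max, g, p):
    """One presentation: move T a fraction g towards p * phi(e_max - h_max)."""
    return T + g * (p * phi(e_max - h_max) - T)


def adapt_to_constant_feature(n_presentations, e_max=0.8, h_max=0.2,
                              g=0.05, p=1.0):
    """Degree of adaptation after repeated, identical stimulation from T = 0."""
    T = 0.0
    for _ in range(n_presentations):
        T = update_adaptation(T, e_max, h_max, g, p)
    return T
```

With these values `adapt_to_constant_feature(n)` rises monotonically and approaches $p\,\varphi[e_m - h_m] = 0.6$ as `n` grows; when the feature is absent the same rule makes $T$ decay back towards zero.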

Now consider a cell-plane responding to a feature that occurs only in a subset of the input patterns. If a pattern containing this feature is presented, and the cell-plane is activated by it, adaptation will build up by an amount proportional to $p_l\,\varphi[e_m(k) - h_m(k)] - T(k)$. If the next input patterns do not contain this feature (i.e.,

Now consider the case in which the feature detected by cell-plane $k$ is present in all input patterns but does not produce exactly the same response each time, e.g., due to lateral inhibition of other cell-planes. That is, $\varphi[e_m(k) - h_m(k)]$ is always greater than zero but does not have the same value each time an input pattern is presented. After $T(k)$ has reached its equilibrium value, cell-plane $k$ is only active if the response is so strong that it exceeds $T(k)$.

Adaptation has the following effect on the development of connections leading to succeeding levels. Because the response of C-cells activated by frequently occurring features is weak, due to adaptation, connections leading from these cells to succeeding S-cells will not be preferentially reinforced. Connections leading from C-cells that respond to rare or unique features, on the other hand, are preferentially reinforced because the output of these cells is relatively high due to a low degree of adaptation. In this way, circuits for detecting discriminating features will develop preferentially.
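A toy comparison illustrates why rare features end up with the stronger output. The sketch is self-contained and rests on assumptions: the response and update forms are inferred from the text, lateral inhibition is ignored ($h = 0$), and the excitatory drive `e_on = 1.0` is arbitrary; only $g = 0.05$, $p = 1.0$ and $\alpha = 0.1$ are taken from the first-level settings in the simulation section.

```python
def phi(x):
    return x if x > 0 else 0.0


def psi(x, alpha):
    return x / (alpha + x) if x > 0 else 0.0


def adapted_response(presence, e_on=1.0, g=0.05, p=1.0, alpha=0.1, cycles=200):
    """Cycle through the patterns and return (T, response) afterwards.

    presence -- one flag per pattern in the cycle: True if the pattern
                contains the feature detected by this cell-plane
    The returned response is the one elicited by a pattern that
    contains the feature, given the final degree of adaptation T.
    """
    T = 0.0
    for _ in range(cycles):
        for present in presence:
            e = e_on if present else 0.0
            T += g * (p * phi(e) - T)  # builds up when present, decays otherwise
    response = psi((1.0 + e_on) / (1.0 + T) - 1.0, alpha)
    return T, response


# Feature shared by all four patterns versus a feature found in only one.
T_common, out_common = adapted_response([True, True, True, True])
T_rare, out_rare = adapted_response([True, False, False, False])
```

In this toy setting the common feature is adapted almost completely, so its output is close to zero, while the rare feature keeps a low $T$ and a high output, which is what biases reinforcement in the next level towards discriminating features.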

Cells in higher levels than the first combine simple features extracted by cells in lower levels. Therefore, cells in higher levels will become differentially adapted to combinations of simple features. The more frequently a particular combination occurs, the higher the degree of adaptation for cells detecting this combination.

4 Simulation Results

In this Section, some experiments with the modified neocognitron will be presented, the results of which will be compared with those obtained with the original one.

A reasonable parameter setting for a range of experiments involving recognition of numerals was chosen. To facilitate comparison, the same setting was used in the original and modified neocognitron. The network used has nine layers: an input layer followed by 4 levels, each consisting of an S- and C-layer. The first level has 10 cell-planes, while the three other levels each have 15 cell-planes. The number of cells in the input layer is 16x16. The number of cells in a given cell-plane is: 16x16 in $U_{S1}$ (S-layer of level 1); 8x8 in $U_{C1}$ (C-layer of level 1) and $U_{S2}$; 4x4 in $U_{C2}$ and $U_{S3}$; 2x2 in $U_{C3}$ and $U_{S4}$; and 1 in $U_{C4}$. In every layer, the connecting area $S_l$ is 5x5 and $D_l$ is 6x6. Parts of the connection area falling outside a layer receive zero input. The value of $r_l$, which controls the intensity of the inhibitory input to an S-cell, decreases with the order of the level: $r_1 = 8.0$, $r_2 = 5.8$, $r_3 = 5.0$ and $r_4 = 4.5$. The degree of saturation ($\alpha_l$): $\alpha_1 = 0.1$, $\alpha_2 = 0.004$, $\alpha_3 = 0.02$ and $\alpha_4 = 0.3$. The speed of reinforcement of the variable connections, $q_l$, increases with the order of the level: $q_1 = 1.3$, $q_2 = 2.3$, $q_3 = 3.3$ and $q_4 = 4.3$. The value of $m_l$, which determines the duration of the connections $a$ and $b$ (see Appendix), decreases with the order of the level: $m_1 = 35$, $m_2 = 30$, $m_3 = 28$ and $m_4 = 25$. The values of the fixed connections $c$ and $d$ are determined as in Fukushima and Miyake (1982).
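For readers who want to reproduce the setup, the settings listed above can be gathered in one place. The sketch below is only a convenience: the dictionary keys and the helper `count_cells` are hypothetical names, and the numerical values are the ones read from the paragraph above.

```python
# Per-level lists run from level 1 to level 4.
PARAMS = {
    "input_size": (16, 16),
    "cell_planes": [10, 15, 15, 15],     # cell-planes per level
    "s_plane_side": [16, 8, 4, 2],       # side of one S cell-plane
    "c_plane_side": [8, 4, 2, 1],        # side of one C cell-plane
    "S_area": (5, 5),                    # connecting area of S-cells
    "D_area": (6, 6),                    # connecting area of C-cells
    "r": [8.0, 5.8, 5.0, 4.5],           # intensity of inhibitory input
    "alpha": [0.1, 0.004, 0.02, 0.3],    # degree of saturation
    "q": [1.3, 2.3, 3.3, 4.3],           # speed of reinforcement
    "m": [35, 30, 28, 25],               # duration of connections a and b
}


def count_cells(params):
    """Number of S- and C-cells per level (input layer and V-cells excluded)."""
    counts = []
    for planes, s_side, c_side in zip(params["cell_planes"],
                                      params["s_plane_side"],
                                      params["c_plane_side"]):
        counts.append(planes * (s_side * s_side + c_side * c_side))
    return counts
```

Calling `count_cells(PARAMS)` gives a quick sense of how the network narrows from level to level.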

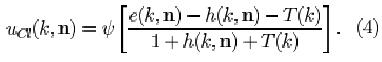

In order to study closely the effects of adaptation and to demonstrate what factors can hamper pattern recognition in the original neocognitron, we focus on an experiment in which only four numerals with common elementary features were presented. The input patterns "8", "5", "6" and "9" (see Figs. 3 and 4) were presented in a cyclical manner, i.e., 8, 5, 6, 9, 8, 5, 6, 9, and so on. The positions of these patterns were not shifted during presentation.

In this small experiment, a network consisting of an input layer followed by only three levels would have been sufficient, as there turned out to be a one-to-one correspondence between the activation pattern in the 3rd and the 4th level. With the parameter setting used, the network did not acquire the ability to distinguish "5" and "6": in the highest layer, one and the same C-cell is activated by both numerals. Although the features with which they can be distinguished are extracted by cells in the first level, it is mainly the features shared by both patterns that are extracted by cells in the second level, as can be seen in Figure 3a. As a result, "5" and "6" cannot be distinguished. (Because in this case only a few patterns needed to be recognized, it would be possible to recognize all patterns by choosing a different parameter setting.)

Figure 3: Neocognitron without adaptation. a. Response of the cells in $U_{C1}$, $U_{C2}$ and $U_{C3}$ to each of the four input patterns. Note that the numbering of the cell-planes in $U_{C1}$ corresponds to the feature numbering in b. b. Features extracted by the S-cells in the first level. c. Connecting areas between S-layer 2 (vertical) and C-layer 1 (horizontal). d. Connecting areas between S-layer 3 (vertical) and C-layer 2 (horizontal). In c and d, only high connection strengths are shown. See further text.

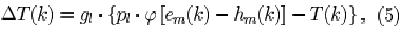

The initial degree of adaptation in each cell-plane was set to zero. There was adaptation only in the first level, i.e., $g_2 = g_3 = g_4 = 0$. The speed of adaptation in the first level, $g_1$, was set to 0.05; $p_1$, which determines the maximum equilibrium value of $T(k)$, was set to 1.0. With this value, $T(k)$ stabilized around an equilibrium value after about 100 presentations of the whole set of input patterns. Because $T$ of each cell-plane converges to the average of the responses elicited by different numerals, cell-planes will be activated only if the response exceeds the adaptation level. Therefore, only a reduced set of features will be active for each input pattern: the discriminating features (i.e., infrequent features) are enhanced (see Fig. 4). As a result, "5" and "6" are now recognized as being different.

The feature with which "5" can be distinguished from all other numerals, feature 3 (see Figure 4b), is now used in the circuit for recognizing "5".

In the original neocognitron, the features with which "6" can be distinguished from "5" (features 8 and 9) were not extracted by cells in the second level. In the network with adaptation, feature 8 is the most important feature for recognizing "6".

Although feature 7 can be used to distinguish "9" from all other patterns, it was not actually used for this purpose in the original neocognitron. In the modified neocognitron, however, there are strong connections leading from the cell-plane which detects this feature.

Feature 1 is present in all input patterns but elicits the strongest response when an "8" is presented. The strong response to this particular feature can thus be regarded as characteristic of "8". In the modified neocognitron, the degree of adaptation for this feature is so high that the cell-plane is activated only if "8" is presented. Consequently, feature 1 is used solely for the recognition of "8", whereas in the original neocognitron it had been used in the recognition of all numerals.

Using the parameter settings described in Sections 4.1 and 4.2.2, a larger experiment was carried out in which the network was to recognize nine different numerals, in order of presentation: "1", "8", "5", "6", "9", "3", "2", "7" and "4". The order of presentation is not crucial; here we have more or less grouped together patterns with common features. The input numerals were presented as follows, again without shifting their position: only the first pattern 30 times, then the first two patterns 30 times, then the first three patterns cyclically, etc., until all numerals had been presented. With the parameter settings used, the original neocognitron developed only three cells in the highest layer that responded specifically to one of the input patterns. Only the numerals "1", "8" and "4" could be distinguished correctly. One cell responded to both "5" and "6", and another to "9", "3", "2", and "7". With the addition of adaptation, in contrast, all numerals were correctly identified, i.e., each cell in the highest layer responded to only one of the input patterns. Also in experiments in which the positions of the input patterns were shifted during the presentation, the neocognitron with adaptation was found to perform markedly better than the one without. In the original neocognitron the parameters had to be tediously set each time so as to be optimal for the particular task at hand. Our experience suggests that incorporating adaptation into the network broadens the usable parameter range.

Figure 4: Neocognitron with adaptation. a. Response of the cells in $U_{C1}$, $U_{C2}$ and $U_{C3}$ to each of the four input patterns. Note that the numbering of the cell-planes in $U_{C1}$ corresponds to the feature numbering in b. b. Features extracted by the S-cells in the first level (note that these are the same features as in Fig. 3b). c. Connecting areas between S-layer 2 (vertical) and C-layer 1 (horizontal). d. Connecting areas between S-layer 3 (vertical) and C-layer 2 (horizontal). In c and d, only high connection strengths are shown. During learning, extra cell-planes were created in the second and third layer. They are not shown here because they never become activated in the final network. See further text.

The neocognitron has been modified to incorporate neuronal adaptation, by means of which the network suppresses frequently occurring features (or combinations of features) and enhances relatively infrequent features (i.e., discriminating features) within a set of input patterns. Through adaptation, cells will become progressively less responsive to the more frequent features, and, consequently, circuits for detecting them will not be reinforced. Circuits for detecting the most discriminating features of the stimulus develop preferentially under these conditions, as a result of which the ability of the network to differentiate patterns is markedly improved.

In general, the concept of adaptation or habituation is not restricted to single neurons but could also be applied to entire neural systems, e.g., it might be used in explaining phenomena such as selective attention.

Adaptation is incorporated in C-cells rather than in S-cells. As S-cells compete with each other to respond to a particular feature, a weaker response due to adaptation would make them less competitive. Then, a new S-cell could develop which extracts the same feature as the S-cell that became previously adapted to it, since the adapted cell can no longer inhibit the development of the new one.

In the case where the elementary features detected at the first level occur in all the input patterns (i.e., only combinations of features are distinctive), adaptation should only be moderate in the first level, so that the response is not entirely suppressed and the succeeding levels can, by means of adaptation, enhance those combinations of features that are discriminating.

In the cognitron, a precursor of the neocognitron, it was also recognized that frequent features were preferentially learned. To improve the cognitron's pattern selectivity, modifiable feedback connections were introduced (Miyake and Fukushima, 1984) so that a cell can suppress its presynaptic cells by inhibitory feedback if it is activated by a feature already familiar to the network. In this way, familiar features fail to elicit a sustained response, and only circuits detecting novel features will develop. This modification was shown to improve pattern selectivity and learning speed. However, in order to achieve this improvement, rather drastic modifications were made in the structure of the cognitron, as a new type of inhibitory cell with feedback connections had to be inserted. Additionally, one has to assume that the input patterns are presented in a specific way. Furthermore, the response characteristics of all the cells in the network are affected by the modification. In contrast to this global modification, adaptation need not involve more than a local change in the network.

For many neuronal properties it is not clear what role they could play in the information processing carried out by biotic neural networks (see Selverston, 1988). Model neurons are therefore usually simplified to the point that only the most basic response characteristics are considered (summation of inputs, firing threshold). However, incorporating neuronal properties solely to make the models more 'realistic', without studying how they might actually contribute to the behaviour of the network, will not give better insight into what each property in fact contributes to the various tasks carried out by biotic neural networks (see also Kohonen, 1992). In this study, we have focussed on the possible role of one such property, namely adaptation, in visual pattern recognition. We have shown that adding adaptation to a neural network model for visual pattern recognition improves its discriminatory power.

We are grateful to M. A. Corner for critically reading the manuscript and for correcting the English, and to M. Timmerman for assistance with the figures.

In this Section, the formulas from Fukushima and Miyake (1982) relevant for our modification will be described.

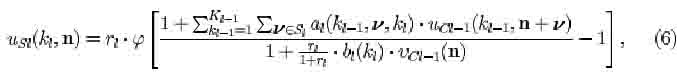

The output of an S-cell of the $k_l$-th cell-plane of S-cells in the $l$-th level is given by

where

where $c_{l-1}(\nu)$ represents the strength of the fixed excitatory connections coming from the preceding C-cells. The term $v_{Cl-1}(n)$ has the same connecting area, $S_l$, as $u_{Sl}(k_l, n)$.

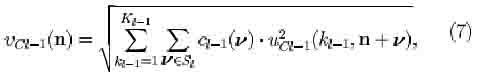

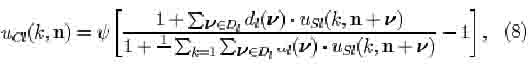

The output of a C-cell of the $k$-th cell-plane of C-cells in the $l$-th level is given by

where $\psi[x] = x/(\alpha + x)$ if $x > 0$ and $\psi[x] = 0$ if $x \le 0$, $\alpha$ being a parameter determining the degree of saturation of the output; $d_l(\nu)$ is the value of the fixed excitatory connection; $D_l$ is the connecting area for $u_{Cl}(k, n)$.

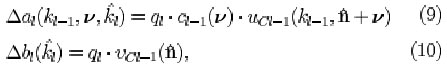

The variable connections $a_l(k_{l-1}, \nu, k_l)$ and $b_l(k_l)$ are updated as follows. Let cell $u_{Sl}(k_l, n)$ be selected as a maximum-output cell. The connections to this cell and to all the cells in the same cell-plane are reinforced:

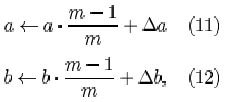

where $q_l$ is a parameter determining the speed of reinforcement. In Fukushima and Miyake (1982), $a$ and $b$ increase without bound. In our implementation, the connection strength is saturated:

where $m$ gives the duration of the memory of the connections $a$ and $b$, expressed in learning cycles. The initial strengths of the variable inhibitory connections are set to zero. All the variable excitatory input connections to an S-cell initially get a very small value, which is proportional to the strength of the corresponding fixed input connection to the V-cell.

References