Department of Electrical Communications, Tohoku University

Aoba-yama05, Sendai 980-8579,

Japan e-mail: shun @ aso. ecei. tohoku. ac.jp

The Division of Mathematical and Information Sciences,

Hiroshima University Higashi-Hiroshima 739-8521, Japan

Department of Electrical Communications, Tohoku University

Aoba-yama05, Sendai 980-8579, Japan

Department of Applied Physics, Tohoku University

Aoba-yama04, Sendai 980-8579, Japan

A rotation-invariant neocognitron, which has been recently proposed by authors, is trained by using hand-written numerical patterns provided by ETL-1 database. A new learning algorithm, "auto-generating algorithm", is proposed and the learning time is reduced. The model can recognize realistic hand-written patterns. It is also shown that the model is completely robust for rotations of hand-written patterns.

A neocognitron [1], which is a hierarchical neural networks for pattern or signal recognition, is considerably robust for distortion, scaling, and/or translation of patterns but it can not recognize patterns which are rotated in large angles. A rotation-invariant neocognitron was proposed by authors in order to make up the weak point of the original neocognitron [2],[3]. In the new model rotational information of inputs are added besides positional information in the original neocognitron. The rotation-invariant neocognitron could recognize globally and/or locally rotated patterns as well as translated, scaled and distorted ones. In the simulation, we used patterns produced by use of a computer.

In this paper, we examine whether the rotation-invariant neocognitron can recognize realistic hand-written patterns. For this purpose, we make use of a numbers of hand-written numerical patterns given in ETL-1 database1. In practice, various patterns more than one thousands are used to test the model. In constructing a model a new method , "auto-generating algorithm", is proposed for shortening learning time.

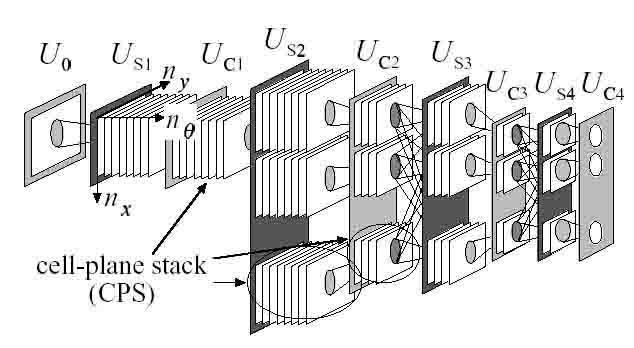

A rotation-invariant neocognitron is composed of cascaded connections of a number of modular structures Usl and Ucl preceded by an input layer Uo as shown in Figure 1. Here Usl denotes a layer consisting of S-cells in the /th module, and Uci a layer consisting of C-cells. A layer is composed of a number of cell-plane stacks, and different cell-plane stacks detect different features of inputs (a cell-plane stack is referred to as "CPS" hereafter). A CPS is composed of a number of cell-planes [1], and each cell-plane detects a different rotation angle of the features. Each cell in a CPS is located in a three-dimensional space. In this model a rotational information of an input pattern is represented by the number n0 assigned to a cell-plane in a CPS, and a positional information by a position (nx, ny) of a firing cell in the specific cell-plane.

Figure 1: A structure of rotation-invariant neocognitron.

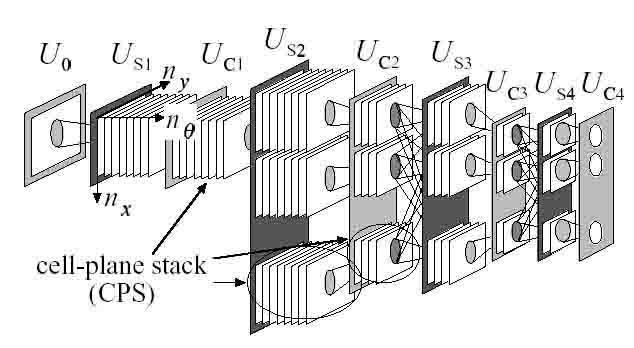

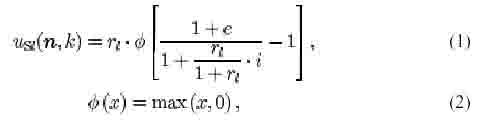

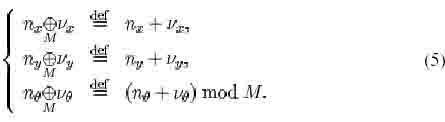

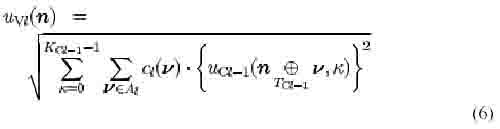

An output response of an S-cell located on n = (nx, ny, no) of the kth CPS in the lth module is denoted by Usl(n,k), and an output response of a C-cell by Ucl(n, k). The CPS with serial number k is denoted as CPS#k. The output response Usl(n,k) is given by [1]

where

A binominal operator  is defined by

is defined by

Here TI denotes a threshold value of an S-cell, al(V, n, K, k) represents an excitatory connection from a C-cell to an S-cell and bi(k) an inhibitory connection from a V-cell to an S-cell —a V-cell sends inhibitory inputs to S-cells and are not depicted in Figure 1 for simplicity. Each connection is linked to a restricted number of C-cells in the preceding module, Ai(C Z^3), Kcl denotes the number of CPS's in the Uci layer, and TCI the number of cell-planes in the Uci layer.

An output response of a V-cell is given by

where cl(v) is an excitatory connection from a C-cell to a V-cell, which takes a fixed value during a learning.

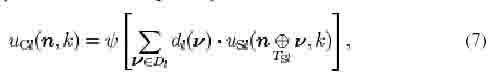

An output response of a C-cell is given by

where the function ksi is defined by

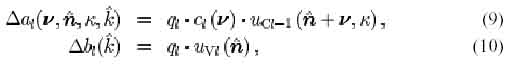

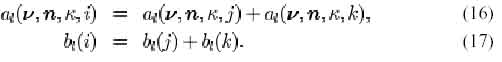

A learning process can be divided into two stages. At the first stage a winner-cell (called as a seed-cell) of S-cells, which is denoted by Usl(n, k), is chosen, and then excitatory connections al(v,n,K,k) and inhibitory connections bl(k) are modified by an unsupervised learning given by

where ql is a learning constant in the lth module. At the next stage the other excitatory connections from C-cells to an S-cell at the  of the

of the  cell-plane are modified by rotation-matrix method [3].

cell-plane are modified by rotation-matrix method [3].

It is very important to adjust thresholds TI of S-cells so that a neocognitron or a rotation-invariant neocognitron works with a high recognition rate. One prepares many thresholds for each layer, and then chooses an optimal threshold by observing firing rate and estimating a recognition rate for each threshold.

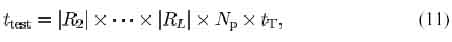

Let Rl be a single set of thresholds for testing in the lth layer  , JVp the number of training patterns, and ?T the time taken to train the model for a set of threshold and for a single training pattern. We shall now examine a simulation time. The learning time required to train the model for the all sets of threshold and for Np training patterns is estimated by

, JVp the number of training patterns, and ?T the time taken to train the model for a set of threshold and for a single training pattern. We shall now examine a simulation time. The learning time required to train the model for the all sets of threshold and for Np training patterns is estimated by

where |•| denotes the number of elements and L is the number of a module. We use four modules in the model. If we use ten thresholds for each layer, five hundreds for Np and assume ten seconds for Tt, The total learning time Ttest is estimated at about two months.

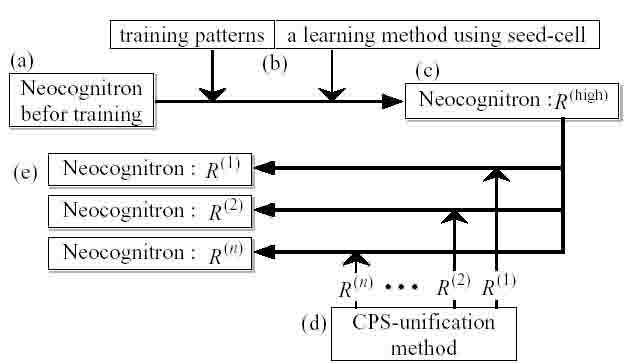

In order to reduce the learning time by a few orders of magnitude, we propose a new algorithm, "auto-generating algorithm", in this paper. In a conventional algorithm we train a lot of neocognitrons for all sets of threshold in order to produce the models corresponding to every set of threshold. In the new algorithm a single neocognitron is produced by training the model for only a single high thresholds and then many neocognitrons are generated from the single neocognitron by use of a new method, "CPS-unification method", in a very short time compared to Np x Tt.

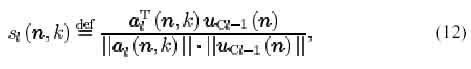

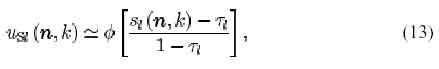

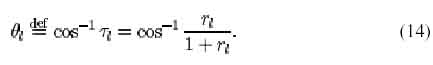

A relation between firing rate of an S-cell and a threshold has been already analyzed in [5],[6]. An S-cell located at n of the kth CPS in the lth module has its own "weight vector" denoted by ai (n, k), and the S-cell receives outputs of C-cells in the preceding module. These outputs are referred to as an "input vector" denoted by ucl-1 (n). The dimension of the weight vector is equal to that of the input vector, and is given by |Al| x Kcl-1. A "similarity" between the weight vector and the input vector of an S-cell is defined as

and the firing rate of the S-cell is expressed as

where Tl = rl/ (1 + rl). Equations (12) and (13) show that an S-cell in the lth module fires if and only if the similarity is larger than TJ. This condition means that the angle between a weight vector and an input vector is smaller than an angle defined by

We call  an "accept-region", which becomes narrower for a larger value of rj.

an "accept-region", which becomes narrower for a larger value of rj.

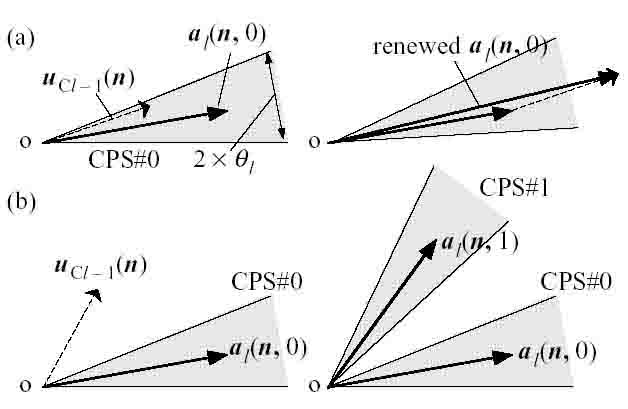

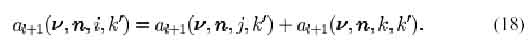

Next, we briefly explain a learning method using a seed-cell selecting plane [4]. A seed-cell is generated in a seed-selecting plane (or a seed-selecting plane stack), and the position of each seed-cell, n, corresponds to the central position of a firing pattern in the previous layer. The firing pattern corresponding to n is an important one that should be learned in the learning process. If S-cells at every n in CPS's don't fire, a new CPS is generated and connections with the S-cell at n in the new CPS are reinforced. If some S-cells at n in CPS's fire, the S-cell of maximum firing rate is selected and the connections with the S-cell are generated and reinforced. These processes can be explained by geometrical relation among a weight vector, an input vector and a threshold as shown in Figure 2. Let us consider a situation in which a pattern has already been trained in CPS#0. If an another input vector (training pattern) is in the accept-region of CPS#0, the vector is trained in CPS#0 (Figure 2(a)). On the other hand, if the input vector is outside the accept-region of CPS#0, a new CPS (CPS#1) is generated and the vector is trained in CPS#1 (Figure 2(b)). As a threshold of an S-cell becomes higher, more CPS's are generated, because accept-regions become narrower in a higher threshold.

We describe the "auto-generating algorithm" in the following. The flow diagram of the algorithm is shown in Figure 3. First, one constructs a neocognitron which is trained with a set of high thresholds,  by presenting training patterns and applying a learning method using a seed-cell

selecting plane (Figure 3(a)-(c)). We emphasize that training patterns are not presented any longer after the application of the learning method. An example in which two CPS's are generated with a narrow accept-region

by presenting training patterns and applying a learning method using a seed-cell

selecting plane (Figure 3(a)-(c)). We emphasize that training patterns are not presented any longer after the application of the learning method. An example in which two CPS's are generated with a narrow accept-region  lg is shown in Figure 4 (a).

lg is shown in Figure 4 (a).

Figure 2: A learning process using a seed-cell. A gray region is an accept-region.

(a): An input vector is trained by a learning of the S-cell in CPS#0. (b): An input vector is trained in new CPS#1 because the input vector is outside the accept-region.

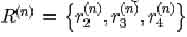

The set of high thresholds, R(high) is replaced by a set of lower threshold,  , in the process(d) in Figure 3. In the case of the lower

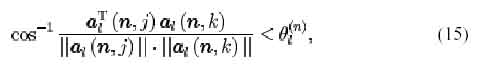

thresholds the accept-region spreads to 0l(n) and the weight vector, al (n, 1), in CPS#1 comes into the accept-region of CPS#0 as shown in Figure 4(b). This situation is inconsistent with the learning method using seed-cell selecting plane as mentioned above. This means that CPS#1 should not be generated and al (n, 1) should be trained by an S-cell in CPS#0 with lower thresholds R(n) The CPS-unification method detects such an inconsistent situation between CPS#j and

, in the process(d) in Figure 3. In the case of the lower

thresholds the accept-region spreads to 0l(n) and the weight vector, al (n, 1), in CPS#1 comes into the accept-region of CPS#0 as shown in Figure 4(b). This situation is inconsistent with the learning method using seed-cell selecting plane as mentioned above. This means that CPS#1 should not be generated and al (n, 1) should be trained by an S-cell in CPS#0 with lower thresholds R(n) The CPS-unification method detects such an inconsistent situation between CPS#j and

Figure 3: The flow diagram of auto-generating algorithm

CPS#k which is described as

and unifies CPS#j and CPS#k into a newly generated CPS#i. The new connections with an S-cell of CPS#i are given by

Such unification also influences the connections of S-cells in the following module. The connections in the following module are given by

We note that in the new algorithm the training patterns are presented only once in order to construct many neocognitrons, while in a conventional algorithm the patterns are presented |R2| x • • • x |Rl| times.

Figure 4: Schematic representation of a vector space with a different value of thresholds, (a): A high threshold, (b): A low threshold.

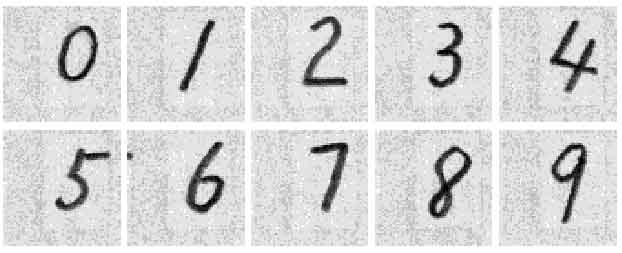

We examine the ability of the rotation-invariant neocognitron for the recognition of realistic hand-written numerical patterns provided by ETL-1 database. We use five hundreds training patterns (see Figure 5 for examples) and one thousands test patterns. The rotation-invariant neocognitron can not distinguish between the pattern "6" and "9" in the last C-layer, [/C4, because the model is completely robust even for the rotation of 180 degrees. Therefore, we observe a firing pattern of S-cells in [/S4 preceding [/C4- The parameters of the model are given in Table 1. The sizes of the areas, AI and DI, are given in Table 2.

The number of the sets of thresholds is one thousand. We determine an optimal set of thresholds, R(opt) as the set that shows the best recognition rate for the training patterns using the auto-generating algorithm. The result turns out .R(opt) = {2.3, 2.0, 2.5}. The recognition rate of the rotation-invariant neocognitron with R(opt) shows 88.9% for the test patterns.

Table 1: The number of cell-plane stack, and the number of cells in one cell-plane stack. The numbers in columns marked by asterisks are not defined, and the numbers in parentheses in columns are ones for the threshold,

| l=0 | l=1 | l=2 | l=3 | l=4 | |

| Ksl | * | 1 | (6) | (7) | (9) |

| Nsl | * | 59 | 17 | 13 | 3 |

| Tsl | * | 16 | 8 | 4 | 2 |

| Kcl | 1 | 1 | (6) | (7) | (9) |

| Ncl | 61 | 19 | 17 | 10 | 1 |

| Tcl | 1 | 8 | 4 | 2/td> | 1 |

Table 2: A size of area connected with one cell. Al and Dl denote the size connected with a C-cell and an S-cell, respectively.

| l=1 | l=2 | l=3 | l=4 | |

| Al | 3x3x1 | 3x3x8 | 5x5x4 | 3x3x2 |

| Dl | 5x5x3 | 3x3x3 | 5x5x3 | 3x3x2 |

We examine the effectiveness and the efficiency of the auto-generating algorithm. We compare the rotation-invariant neocognitron based on the auto generating algorithm with the model based on the conventional algorithm in the recognition rate and the number of CPS. In the conventional algorithm the recognition is 88.4%, almost same as the value that in the auto-generating algorithm. The numbers of CPS in both cases equal each other. It turns out that the model trained by auto-generating algorithm is almost equal to the model trained by the conventional algorithm. The auto-generating algorithm is efficient and effective in the sense that a presentation of training patterns and a learning is needed only once in order to construct many neocognitron. Since the execution time of the new algorithm is about eight seconds in generating one rotation-invariant neocognitron, the ttest becomes about eight thousands seconds. This means that we can reduce the time tiesi from about two months to about two hours.

Figure 5: Examples of training patterns.

The rotation-invariant neocognitron is compared with a neocognitron in the recognition rate. It is very difficult for the neocognitron with ten C-cells in UUC4 to learn correctly all five hundreds training patterns using an unsupervised learning, because the patterns of ETL-1 database are very distorted. For this reason we use twenty patterns to train both the models to make comparison on the same footing. In this case the neocognitron learns correctly all twenty patterns. The optimal set of thresholds of the neocognitron is {2.95, 2.48, 2.80}. For the one thousand test patterns the rotation-invariant neocognitron shows the recognition rate of 85.3%, and the original neocognitron shows the rate of 76.2%. Examples of rotated patterns shown in Figure 6 are correctly recognized by the rotation-invariant neocognitron but can not be recognized by the original neocognitron. We remark that the latest neocognitron [7] has additional layers, an edge-detecting layer or a bend-detecting layer [8], to improve the recognition rate, and shows the recognition rate of more than 98%.

Figure 6: Examples of rotated patterns which are correctly recognized by a rotation-invariant neocognitron and can not recognized by an original neocog-nitron

Figure 7: Examples of patterns recognized unsuccessfully.

Finally we examine the robustness for rotations. We make rotated patterns of 30°, 60°, • • • and 330° for each one thousand test patterns. These amount to 22,000 in total number. The recognition rate for the rotated patterns is also 88.9%. The rotated patterns which are correctly recognized are made of the test patterns which are correctly recognized in the previous simulation. It turns out that our model is completely robust for rotations of realistic patterns. Examples of patterns which are not correctly recognized are shown in Figure 7. We expect that the rate can be improved by attaching a edge detecting layer or a bend-detecting layer [8], which has been introduced to an original neocognitron.

A new learning algorithm, auto-generating algorithm, using the new method, CPS-unification, is proposed, and the efficiency and the effectiveness of the method is shown. The recognition rate of the rotation-invariant neocognitron is higher than that of the original neocognitron. The rotation-invariant neocognitron is completely robust for rotations of realistic patterns. We intend to improve the recognition rate by adding an additional module used in an original neocognitron, and expect that the model will show a high recognition rate for realistic patterns.

Authors are grateful to Prof. K. Fukushima for useful discussions and suggestions. Authors wish to thank Prof. S. Inawashiro for discussions in preparing the final form of the paper.