Vision Based Robotic Interception in Industrial Manipulation Tasks

Authors: A. Denker, T. Adiguzel

Source: International Journal of Information and Mathematical Sciences 3:4 2007

Abstract

In this paper, a solution is presented for a robotic manipulation problem in industrial settings. The problem consists of sensing objects on a conveyor belt, identifying the target, and planning and tracking an interception trajectory between the end effector and the target. Such a problem can be formulated as a combination of object recognition, tracking and interception. For this purpose, we integrated a vision system into the manipulation system and employed tracking algorithms. The control approach is implemented on a real industrial manipulation setting, which consists of a conveyor belt, objects moving on it, a robotic manipulator, and a visual sensor above the conveyor. The trajectory for robotic interception at a rendezvous point on the conveyor belt is analytically calculated. Test results show that tracking the target along this trajectory results in interception and grabbing of the target object.

Introduction

The vast majority of industrial applications utilize robotic manipulators. Sensing a target object and reaching it for grasping can be considered a common goal of manipulation tasks. The ability to sense a moving object is important for the efficiency of manipulation tasks in these industrial settings.

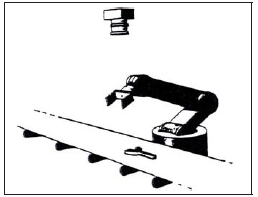

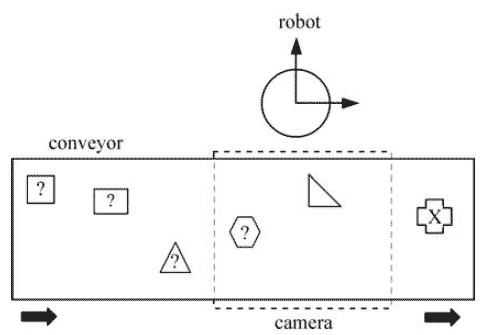

Robots have had less impact in applications where the work environment and object placement cannot be accurately controlled. This limitation is mainly due to the lack of sensory capability. Using a visual set-up for robotic manipulation when working with targets whose position is unknown provides substantial advantages in solving these problems. For this reason, efforts to combine vision and robotic applications have appeared in many studies from the early seventies to the present. A depiction of a robotic manipulation platform utilizing a visual system is shown in Figure 1.

Figure 1 – Manipulation platform using vision system.

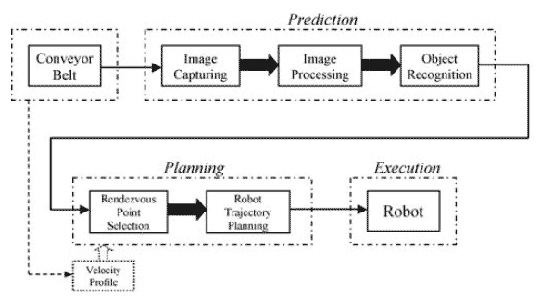

The block scheme in Figure 2 summarizes the implemented set-up. A digital camera takes pictures of the region of interest on the conveyor belt. In the prediction block, the system receives the captured image data, analyzes it and decides whether a target object is moving on the conveyor. If the system detects a target object, the motion of the end effector is planned in the next block with respect to the target object’s motion. The reference trajectory for the end effector to intercept the object is calculated at this stage. Executing the calculated trajectory on the robotic manipulator is the final step.

Figure 2 – Manipulation platform using vision system.

Although the blocks of such a system are tightly integrated, the main emphasis of this study is on the part of the system that provides visual sensory capability and plans a robotic interception at a convenient rendezvous point. The approach to the problem can therefore be split into two main parts: robot vision and robotic interception.

In section 2, the image processing part of the system is explained and the algorithms used for feature extraction and recognition are given. In section 3, motion planning for robotic interception is presented: extraction of the initial positions of the identified target objects and calculation of the tracking trajectory are examined. The paper concludes with the simulation and experimental results.

Object recognition

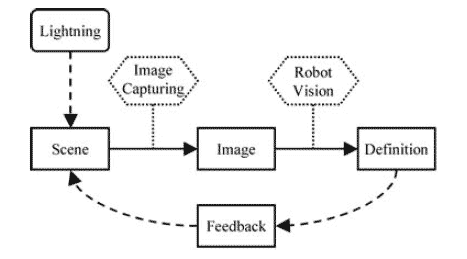

The vision part of the system is modeled as in the diagram shown in Figure 3. The scene covers the camera’s field of view on the belt and is illuminated by two distinct light sources located on opposite sides of the camera.

The inputs to this part are captured images, and the generated outputs are symbolic definitions of the skeleton images. Symbolic definitions contain feature data of the objects. Obtaining these features can be considered in two steps: i) distinguishing objects from the background and obtaining a skeleton of the image; ii) determining object definitions by using predefined application data.

Using the extracted features, a classifier determines whether the observed object is a target or not.

Figure 3 – Robot Vision Scheme.

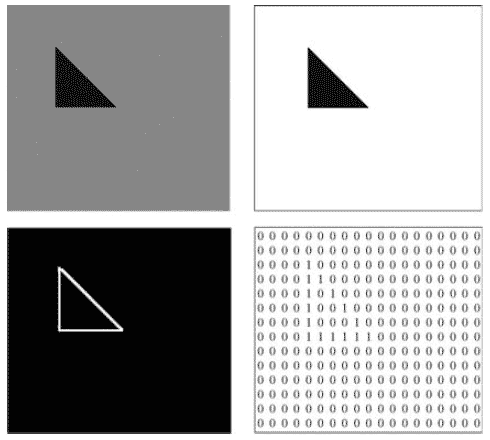

The object recognition method in this study uses corner information, obtained with an 8-directional chain code, as the features of objects, and Kohonen’s self-organizing maps as classifiers. The method operates in three steps: image processing, feature extraction and classification.
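As a rough illustration of the feature extraction step, the following Python sketch derives an 8-directional (Freeman) chain code from an ordered closed boundary and marks a corner wherever the code changes. The function names, the direction numbering and the sample boundary are assumptions made for illustration; the paper does not specify them, and a digitized edge at an arbitrary angle would need smoothing before this simple test becomes reliable.

```python
# Freeman 8-directional codes: list index = code, value = (dx, dy) step.
# The numbering (0 = +x, increasing towards +y) is an assumed convention.
DIRECTIONS = [(1, 0), (1, 1), (0, 1), (-1, 1),
              (-1, 0), (-1, -1), (0, -1), (1, -1)]


def chain_code(boundary):
    """Chain code of a closed boundary given as ordered 8-connected pixels."""
    n = len(boundary)
    codes = []
    for i in range(n):
        x0, y0 = boundary[i]
        x1, y1 = boundary[(i + 1) % n]  # wrap around to close the contour
        codes.append(DIRECTIONS.index((x1 - x0, y1 - y0)))
    return codes


def find_corners(boundary):
    """Boundary pixels where the incoming and outgoing codes differ."""
    codes = chain_code(boundary)
    # codes[i - 1] is the step arriving at boundary[i]; codes[i] leaves it.
    return [boundary[i] for i in range(len(codes)) if codes[i - 1] != codes[i]]


# Hypothetical right triangle: axis-aligned legs, 45-degree hypotenuse.
triangle = [(0, 0), (1, 0), (2, 0), (3, 0), (2, 1),
            (1, 2), (0, 3), (0, 2), (0, 1)]
print(chain_code(triangle))    # [0, 0, 0, 3, 3, 3, 6, 6, 6]
print(find_corners(triangle))  # [(0, 0), (3, 0), (0, 3)]
```

The number of corners and their positions could then serve as the feature data passed to the classifier.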

Figure 4 – Edge detection for a triangular object.
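The classification step with a Kohonen self-organizing map can be sketched in a similar way. The code below is a minimal one-dimensional map with a step ("bubble") neighbourhood and evenly spaced initial weights; the map size, learning schedule, feature vectors and labels are all hypothetical choices for illustration, since the paper does not give these details here.

```python
def nearest(points, x):
    """Index of the point closest to x (squared Euclidean distance)."""
    return min(range(len(points)),
               key=lambda j: sum((p - q) ** 2 for p, q in zip(points[j], x)))


def train_som(samples, n_nodes=3, epochs=20, lr0=0.4, radius0=1.0):
    """Train a 1-D map (n_nodes >= 2); nodes start evenly spaced over the data range."""
    dim = len(samples[0])
    lo = [min(s[k] for s in samples) for k in range(dim)]
    hi = [max(s[k] for s in samples) for k in range(dim)]
    weights = [[lo[k] + (hi[k] - lo[k]) * j / (n_nodes - 1) for k in range(dim)]
               for j in range(n_nodes)]
    for epoch in range(epochs):
        decay = 1.0 - epoch / epochs          # shrinks linearly towards 0
        lr, radius = lr0 * decay, radius0 * decay
        for x in samples:
            bmu = nearest(weights, x)         # best matching unit
            for j, w in enumerate(weights):
                if abs(j - bmu) <= radius:    # "bubble" neighbourhood
                    for k in range(dim):
                        w[k] += lr * (x[k] - w[k])
    return weights


def label_nodes(weights, samples, labels):
    """Give each node the label of its nearest training sample."""
    return [labels[nearest(samples, w)] for w in weights]


def classify(weights, node_labels, x):
    """Label of the node that best matches the feature vector x."""
    return node_labels[nearest(weights, x)]


# Hypothetical normalized feature vectors for two object classes.
samples = [(0.1, 0.1), (0.2, 0.2), (0.8, 0.8), (0.9, 0.9)]
labels = ["target", "target", "other", "other"]
weights = train_som(samples)
node_labels = label_nodes(weights, samples, labels)
print(classify(weights, node_labels, (0.15, 0.15)))  # target
print(classify(weights, node_labels, (0.85, 0.85)))  # other
```

Evenly spaced initial weights are used here instead of random ones only to keep the sketch deterministic.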

Robotic Interception

After the target object is identified and its initial location is obtained by the visual system, the next phase of this study is executing the robotic interception at a convenient rendezvous point.
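To make the idea of an analytically calculated rendezvous point concrete, the sketch below solves the simplest version of the problem. It assumes the object moves along the belt's x axis at a constant speed and the end effector travels in a straight line at a constant, higher speed. These assumptions, the function name and its parameters are illustrative only and are not taken from the paper, whose actual trajectory calculation is not reproduced in this text.

```python
import math


def rendezvous(target_xy, belt_speed, effector_xy, effector_speed):
    """Earliest interception time and point under constant-speed assumptions.

    The target starts at target_xy and moves along +x at belt_speed.
    The end effector starts at effector_xy and moves straight at effector_speed.
    """
    if effector_speed <= belt_speed:
        raise ValueError("the end effector must be faster than the belt")
    dx = target_xy[0] - effector_xy[0]
    dy = target_xy[1] - effector_xy[1]
    # Equal travel times give (dx + v t)^2 + dy^2 = (s t)^2, i.e.
    # (s^2 - v^2) t^2 - 2 dx v t - (dx^2 + dy^2) = 0; take the positive root.
    a = effector_speed ** 2 - belt_speed ** 2
    b = dx * belt_speed
    t = (b + math.sqrt(b * b + a * (dx * dx + dy * dy))) / a
    return t, (target_xy[0] + belt_speed * t, target_xy[1])


# Target 4 units across from the effector, belt speed 3, effector speed 5.
t, point = rendezvous((0.0, 4.0), 3.0, (0.0, 0.0), 5.0)
print(t, point)  # 1.0 (3.0, 4.0)
```

In this example the object advances 3 units along the belt while the end effector covers the 5-unit diagonal, so both arrive at the same point after one time unit.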

Figure 5 – Layout of the system.

Figure 5 illustrates the layout of the physical platform in detail. In this system, the camera is located at a position where its lens is perpendicular to the conveyor surface and its field of view covers whole width of the conveyor belt. As depicted in

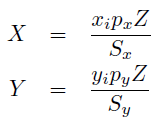

Figure 5, objects travel on the conveyor until their image touches a virtual detection line in the image plane. As soon as this contact takes place, the frame is captured and processed to extract position of the object from basic geometry:

where Px, Py are pixel dimensions, Xi, Yi are image coordinates in the x-y coordinate system, and Z is the distance of the camera to the conveyor surface.
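The position equation itself is not reproduced in this text. As a hedged illustration of the kind of geometry involved, the sketch below applies the standard pinhole camera model with the symbols defined above. The focal length is an additional, assumed parameter that is not among the listed symbols, and the image coordinates are assumed to be measured in pixels from the point where the optical axis meets the image plane.

```python
def image_to_belt(xi, yi, px, py, z, focal_length):
    """Map image coordinates to coordinates on the conveyor surface.

    xi, yi       : image coordinates in pixels (assumed origin: principal point)
    px, py       : physical pixel dimensions
    z            : distance from the camera to the conveyor surface
    focal_length : lens focal length (assumed parameter, same unit as px, py)
    """
    scale = z / focal_length  # similar triangles of the pinhole model
    return xi * px * scale, yi * py * scale


# Hypothetical values: 0.01 mm pixels, 10 mm lens, camera 1000 mm above the belt.
x, y = image_to_belt(100, -50, 0.01, 0.01, 1000.0, 10.0)
print(x, y)  # about 100 mm and -50 mm on the belt
```

With the camera looking straight down, the scale factor is the same everywhere on the belt, which is why a single captured frame is enough to locate the object.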

Conclusion

In this paper we have addressed a combination of visual object recognition and rendezvous planning problems for a robotic manipulation system. The objective was to identify a target on a conveyor belt, plan a convenient trajectory for robotic interception, and execute these on a real platform. The key to the success of such a system is knowledge of the target’s location. Since vision is a useful sensory capability that allows non-contact measurement of the environment, visibility of the target adds great effectiveness to manipulation tasks. The results of the study show the potential of using robotic vision for industrial purposes.
