Authors: Diego Santa-Cruz and Touradj Ebrahimi

Source: Diego Santa-Cruz and Touradj Ebrahimi, An analytical study of JPEG 2000 functionalities. In Proc. of the International Conference on Image Processing (ICIP), vol. 2, pp. 49-52, Vancouver, Canada, September 10-13, 2000.

AN ANALYTICAL STUDY OF JPEG 2000 FUNCTIONALITIES

ABSTRACT

JPEG 2000, the new ISO/ITU-T standard for still image coding, is about to be finished. Other new standards have recently been introduced, namely JPEG-LS and MPEG-4 VTC. This paper compares the set of features offered by JPEG 2000, and how well they are fulfilled, against those of JPEG-LS and MPEG-4 VTC, as well as the older but widely used JPEG and the more recent PNG. The study concentrates on the set of supported features, although lossless and lossy progressive compression efficiency results are also reported. Each standard, and the principles of the algorithm behind it, is also briefly described. As the results show, JPEG 2000 supports the widest set of features among the evaluated standards, while providing superior rate-distortion performance.

1. INTRODUCTION

JPEG 2000 will be the next ISO/ITU-T standard for compression of still images. Effort has been made to make this new standard suitable for today’s and tomorrow’s applications, both by providing features unavailable in previous standards and by supporting more efficiently the features those standards already cover. A legitimate question is therefore: which features does JPEG 2000 offer, and how well are they fulfilled compared with other standards offering the same features? This paper aims to answer this seemingly simple but rather complex question.

2. OVERVIEW OF STILL IMAGE CODING STANDARDS

For the purpose of this study we compare the coding algorithm of the JPEG 2000 standard [1] with the following three standards: JPEG [2], MPEG-4 Visual Texture Coding (VTC) [3] and JPEG-LS [4]. In addition, we include PNG [5]. The reasons behind this choice are as follows. JPEG is one of the most popular coding techniques in imaging applications ranging from the Internet to digital photography. Both MPEG-4 VTC and JPEG-LS are very recent standards that are starting to appear in various applications. It is only logical to compare the set of features offered by the JPEG 2000 standard not only with those of a popular but older standard (JPEG), but also with those of the most recent ones, which use newer state-of-the-art technologies. Although PNG is not formally a standard and is not based on state-of-the-art techniques, it is becoming increasingly popular for Internet-based applications. PNG is also undergoing standardization by ISO/IEC JTC1/SC24 and will eventually become ISO/IEC International Standard 15948.

Although JPEG 2000 supports coding of bi-level and palette color images, we restrict ourselves to continuous-tone images, since they are among the most popular image types. Other image coding standards are JBIG [6] and JBIG2 [7]. Although these are known for providing very good performance on bi-level images, they do not support efficient coding of continuous-tone images with a large enough number of levels. Since this paper concentrates on the latter, JBIG and JBIG2 are not considered. Other popular de facto standards for coding continuous-tone images are GIF and FlashPix. GIF is limited to 8-bit paletted images and is therefore not considered here. FlashPix is based on JPEG and is thus more of a file format than a coding standard, so it is not considered in this paper either. The following subsections briefly explain the principles behind the algorithms used in each of these standards.

2.1. JPEG

This is the well-known ISO/ITU-T standard created in the late 1980s. Several modes are defined for JPEG [2], including baseline, lossless, progressive and hierarchical. The baseline mode is the most popular and supports lossy coding only. It is based on the 8x8 block DCT, zig-zag scanning, uniform scalar quantization and Huffman coding. The lossless mode is rarely used and supports lossless coding only; it is based on a predictive scheme and Huffman coding. It should be noted that the lossy and lossless modes of JPEG are based on entirely different algorithms. The progressive and hierarchical modes of JPEG are both lossy and differ from the baseline mode only in the way the DCT coefficients are coded or computed, respectively. They allow reconstruction of a lower-quality or lower-resolution version of the image, respectively, by partial decoding of the compressed bitstream. The progressive mode encodes the quantized coefficients by a mixture of spectral selection and successive approximation, while the hierarchical mode uses a pyramidal approach to compute the DCT coefficients in a multi-resolution way.
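The baseline pipeline described above can be sketched for a single block: level shift, orthonormal 8x8 DCT, uniform scalar quantization and zig-zag scanning. This is a minimal illustration, not the IJG implementation: it assumes one hypothetical step size for every coefficient instead of a JPEG quantization table, and it stops before Huffman coding. All function names are hypothetical.

```python
import math

N = 8  # baseline JPEG operates on 8x8 blocks


def dct2_8x8(block):
    """Orthonormal 2-D DCT-II of an 8x8 block given as 8 lists of 8 numbers."""
    def scale(k):
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            acc = 0.0
            for x in range(N):
                for y in range(N):
                    acc += (block[x][y]
                            * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                            * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = 0.25 * scale(u) * scale(v) * acc  # 2/N = 1/4 for N = 8
    return out


def zigzag_order(n=N):
    """(row, col) positions in JPEG zig-zag order: walk the anti-diagonals,
    alternating direction from one diagonal to the next."""
    cells = [(r, c) for r in range(n) for c in range(n)]
    return sorted(cells, key=lambda rc: (rc[0] + rc[1],
                                         rc[0] if (rc[0] + rc[1]) % 2 else -rc[0]))


def encode_block(pixels, step):
    """Level shift, DCT, uniform scalar quantization with a single
    (hypothetical) step size, then zig-zag scan. Huffman coding is omitted."""
    shifted = [[p - 128 for p in row] for row in pixels]  # centre 8-bit samples on 0
    coeffs = dct2_8x8(shifted)
    return [round(coeffs[r][c] / step) for r, c in zigzag_order()]


# A flat block: all of its energy lands in the DC coefficient.
flat = [[138] * N for _ in range(N)]
print(encode_block(flat, step=16)[:4])  # [5, 0, 0, 0]
```

For smooth content the zig-zag scan produces long runs of zero-valued AC coefficients, which is what the subsequent Huffman stage codes cheaply.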

2.2. MPEG-4 VTC

MPEG-4 Visual Texture Coding (VTC) is the algorithm used in the MPEG-4 standard to compress the texture information in photorealistic 3D models. As the texture of a 3D model is similar to a still picture, this algorithm can also be used to compress still images. It is based on the discrete wavelet transform (DWT), scalar quantization, zero-tree coding and arithmetic coding. MPEG-4 VTC supports SNR scalability through different quantization strategies: single (SQ), multiple (MQ) and bi-level (BQ). SQ provides no SNR scalability, MQ provides limited SNR scalability and BQ provides generic SNR scalability. Resolution scalability is supported by band-by-band (BB) scanning, as an alternative to the traditional zero-tree scanning (tree-depth, TD), which is also supported. MPEG-4 VTC also supports coding of arbitrarily shaped objects by means of a shape-adaptive DWT, but does not support lossless coding.
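To make the zero-tree idea concrete, the sketch below tests whether a wavelet coefficient is a zero-tree root, i.e. whether it and all of its descendants in finer sub-bands are insignificant with respect to a threshold, so that the whole tree can be signalled with a single symbol. It is the generic EZW-style test, written under the assumption of a square dyadic decomposition stored in Mallat layout; the function name and layout are illustrative, and the actual MPEG-4 VTC symbol alphabet, scanning orders and arithmetic coding are not reproduced.

```python
def is_zerotree_root(coeffs, i, j, threshold):
    """Return True if coefficient (i, j) and all of its descendants are
    insignificant, i.e. |value| < threshold.

    Assumes a square dyadic wavelet decomposition stored in Mallat layout,
    where the children of (i, j) are the 2x2 block starting at (2i, 2j).
    (0, 0) is rejected because the lowest band needs a different parent rule.
    """
    if (i, j) == (0, 0):
        raise ValueError("the DC coefficient uses a different parent-child rule")
    size = len(coeffs)
    stack = [(i, j)]
    while stack:
        r, c = stack.pop()
        if abs(coeffs[r][c]) >= threshold:
            return False  # one significant descendant breaks the zero-tree
        for cr in (2 * r, 2 * r + 1):
            for cc in (2 * c, 2 * c + 1):
                if cr < size and cc < size:
                    stack.append((cr, cc))
    return True


# 4x4 toy decomposition (hypothetical values).
coeffs = [[50, 3, 1, 0],
          [2, 1, 0, 20],
          [0, 0, 0, 0],
          [1, 0, 0, 0]]
print(is_zerotree_root(coeffs, 0, 1, 16))  # False: descendant (1, 3) = 20
print(is_zerotree_root(coeffs, 1, 0, 16))  # True
```

Lowering the threshold pass by pass turns more roots into significant coefficients, which is one way a zero-tree coder can refine quality progressively.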

2.3. JPEG-LS

JPEG-LS is the latest ISO/ITU-T standard for lossless coding of still images, and it also provides for “near-lossless” coding. Part I, the baseline system, is based on adaptive prediction, context modeling and Golomb coding. In addition, it features a flat-region detector that encodes such regions as run-lengths. Part II will introduce extensions such as an arithmetic coder, but is still under preparation. The algorithm was designed for low complexity while providing high compression; however, it does not provide scalability, error resilience or other additional functionality.
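The prediction and Golomb coding steps of Part I can be sketched using the median edge detector (MED) predictor of LOCO-I, the algorithm JPEG-LS is built on, and a Golomb code restricted to powers of two (Golomb-Rice). This is a simplified sketch under stated assumptions: the code parameter k is fixed here, whereas JPEG-LS adapts it per context, and context modeling, bias correction and the run mode for flat regions are omitted. The function names are hypothetical.

```python
def med_predict(a, b, c):
    """Median edge detector used by LOCO-I / JPEG-LS.
    a = left neighbour, b = neighbour above, c = upper-left neighbour."""
    if c >= max(a, b):
        return min(a, b)   # edge detected: pick the smaller neighbour
    if c <= min(a, b):
        return max(a, b)   # edge detected: pick the larger neighbour
    return a + b - c       # smooth region: planar prediction


def map_residual(e):
    """Fold a signed error onto non-negative integers: 0, -1, 1, -2, 2 -> 0, 1, 2, 3, 4."""
    return 2 * e if e >= 0 else -2 * e - 1


def golomb_rice(n, k):
    """Golomb code with parameter m = 2**k: the quotient n >> k in unary
    (that many 0s, then a 1), followed by the k low-order bits of n."""
    bits = "0" * (n >> k) + "1"
    if k > 0:
        bits += format(n & ((1 << k) - 1), "0{}b".format(k))
    return bits


# One pixel x = 112 with neighbours a = 100, b = 120, c = 110 and a fixed k = 2.
pred = med_predict(100, 120, 110)
code = golomb_rice(map_residual(112 - pred), 2)
print(pred, code)  # 110 0100
```

Small residuals get short codewords, which is why a good predictor plus a cheap Golomb code gives high compression at low complexity.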

2.4. PNG

Portable Network Graphics (PNG) is a W3C recommendation for coding still images, developed as a patent-free replacement for GIF that incorporates more features than GIF. It is based on a predictive scheme and entropy coding. The entropy coding uses the Deflate algorithm of the popular Zip file compression utility, which combines LZ77 with Huffman coding. PNG is capable of lossless compression only, and supports grayscale, paletted color and true color images, an optional alpha plane, interlacing and other features.
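The predictive step of PNG is a per-scanline filter, and the filtered bytes are then Deflate-compressed. The sketch below applies filter type 4 (Paeth) to every scanline of an 8-bit grayscale image and compresses the result with Python's zlib module. It is a simplification: real encoders may choose a different filter for each scanline, and the PNG signature, IHDR/IDAT chunks and CRCs needed for an actual file are omitted. The function names are hypothetical.

```python
import zlib


def paeth(a, b, c):
    """PNG Paeth predictor: a = left, b = above, c = upper-left byte."""
    p = a + b - c
    pa, pb, pc = abs(p - a), abs(p - b), abs(p - c)
    if pa <= pb and pa <= pc:
        return a
    if pb <= pc:
        return b
    return c


def paeth_filter(row, prev, bpp=1):
    """Apply PNG filter type 4 (Paeth) to one scanline; bpp = bytes per pixel."""
    out = bytearray()
    for i, x in enumerate(row):
        a = row[i - bpp] if i >= bpp else 0
        b = prev[i]
        c = prev[i - bpp] if i >= bpp else 0
        out.append((x - paeth(a, b, c)) % 256)
    return bytes(out)


def filter_and_deflate(rows, bpp=1):
    """Prefix every filtered scanline with its filter-type byte and Deflate
    the concatenation as a zlib stream, the form PNG stores in its IDAT data."""
    prev = bytes(len(rows[0]))  # the row above the first scanline counts as zeros
    raw = bytearray()
    for row in rows:
        raw.append(4)  # filter type 4 = Paeth
        raw += paeth_filter(row, prev, bpp)
        prev = row
    return zlib.compress(bytes(raw))


# A 64x64 8-bit grayscale gradient.
image = [bytes((x + y) % 256 for x in range(64)) for y in range(64)]
data = filter_and_deflate(image)
print(len(data), "bytes instead of", 64 * 64)
```

Because the gradient is predicted almost perfectly, the filtered scanlines consist mostly of identical small residuals, which Deflate's LZ77 and Huffman stages compress well.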

2.5. JPEG 2000

JPEG 2000 is still under development, although Part I (the core system) is technically frozen and scheduled to reach International Standard (IS) status in December 2000. It is based on the discrete wavelet transform (DWT), scalar quantization, context modeling, arithmetic coding and post-compression rate allocation. The entropy coding is done in blocks, typically 64x64, inside each sub-band. The DWT can be performed with reversible filters, which allow lossless coding, or with non-reversible filters, which provide higher coding efficiency but do not allow lossless coding. Using post-compression rate allocation, the coded data is organized in so-called layers, which correspond to quality levels, and is then output to the code-stream in packets. JPEG 2000 provides resolution, SNR and position progressivity, or any combination of them, parseable code-streams, error resilience, arbitrarily shaped regions of interest, random access (down to the sub-band block level), and lossy and lossless coding, all in a unified algorithm.
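Reversible filtering in JPEG 2000 Part I uses the 5/3 wavelet implemented by integer lifting. A one-dimensional, single-level sketch is shown below, assuming an even-length signal and whole-sample symmetric extension at the borders; a real codec applies it separably to rows and columns and iterates on the low-pass band. Because every lifting step adds a rounded function of samples the decoder already has, the inverse can subtract exactly the same integers, which is what makes lossless coding possible. Python's `//` rounds toward negative infinity, matching the floor operations of the lifting steps. The function names are hypothetical.

```python
def fwd_53(x):
    """One level of the reversible 5/3 lifting transform on an even-length list
    of integers. Returns (low-pass s, high-pass d)."""
    n = len(x)
    if n < 2 or n % 2:
        raise ValueError("this sketch handles even lengths >= 2 only")

    def at(i):  # whole-sample symmetric extension at the borders
        if i < 0:
            i = -i
        if i >= n:
            i = 2 * (n - 1) - i
        return x[i]

    half = n // 2
    # Predict step: odd samples minus the floor of the mean of their even neighbours.
    d = [x[2 * k + 1] - (at(2 * k) + at(2 * k + 2)) // 2 for k in range(half)]
    # Update step: even samples plus a rounded quarter of the neighbouring details.
    # At the left border the symmetric extension gives d[-1] == d[0].
    s = [x[2 * k] + (d[max(k - 1, 0)] + d[k] + 2) // 4 for k in range(half)]
    return s, d


def inv_53(s, d):
    """Exact integer inverse of fwd_53: undo the update, then the predict step."""
    half = len(s)
    x = [0] * (2 * half)
    for k in range(half):
        x[2 * k] = s[k] - (d[max(k - 1, 0)] + d[k] + 2) // 4
    for k in range(half):
        right = x[2 * k + 2] if 2 * k + 2 < 2 * half else x[2 * half - 2]
        x[2 * k + 1] = d[k] + (x[2 * k] + right) // 2
    return x


samples = [10, 12, 9, -3, 0, 255, 7, 8]
low, high = fwd_53(samples)
print(inv_53(low, high) == samples)  # True: integer lifting is lossless
```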

3. COMPARISON METHODOLOGY

One important concern in coding techniques is compression efficiency, which is still one of the top priorities in the design of imaging products. In a previous study [8], we devoted special attention to compression efficiency. Here we nevertheless report lossless and lossy progressive compression efficiency results, to evaluate how well the algorithms code different types of imagery and how well progressive coding is supported. Most applications also require features other than compression efficiency from a coding algorithm; these are often referred to as functionalities. One example is resilience to the residual transmission errors that occur, for instance, in mobile channels. The next section summarizes the results of the study as far as the considered functionalities are concerned.

4. RESULTS

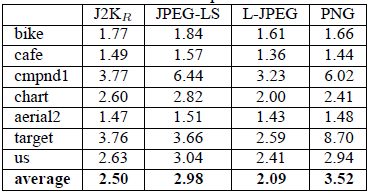

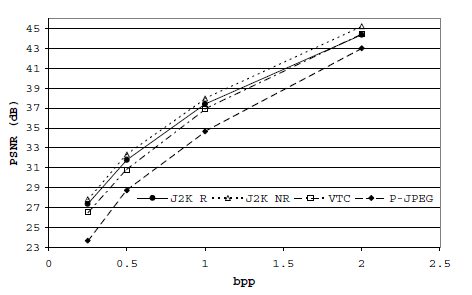

The algorithms have been evaluated on seven images from the JPEG 2000 test set, covering various types of imagery. The images “bike” (2048x2560) and “cafe” (2048x2560) are natural images; “cmpnd1” (512x768) and “chart” (1688x2347) are compound documents consisting of text, photographs and computer graphics; “aerial2” (2048x2048) is an aerial photograph; “target” (512x512) is a computer-generated image; and “us” (512x448) is an ultrasound scan. All these images have a depth of 8 bits per pixel. The software implementations used for coding the images are the JPEG 2000 Verification Model (VM) 6.1 (ISO/IEC JTC1/SC29/WG1 N 1580), the MPEG-4 MoMuSys VM of Aug. 1999 (ISO/IEC JTC1/SC29/WG11 N 2805), the Independent JPEG Group JPEG implementation, version 6b, the SPMG JPEG-LS implementation of the University of British Columbia, version 2.2, the Lossless JPEG codec of Cornell University, version 1.0, and the libpng implementation of PNG [5], version 1.0.3.

Table 1. Lossless compression ratios.

Fig. 1. PSNR corresponding to the average RMSE over all test images, for each algorithm, when decoding the same progressive bitstream at 0.25, 0.5, 1 and 2 bpp.
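The quantities in Fig. 1 can be related as follows, assuming 8-bit samples (peak value 255), as stated for the test images. The RMSE is averaged over the images first and only then converted to PSNR, which is not the same as averaging per-image PSNR values because the logarithm is nonlinear. The function names and the per-image RMSE values are hypothetical, used only to exercise the formulas.

```python
import math


def rmse(original, decoded):
    """Root-mean-square error between two equal-length sequences of samples."""
    se = sum((a - b) ** 2 for a, b in zip(original, decoded))
    return math.sqrt(se / len(original))


def psnr_from_rmse(value, peak=255):
    """PSNR in dB for a given RMSE: 20 * log10(peak / RMSE)."""
    return 20 * math.log10(peak / value)


def compression_ratio(bpp, bit_depth=8):
    """Compression ratio implied by a coding rate in bits per pixel."""
    return bit_depth / bpp


per_image_rmse = [4.0, 6.0]  # hypothetical values, one per test image
avg = sum(per_image_rmse) / len(per_image_rmse)
print(round(psnr_from_rmse(avg), 2))
print([compression_ratio(r) for r in (0.25, 0.5, 1, 2)])  # [32.0, 16.0, 8.0, 4.0]
```

For these 8-bit images the four decoding rates therefore correspond to compression ratios of 32:1, 16:1, 8:1 and 4:1.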

5. CONCLUSIONS

This work compares the efficiency of the various features that can be expected from a number of recent as well as widely used still image coding algorithms. To do so, many aspects have been considered, including the genericity of each algorithm (its ability to code different types of data in a lossless and lossy way) and features such as error resilience, complexity, scalability, region of interest, embedded bitstream and so on. The results show quantitatively how much improvement can be expected from the JPEG 2000 standard from various points of view (genericity and other functionalities). At the same time, they put into perspective the many existing standards one can choose from, based on the needs of the underlying product.

6. REFERENCES

[1] ISO/IEC JTC 1/SC 29/WG 1, ISO/IEC FCD 15444-1: Information technology — JPEG 2000 image coding system: Core coding system [WG 1 N 1646], Mar. 2000, http://www.jpeg.org/FCD15444-1.htm.

[2] William B. Pennebaker and Joan L. Mitchell, JPEG: Still Image Data Compression Standard, Van Nostrand Reinhold, New York, 1992.

[3] ISO/IEC, ISO/IEC 14496-2:1999: Information technology — Coding of audio-visual objects — Part 2: Visual, Dec. 1999.

[4] ISO/IEC, ISO/IEC 14495-1:1999: Information technology — Lossless and near-lossless compression of continuous-tone still images: Baseline, Dec. 1999.

[5] W3C, PNG (Portable Network Graphics) Specification, Oct. 1996, http://www.w3.org/TR/REC-png.

[6] ISO/IEC, ISO/IEC 11544:1993: Information technology — Coded representation of picture and audio information — Progressive bi-level image compression, Mar. 1993.

[7] ISO/IEC JTC 1/SC 29/WG 1, ISO/IEC FCD 14492: Information technology — Coded representation of picture and audio information — Lossy/Lossless coding of bi-level images [WG 1 N 1359], July 1999, http://www.jpeg.org/public/jbigpt2.htm.

[8] Diego Santa-Cruz and Touradj Ebrahimi, “A study of JPEG 2000 still image coding versus other standards,” in Proc. of the X European Signal Processing Conference, Tampere, Finland, Sept. 2000, vol. 2, pp. 673–676.