Contents

2. Expected scientific novelty

5. A review of research and development

Introduction

Every day more and more people begin to use the Internet. The development path of every country in terms of information technology rests on two factors. First, everyone should have fast home Internet access. Second, as many services as possible should be placed on the Internet, replacing today's already obsolete services. This is easy to verify: go to an ordinary store and you will probably see payment terminals. These terminals operate over the Internet and let people pay for things they used to pay for at a cash desk after standing in a huge queue for hours. Now all of this can be done in minutes. A second example is social networking. Almost every one of us has replaced half or more of our real-life communication with virtual communication, and it has become part of our lives.

1. The relevance of the topic

The more popular a site is, the greater the load on it. Gone are the days when a site's speed depended primarily on the speed of the user's home Internet connection. Here we consider all sites other than torrent trackers and file archives [5].

The time that passes from the moment the user enters the site address and presses "Enter" until the page is fully loaded plays an important role in the life of a site. It consists of two components: page generation time and file download time. We do not count the time the browser needs to render the file, since it depends only on the power of the particular user's computer.

At a speed of 1 Mbit/s, page download time can also be neglected. The average size of a page is in the range of 100-200 kbit, so downloading that volume of data takes approximately 0.1-0.2 seconds, and at a speed of 10 Mbit/s this time falls to 0.01-0.02 seconds. A person cannot perceive the difference between 0.1 seconds and 0.01 seconds. Based on this assumption, we neglect page download time [1].
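The estimate above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the page size is measured in kilobits (the only unit consistent with the quoted times); the function name is illustrative.

```python
def transfer_time_seconds(size_kbit: float, speed_mbit_per_s: float) -> float:
    """Time needed to move size_kbit kilobits over a link of the given speed."""
    # 1 Mbit/s is taken as 1000 kbit/s.
    return size_kbit / (speed_mbit_per_s * 1000.0)


for speed in (1, 10):
    low = transfer_time_seconds(100, speed)
    high = transfer_time_seconds(200, speed)
    print(f"{speed} Mbit/s: {low:.2f}-{high:.2f} s")
```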

What remains is the main parameter: page generation time. As we can see, it matters most for very popular sites.

2. Expected scientific novelty

As mentioned above, the main parameter for a popular website is page generation time. The scientific novelty of this work is:

1) Development of rules for creating high-load projects

2) Application of these rules to existing popular projects and creation of new projects that use the results from the start

3. Expected practical results

In this study we plan to create a set of methods and tools for building projects that can withstand a heavy load. By heavy load we mean sites that receive 5 requests per second.

The results will be tested on the author's own projects. The resulting set of algorithms will be confirmed not only by theoretical calculations but also by practical results.

4. Practical value

The value of the work lies in applying the results to improve existing projects and to create new ones. Let us consider these two points in more detail.

The first is the improvement of existing projects. Many sites with interesting ideas are being created today, and their creators spend a great deal of time and effort on writing code, advertising, content development and so on. In 99.9% of cases nobody has their own data center with high-performance machines. Most projects are hosted on shared hosting or, at best, on dedicated servers. Once the project is written, its advertising begins. If everything goes well, users visit the resource more and more actively, and the growth in the number of users can be approximated by a geometric progression.

Most users then immediately begin to encounter problems such as:

1) Pages take a long time to load

2) The server returns a 503 error

3) Not all page elements load, and the page is displayed incorrectly

4) Parts of the application do not work

Once such problems appear, users leave the application or site at once, and further growth in popularity becomes impossible [8].

Our results will be applied to such projects so that they run stably and gain popularity more rapidly.

The second is the creation of new projects using the methods obtained. In this case the project is built according to the new rules from the outset, and its growth rate will depend only on the idea and not on the server load, because the application will be able to cope with the load.

5. A review of research and development

Such research is usually carried out by each company for itself, and results tend not to be shared, because each company wants to become a leader and make its product as fast as possible. The leading firms in this kind of research are Facebook and Google.

Consider the most popular technologies that they use:

Memcached

Memcached is one of the best-known projects on the Internet. This distributed caching system is used as a caching layer between the web servers and MySQL (because database access is relatively slow). Over the years Facebook has made many modifications to the Memcached code and related software (e.g., network performance optimization).

Facebook runs thousands of Memcached servers holding tens of terabytes of cached data at any given time. This is probably the world's largest array of Memcached servers.

Figure 1 shows the principle of operation of a memcached server.

Figure 1 - The principle of operation of a memcached server
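The caching layer described above is often implemented as a "cache-aside" lookup: check the cache first and query the database only on a miss. The sketch below illustrates the idea only. `TinyCache` is an in-memory stand-in for a memcached client, and `slow_db_lookup` is a hypothetical placeholder for a MySQL query; neither is taken from the project.

```python
import time


class TinyCache:
    """In-memory stand-in for a memcached client (get/set with expiry)."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and time.monotonic() >= expires_at:
            del self._store[key]  # entry has expired
            return None
        return value

    def set(self, key, value, ttl_seconds=None):
        expires_at = None if ttl_seconds is None else time.monotonic() + ttl_seconds
        self._store[key] = (value, expires_at)


db_calls = 0


def slow_db_lookup(user_id):
    """Hypothetical stand-in for a relatively slow database query."""
    global db_calls
    db_calls += 1
    return {"id": user_id, "name": f"user{user_id}"}


def get_user(cache, user_id):
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached                    # cache hit: no database access
    row = slow_db_lookup(user_id)        # cache miss: go to the database
    cache.set(key, row, ttl_seconds=60)  # remember the result for later requests
    return row


cache = TinyCache()
first = get_user(cache, 7)
second = get_user(cache, 7)
print(db_calls)  # the database was queried only once
```

With a real memcached server the cache object would be a network client, but the control flow stays the same.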

HipHop for PHP

PHP, being a scripting language, is quite slow compared with native code executed directly by the server's processor. HipHop converts PHP scripts into C++ source code, which is then compiled to achieve good performance. This lets Facebook get more out of fewer servers, since PHP is used almost everywhere at Facebook. A small group of engineers (initially only three) developed HipHop over 18 months, and it now runs on the project's servers [7].

Haystack

Haystack is a high-performance photo storage and retrieval system (strictly speaking, Haystack is an object store, so it can hold any data, not just photos). It carries a very heavy workload. More than 20 billion photos have been uploaded to Facebook, and each is stored in four different resolutions, which gives more than 80 billion stored images. Haystack must not only store photos but also serve them very quickly. Facebook serves more than 1.2 million photos per second. This figure does not include photos served through the content delivery network, and Facebook keeps growing [9].

BigPipe

BigPipe is a dynamic web page delivery system developed at Facebook. It delivers each web page in sections (called pagelets) to optimize performance. For example, the chat, the news feed and other parts of the page are requested separately. They are fetched in parallel, which improves performance and lets users keep using the site even if some part of it is disabled or faulty.
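The idea of generating pagelets in parallel and isolating failures can be sketched as follows. This is not BigPipe itself (which also streams each pagelet to the browser as soon as it is ready). The pagelet functions and the placeholder markup are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def render_chat():
    return "<div id='chat'>chat</div>"


def render_news():
    return "<div id='news'>news</div>"


def render_broken():
    # Simulates a pagelet whose backend is unavailable.
    raise RuntimeError("pagelet backend is down")


def render_page(pagelets):
    """Render every pagelet in parallel; a failed pagelet yields a placeholder."""
    results = {}
    with ThreadPoolExecutor(max_workers=len(pagelets)) as pool:
        futures = {name: pool.submit(func) for name, func in pagelets.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result()
            except Exception:
                # One broken section must not take the whole page down.
                results[name] = f"<div id='{name}'>unavailable</div>"
    return results


page = render_page({"chat": render_chat, "news": render_news, "ads": render_broken})
for name, html in page.items():
    print(name, html)
```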

Cassandra

Cassandra is a distributed, fault-tolerant data store. It is one of the systems always mentioned in discussions of NoSQL. Cassandra became an open source project and later a project of the Apache Foundation. Facebook uses it for Inbox search. It is also used by many other projects, for example Digg, and Pingdom plans to use it.

Scribe

Scribe is a convenient logging system used for several purposes at once. It was designed to provide logging across all of Facebook and to support adding new event categories as they appear (there are hundreds of them at Facebook).

Hadoop and Hive

Hadoop is an open source implementation of the map-reduce algorithm that allows computations over large volumes of data. Facebook uses it for data analysis (and, as you know, Facebook has plenty of data). Hive was developed at Facebook and lets you use SQL queries to retrieve information from Hadoop, which makes the work easier for non-programmers. Both Hadoop and Hive are open source and are developed under the auspices of the Apache Foundation. They are used by many other projects, for example Yahoo and Twitter.
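The map-reduce model mentioned above can be illustrated on a single machine. The sketch below counts words in a few invented log lines; Hadoop distributes the same three phases (map, shuffle, reduce) across many machines, which this example does not attempt.

```python
from collections import defaultdict


def map_phase(record):
    """Emit a (word, 1) pair for every word in one input record."""
    for word in record.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


def map_reduce(records):
    pairs = (pair for record in records for pair in map_phase(record))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


logs = ["page view", "page click", "view"]  # invented sample data
counts = map_reduce(logs)
print(counts)
```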

Thrift

Facebook uses a variety of programming languages in the various components of the system. PHP is used for the front end, Erlang for chat, and Java and C++ are also in use. Thrift is a cross-language framework that ties all parts of the system together, allowing them to communicate with each other. Thrift is developed as an open source project, and support for several other programming languages has been added to it.

Varnish

Varnish is an HTTP accelerator that can serve as a load balancer and also cache content, which can then be delivered at great speed. Facebook uses Varnish to deliver photos and profile pictures, handling a load of billions of requests per day. Like everything else that Facebook uses, Varnish is open source software [2].

6. Current results

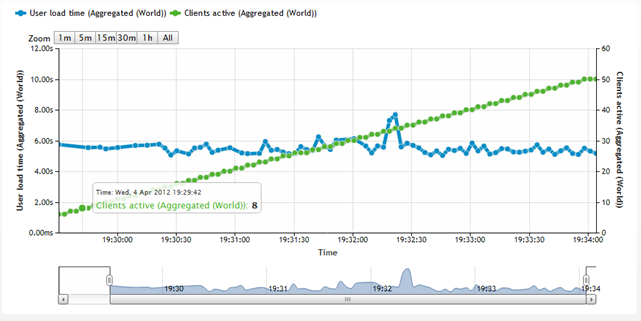

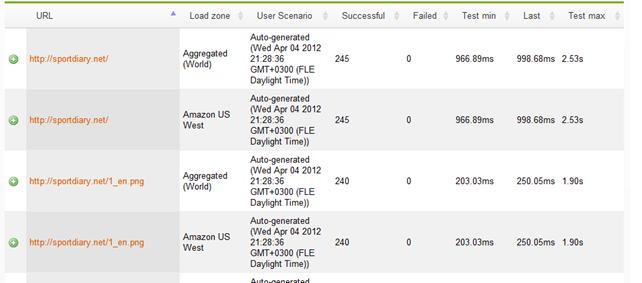

So far, four web applications have been created: three run on social networks and one is independent. Some practical results have been obtained, and we consider them here using the "Sports Diary" web application as an example.

To create a website intended for a large number of visits, the problem of high resource utilization must be solved. In general there are two ways to do this. The first is to optimize the source code using the best available technologies. The second is to use more powerful hardware. This work uses the first approach.

For high-load projects it is advisable to run PHP in FastCGI mode. There are several ways to implement this; for these projects a combination of nginx and php-fpm was used. The performance gain over Apache with mod_php comes from the fact that php-fpm starts n PHP processes, which then stay resident in the system and process the scripts passed to them by the web server. This scheme saves the time and system resources otherwise spent on starting the PHP interpreter. Using this mode reduced the load on the server about fivefold.

The next way to improve performance is to install a PHP accelerator. It works by caching the compiled binary form of a script. Spending CPU time to translate a script into binary code on every call is pointless, and the same script may be requested up to 100 times per second, so using eAccelerator is appropriate. After installation, system performance increases, the load drops several times over, and page generation time falls sharply.

To find non-optimal sections of code, timers were added to the project that measure PHP execution time and SQL query execution time. Once problem queries are identified, they need to be reworked and indexes added where required. The EXPLAIN command is very useful for this task. As a result, the execution time of SQL queries sometimes dropped by a factor of 10-20. But this is not the only way to reduce the cost of SQL queries.

Memcached is a very simple yet high-performance caching server originally developed for livejournal.com. It cannot be used for everything, because the application's work is not limited to this resource, but it is nevertheless of great benefit. The project uses memcached to cache the results of SQL queries and to store ready-rendered blocks. On many pages generation time was reduced to 0.007 s.

Another useful measure is sysctl tuning, since at high load the default configuration is not as effective as it could be. Where possible, php-fpm, memcached and MySQL are switched to Unix sockets. As a result, at peak load the server delivers content as quickly as it does with no load [6]. The results of testing the site with loadimpact.com are shown in Figures 2 and 3 [10].

Figure 2 - Load testing of the web application

Figure 3 - Performance testing of individual segments of the site
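The query timing and indexing steps described in this section can be sketched as follows. The example uses Python's built-in SQLite rather than MySQL so that it is self-contained; `EXPLAIN QUERY PLAN` plays the role of MySQL's `EXPLAIN`, and the table, column and index names are invented for illustration.

```python
import sqlite3
import time


def timed_query(conn, sql, params=()):
    """Run a query and return its rows together with the elapsed time."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    return rows, time.perf_counter() - start


def query_plan(conn, sql, params=()):
    """Return the human-readable plan (last column of EXPLAIN QUERY PLAN)."""
    plan_rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params)
    return " ".join(str(row[-1]) for row in plan_rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE entries (id INTEGER PRIMARY KEY, user_id INTEGER, reps INTEGER)")
conn.executemany(
    "INSERT INTO entries (user_id, reps) VALUES (?, ?)",
    [(i % 1000, i % 50) for i in range(20000)],
)

sql = "SELECT reps FROM entries WHERE user_id = ?"

# Without an index the whole table has to be scanned.
plan_before = query_plan(conn, sql, (42,))
rows_before, t_before = timed_query(conn, sql, (42,))

# After adding an index on the filtered column the plan uses it.
conn.execute("CREATE INDEX idx_entries_user ON entries (user_id)")
plan_after = query_plan(conn, sql, (42,))
rows_after, t_after = timed_query(conn, sql, (42,))

print(plan_before)
print(plan_after)
print(f"before: {t_before:.6f} s, after: {t_after:.6f} s")
```

The measured times depend on the machine, so only the change in the query plan should be treated as the reliable signal here.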

A very useful tool in the development of heavily loaded web applications is the MySQL database. As part of this work, the following MySQL capabilities have been investigated to date:

• Partitioning: the ability to split a large table into several parts, placed in different file systems, on the basis of a user-defined function. Under certain conditions this can give a significant performance improvement and also makes the tables easier to scale.

• Changes in the behavior of some operators to ensure greater compatibility with the SQL:2003 standard.

• Row-based replication, in which the binary log records only the actual changes to table rows instead of the original (and possibly slow) query text. Row-based replication can also be applied only to certain types of SQL queries; in MySQL terms this is mixed replication.

• A built-in scheduler for periodically running jobs. The syntax for adding a job resembles that for adding a trigger to a table; the concept resembles crontab.

• An additional set of functions for processing XML, including support for XPath.

• New tools for diagnosing problems and analyzing performance. There are new options for managing the contents of log files, and logs can now be stored in the general_log and slow_log tables. The mysqlslap utility performs load testing of a database and records the response time of each query.

• To simplify upgrades, the mysql_upgrade utility checks all existing tables for compatibility with the new version and makes the appropriate adjustments if necessary.

• MySQL Cluster is now released as a standalone product, based on MySQL 5.1 and the NDBCLUSTER storage engine.

• Significant changes in MySQL Cluster, such as the ability to store table data on disk.

• The return of the embedded library libmysqld, which was absent in MySQL 5.0.

• A plugin API that allows third-party modules extending the functionality (e.g., full-text search) to be loaded without restarting the server.

• Implementation of the full-text search parser as a plugin.

• A new table type, Maria (a fault-tolerant clone of MyISAM) [4].
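The partitioning feature in the list above can be illustrated with a toy model. MySQL performs partitioning inside the storage layer; this sketch only shows why a lookup by the partitioning key needs to touch a single partition. The class, the modulo function and the sample rows are all invented for illustration.

```python
def partition_for(user_id: int, partition_count: int) -> int:
    """User-defined partitioning function: here, a simple modulo."""
    return user_id % partition_count


class PartitionedTable:
    """Toy table whose rows are spread over several partitions."""

    def __init__(self, partition_count):
        self.partitions = [[] for _ in range(partition_count)]

    def insert(self, row):
        index = partition_for(row["user_id"], len(self.partitions))
        self.partitions[index].append(row)

    def select_by_user(self, user_id):
        # Only one partition is scanned instead of the whole table.
        index = partition_for(user_id, len(self.partitions))
        return [row for row in self.partitions[index] if row["user_id"] == user_id]


table = PartitionedTable(partition_count=4)
for i in range(12):
    table.insert({"user_id": i, "reps": i * 10})

rows = table.select_by_user(6)
sizes = [len(partition) for partition in table.partitions]
print(rows, sizes)
```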

The flexibility of MySQL comes from its support for many table types: users can choose MyISAM tables, which support full-text search, or InnoDB tables, which support transactions at the level of individual rows. Moreover, MySQL ships with a special EXAMPLE table type that demonstrates the principles of creating new table types. Thanks to its open architecture and GPL licensing, new table types are constantly appearing in MySQL.

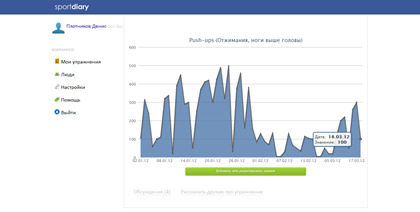

Using these technologies, and hosted on nginx and Apache servers, the "Sports Diary" application was set up. It is located at http://sportdiary.net/. Figure 4 shows the main page.

Figure 4 - Home page of the developed web application

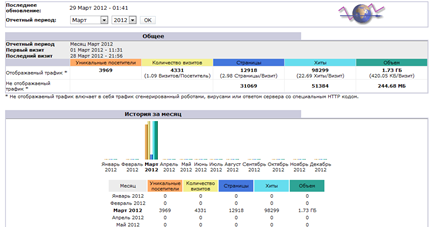

The idea of the sports diary is that the user registers on the site by filling out a form and then enters his exercises in a special window. All exercises are added to the user's list. By clicking on an exercise, the user can enter data for any day and view it as a graph. Figure 5 shows a graph for the "Push-ups" exercise. All users can view each other's statistics. To test the application under high load, advertising was placed on portals such as Yandex, Facebook and Mamba. The server runs AWStats, a log analysis module that collects server data and presents it in graphs and tables. Some of this data is shown in Figure 6 [3].

Figure 5 - Viewing an exercise

Figure 6 - Visitor statistics for the developed web resource

Conclusion

It is expected that the results will help the creators of popular web applications avoid problems with server load. The project is currently being refined. At the time of writing this abstract, the master's thesis is not yet complete. Final completion is planned for December 2012. The full text of the work and materials on the topic can be obtained from the author or his supervisor after that date.

References

[1]. Yandex. Internships in the development department (in Russian) [Web source].

Access mode: http://company.yandex.ru/job/vacancies/form.xml

[2]. Architecture of large projects: Facebook (in Russian) [Web source].

Access mode: http://habrahabr.ru/post/100020/

[3]. AWStats official web site [Web source].

Access mode: http://awstats.sourceforge.net/

[4]. Development of a high-load web application (in Russian). Plotnikov D.Yu., Malyovany E.F., Anoprienko A.Ya.

Source: Information Control Systems and Computer Monitoring (IUS and M 2012). Donetsk, DonNTU, 2011, pp. 431-435

[5]. The future of information technology (in Russian) [Web source].

Access mode: http://www.ua3000.info/articles/9/2010-08-29095.html

[6]. What is memcached [Web source].

Access mode: http://code.google.com/p/memcached/wiki/NewOverview

[7]. Hip-Hop for php by facebook [Web source].

Access mode: https://github.com/facebook/hiphop-php

[8]. Web page load analysis (in Russian) [Web source].

Access mode: http://webo.in/articles/habrahabr/16-optimization-page-load-time/

[9]. About Haystack [Web source].

Access mode: http://django-haystack.readthedocs.org/en/latest/

[10]. LoadImpact documents [Web source].

Access mode: http://loadimpact.com/learning-center/faq