Abstract

Contents

- Introduction

- 1. Theme Relevance

- 2. Goal and tasks of the research, planned results

- 3. Different types of parallel computing systems review

- 3.1 Multiprocessor system

- 3.2 Multicomputer system

- 3.3 Distributed system

- 4. The review of existing classical methods of processes scheduling in various computing systems

- 4.1 Various characteristics of task schedulers

- 4.2 Schedulers for multiprocessor systems

- 4.3 Schedulers for multicomputer systems

- 4.4 Schedulers for distributed systems

- 5. Conclusion

- References

Introduction

Continuous growth of user's requirements and inquiries to the speed of calculations is connected to the increase in complexity of calculations made by computing systems. Not the last role in computing systems' productivity increasement is played by methods and algorithms for the organization of difficult calculations.

Depending on structure of the computing system exist various algorithms and methods of planning of the activity, often pursuing specific aims (reduction of time of performance of a task, ensuring uniform loading or the maximum use of all available resources of system, maximizing quantity executed tasks/subtask for a unit of time, etc.). In a general view the problem of planning of processes can be reduced to the following items: * what of the processes standing in a queue on performance, the system time has to start at present; * what resources and in what quantity for performance of this task have to be used. In many respects the speed of receiving results depends on overall performance of the scheduler, after all nobody wants to waste precious time waiting while this or that process when others came to the end for a long time will be processed. Calculations and without that demand considerable resources and time still to spend it for nothing because of the inefficient schedule of works.

1. Theme urgency

At the moment it is developed a lot of universal and all of known algorithms of planning for various types of computing systems. There is also large number of the specific schedulers using methods who are effective only for a certain type of networks or narrowly targeted tasks, and the number of such algorithms grows day by day. There are no reasons for doubts that importance of effective distribution of resources and processes in any system will increase only with development of computer technologies as program and hardware, so the analysis, studying and modifications of algorithms of planning are and remain important part of development of the majority of computing systems of high complexity.

My master's thesis is devoted to an actual scientific task: to studying of possibility of modification of any modern algorithm of the scheduler of tasks for the purpose of increase of its efficiency (global or local). Naturally, at present there is a set of various methods of the similar planning realizing various ways and approaches. The comparative analysis of some such algorithms with the purpose to reveal the most suitable for further modification will be important part of the thesis.

2. Goal and tasks of the research

Research objective is increase of efficiency of a stage of planning of processes in parallel computing systems. By the main objectives of research are put:

- The review of various existing methods of planning of tasks.

- Analysis and comparative assessment of the most perspective algorithms.

- Search and detection of those characteristics of schedulers that can be improved for receiving more optimum results.

- Simulation of modifications, creation of new models. Carrying out repeated comparison. Identification positive (and negative) changes in results of planning.

- Development and improvement of most effective of the received methods of planning.

Object of research is the scheduler of the multiprocessor systems.

Object of research: improvement of algorithm of planning for the multiprocessor computing systems.

Within a master's thesis receiving actual scientific results of the following directions is planned:

-

Creation of more effective method of planning of processes.

-

Definition of the perspective directions of development of schedulers.

-

Modification of known algorithms of planning of tasks.

3. Review of different types of parallel computing systems

For performance of difficult calculations and effective operation of big computing systems usually use one of the following models:

- multiprocessor system;

- multicomputer system;

- the distributed system.

3.1 Multiprocessor system

For multiprocessor system existence of the general memory access to which can get any processor entering into its structure is characteristic. In similar system for the general memory use for exchange of information between various processors. It is increased by the data exchange speed, but realization of the general memory which hundreds and hundreds various processors, in practice much more difficult and labor-intensive process, than implementation of interprocessor interaction via additional modules will use at the same time.

3.2 Multicomputer system

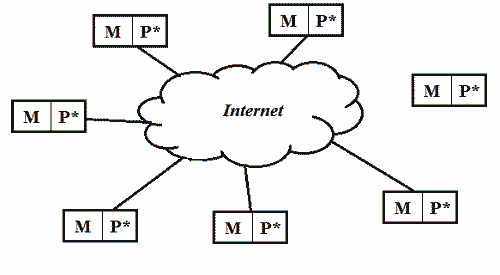

Unlike it, the multicomputer system has no general memory, but each of processors (we will designate them P) has a small site of local memory (M) where there is a storage of information processed and analyzed by it [6]. The similar architecture means that any of processors won't be able to get direct access to data of another, and it is complicates exchange of information already at a stage of combination of results of work a little. For implementation of a similar traffic of data between the protsessamy there is an additional element, a so-called subsystem of "an internal communication network".

In spite of the fact that the multicomputer system concedes in the speed of processing multiprocessor a little, realization of such system is much simpler and cheaper (at similar number of used processors).

Figure 1. Differences in a way of storage and data-working in systems (5 frames, 5 cycles of repeating, 61,8 kilobytes):

a) multiprocessor system consists out of 12 processors using the general memory;

b) multicomputer system consists out of 12 processors, each has his own memory.

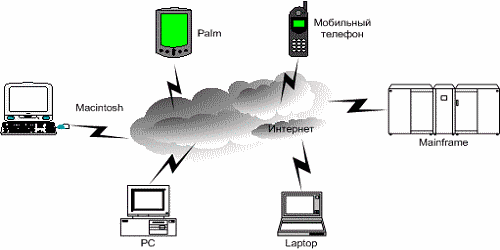

3.3 The distributed systems

It is possible to consider as the Distributed Computing System (DCS) a set of the independent computers connected by communication channels which for the foreign user are a whole [2]. RVS can be presented, as some separate personal computers or the servers united in uniform system by means of the Internet. Naturally, the message data on the Internet, as well as ways of the organization of the similar message (an exchange of packages, routing, etc. aspects) between the cars being in various parts of the globe (physically), it is impossible to call stable and definitely fast, and therefore temporary indicators of similar systems are quite low. However RVS differ high reliability, in a type of that failure of one element or knot of a network doesn't play a special role in its working capacity. Besides, they possess almost unlimited potential in respect of accumulation of productivity – the more computers will be united by this structure, the their general computing capacity will be higher.

|

|

Figure 2. General idea of distributed network architecture (system)

4 . The review of existing classical methods of planning in various computing systems

4.1 Various characteristics of schedulers of tasks

The way of the solution of a

question of planning of tasks

significantly depends on that, are independent or dependent (processes

in the list are connected with each other), and therefore they can be

broken into two big categories as a whole:

- planning of independent processes;

- planning of dependent processes.

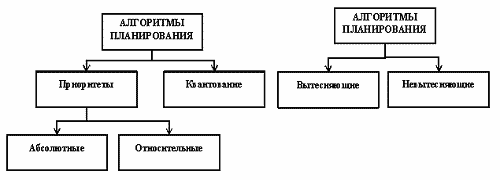

At present there is a set of

various algorithms of planning of the

processes pursuing the various aims and providing various quality of

multiprocessor processing in system. Among this set of algorithms we

will consider in more detail two groups of most often meeting

algorithms: the algorithms based on quantization, and the algorithms

based on priorities.

Figure 3. Basic classification of scheduling algorithms

The algorithms based on ideas of quantization, carry out change of active process if there is one of the following:

- process came to the end and left system,

- there was a mistake,

- process passed EXPECTATION to a condition,t

- he quantum of processor time allocated for this process is settled.

Process which settled the quantum, is transferred to a condition READINESS and expects when the new quantum of processor time, and for performance according to a certain rule will be provided to it new process of turn of the ready gets out. Thus, any process doesn't occupy the processor for a long time therefore quantization is widely used in systems of division of time.

Quanta can differ for various processes or in general change eventually. Processes, which not completely used allocated with it for work time (for example, because of leaving on performance of operations of input-output), often receive in system additional privileges at the subsequent service. It can be differently organized and turn of ready processes: cyclically, by the rule "the first came-the first was served" (FIFO), "the last came - the first was served" (LIFO) or by means of other, more difficult method.

In a different way classification of algorithms of planning can consider division them on forcing out and not forcing out. Non-preemptive multitasking (not forcing out multitasking) is a way of planning of processes at which active process is carried out until he, on own initiative, won't give management to the scheduler in order that that chose other process ready to performance from turn.

Preemptive multitasking (forcing-out multitasking) is such way at which the decision on processor switching from performance of one process on performance of other process is accepted by the scheduler, instead of the most active task.

The concepts preemptive and non-preemptive are sometimes identified with concepts of priority and priority-free disciplines that is absolutely incorrect, and also with concepts of absolute and relative priorities that incorrectly partly. Forcing-out and not forcing out multitasking is broader concepts, than priority types. Priorities of tasks can as be used, not to be used both at forcing out, and at not forcing out ways of planning. So in case of use of priorities the discipline of relative priorities can be carried to a class of systems with not forcing out multitasking, and discipline of absolute priorities - to a class of systems with forcing-out multitasking. And the priority-free discipline of planning based on allocation of equal quanta of time for all tasks to treat forcing-out algorithms.

The main distinction between preemptive and non-preemptive options of multitasking is extent of centralization of the mechanism of planning of tasks. At forcing-out multitasking this mechanism entirely is concentrated in the computing system, and the programmer writes the appendix, without caring of how it will be carried out in parallel with other tasks. Thus the system carries out the following functions: defines the moment of removal from performance of an active task, remembers its context, chooses from turn of ready tasks following and starts it on performance, loading its context.

At not forcing out multitasking the mechanism of planning is distributed between system and applied programs. The applied program, having received management, itself defines the moment of completion of the next iteration and returns management to system by means of any system call, and that, in turn, forms turns of tasks and chooses according to some algorithm (for example, taking into account priorities) the following task on performance. Such mechanism creates problems both for users, and for developers.

4.2 Schedulers of multiprocessor systems

Schedulers of multiprocessor computing systems a little than differ from schedulers of the processes used in the most operating system of the computer. They are urged to solve two following problems:

- what of processes ready to performance needs to be started in this concrete timepoint;

- on what of processors it is necessary to start the chosen process.

The main algorithm of planning

of independent processes is the

algorithm with use of turns of tasks. The main idea that all

processes ready to execution are located in turn of tasks.

The released processor receives process ready to execution of this

turn. In process of data processing there are new processes

ready to execution which are located in turn of tasks. In the

presence of several processes ready to execution there is a problem of

formation of rules of allocation of processes of turn.

All processes ready to execution, form or the uniform turn ordered on priorities, or some turns for processes with different priorities (for example, turn of processes with a high priority and with a usual priority). Switching of processors consists in a choice of the most priority process of turn which is in its beginning. Change of processes can be caused by exhaustion of quantum of time which has been taken away under its performance, or transition of process to other condition.

The considered scheme of planning allows:

- to provide to processors a mode of division of time;

- allows to balance automatically loading of processors of system, excepting a situation when one of them stands idle while other processors are overloaded.

It is possible to refer the possible competition of processes to shortcomings of this scheme at access to the specified turns. Besides, such scheme of planning doesn't consider the following circumstance. If a certain process long time was carried out on the same processor, the cache memory of this processor contains many data belonging to this process. After interruption of this process, it will logically start on the same processor, as earlier as its cache memory can contain all necessary information still. Some existing systems even carry out planning taking into account this circumstance, however it is negative influences uniform loading of all processors.

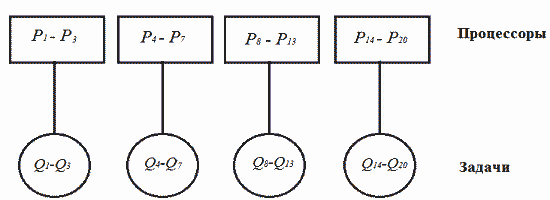

Very widespread algorithm for coherent processes consists in static splitting of all set of available processors into subsets and appointment to each of them separate group of coherent processes. For example, there are four groups of the connected processes of Q1-Q3, Q4-Q7, Q8-Q13 and Q14-Q20. Then, according to this algorithm, processes of the first group have to be appointed to the first subset of processors (P1-P3), processes of the second group - to the second subset of processors (P4-P7), etc. (see fig. 1).

Figure 4. Planning of related processes.

If in system there is a new group of the connected processes, is checked, whether are available number of processors necessary for it. If this is so, to each process is allocated the processor. In an opposite case any of processes of this group isn't started before emergence of enough of free processors. The processor is released and arrives in a pool of free processors only after completion of the process appointed to it.

The advantage of this algorithm of planning in decrease in overhead costs of switching of a context of processes. Shortcoming - in losses of time of processors when blocking. In this regard, the algorithm of joint planning of coherent processes where groups of the connected processes also are planned as a single whole became development of the described method, however are carried out in a mode of division of time of free processors. At the beginning of each quantum of time replanning of all processes is made.

4.3 Schedulers of multicomputer systems

In multicomputers each processor has own set of processes (it something reminds the algorithm described above). Therefore the planning stage here is reduced to two tasks:

- planning of processes on each of processors by means of local algorithm (similar to planning in single-processor system);

- distribution of new processes on processors.

The last task consists in search of such algorithm of distribution of processes on processors at which system effectiveness is maximum (most often as system effectiveness I understand "loading balancing" processors). After purpose of process to any central processing unit this process can't be carried out on other any more. For multicomputer systems it is possible to consider as the main algorithms the following:

The determined graph algorithm [3]. If data exchange schemes between processes are known, processes are appointed the central processing unit so that to minimize a network traffic. Algorithm: the bond graph between processes is under construction, then breaks into subgraphs so that between them there were as little as possible communications, therefore, as little as possible stages of data exchange.

The distributed heuristic algorithm initiated by the sender. If creating process of the central processing unit is overloaded, he tries to find in a random way such processor which is overloaded less it, and to transfer it process.

The distributed heuristic algorithm initiated by the recipient. At completion of process load of this processor is analyzed. If it is free, looks for in a random way loaded processor and requests process of its turn on performance [3].

Algorithm of "auction". The central processing unit forms "offer". To each processor certain "price" is appointed. At creation of affiliated process, parental analyzes the market and chooses the cheapest processor. Process sends the demand with the price to this central processing unit. The most expensive processor gets out of all demands, and performance of process is transferred to it. The price of processors is adjusted during time.

4.4 Schedulers of the distributed systems

The direction of the distributed systems is an implementation of communication in global networks and providing access to resources. Therefore for them processes, and granting necessary resources to them are planned not. Aspects of planning are:

- definition of executive resources for each task;

- definition of time during which resources are allocated for a task.

For the distributed systems often use graph algorithms that is more caused by the structure of such RVS. From them it is possible to allocate the following big groups of algorithms:

List planning. The method consists in sorting and ordering tops of the count of tasks in the list on any sign, and then consistently to form the plan for each separate task so that time of the beginning of its performance was minimum. The order of tasks in the list is defined by their priority. Instead of a priority it is possible to use a topological order of tasks in the column, the top or bottom level of top of the count or their sum (VU + WELL).

The bottom level of top in the column is the length of the greatest way in the column, beginning with this top. The top level of top is the length of the greatest way in the column, terminating in this top. Depending on priority type, the list of tops can be formed once in the beginning algorithm performance (a static priority) or the new task which is subject to addition in the plan, will be calculated anew on each iteration (a dynamic priority).

Algorithms of a clustering of tasks [7]. They are directed on minimization of interprocessor interaction. It is reached at the expense of association of processes strongly connected among themselves in separate clusters which then are displayed on processors of the set computing system. Here on each iteration tops, and edges of the count of tasks undertake a basis any more.

In algorithm of a linear clustering from the count of tasks at once allocate a critical way and already of it form a separate cluster. Then for all remained tops these iteration repeats. In a clustering on an edge on each iteration one edge is considered and is defined, whether will lead zeroing of weight of the given edge to reduction of total length of the plan. The result of performance of algorithm of a clustering generally still can't be considered as the required solution of a problem of planning - the turned-out clusters need to be displayed on processors of the target computing system.

5. Conclusion

Creation of qualitative and effective algorithm of planning of processes is pledge of successful functioning of any computing system. There where in a similar case it is impossible to do already available universal practices in this area, need of creation new or modifications of the existing scheduler, without doubts, will bring positive results.

The master's thesis is devoted to an actual scientific task: to studying of possibility of modification of any modern algorithm of the scheduler of tasks for the purpose of increase of its efficiency. Within the conducted researches acquaintance with a number of classical algorithms of planning is executed.

Further researches will be directed on:

- Carrying out analysis and comparative assessment of the most perspective algorithms.

- Search and detection of those characteristics of schedulers that can be improved for receiving more optimum results.

- Simulation of modifications, creation of new models. Carrying out repeated comparison. Identification positive (and negative) changes in results of planning.

- Development and improvement of most effective of the received methods of planning.

When writing this paper the master's thesis is yet complete. Final end: December, 2013. The full text of work and materials on a subject can be received at the author or his head after the specified date.

References

- Барский А.Б. Параллельные процессы в вычислительных системах. Планирование и организация / А.Б. Барский. — М.: Радио и связь,1990. 256 с.

- Таненбаум Э., ван Стеен М. Распределенные системы. Принципы и парадигмы. - СПб.: Питер, 2003. — 877 с.: ил. — (Серия «Классика Computer Science») — ISBN 5–272–00053–6.

- Таненбаум Э.: "Современные операционные системы", 3-е издание - СПб.: "Питер", 2011.- 1115 с.

- Морев Н. В. Сравнение алгоритмов планирования распределения задач для однородных распределенных вычислительных систем [Текст] / Н. В. Морев // Информационные технологии : Научно-технический и научно-производственный журнал. - 2010. - N 5. - С. 43-46

- Афраймович Л.Г. Модели и методы эффективного использования распределенных вычислительных систем [Учебно-методическое пособие] — Нижний Новгород, 2012 – 17 с.

- Принципы построения параллельных вычислительных систем. / Электронный ресурс. - Режим доступа: http://wiki.auditory.ru/Принципы_построения_параллельных_вычислительных_систем

- Воронцов К. В. Лекции по алгоритмам кластеризации и многомерного шкалирования. / Электронный ресурс. - Режим доступа: http://www.ccas.ru/voron/download/Clustering.pdf