Abstract

Content

- Introduction

- 1. Theme urgency

- 2. Goal and tasks of the research

- 3. Prospective scientific novelty

- 4. Review of the existing cryptographic algorithms

- 4.1 The random number generators

- 4.2 Symmetric encryption algorithms

- 4.3 Asymmetric encryption algorithms

- 4.3.1 Secret-key encryption

- 4.3.2 Public-key encryption

- Conclusion

- References

Introduction

Currently hashing as a way to ensure the security and encryption of electronic information, is found almost everywhere.

Brian Kernighan said it as one of the greatest inventions of science

.

Hashing — is an partition of any key sets which may typically be represented as strings or numbers into subsets having certain properties. These properties are described in the hash function are called hash address. In order to perform a reverse process there hash structure or hashtable. They provide fast access to an item on a hash table address. The ideal situation — is when you can access to the element in one call by specified key, but this hash address must be unique. In a real situation often turns out that according to the same hash-address may be more items, and have to be replaced because the ideal compromise.

Hashing mechanism has been known for a sufficient amount of time, however, the term hash appeared in print not so long ago, in 1967 [1]. According to Donald Knuth [2], the first time the idea and principles of hashing voiced G. Lan in 1953, an internal memo IBM. To resolve hash address conflicts, he proposed to use the method of chains. Zhini Amdahl — another member of IBM — proposed use of the open linear addressing. Arnold Dumi in 1956 first described the hash in the press. He proposed to use a hash address residue modulo a prime number. A much more thorough study of hash functions was published in 1963 by Werner Bukholts [3].

1. Theme urgency

Currently, data security is a critical problem in many industries, but because of the information society in the interest of a more resistant to cracking, as well as faster algorithms in their work can and will occur, both now and in the future.

Master's thesis is devoted to the research topic of the existing algorithms on their ability to optimize and improve resistance of algorithm to cracking.

2. Goal and tasks of the research

Goal of research: to analyze the existing data encryption algorithms and to develop an efficient algorithm for cryptanalysis.

The main tasks:

- Analysis of the existing cryptographic algorithms.

- Study methods SAT Solvers.

- Developing an algorithm of cryptanalysis.

The object of the research is data encryption algorithms.

The subject of the research is how to optimize data encryption algorithms.

3. Prospective scientific novelty

Scientific innovation in the Master's work will consist in the proposals for optimization of the existing cryptographic algorithms based on SAT methods Solvers.

4. Review of the existing cryptographic algorithms

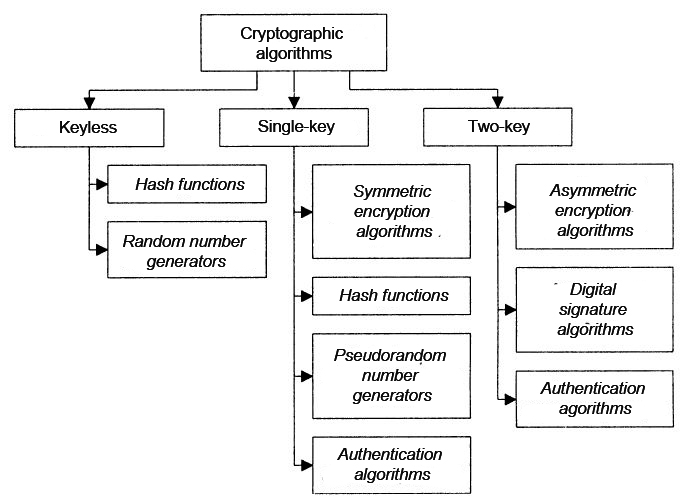

According to the classification provided by S. Panasenko [4], cryptographic algorithms are divided into keyless, single-key and two-key.

Keyless — do not use any keys during cryptographic transformations.

Single-key — used in his calculations a secret key.

Two-key — at various stages of the calculations are two kinds of keys: private and public.

A more detailed classification is shown in Picture 1.

Picture 1 — Classification of cryptographic algorithms

Some of the algorithms discussed below.

4.1 The random number generators

Cryptographic random number generators produce random numbers used in cryptographic applications, for example — for the generation of keys. The usual random number generators available in many programming languages and software environments are not suited to the needs of cryptography (they were created in order to get a statistically random distribution, cryptanalysts can predict the behavior of such random generator).

Ideally random numbers should be based on a real physical source of random information that can not be predicted. Examples of such sources

include noisy semiconductor devices, the least significant bits of digital audio, the intervals between interrupts devices or keystrokes.

Resulting from the physical noise source then distilled

cryptographic hash function so that each bit dependent on each bit. Often enough

to store random data is used quite a large pool (thousands of bits) and every bit of the pool is dependent on every bit of noise information

and every other bit of pool cryptographically secure (strong) method.

When there is no physical source of the noise, we have to use pseudo-random numbers. Such situation is undesirable, but often occurs in general purpose computers. It is always advisable to get a noise environment — say from the delays in the devices, the statistics of the use of resources, network statistics, keyboard interrupt, or something else. The aim is to get the data, unpredictable to the outside observer. To achieve this, a random pool must contain at least 128 bits of entropy present.

Cryptographic pseudorandom number generators typically use a large pool (seed-value) containing random information. Bits are generated by sampling from a pool with a possible run of a cryptographic hash function to hide the content of the pool from the outside observer. When you need a new piece bit agitated by the pool with a random encryption key (it can take as long as the unused portion of the pool), so that every bit of the pool depend on every other bit. New environmental noise should be added to the pool before mixing to make a new prediction pool value is even more complicated.

Despite the fact that by careful design of cryptographically strong random number generator to implement is not so difficult, this question is often overlooked. Thus, we should emphasize the importance of cryptographic random number generator — if it is done poorly, it can easily be the most vulnerable part of the system [5].

4.2 Symmetric encryption algorithms

Symmetric encryption algorithm requires one key to encrypt and decrypt messages. This is called a shared secret key, since all the users involved in the exchange of data have the same key.

Currently, there are a number of symmetric encryption algorithms. Among them we mention DES (Data Encryption Standard — Data Encryption Standard), IDEA (International Data Encryption Algorithm — International Data Encryption Algorithm) — a patented algorithm used in PGP, and the Blowfish — generic algorithm that is used in SSH.

With symmetric encryption algorithms is the concept of firmness of the code. Resistance — a measure of the resistance to cryptanalytic attacks. Persistence of the algorithm depends on the size of the key used. In the IDEA apply 128razryadnye keys. In the Blowfish algorithm, the key size is configurable from 32 to 448 bits. The longer the key, the more stable code. DES using 56 bit keys, so the algorithm is relatively weak.

To increase the firmness of the code can be used multiple keys or perform cryptographic algorithm repeatedly. An example of such an implementation is the algorithm TripleDES (integrated in some freely available utilities), where the data is first encrypted with one key is decrypted by another, and then, finally, the third re-encrypted.

Fundamental problem with symmetric encryption algorithms — the need to use a secret key. Before you start an encrypted dialogue, make sure that all the participants in the dialogue have the appropriate key. This can be achieved in many ways: send key by fax, by mail, use the services of courier services. But they are quite uncomfortable and have their weak points. A more reliable, although not devoid of drawbacks method — use asymmetric encryption to encode the secret key and send it by e-mail [6].

4.3 Asymmetric encryption algorithms

Asymmetric encryption algorithm requires the use of one key for encryption and a different but related it with a key — for decryption. One of the keys in this scheme is available to anyone who requests it. This is called the public key. Another key is known only to the owner and is called personal.

Asymmetric encryption algorithm requires the use of one key for encryption and a different but related it with a key — for decryption. One of the keys in this scheme is available to anyone who requests it. This is called the public key. Another key is known only to the owner and is called personal.

Asymmetric encryption algorithms can be used for its intended purpose (providing privacy), and to create digital signatures (authentication). But in its reliability they are not rivals symmetric encryption algorithms. In this regard, most frequently asymmetric algorithms are used to encrypt the secret key, transmitted over insecure channels, and to create digital signatures [6].

4.3.1 Secret-key encryption

Encryption based on the secret key that the access key has only authorized personnel. This key must be kept secret. If the key will fall into bad hands, then someone will be able to gain unauthorized access to encrypted information.

The most commonly used algorithm of the private key is the standard Data Encryption Standard (DES). This algorithm, developed by IBM in the seventies of the last century, adopted as the American standard for commercial and unclassified government communications. Modern computing speed by an order greater than the speed of computations in the seventies, so the DES algorithm is deprecated since at least 1998.

Other well-known system of secret-key encryption — it's RC2, RC4, RC5, triple DES (triple DES) and IDEA. Triple DES-algorithm provides sufficient protection. This algorithm uses the same encryption method as DES, but it uses three, using up to three different keys. The plaintext is encrypted using a first key is decrypted using the second key and then encrypted using a third key.

Picture 2 — The algorithm of secret-key encryption (animation, 16 frames, 5 reps, 53.2 KB)

Legend:

M — the message;

H(M) — hash message;

E — the digital signature;

K — the secret key;

ME — not encrypted message with a digital signature;

MEK — an encrypted message with a digital signature;

The obvious drawback of the algorithms with the private key is that to send secure messages to someone it is need to have safe way to transfer that person's private key [7].

4.3.2 Public-key encryption

The essence of the encryption public key is that used to encrypt a key for decryption and the other (however, such systems are often referred to as asymmetrical). Public and private keys are mathematically linked, data encrypted with the public key can be decrypted only with the corresponding private key, and the digital signature of the data signed using the private key can only be verified with the corresponding public key.

The basic premise that has led to the emergence of public-key encryption, was the fact that the sender of the message (the one who encrypts the message) is not necessarily to be able to decrypt it. That is, Even with the original message, the key with which it is encrypted, and knowing the encryption algorithm, it can not decrypt the message without the knowledge of the closed key decryption.

The first key, which is encrypted with the original message, called open and can be published for use by all users of the system. Decryption with the key can not. The second key is decrypted using a message called secret (closed) and must be known only to the rightful recipient of the message.

Algorithms for public-key encryption use the so-called permanent or one-way functions. These functions have the property that for a given value of the argument x relatively simple to calculate the value of the function (X), however, if the known value of the function y = f (x), there is no simple way to calculate the argument x. For example, the SIN. Knowing x, is easy to find the value of SIN (x) (E.g., x = π, then SIN (π) = 0). However, if the SIN (x) = 0 is uniquely identify x impossible, since in this instance x can be any number, determined by the formula i * π, where i — Integer [8].

Conclusion

Hashing, the occurrence of which were in the middle of the twentieth century, actively used and developed today. A large set of algorithms developed due to the wide range of their applications and the need to protect a huge number and variety of data. According to some views, the discovery of a linear hashing Witold Lytvyn [9] is the most important discovery with 197 0x's. Linear Hashing has nothing to do with the classical linear addressing technology that allows multiple hash locations to grow and serve in the inserted and deleted items. Linear Hashing may also be used for large databases, distributed between different nodes in the network.

In this abstract studied only some data encryption algorithms. Were considered only the basic techniques, without details of their implementation.

Important note

This master's work is not completed yet. Final completion: December 2013. The full text of the work and materials on the topic can be obtained from the author or his head after this date.

References

- Hellerman H., Digital Computer System Principles. McGraw-Hill, 1967.

- Кнут Д., Искусство программирования, т.3. М.: Вильямс, 2000.

- Buchholz W., IBM Systems J., 2 (1963), 86–111

- Панасенко С.П., Алгоритмы шифрования. Специальный справочник. – СПб.: БХВ-Петербург, 2009, - 576 с.: ил.

- Введение в криптографию [Электронный ресурс]. – Режим доступа: http://algolist.manual.ru/defence/...

- Симметричное шифрование [Электронный ресурс]. – Режим доступа: http://www.open-security.org/linux/...

- Шифрование с закрытым ключом [Электронный ресурс]. – Режим доступа: http://sevidi.ru/phpstroy/...

- Шифрование с открытым ключом [Электронный ресурс]. – Режим доступа: https://sites.google.com/site/...

- Litwin W., Proc. 6th International Conf. on Very Large Databases (1980), 212-223