Abstract on the theme of master's work

Content

- Introduction

- 1. Theme urgency

- 2. Goal and tasks of the research

- 3. Overview of researches and developments

- 3.1 Overview of international sources

- 3.2 Overview of national sources

- 3.3 Overview of local sources

- 4. Dynamic control of vergence system

- Conclusion

- References

Introduction

Vision is the most important source of information for humans and many animals. The visual analyzer provides several times more useful information about the outside world than all the other senses together. For any artificial agent, the ability to perceive visually is a critical advantage. Computer vision is an area of artificial intelligence that comprises methods and techniques enabling machines to receive, process, analyze, and recognize visual information coming from one or more cameras. The field is young, diverse, and dynamic. Research in computer vision strives to give robots perceptive capabilities comparable to those of a human being.

The term "active vision" denotes a paradigm in which the visual system of a robot is understood in the context of the behavior of the robot interacting with the changing world around it. In Ballard's formulation [1], an active (animate) vision system is one that can actively change its point of view in response to physical stimuli.

1. Theme urgency

In the last few decades, there has been a significant increase in interest in active control of image acquisition as a way to simplify and speed up perceptual tasks. The fundamental ideas of the active approach, proposed by Aloimonos [2] and Bajcsy [3], have been tested and greatly extended by many researchers.

Processing of visual information (images) and artificial vision for robots is one of the most promising and rapidly developing applications of artificial neural networks. The most interesting results were obtained mainly by Western researchers in an effort to create the most biologically plausible neural structures and neural methods of image processing, pattern recognition, and memorizing objects.

My research in the field of computer vision is devoted to the study and development of new methods and techniques of computer vision that are active, binocular, and suitable for implementation in humanoid robotics.

2. Goal and tasks of the research

The goal of this study is to develop new biologically plausible neural visual processing solutions for humanoid robots.

Main objectives of the study:

- Analysis of neural architectures and neural algorithms of computer vision and analysis of research in the field of active stereo vision for robotics.

- Development of a visual control system for the behavior of a binocular robot.

Object of study: Processing of visual information by neural networks, neural network control.

Subject of study: Biologically plausible neural network structures of active vision for robots.

Within the framework of the master's research, the following scientific results are planned:

- Development of new and modification of existing models of neural networks for the processing and interpretation of input stereo images.

- Creation of a neural network architecture for controlling eye movements.

- Justification of the biological plausibility of the developed neural system.

3.1 Overview of international sources

3.1.1 Active vision

The paradigm of active vision for robots originates from the work [2], in which the authors investigated typical computer vision tasks such as shape from shading, shape from contour, shape from texture, and structure from motion. Aloimonos proves that an active observer can solve these basic problems much more efficiently than a passive one. Tasks that are nonlinear and ill-posed for a passive observer become linear and well-posed for an active one. The basic assumption made by Aloimonos is that the observer moves in a known manner, has several points of view, and makes many controlled observations of the scene, i.e., receives more reliable information about the environment.

Bajcsy [3] introduces the concept of an active sensor: a camera that, while operating, can change its internal parameters and its position in space according to some perceptual strategy. On this basis, the task of active vision can be stated as the development of control strategies that are combined with the process of acquiring visual information and depend on the state of the system and its objectives.

The development of humanoid robotic heads with a binocular visual system has made it possible to use controlled camera movements to build systems that operate continuously in real time. Some of the first such systems were described in [1], [4] and [5]. The authors showed how a combination of a few simple visual behaviors can be used to implement saccades, vergence, and neck movement, and to model the vestibulo-ocular reflex.

The paradigm of active vision proposed in [1] has had a huge impact on the development of computer vision for robotics. In this article, Ballard formulated the tasks of the visual behavior of a robot and showed the differences between the active and passive approaches to these tasks, as well as the benefits of the active one. Many works, including those covered in my bibliographical research, seek in one way or another to take advantage of the ideas of active vision expressed in the article «Animate Vision» [1].

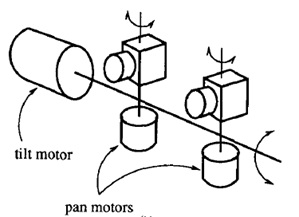

The advantages of the «Animate Vision» paradigm were first confirmed by the construction of the Rochester robot, whose cameras have three degrees of freedom [6], [7] and [14]. Experiments demonstrated that, although the control system complicates the visual system, active vision greatly simplifies the computation of visual tasks. Although the Rochester robot is far from the actual architecture of the human eye, it solves low-level vision problems in real time.

Figure 1 – Schematic of the Rochester robot [6]

Article [5] describes a control system based on the paradigm of «active intelligence». The paradigm of active intelligence includes the idea of hierarchical control:

- At the top level a certain cognitive process is performed that achieves certain goals.

- At the bottom level of the hierarchy, simple behaviors (analogs of reflexes) are implemented. They function independently but can influence each other. Examples are object tracking by minimizing blur, or changing the direction of gaze when an attractor appears.

In his next article [8], Brown described a system that combines visual behaviors such as saccades, smooth tracking of a moving target, vergence, and the vestibulo-ocular reflex (stabilization of the eyes relative to the movement of the head). Two versions of the system are described:

- Without delay. In this case, the individual behavior controllers are largely independent of each other. Such a system is unstable because of delays and spontaneous interactions.

- «Non-zero delay control». It is built on prediction methods, namely the Smith predictor [9].

In article [15], the authors described a system that implements a set of algorithms for simulating eye movements: focus, vergence, saccades, and smooth tracking. The advantages of their work are the reliability and real-time performance of each individual algorithm. To achieve this, they used simple algorithms that allow fast computation.

Vergence is a movement of one or both eyes in which the visual axes diverge or converge. In other words, it is the simultaneous movement of both eyes in opposite directions to obtain or maintain binocular vision. The purpose of vergence control is to keep the eyes or cameras fixated on some point in space, regardless of changes in the viewing angle or the distance to the target. The determining factor for using a vergence control system in real time is fast calculation of disparity. In article [15], the authors described and implemented an algorithm for disparity calculation based on normalized cross-correlation (NCC) [20], [21].
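To illustrate the idea of NCC-based disparity search, here is a minimal sketch on a single image row. It is not the algorithm of [15]; the function names `ncc` and `best_disparity`, the one-dimensional window, and the toy intensity rows are assumptions made only for this example.

```python
import math

def ncc(a, b):
    """Normalized cross-correlation of two equally sized intensity lists."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a) *
                    sum((y - mean_b) ** 2 for y in b))
    # A flat window has zero variance; treat it as uncorrelated.
    return num / den if den > 0 else 0.0

def best_disparity(left_row, right_row, center, half, max_shift):
    """Return (shift, score) of the window in right_row that best matches
    the window of left_row centered at `center` (hypothetical helper)."""
    template = left_row[center - half:center + half + 1]
    best_shift, best_score = 0, -2.0
    for shift in range(-max_shift, max_shift + 1):
        start = center - half + shift
        if start < 0 or start + len(template) > len(right_row):
            continue  # window would fall outside the row
        score = ncc(template, right_row[start:start + len(template)])
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift, best_score

# Toy rows: the same bright blob appears 3 pixels further right in right_row.
left = [10, 10, 10, 50, 200, 50, 10, 10, 10, 10, 10, 10]
right = [10, 10, 10, 10, 10, 10, 50, 200, 50, 10, 10, 10]
shift, score = best_disparity(left, right, center=4, half=2, max_shift=4)
```

In a vergence loop, the sign and size of `shift` would tell the controller which way and how far to turn a camera so that the disparity at the fixation point approaches zero.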

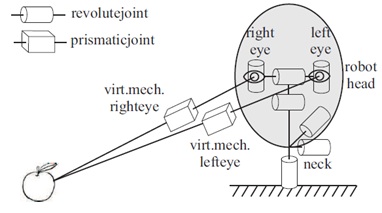

In article [22], an approach to controlling the gaze direction of a robot head is proposed. It is based on the concept of a virtual link that connects the eye to a point in 3-D space. With this mechanism, the tracking task can be described in its most general form, which makes it possible to use the different control methods, approaches, and strategies described in the literature and to implement them on different robots. The virtual link can be regarded as an additional joint attached to the eye, i.e., it adds a degree of freedom. When the eye moves, the virtual link moves too.

Figure 2 – The virtual link concept [22]

A remarkable feature of this work is that, thanks to the virtual link concept, it is easy to use the redundant degrees of freedom of the robot head to achieve a variety of head movements, better tracking, and avoidance of joint limit positions.

In articles [23], [24], the authors use log-polar images for recognizing and tracking objects. To control the direction of gaze, they use learning methods that determine the movement of the eyes.

A kinematic and dynamic visual controller is proposed in [25]. It is quite simple because it separates the kinematic links of the robot head. By splitting the control into separate movements, the authors achieved simple sensory-motor control.

In [26], a simple decoupled controller is proposed and implemented as a network of PD controllers (proportional-derivative controllers). The system uses two cameras for each eye: one with a wide field of view and one with a narrow field of view. Therefore, the authors had to implement a transformation which guarantees that the point of interest is in the center of the field of view of the narrow-field camera even when it is tracked by the wide-field one.

In [27], the authors proposed a hard-parameter motion controller. They developed a mapping from the two-dimensional space of image points to a five-dimensional space of degrees of freedom. It is implemented for only one eye, while the other eye copies the movements of the first.

3.1.2 Stereo vision

The following play a significant role in the geometry of stereo vision:

- Projective geometry, which describes the transformation of the spatial coordinates of a point into projective coordinates on the image plane of a camera.

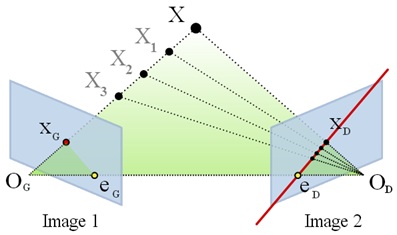

- Epipolar geometry, the geometry of two cameras, which describes the geometric relationship between two images of the same object. A fundamental element of this geometry is the epipolar constraint: for a point projected onto the first image plane, its projection onto the other image plane lies on the corresponding epipolar line.

Figure 3 – Epipolar constraint [10]
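The epipolar constraint is commonly written as x_r^T F x_l = 0, where F is the fundamental matrix and x_l, x_r are corresponding points in homogeneous coordinates. The sketch below evaluates this expression; the matrix `F_RECTIFIED` is an assumption for illustration (an ideal, purely horizontally shifted camera pair, for which epipolar lines are image rows) and is not taken from the cited works.

```python
def epipolar_residual(F, p_left, p_right):
    """Return x_r^T * F * x_l for pixel coordinates (x, y) in each image.
    A value of zero means the pair satisfies the epipolar constraint."""
    xl = (p_left[0], p_left[1], 1.0)    # homogeneous left point
    xr = (p_right[0], p_right[1], 1.0)  # homogeneous right point
    Fx = [sum(F[i][j] * xl[j] for j in range(3)) for i in range(3)]
    return sum(xr[i] * Fx[i] for i in range(3))

# Assumed fundamental matrix of an ideal rectified pair:
# the residual reduces to (y_left - y_right).
F_RECTIFIED = [[0.0, 0.0, 0.0],
               [0.0, 0.0, -1.0],
               [0.0, 1.0, 0.0]]

same_row = epipolar_residual(F_RECTIFIED, (320, 240), (300, 240))
other_row = epipolar_residual(F_RECTIFIED, (320, 240), (300, 250))
```

For this assumed geometry the search for a corresponding pixel is restricted to a single row, which is why a horizontal stripe is sufficient when the optical axes of the cameras lie in one plane.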

There are two basic approaches to the problem of finding a pair of corresponding pixels: window-based (region-based) methods [16], [17] and methods based on image features [18], [19].

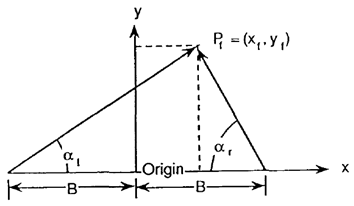

In articles [11], [12], J. Crowley described a method that uses epipolar constraints to find the spatial coordinates of the point of interest. The position of this point in space is defined relative to the center point of the head, which lies on the baseline connecting the center points of the two cameras. The point of interest naturally lies in the plane defined by the optical axes of the two cameras, at their intersection.

Figure 4 – Finding the space position of the point of interest [11]

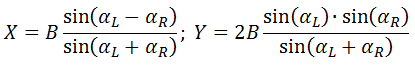

With the Cartesian coordinate system defined as shown in Figure 4, the position of the fixation point can be calculated from the length of the baseline and the angles aL and aR [11], [12].
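The formula from [11], [12] is not reproduced in this text. As an illustration only, the following sketch triangulates the fixation point under an assumed convention: the origin is the midpoint of the baseline, the x axis runs along the baseline towards the right camera, z points forward, and aL and aR are the interior angles (in radians) between the baseline and the left and right optical axes. The function name `fixation_point` is hypothetical.

```python
import math

def fixation_point(baseline, angle_left, angle_right):
    """Intersect the two optical axes in the plane that contains them.

    Assumed convention: cameras at x = -baseline/2 and x = +baseline/2,
    angles measured between the baseline and each optical axis,
    both strictly between 0 and pi/2.
    """
    tl = math.tan(angle_left)
    tr = math.tan(angle_right)
    z = baseline * tl * tr / (tl + tr)           # distance from the baseline
    x = 0.5 * baseline * (tr - tl) / (tl + tr)   # lateral offset from head center
    return x, z

# Symmetric fixation: both axes at 60 degrees to a baseline of length 1.
x, z = fixation_point(1.0, math.radians(60), math.radians(60))
```

With symmetric angles the point lies straight ahead of the head center; as the angles approach 90 degrees the axes become nearly parallel and the estimated distance grows rapidly, so small angle errors matter most for distant targets.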

For active stereo vision systems to function, it is important to maintain calibration, i.e., to continuously maintain the correspondence between point projections on the left and right cameras. In paper [13], the authors described and compared two methods for dynamic calibration of cameras. The first is based on the fundamental matrix and uses the epipolar constraint and linear optimization. The second is iterative and based on a differential model of optical flow.

3.1.3 Neural network approach in computer vision

Today the term neural network covers a large number of models that try to simulate the functionality of the brain by reproducing some of its basic structures. The first model was proposed by McCulloch and Pitts in 1943; they studied the logical operations performed by neurons. There is a large body of survey literature on neural networks [28-31]. Existing neural networks for image interpretation and object recognition are based on analyzing parts of an image and extracting local features, which are then integrated into more general classes.

Character recognition is undoubtedly the most advanced application of neural networks. Various methods have been tested on this problem with relative success, such as those using backpropagation (BP) [32], [33].

Another well-studied problem is the recognition of a particular object in a given scene [34-38]. In all cases, once the object has been selected, it is normalized so that it fills the recognition window. Special procedures scale and rotate the object to facilitate recognition. Training uses backpropagation. The training examples are the various possible targets together with their identities. To make the training robust to noise, which may be significant, the authors train their networks on noisy patterns. The main limitation of this method is that it requires the objects to be recognized to be completely separated from the background, which is hard to achieve for complex images such as natural scenes and aerial photographs.

3.2 Overview of national sources

In Ukraine, a number of departments and universities study digital image processing, including approaches that use neural networks.

- The pattern recognition department of the International Research and Training Center for Information Technologies and Systems of the National Academy of Sciences of Ukraine and the Ministry of Education of Ukraine, headed by Dr. Viacheslav Matsello, conducts research in the following fields:

- Theoretical studies on pattern recognition, development and creation of intelligent information technologies of recognition and image processing.

- Creating models and devices that improve the quality of human-machine and robotic systems.

- Development of information technologies of intelligent control of moving objects. Mobile robotic systems.

- One of the research areas at the department of signal reception, transmission and processing of the Zhukovsky National Aerospace University is the use of neural networks for pattern recognition and for signal and image processing (performed by Yeremeev O.I.).

- The department of computer technology and programming of Kharkov Polytechnic Institute is working on the development of devices and design of image processing systems.

- The department of electronic systems of Donbass State Technical University carries out scientific research in areas of computer vision and image analysis algorithms.

- The department of computers of Oles Gonchar Dnepropetrovsk National University conducts research in the field of digital signal and image processing using neural networks and fuzzy logic algorithms.

- Zakarpatskiy State University conducts research on the "Development of the theoretical foundations of information and computer vision and perception, processing and transmission of data for different domains of information infrastructure" (the project "Computer vision", supervisor: prof. Gritsik V.V.).

- The department of geographic information systems of National Mining University researches image processing and pattern recognition, including data obtained from space.

3.3 Overview of local sources

For DNTU, the scientific problem of computer stereo vision for robotics is new. However, the following authors have studied neural systems that overlap to some extent with my research. These are mainly various techniques of image interpretation using neural networks:

- Master's thesis of A. Fedorov, "Study of contour segmentation methods for building OCR systems" [39].

- Article of O. Shparbera, "Pattern recognition on the basis of infrared thermography" [40].

- Article of G. Kostecki, O. Fedyaeva, "Image recognition of human faces using a convolutional neural network" [41].

- Article of I. Kolomoitseva, "Solution of the problem of pattern recognition on the example of the information system of screening adolescent girls" [42].

- Article of O. Blizkaya, Y. Skobtsov, "Development of a method and algorithm for recognizing two-dimensional contrast images of objects by invariant informative features" [43].

- Master's thesis of S. Poltava, "Study of the effectiveness of algorithms for recognizing colored marking of objects for vision systems" [44]. The work concerns the basic procedures and methods of pattern recognition, especially in the design of robotic systems. Unlike my work, the system does not use neural networks, which increase the recognition rate.

- Master's thesis of A. Aphanasenko, "Development of a specialized hybrid pattern recognition system based on fuzzy neural networks" [45]. The work applies a neural network to the problem of pattern recognition.

4. Dynamic control of vergence system

4.1 Statement of the Problem

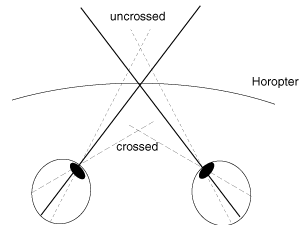

In binocular systems, vergence is a movement of one or both eyes in which the visual axes diverge or converge [46]. This movement is necessary for both eyes to look at the same point in space. For biological visual systems such as the human one, this condition is essential for normal functioning because visual acuity is uneven: precision is higher in the fovea and lower at the periphery of the visual field. Controlled vergence gives artificial stereo vision many advantages, from simplifying the formulation and solution of computer vision problems to the aesthetics and ergonomics of human-robot interaction.

My research is devoted to methods of vergence control in stereo vision based on the calculation of disparity. Disparity is the difference between the relative positions of corresponding image points on the retinas of the left and right eyes. Figure 5 shows the cases of disparate and corresponding points [47]. In artificial vision, if both cameras are directed at the same object, it is projected onto the centers of the image planes (the analogs of retinas) and the disparity is close to zero. If the object is projected onto the center of one camera and the periphery of the other, the disparity is large and stereo vision tasks are difficult to solve.

Figure 5 – Projections of disparate and corresponding points on the retinas or on the image planes of the cameras

4.2 Literature overview

We analyzed various methods of vergence control, which are usually part of a complete active vision system. Since the first publications on active (animate) computer vision, different authors have stressed the importance of vergence control [1], [2]. As in biological visual systems, this control is often based on disparity estimation. At present, there are two main ways of calculating disparity and/or finding a pair of corresponding pixels in stereo images [48]: region-based (window-based) methods [49] and feature-based methods [50].

The binocular system in my research is based on the algorithms and the ANN structure proposed in [51].

4.3 Objectives and statement of a research problem

The purpose of the study is to implement a system based on an artificial neural network that solves the vergence task. On the basis of the calculated disparity, the ANN outputs a control signal to the servo motors of the cameras in order to direct the two cameras at the same point in space. To function, the ANN should not need conventional computing facilities such as a computer with the von Neumann architecture. That is, it can fully exploit the potential of massive parallelism, so the solution can be implemented on a cluster or a multi-core processor.

The main objective of this study is to identify the advantages and disadvantages of the method of vergence control in active stereo vision described in [51], and to propose and implement improvements to the structure of the ANN and to the algorithms of its parallel implementation. The research is performed within the framework of the French-Ukrainian MASTER program (a cooperation between Donetsk National Technical University and the University of Cergy-Pontoise (France) [52]) as part of the master's study.

4.4 Problem solution and research results

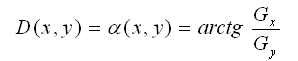

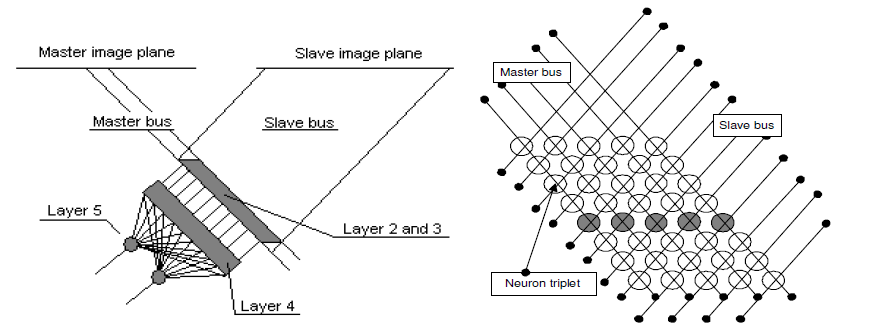

One of the cameras is the master and stays fixed with respect to the vergence task; the other is the slave, whose position is regulated by the neural network.

Figure 6 – Fire-i cameras used in the experiment

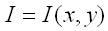

To solve this problem, we propose to use an ANN of the multi-layer perceptron type without feedback. The neural network is set up so that it turns the slave camera and minimizes disparity. It is a biologically inspired five-layer ANN that compares symbolic features of the images in order to determine the direction of rotation of the slave camera. For the pixel with coordinates (x, y), the symbolic features are:

- Grayscale intensity:

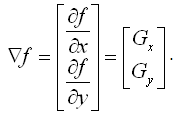

- Gradient magnitude. The gradient is calculated as:

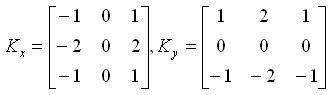

The gradient can be calculated by convolving the image with the following kernels (convolution matrices) Kx and Ky, also known as the Sobel operator [53]:

Gradient magnitude at point (x, y) can be calculated as:

- Orientation of the gradient at the point (x, y) can be calculated as:

Figure 7 shows the three features of pixels already mentioned in visual form.

Figure 7 – Grayscale intensity (a), gradient magnitude (b) and the gradient orientation (c)
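The three pixel features can be sketched in a few lines. The kernels below are the standard 3x3 Sobel kernels, and the magnitude and orientation use the usual definitions G = sqrt(Gx² + Gy²) and D = atan2(Gy, Gx); whether [51] uses exactly these sign and scaling conventions is an assumption. The function name `pixel_features` and the image layout (a list of rows of grayscale values) are chosen for this example only.

```python
import math

# Standard Sobel kernels (assumed sign convention).
KX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
KY = [[-1, -2, -1],
      [0, 0, 0],
      [1, 2, 1]]

def pixel_features(img, x, y):
    """Return (I, G, D) for an interior pixel of a grayscale image.

    I: intensity, G: gradient magnitude, D: gradient orientation in radians.
    The kernels are applied without flipping (correlation), which only
    affects the sign convention of the gradient.
    """
    gx = sum(KX[j][i] * img[y + j - 1][x + i - 1]
             for j in range(3) for i in range(3))
    gy = sum(KY[j][i] * img[y + j - 1][x + i - 1]
             for j in range(3) for i in range(3))
    return img[y][x], math.hypot(gx, gy), math.atan2(gy, gx)

# Intensity increasing from left to right: a purely horizontal gradient.
ramp = [[0, 100, 200],
        [0, 100, 200],
        [0, 100, 200]]
intensity, magnitude, orientation = pixel_features(ramp, 1, 1)
```

Before such features are fed to a network that compares values on a 0..255 scale, the magnitude and orientation would have to be rescaled to that range; the rescaling is not specified here and is left out of the sketch.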

The ANN shown in Figure 8a has five layers, contains no feedback, and does not require any training. The first layer receives input data from the regions of interest (ROI): a square, for example 15*15 pixels, in the center of the image obtained from the master camera, and a stripe of size 640*15 in the image obtained from the slave camera. Since each pixel has three characteristics (I, G, D), the first layer has 3*15*15 + 3*width*15 neurons, where width = 640 is the width of the stripe. The outputs of the first-layer neurons are then combined at the second layer in the manner shown in Figure 8b.

The second layer comprises 15*15*(640-14) neuron triplets, which calculate the differences between the characteristics (I, G, D) of pixel pairs.

Figure 8 – General view of ANN (a) and the slice of a second layer (b) [51]

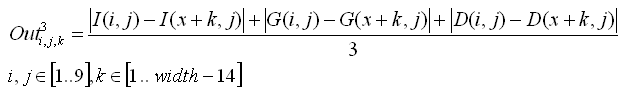

Each neuron of the third layer has three inputs (I, G, D) and computes the arithmetic mean of the characteristics. Because of its topology, the ANN yields the correlation Out(i, j, k) between the pixel M(i, j) of the master image and the pixel S(k + i, j) of the slave image.

Together, the second and third layers implement a pairwise comparison of pixel features. The set of neurons with a given k = kn performs a pixel-by-pixel comparison of two windows: the n*n master window and the kn-th window obtained from the slave camera.

At the fourth layer, the k-th neuron calculates the correlation between the window taken from the center of the master camera image and the k-th window of the stripe obtained from the slave camera. Each neuron has 3*n*n inputs, whose values it sums after subtracting each of them from 255.
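The comparison performed by layers two to four can be sketched as a plain loop. This is a simplified reading of the description above, not the network of [51]: it assumes that all three features are already scaled to 0..255, takes absolute differences in layer two, averages them in layer three, and sums 255 minus the mean in layer four. The function name `window_scores` and the data layout are hypothetical.

```python
def window_scores(master, stripe):
    """Similarity of the master window to every window of the slave stripe.

    master: n x n grid of (I, G, D) tuples from the master camera.
    stripe: n rows x width grid of (I, G, D) tuples from the slave camera.
    Returns width - n + 1 scores; a larger score means a better match.
    """
    n = len(master)
    width = len(stripe[0])
    scores = []
    for k in range(width - n + 1):          # one fourth-layer neuron per k
        total = 0.0
        for j in range(n):
            for i in range(n):
                m = master[j][i]
                s = stripe[j][k + i]
                diffs = [abs(a - b) for a, b in zip(m, s)]  # layer 2
                total += 255.0 - sum(diffs) / 3.0           # layers 3 and 4
        scores.append(total)
    return scores

# Toy data: a 2x2 master window that reappears at position k = 2.
master = [[(10, 0, 0), (200, 0, 0)],
          [(10, 0, 0), (200, 0, 0)]]
row = [(0, 0, 0), (0, 0, 0), (10, 0, 0), (200, 0, 0), (0, 0, 0)]
stripe = [row, row]
scores = window_scores(master, stripe)
```

For a 15*15 window and a 640-pixel stripe this loop produces 640-14 = 626 scores. Every iteration of the outer loop is independent of the others, which is what makes the scheme attractive for a parallel implementation.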

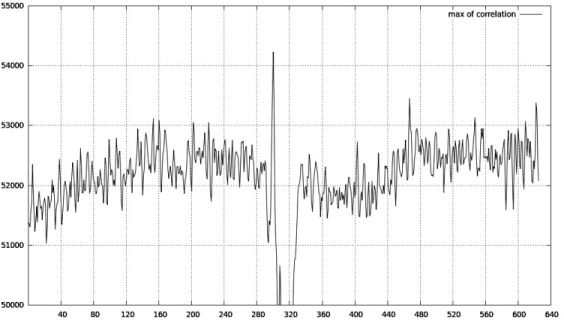

The cameras have a resolution of 640x480 pixels. This means that the central window has the index nc = (640-14)/2 = 313. In the experiment, when the master camera (right) was looking at the left side of a thick vertical line and the slave camera (left) was looking a little further to the right, the neuron with index n = 300 had a stable maximum output value.

Figure 9 – The output values of the fourth layer neurons

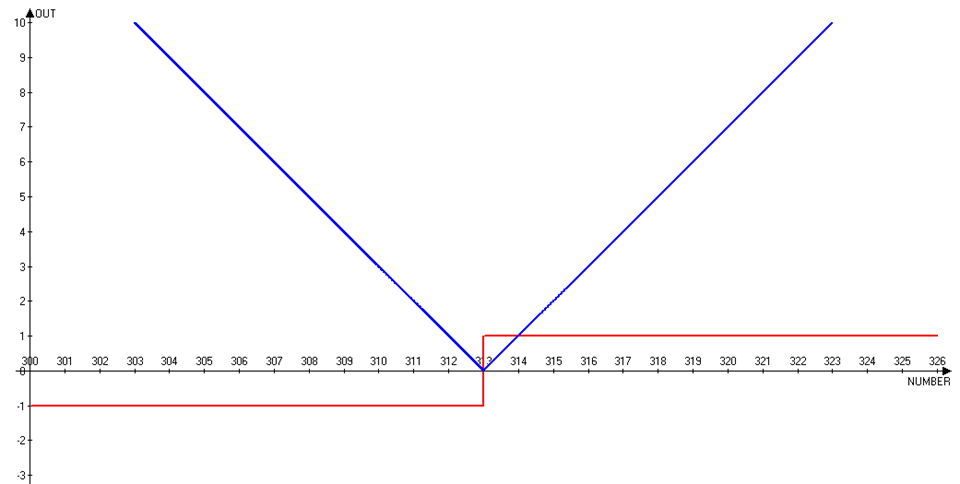

For the two neurons of the fifth layer to be usable for controlling the slave camera, the fourth layer must work according to the "winner take all" (WTA) principle: only the winner neuron is activated, and only the value of its transfer function differs from zero.

The fifth layer contains two neurons: one indicates which way the slave camera should be rotated, and the other indicates the magnitude of that rotation. Both neurons receive inputs from all the neurons of the fourth layer (of which only one is active). Figure 10 shows the input weights of the fifth-layer neurons as a function of the position of the fourth-layer neuron.

Figure 10 – Input weights of the fifth layer neurons: red graph is for the neuron indicating the direction, blue graph is for the neuron indicating the magnitude of rotation.
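A minimal sketch of the WTA step and of the two output neurons follows. The actual weight curves are those of Figure 10 and are not reproduced here; the sketch assumes the simplest shapes consistent with the description, a sign function for the direction neuron and a linear ramp |k - nc| for the magnitude neuron, and it assumes that the winner outputs 1. The function names are hypothetical.

```python
def winner_take_all(scores):
    """Return a vector with 1.0 at the position of the maximum, 0.0 elsewhere."""
    winner = max(range(len(scores)), key=scores.__getitem__)
    return [1.0 if k == winner else 0.0 for k in range(len(scores))]

def fifth_layer(active, center):
    """Weighted sums of the two output neurons.

    Assumed weights: direction = sign(k - center), magnitude = |k - center|,
    where k is the index of the fourth-layer neuron.
    """
    w_dir = [(k > center) - (k < center) for k in range(len(active))]
    w_mag = [abs(k - center) for k in range(len(active))]
    direction = sum(w * a for w, a in zip(w_dir, active))
    magnitude = sum(w * a for w, a in zip(w_mag, active))
    return direction, magnitude

# The situation from the experiment: 626 windows, central index 313,
# maximum response at neuron 300.
scores = [0.0] * 626
scores[300] = 5.0
direction, magnitude = fifth_layer(winner_take_all(scores), center=313)
```

Here the winner lies 13 windows to one side of the center, so the direction output is negative and the magnitude is 13. How the sign maps to a physical rotation of the slave camera, and how window offsets map to servo angles, depends on the hardware and is not specified in this sketch.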

The propagation of information through the ANN is shown in Figure 11.

Figure 11 – The functioning of the ANN

(animation: 5 frames, 20 cycles of repeating, 270 kilobytes)

Conclusion

The master's thesis is devoted to the relevant scientific task of creating a biologically inspired vision system for robots. As part of the research carried out so far:

- Methods of vergence control in robotics were analyzed, in particular those based on neural networks.

- A neural network that controls the cameras was implemented.

- A number of experiments on the use of neural networks in computer vision applications were conducted and their results analyzed. The system operates stably if the optical axes of the two cameras lie in one plane.

Further research is focused on the following aspects:

- Implementation of other visual movements.

- Uniting all the implemented movements into a holistic visual behavior.

- Parallel implementation of the algorithms, the simulation of neural networks on multiprocessor systems.

- Evaluation of biological plausibility of the resulting architecture of neural network.

At the time of writing this abstract, the master's work is not yet complete. Final completion: December 2013. The full text of the work and materials on the topic can be obtained from the author or his scientific adviser after that date.

References

- Ballard, D.H. and Ozcandarli, A., "Eye Fixation and Early Vision: Kinematic Depth", IEEE 2nd Intl. Conf. on Comp. Vision, Tarpon Springs, Fla., pp. 524-531, Dec. 1988.

- J.Y. Aloimonos, I. Weiss and A. Bandopadhay, "Active Vision", International Journal on Computer Vision, pp. 333-356, 1987.

- R. Bajcsy, "Active Perception", IEEE Proceedings, Vol 76, No 8, pp. 996-1006, August 1988.

- J.O. Eklundh and K. Pahlavan, "Head, Eye and Head-Eye System", SPIE Applications of AI X: Machine Vision and Robotics, Orlando, Fla. April 1992.

- C.M. Brown: Prediction and Cooperation in Gaze Control. Biological Cybernetics 63, 1990.

- Olson, T.J. Potter R.D: Real-time vergence control. Computer Vision and Pattern Recognition. Proceedings CVPR '89: 404-409, 1989.

- Thomas J. Olson, David J. Coombs: Real-time vergence control for binocular robots. International Journal of Computer Vision 7(1): 67-89, 1991.

- C.M. Brown, Gaze controls with interactions and delays. IEEE Trans Syst Man Cybern IEEE-TSMC20(2), March 1990

- O. J. M. Smith: Closer control of loops with dead time. Chemical Engineering Progress, 53(5):217-219, 1957.

- Geometrie epipolaire – Wikipedia [Electronic resource]. – Available at: http://fr.wikipedia.org/wiki/...

- James L. Crowley, Philippe Bobet, Mouafak Mesrabi: Gaze Control for a Binocular Camera Head. ECCV 1992: 588-596

- James L. Crowley, Philippe Bobet, Mouafak Mesrabi: Layered Control of a Binocular Camera Head. IJPRAI 7(1): 109-122, 1993.

- M. Bjorkman and J-O. Eklundh: Real-Time Epipolar Geometry Estimation of Binocular Stereo Heads. IEEE Trans. Pattern Analysis and Machine Intelligence 24(3), pp. 425-432, Mar 2002.

- Ballard, D.H. and Ozcandarli, A., "Eye Fixation and Early Vision: Kinematic Depth", IEEE 2nd Intl. Conf. on Comp. Vision, Tarpon Springs, Fla., pp. 524-531, Dec. 1988.

- X. Roca, J. Vitrià, M. Vanrell, J.J. Villanueva: Gaze control in a binocular robot systems. Emerging Technologies and Factory Automation. Proceedings of ETFA '99, 1999.

- Marapane, S. B. and M. M. Trivedi (1989) Region-based stereo analysis for robotic applications. IEEE Trans. Syst., Man, Cybern., 19, 1447-1464.

- Kanade, T. and M. Okutomi (1994) A stereo matching algorithm with an adaptive window: theory and experiment. IEEE Trans. Pattern Anal. Machine Intell., 16, 920-932.

- Nasrabadi, N. M., W. Li, B. G. Epranian, and C. A. Butkus (1989) Use of Hopfield network for stereo vision correspondence. IEEE ICSMC, 2, 429-432.

- Nasrabadi, N. M. and C. Y. Choo (1992) Hopfield network for stereo vision correspondence. IEEE Trans. Neural Networks, 3, 5-13.

- K. Pahlavan, Active Robot Vision and Primary Ocular Processes, Ph.D. thesis, Royal Institute of Technology. Computational Vision and Active Perception Laboratory, 1993.

- A. Bernardino. "Seguimento binocular de alvos móveis baseado em imagens log-polar" M.S. thesis, Instituto Superior Técnico, Lisbon, Portugal, January 1997.

- Damir Omrcen, Ales Ude, Redundant control of a humanoid robot head with foveated vision for object tracking / Conference on Robotics and Automation (ICRA), 2010 IEEE International 3-7 May 2010, 4151 - 4156.

- R. Manzotti, A. Gasteratos, G. Metta, G. Sandini. Disparity estimation on log-polar images and vergence control / Journal Computer Vision and Image Understanding, Volume 83 Issue 2, August 2001, Pages 97-117.

- G. Metta, A. Gasteratos, and G. Sandini. Learning to track colored objects with log-polar vision. Mechatronics, 14:989-1006, 2004.

- A. Bernardino and J. Santos-Victor. Binocular visual tracking: Integration of perception and control. IEEE Transactions on Robotics and Automation, 15(6):1080–1094, 1999.

- A. Ude, C. Gaskett, and G. Cheng. Foveated vision systems with two cameras per eye. In Proc. IEEE Int. Conf. Robotics and Automation, Orlando, USA, 2006.

- S. Vijayakumar, J. Conradt, T. Shibata, and S. Schaal. Overt visual attention for a humanoid robot. In Int. Conf. on Intelligent Robots and Systems (IROS), Hawaii, USA, 2001.

- J. L. MCCLELLAND, D. E. RUMELHART, G. E. HINTON, «Parallel Distributed Processing: Explorations in the Microstructure of Cognition», vol. 1, vol. 2, Cambridge, MIT Press.

- T. Kohonen, Self-Organization and Associative Memory. New York: Springer-Verlag, 1989.

- T. KHANNA, «Foundations of Neural Networks», Addison-Wesley Publishing Company, 1989.

- R. LIPPMANN, «An Introduction to Computing with Neural Nets», IEEE ASSP Magazine, April 1987, p. 4-22.

- Y. LECUN, B. BOSER, J. S. DENKER, D. HENDERSON, R. E. HOWARD, «Backpropagation applied to handwritten zip code recognition», Neural Computation, vol. 1, no. 4, 1989, p. 541-551.

- T. DE SAINT PIERRE, «Codification et apprentissage connexionniste de caracteres multipolices», Cognitiva 87, Paris, mai 87, p. 284-289.

- E. ALLEN, M. MENON, P. DICAPRIO, «A Modular Architecture for Object Recognition Using Neural Networks», INNC 90, Paris, July 90, p. 35-37.

- G. W. COTTRELL, M. FLEMING, «Face Recognition using Unsupervised Feature Extraction», INNC 90 Paris, July 90, p. 322-325.

- I. GUPTA, M. SAYEH, R. TAMMARA, «A Neural Network Approach to Robust Shape Classification», Pattern Recognition, vol. 23, no. 9, p. 563-568, 1990.

- E. L. HINES, R. A. HUTCHINSON, «Application of Multi-Layer Perceptrons to Facial Feature Location», IEE image processing, 1989, p. 39-43.

- D. J. HEROLD, W. T. MILLER, L. G. KRAFT, F. H. GLANZ, «Pattern Recognition using a CMAC Based Learning System», SPIE, vol. 1004, 1988, p. 84-90.

- А.В. Федоров. Исследование методов контурной сегментации для построения системы оптического распознавания символов. Руководитель: к.т.н., доцент кафедры ПМиИ Федяев О.И.

- О.В. Шпарбер. Распознавание образов на основе инфракрасной термографии. / ДонНТУ: Информатика и компьютерные технологии V, 2009.

- Г.Ю. Костецкая, О.И. Федяев. Распознавание изображений человеческих лиц с помощью свёрточной нейронной сети. / ДонНТУ: Штучний інтелект, нейромережеві та еволюційні методи та алгоритми, Том Первый, 2010.

- И.А. Коломойцева.Решение задачи распознавания образов на примере информационной системы скрининга девочек-подростков. / Наукові праці Донецького національного технічного університету, серія «Інформатика, кібернетика та обчислювальна техніка»,випуск 6, Донецк, ДонНТУ, 1999.

- О.В. Близкая, Ю.А. Скобцов. Разработка метода и алгоритма распознавания двухмерных контрастных изображений объектов по инвариантным информативным признакам. / Збірка студентських наукових праць факультету “Комп’ютерні інформаційні технології і автоматика” Донецького національного технічного університету. Випуск 3. –Донецьк: ДонНТУ, 2005. –366 с.

- С.А. Полтава. Исследование эффективности алгоритмов распознавания цветного маркирования объектов для систем технического зрения. Руководитель: к.т.н., доцент кафедры ПМИ Зори Сергей Анатолиевич.

- А.В. Афанасенко. Исследование эффективности алгоритмов распознавания цветного маркирования объектов для систем технического зрения. Руководитель: к.т.н., доцент кафедры ПМИ Зори Сергей Анатолиевич.

- Robert M. Youngson. Collins Dictionary of Medicine // Collins. –2005. –704 p. http://www.goodreads.com/book/show/12239549-collins-dictionary-of-medicine

- Вудвортс Р. С. Зрительное восприятие глубины / Психология ощущений и восприятия. –М.: ЧеРо, 1999. –с.343-382.

- J.-H. Wang. On Disparity Matching in Stereo Vision via a Neural Network Framework // J.-H. Wang, C.-P. Hsiao. –Proceedings of ROC(A). Vol. 23 #5. –1999. –665-678p.

- S. B. Marapane. Region-based stereo analysis for robotic applications // Marapane, S. B. and M. M. Trivedi. –IEEE Trans. Syst., Man, Cybern., 19. –1989. –1447-1464p.

- N. M. Nasrabadi. Use of Hopfield network for stereo vision correspondence // Nasrabadi, N. M., W. Li, B. G. Epranian, and C. A. Butkus. –IEEE ICSMC #2. –1989. –429-432p.

- Barna Resko. Camera Control with Disparity Matching in Stereo Vision by Artificial Neural Networks // Barna Resko, Peter Baranyi, Hideki Hashimoto. –Proceedings of WISES'03. –2003. –139-150p.

- Universite de Cergy Pontoise [Electronic resource]. Available at: http://www.u-cergy.fr/

- I. Sobel. A 3x3 Isotropic Gradient Operator for Image Processing // I. Sobel, G. Feldman. –Stanford project. –1968.