Abstract

This paper presents a face recognition scheme based on a neural network and the PCA technique for identifying persons. I have used principal component analysis (PCA), a mathematical method that transforms a number of possibly correlated variables into a smaller number of uncorrelated variables called principal components. The scheme has three stages. Pre-processing stage: the images are made zero-mean and unit-variance. Dimensionality reduction stage (PCA): the input data is reduced to a lower dimension to facilitate classification. Classification stage: the reduced vectors output from PCA are applied to a back propagation neural network (BPNN) classifier, which is trained on the data and then used to obtain the recognized image. The proposed algorithm was implemented on the MATLAB platform and tested on various images, and it gave good results.

1. INTRODUCTION TO FACE RECOGNITION

A person can be recognized from their facial image in many different ways, such as by capturing an image of the face in the visible spectrum using an inexpensive camera or by using the infrared patterns of facial heat emission. Facial recognition in visible light typically models key features from the central portion of a facial image. Using a wide assortment of cameras, visible-light systems extract features from the captured images that do not change over time, while avoiding superficial features such as facial expressions (of the mouth, nose, eyes, and lips) and hair. Approaches to modeling facial images in the visible spectrum include Principal Component Analysis, Local Feature Extraction, Neural Networks, Comparative Analysis, the automatic Gabor wavelet feature extraction method, and Radial Basis Functions. Difficulties in face recognition in the visible spectrum include reducing the impact of variable conditions and detecting a mask or photograph. Some facial recognition systems require a stationary or posed subject in order to capture the image, though many systems use a real-time process to detect a person's head and locate the face automatically. The main advantages of face recognition are that it is non-intrusive, continuous, and accepted by nearly all users. Face detection is an essential front end for a face recognition system: it locates and segments face regions from cluttered images, obtained either from video or from still images.

Face detection has numerous applications in areas like surveillance and security control systems, content-based image retrieval, video conferencing, and intelligent human-computer interfaces. Most current face recognition systems presume that faces are readily available for processing. Human communication has two main aspects: verbal (auditory) and non-verbal (visual), examples of the latter being facial expressions, body movements, and physiological reactions. All of these provide significant information regarding the state of the person:

(i) Affective state, which includes both emotions such as fear, surprise, anger, disgust, sadness, and more enduring moods such as euphoria or irritableness.

(ii) Cognitive activity, such as perplexity, boredom, or concentration; and temperament and personality, including such traits as hostility, sociability, or shyness.

(iii) Truthfulness, including the leakage of concealed emotions, and clues as to when the information provided in words about plans or actions is false.

(iv) Psychopathology, including not only diagnostic information relevant to depression, mania, schizophrenia, and other less severe disorders, but also information relevant to monitoring response to treatment.

2. PRINCIPAL COMPONENT ANALYSIS

Principal component analysis (PCA) is a mathematical method that transforms a number of possibly correlated variables into a smaller number of uncorrelated variables called principal components. PCA is a widely used approach for calculating a set of features for face recognition. Any particular face can be

(i) economically represented along the eigenpicture coordinate space, and

(ii) approximately reconstructed using a small collection of eigenpictures.

To do this, a face image is projected onto several face templates called eigenfaces, which can be considered a set of features that characterize the variation between face images. Once a set of eigenfaces is calculated, a face image can be approximately reconstructed using a weighted combination of the eigenfaces. The projection weights form a feature vector for face representation and recognition. When a new test image is given, its weights are calculated by projecting the image onto the eigenface vectors. Classification is then carried out by comparing the distances between the weight vector of the test image and those of the training images in the database. Conversely, an original training image can be reconstructed exactly from the eigenfaces when all of the eigenfaces extracted from the original images are used.

PCA is based on an orthogonal transformation of the variables, which converts a set of correlated variables into a set of uncorrelated variables known as principal components. The number of principal components is less than or equal to the number of original variables. Under this transformation, the first principal component has the largest variance, so the leading components capture most of the variability in the data. The principal components are guaranteed to be independent only if the data set is jointly normally distributed. Reducing the dimension can cause information loss, but PCA preserves as much information as possible: the best low-dimensional space is determined by the leading eigenvectors of the covariance matrix.
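The distance-based classification step described above can be sketched as follows. This is a minimal illustration, assuming Euclidean distance and a nearest-neighbour rule; the function names, the two-dimensional weight vectors, and the labels are hypothetical and not taken from the paper.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two weight vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_face(test_weights, train_weights, labels):
    """Return the label of the closest training weight vector and its distance."""
    distances = [euclidean(test_weights, w) for w in train_weights]
    best = min(range(len(distances)), key=distances.__getitem__)
    return labels[best], distances[best]


# Hypothetical projection weights of three training faces (assumed values).
train_weights = [[2.0, 0.5], [-1.5, 1.0], [0.2, -2.0]]
labels = ["person_a", "person_b", "person_c"]

# Hypothetical weights of a new test image after projection onto the eigenfaces.
label, dist = nearest_face([1.8, 0.4], train_weights, labels)
print(label, round(dist, 4))
```

In the scheme proposed in this paper the weight vectors are passed to a neural network classifier rather than to a nearest-neighbour rule; the sketch only illustrates the distance comparison mentioned in this section.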

3. ALGORITHMS AND PROPOSED TECHNIQUE

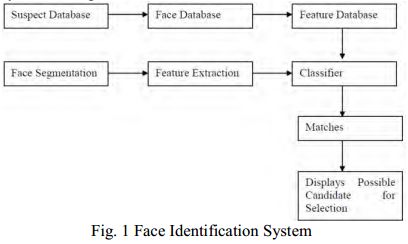

The proposed technique has three stages. Pre-processing stage: the images are made zero-mean and unit-variance. Dimensionality reduction stage (PCA): the input data is reduced to a lower dimension to facilitate classification. Classification stage: the reduced vectors output from PCA are applied to the back propagation neural network (BPNN) classifier, which is trained on the data and then used to obtain the recognized image.

Face recognition is a biometric technique used for surveillance purposes such as the search for wanted criminals, suspected terrorists, and missing children. The term face recognition refers to the identification of an unknown face image using computational algorithms. This is done by comparing the unknown face with the faces stored in a database. Face recognition has three stages:

- face location detection;

- feature extraction;

- facial image classification.
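The pre-processing stage (making each image zero-mean and unit-variance) can be sketched as below. This is a minimal sketch, assuming the image has already been flattened into a list of pixel intensities and that the population variance is used; the function name and the sample pixel values are hypothetical.

```python
import math


def standardize(pixels):
    """Shift a flattened image to zero mean and scale it to unit variance."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    std = math.sqrt(variance)
    if std == 0.0:
        # A constant image carries no contrast; return all zeros (assumed convention).
        return [0.0] * n
    return [(p - mean) / std for p in pixels]


# Hypothetical grey-level values of a tiny flattened image.
image = [52, 60, 61, 75, 90, 112]
normalized = standardize(image)

new_mean = sum(normalized) / len(normalized)
new_variance = sum((v - new_mean) ** 2 for v in normalized) / len(normalized)
print(round(abs(new_mean), 6), round(new_variance, 6))
```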

4. PCA ALGORITHM

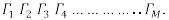

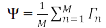

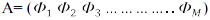

Let the training set of images be  The average face of the set is defined by

The average face of the set is defined by

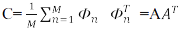

Each individual face differs from the average face by the vector

The Co-Variance matrix is formed by vector

Where the Matrix  .

The set of large vectors is then subject to PCA , Which Seeks

a set of M orthonormal vectors

.

The set of large vectors is then subject to PCA , Which Seeks

a set of M orthonormal vectors  .

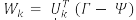

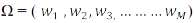

To obtain weight vector

.

To obtain weight vector  of the facial image ?.

The face is transformed into the Eigenface components

projected onto the face space

of the facial image ?.

The face is transformed into the Eigenface components

projected onto the face space

For k= 1, M', Where M'?M is the no. of eigenfaces used

for the recognition. The weights form vector
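The steps of this section (average face, difference vectors, leading eigenvector, projection weight) can be sketched in plain Python. This is a toy sketch under stated assumptions, not the MATLAB implementation used in the paper. It computes only the first eigenface, uses power iteration in place of a full eigen-decomposition, and works on the small M x M matrix of inner products between difference vectors, which is a common shortcut in the eigenface literature and is not stated in the text above. All function names and the 4-pixel "faces" are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mean_face(faces):
    """Pixel-wise average of the training faces."""
    m = len(faces)
    return [sum(f[i] for f in faces) / m for i in range(len(faces[0]))]


def top_eigenvector(matrix, iterations=500):
    """Power iteration: dominant eigenvector of a symmetric non-negative-definite matrix."""
    v = [1.0 / (i + 1) for i in range(len(matrix))]  # fixed, non-uniform start vector
    for _ in range(iterations):
        w = [dot(row, v) for row in matrix]
        norm = math.sqrt(dot(w, w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v


def first_eigenface(faces):
    """Return the mean face and the unit-length leading eigenface.

    Assumes the training faces are not all identical.
    """
    psi = mean_face(faces)
    phis = [[g - p for g, p in zip(face, psi)] for face in faces]
    # Small M x M matrix of inner products between difference vectors.
    small = [[dot(pi, pj) for pj in phis] for pi in phis]
    v = top_eigenvector(small)
    # Map the small eigenvector back to image space and normalise it.
    u = [sum(v[k] * phis[k][i] for k in range(len(phis))) for i in range(len(psi))]
    norm = math.sqrt(dot(u, u))
    return psi, [x / norm for x in u]


def weight(face, psi, u):
    """Projection of a mean-subtracted face onto one eigenface."""
    return dot(u, [g - p for g, p in zip(face, psi)])


# Three hypothetical 4-pixel training "faces".
faces = [[1, 2, 3, 4], [2, 4, 6, 8], [3, 6, 9, 12]]
psi, u = first_eigenface(faces)
weights = [weight(f, psi, u) for f in faces]
print(psi, [round(w, 4) for w in weights])
```

The sign of an eigenvector is arbitrary, so the weights may come out with either sign; only their relative positions matter for classification.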

5. BACK PROPAGATION NEURAL NETWORK ALGORITHM

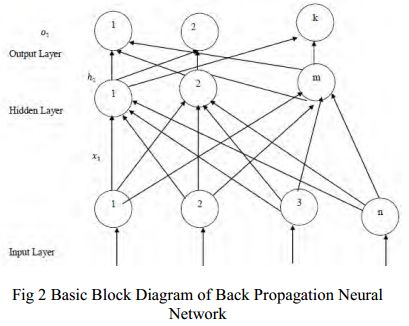

A typical back propagation network with multi-layer, feed-forward supervised learning is shown in the figure. The learning process in back propagation requires pairs of input and target vectors. The output vector $o$ is compared with the target vector $t$. If $o$ and $t$ differ, the weights are adjusted to minimize the difference. Initially, random weights and thresholds are assigned to the network. These weights are updated at every iteration in order to minimize the mean square error between the output vector and the target vector.

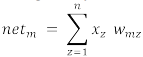

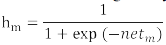

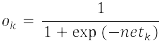

Input for hidden layer is given by

The units of output vector of hidden layer after passing through the activation function are given by

and the units of output vector of output layer are given by

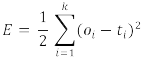

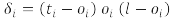

For updating the weights, we need to calculate the error. This can be done by

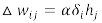

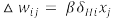

Here $o_i$ and $t_i$ represent the real output and the target output at neuron i in the output layer, respectively. If the error is smaller than a predefined limit, the training process stops; otherwise the weights need to be updated. For the weights between the hidden layer and the output layer, the change in each weight is the product of three factors: a training rate coefficient that is restricted to the range [0.01, 1.0], the output of neuron j in the hidden layer, and an error term for the output neuron, which can be obtained by

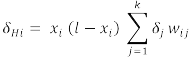

Similarly, the change of the weights between the input layer and the hidden layer is the product of a training rate coefficient beta that is restricted to the range [0.01, 1.0], the output of neuron j in the input layer, and an error term for the hidden neuron, which can be obtained by

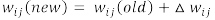

Here the remaining symbol denotes the output at neuron i in the input layer, and the summation term represents the weighted sum of all the error values of the output-layer neurons obtained in the earlier equation. After calculating the weight change in all layers, the weights can simply be updated by adding each change to the corresponding current weight.

This process is repeated until the error reaches a minimum value.
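The forward pass and weight updates described in this section can be sketched as follows. This is a minimal sketch, assuming a sigmoid activation function, a single hidden layer, no threshold (bias) terms, and half the squared error as the error measure; these choices, the function names, the network size, and the training pattern are assumptions for illustration only. The learning rate of 0.4 and the 400 iterations are borrowed from the parameter values listed later in the paper.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_out):
    """Compute hidden-layer and output-layer activations for input x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = [sigmoid(sum(w * hj for w, hj in zip(row, hidden))) for row in w_out]
    return hidden, output


def train_step(x, target, w_hidden, w_out, rate=0.4):
    """One back propagation update; returns the error measured before the update."""
    hidden, output = forward(x, w_hidden, w_out)
    # Output-layer error terms: (t - o) times the sigmoid derivative o * (1 - o).
    delta_out = [(t - o) * o * (1.0 - o) for t, o in zip(target, output)]
    # Hidden-layer error terms: weighted sum of output error terms times h * (1 - h).
    delta_hidden = [
        h * (1.0 - h) * sum(delta_out[i] * w_out[i][j] for i in range(len(w_out)))
        for j, h in enumerate(hidden)
    ]
    # Weight change = rate * error term * output of the neuron feeding the weight.
    for i, row in enumerate(w_out):
        for j in range(len(row)):
            row[j] += rate * delta_out[i] * hidden[j]
    for j, row in enumerate(w_hidden):
        for k in range(len(row)):
            row[k] += rate * delta_hidden[j] * x[k]
    return 0.5 * sum((t - o) ** 2 for t, o in zip(target, output))


random.seed(0)
n_in, n_hidden, n_out = 3, 4, 1  # hypothetical layer sizes
w_hidden = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
w_out = [[random.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]

x, target = [0.5, -0.2, 1.0], [1.0]  # one hypothetical training pair
errors = [train_step(x, target, w_hidden, w_out) for _ in range(400)]
print(errors[0] > errors[-1])
```

Training on a single pattern only demonstrates that the update rule reduces the error; a real run would loop over all training pairs in every epoch.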

6. RELATED WORK

Selection of Training Parameters

For the back propagation network to operate efficiently, the parameters used for training must be selected appropriately.

Initial Weights

The initial weights influence whether the net reaches a global or a local minimum of the error and, if so, how rapidly it converges. To get the best result, the initial weights are set to random numbers between -1 and 1.

Training a Net

The motivation for applying a back propagation net is to achieve a balance between memorization and generalization; it is not necessarily advantageous to continue training until the error reaches a minimum value. The weight adjustments are based on the training patterns. As long as the error for validation decreases, training continues. Whenever the error begins to increase, the net is starting to memorize the training patterns. At this point training is terminated.
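The stopping rule described above can be sketched as a small helper. This is a minimal sketch, assuming the validation error is recorded once per epoch and that training stops at the first increase; the function name and the error values are hypothetical.

```python
def early_stopping_epoch(validation_errors):
    """Index of the last epoch before the validation error first increases."""
    for epoch in range(1, len(validation_errors)):
        if validation_errors[epoch] > validation_errors[epoch - 1]:
            return epoch - 1
    # The error never increased, so training ran to the last recorded epoch.
    return len(validation_errors) - 1


# Hypothetical validation errors recorded after each epoch.
val_errors = [0.40, 0.31, 0.25, 0.22, 0.23, 0.27]
stop_at = early_stopping_epoch(val_errors)
print(stop_at)  # 3
```

Practical implementations often tolerate a few consecutive increases before stopping, so that a single noisy epoch does not end training prematurely.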

Number of Hidden Units

If the activation function can vary with the function being approximated, then it can be shown that an n-input, m-output function requires at most 2n+1 hidden units. If more hidden layers are present, the calculation of the values is repeated for each additional hidden layer, summing all the values from the units in the previous layer that feed into the layer currently being calculated.

Learning rate

In BPN, the weight change is in a direction that is a combination of the current gradient and the previous gradient. A small learning rate is used to avoid major disruption of the direction of learning when a very unusual pair of training patterns is presented.

Various parameters assumed for this algorithm are as follows.

No. of Input unit = 1 feature matrix

Accuracy = 0.001

Learning rate = 0.4

No. of epochs = 400

No. of hidden neurons = 70

No. of output unit = 1

The main advantage of this back propagation algorithm is that it can identify the given image as a face image or a non-face image and then recognize the given input image. Thus the back propagation neural network classifies the input image as a recognized image.

According to this graph, the original data is first applied to PCA, which pre-processes the data and transforms it into a proper curve known as the transient curve, as shown in the above figure. After that, the data is classified according to the face image, and the different colors show the classification.

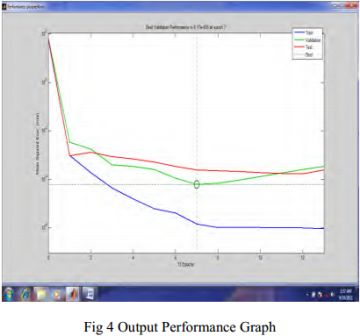

This is termed the Performance Graph. It contains three curves in different colors: the blue line denotes training, the green line denotes validation, and the red line denotes testing. Generally we select 70% of the data for training, 15% for validation, and 15% for testing. According to this graph, the error rate is very small and the rate of detection is very high, as the Regression Plot further shows.
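The 70% / 15% / 15% division of the data can be sketched as below. This is a minimal sketch, assuming a random shuffle of sample indices with a fixed seed; the function name, the seed, and the sample count of 200 are hypothetical and do not describe the data set used in the paper.

```python
import random


def split_indices(n_samples, train=0.70, validation=0.15, seed=0):
    """Shuffle sample indices and split them into training, validation, and test sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = int(round(n_samples * train))
    n_val = int(round(n_samples * validation))
    return (
        indices[:n_train],
        indices[n_train:n_train + n_val],
        indices[n_train + n_val:],  # the remainder is used for testing
    )


train_idx, val_idx, test_idx = split_indices(200)
print(len(train_idx), len(val_idx), len(test_idx))  # 140 30 30
```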

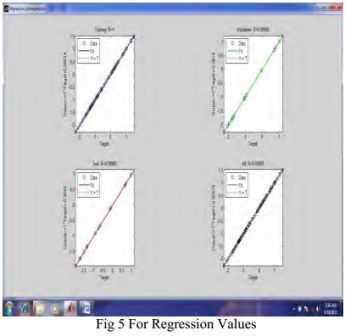

The above graph is termed the Regression Plot. It is plotted to determine the accuracy, that is, the detection or recognition rate. According to this graph, the recognition rate is very high, approximately 100%, for the trained images and decreases to a very small extent for non-trained images.

7. CONCLUSION

Face detection has many applications, including security applications. Until recently, much of the work in the field of computer vision focused on face recognition, with very little research into face detection. Human face detection is often the first step in the recognition process, as detecting the location of a face in an image prior to attempting recognition can focus computational resources on the face area of the image. Although it is a trivial task that humans perform effortlessly, face detection is a complex problem in computer vision due to the great multitude of variation present across faces. Several techniques have been proposed to solve this problem, including feature-based approaches, the more recent image-based approaches, and compression of the images. Both the feature-based and the image-based categories offer systems worthy of recognition with promising results. Feature-based approaches, often applied to real-time systems, are often reliant on a priori knowledge of the face, which is used explicitly to detect features. The more robust image-based approaches are too computationally expensive for real-time systems, although systems using a hybrid of both approaches are being developed with encouraging results.

By the use of PCA and BPNN, the recognition rate increases. The pre-processed image is reduced to a smaller set of principal components, which are applied to the neural network. To improve the output accuracy, a back propagation neural network is used with the LM approach. This neural network classifies the images and detects the image very accurately. The neural network is first trained; testing then returns the image that matches the actual image. The detection rate of this system is approximately 100% for the trained images.

Results from Proposed Scheme:

When the BPNN technique is combined with PCA, non-linear face images can be recognized easily. One of the images is taken as the input image. The image recognized by BPNN and the output image reconstructed by PCA are as follows. It is concluded that this method has an accuracy of more than 99% and an execution time of only a few seconds. Face recognition can be applied in security measures at airports, passport verification, verification of criminal lists in police departments, visa processing, verification of electoral identification, and card security at ATMs. Face recognition has received substantial attention from researchers in the biometrics, pattern recognition, and computer vision communities. In this dissertation we proposed a computational method of face detection and recognition that is fast, reasonably simple, and accurate in constrained environments such as an office or a household.

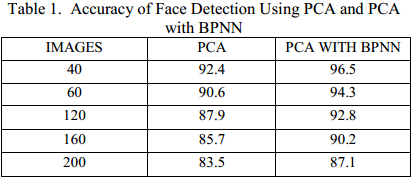

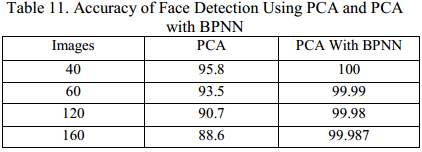

According to the above table, the rate of recognition or detection of faces using only PCA is lower, whereas using PCA together with BPNN increases the recognition rate and hence the accuracy, as shown in the table.

The table shown above lists the calculated values and shows the improvement in the rate of detection obtained by using PCA and BPNN. In this system the accuracy is up to 100% for the trained images, and it decreases to a small extent as the number of images increases.

International Journal of Emerging Science and Engineering (IJESE), ISSN: 2319–6378, Volume-1, Issue-6, April 2013