Abstract

The content of the abstract

- Introduction

- 1. Relevance of the topic

- 2. The purpose and objectives of the study, the planned results

- 3. Cloud Computing Features

- 3.1 Cloud services and manageability boundaries

- 3.2 Principles of load balancing in cloud computing

- 3.3 Load balancing in cloud computing

- 4. Global agent

- Findings

- List of sources

Introduction

Only about 20 years ago, the share of information technology use in business was less than 5-10%; now it is almost 100%. The transition from one-off installations to scale makes it possible to treat computing power not as a separate server standing in the organization's building, but as a service provided by a remote data center. This is why cloud computing is regarded as a new trend in the development of information technology.

That is why many companies are beginning to understand the importance and necessity of “going to the cloud”, and why research projects in this area are needed now. The cloud computing market offers not only proprietary solutions, such as VMware ESX and Distributed Resource Scheduler, but also well-documented open source systems, such as Xen and OpenStack.

1. Relevance of the topic

In a report entitled Cloud Dividends 2011, published in December 2010, the Center for Economic and Business Research (CEBR) states that by 2015, due to cloud computing, the economies of developed European countries will receive an additional 177.3 billion euros per year.

The report, commissioned by EMC, was the first of its kind to assess the value of cloud computing at the macroeconomic level for the five largest economies in Europe.

The authors of the CEBR report concluded that if in the UK, Germany, Italy, Spain and France, the introduction of cloud technologies will continue at the expected pace, then by 2015 they will bring to the economies of these countries 177.3 billion euros per year.

It is important to note that, according to the study, the lion’s share of these funds will come from the adoption of private and hybrid cloud computing models.

CEBR has calculated the annual economic effect of cloud computing for each country by 2015 (in billions of euros):

- Germany: 49.6

- France: 37.4

- Italy: 35.1

- Great Britain: 30.0

- Spain: 25.2

Cloud computing is a new approach to IT in which technologies become available to enterprises in the right amount and at the time they are needed, the research says. This shortens time to market, removes traditional entry barriers and allows companies to take advantage of new business opportunities. By strengthening competition, this direct effect of cloud computing will have a huge impact on market structure in many sectors of the economy and, consequently, on world macroeconomic indicators, CEBR says. CEBR believes that cloud computing will be an important factor in economic growth, competitiveness and the creation of new enterprises throughout the eurozone. This underlines the importance of the technology for the economic recovery of the region, particularly in the face of the growing threat from emerging economies, which traditionally benefit from more intense competition.

2. The purpose and objectives of the study, the planned results

The aim of the study is to develop a scheme and an algorithm for the operation of a load balancer in cloud computing networks.

The main objectives of the study:

- Search and identification of major problems at all stages of network deployment and operation.

- Analysis of the problems identified.

- Analysis of possible difficulties in network modeling and balancer operation.

- Proposals for eliminating or mitigating the problems.

- Optimization of the balancer.

- Evaluation of the proposed solutions.

Object of study: cloud load balancer.

Subject of study: combining methods for reducing response time to remote user requests.

3. Cloud Computing Features

The load on resources in cloud computing has the following features:

- the load on key resources is periodic and uneven;

- requests to several types of resources occur simultaneously;

- the intensity of access to each resource may vary depending on external conditions;

- because load is not distributed between resources, at peak load the equipment cannot always serve all requests;

- up to 90% of the load is predetermined, since pre-registration is used to access resources.

In addition, it is worth noting that 80% of resources are in demand for only 20% of the time the services operate.

Currently, existing solutions built on cloud services use a universal approach for access to the resources placed in them.

Figure 1 - Application Layout Options

3.1 Cloud services and manageability boundaries

When discussing the various types of cloud services (software, platform and infrastructure as a service), you should pay attention to the so-called boundaries of manageability, that is, to what can still be controlled after the transition to a cloud platform, compared with traditional deployment in your own infrastructure. For obvious reasons, infrastructure as a service provides the most opportunities for configuring individual components, whereas platform as a service and software as a service reduce these possibilities to a minimum.

The differences in the boundaries of manageability are shown in Figure 1.1.

Figure 1.1 - The boundaries of manageability

As Figure 1.1 shows, when you deploy your own infrastructure, you manage all of its components, from network resources to running applications. With the IaaS model, you can control components such as the code execution environment, security and integration, databases, and so on. When moving to the PaaS model, all platform components are provided as services with limited capabilities for managing them. This is done to provide consumers with an optimally configured platform that does not require additional settings.

When designing and creating cloud solutions, it is important to ensure reliability and optimal use of resources through two mechanisms: monitoring, that is, obtaining information about the availability and load of the hardware resources of the platforms that host copies of a distributed application; and balancing, that is, distributing incoming user requests among the available hardware platforms.

3.2 Principles of load balancing in cloud computing

We can distinguish the following classes of solutions used in the construction of multi-node systems (Fig. 1.2): balancing with traffic passing through one balancing device; cluster balancing; balancing without passing traffic through a single balancing device.

Figure 1.2 - Principles of load balancing: a) balancing with traffic passing through one balancing device; b) balancing by means of a cluster; c) balancing without passing traffic through one balancing device

3.3 Load balancing in cloud computing

Virtual machines make it possible to allocate resources dynamically according to requirements, optimizing application performance and power consumption. There are a number of ways to redistribute resources dynamically; one of the main ones is live migration of virtual machines. It allows cloud providers to move virtual machines away from overloaded hosts, maintaining their performance under a given SLA, and to consolidate virtual machines dynamically on the smallest number of hosts in order to save power when the load is low. By using live migration together with online algorithms that make migration decisions in real time, you can manage cloud resources efficiently: resource allocation adapts to VM (virtual machine) workloads, VM performance stays at the level required by the SLA, and infrastructure power consumption is reduced.

An important problem in the context of live migration is detecting that a host is overloaded or underloaded. Most current approaches monitor resource utilization, and if the actual or predicted next value exceeds a predetermined threshold, the node is declared overloaded. However, live migration has a price: VM performance degrades during the migration process. The problem with existing approaches is that detecting host overload from a single measurement of resource usage, or from a few future values, can lead to hasty decisions, unnecessary live migration overhead, and problems with VM stability.

A more promising approach is to base live migration decisions on forecasts of resource use several steps ahead. This not only improves stability, since migration starts only when the load persists for several time intervals, but also allows cloud providers to predict an overload before it happens. On the other hand, forecasting further into the future increases forecast error and uncertainty, which reduces the benefit of long-term forecasting. Another important point is that live migration should be performed only if the penalty for possible SLA violations exceeds the overhead of migration.
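The weakness of single-measurement detection can be seen in a small sketch. The threshold value of 0.8 and the function name `is_overloaded` are hypothetical and chosen only for illustration.

```python
def is_overloaded(cpu_utilization: float, threshold: float = 0.8) -> bool:
    """Declare a host overloaded as soon as one utilization sample exceeds the threshold."""
    return cpu_utilization > threshold


# CPU utilization samples for one host; index 2 is a single short spike.
samples = [0.55, 0.62, 0.91, 0.60, 0.58]
alarms = [is_overloaded(u) for u in samples]
```

Here one transient spike is enough to raise an alarm, so a migration would be triggered even though the load returns to normal in the next interval.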
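The two conditions described here, a sustained overload in the forecast and a penalty that outweighs the migration overhead, can be sketched as follows. The forecast is taken as given input; the function names, the threshold of 0.8 and the requirement of three consecutive intervals are assumptions of this sketch, not values from the study.

```python
def sustained_overload(forecast, threshold=0.8, min_steps=3):
    """True if the forecast stays above the threshold for min_steps consecutive intervals."""
    run = 0
    for u in forecast:
        run = run + 1 if u > threshold else 0
        if run >= min_steps:
            return True
    return False


def should_migrate(forecast, sla_penalty, migration_cost, threshold=0.8, min_steps=3):
    """Migrate only for a sustained overload whose expected SLA penalty
    exceeds the overhead of the migration itself."""
    return sustained_overload(forecast, threshold, min_steps) and sla_penalty > migration_cost


spike = [0.60, 0.90, 0.60, 0.60]       # one-interval burst
sustained = [0.70, 0.85, 0.90, 0.95]   # load stays high for three intervals

decisions = (
    should_migrate(spike, sla_penalty=10, migration_cost=4),
    should_migrate(sustained, sla_penalty=10, migration_cost=4),
    should_migrate(sustained, sla_penalty=3, migration_cost=4),
)
```

Only the second case leads to a migration: the first is a transient burst, and in the third the migration would cost more than the SLA violation it prevents.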

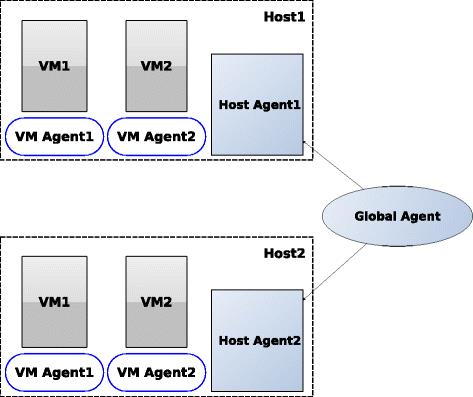

This work focuses on managing an IaaS cloud in which several virtual machines run on multiple physical nodes. The overall architecture of the resource manager and its main components is shown in Figure 1.3. Each virtual machine has a VM agent that determines the allocation of resources to its virtual machine in each time interval. Each host has a host agent that receives the resource allocation decisions of all its VM agents and determines the final allocations, resolving any conflicts. It also detects when a node is overloaded or underloaded and passes this information to the global agent. The global agent initiates virtual machine migrations, moving virtual machines from overloaded or underloaded hosts to consolidating hosts in order to reduce SLA violation penalties and the number of physical nodes in use. The following sections look at the components of the resource manager in more detail.

Figure 1.3 - Resource Manager Architecture
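The conflict-resolution step of the host agent could look like the sketch below. The text does not state how conflicts are resolved, so proportional scaling is an assumption made here for illustration, as is the function name `resolve_allocations`.

```python
def resolve_allocations(requests: dict, capacity: float) -> dict:
    """Host-agent step: grant VM-agent requests as they are if they fit
    into the host capacity, otherwise scale them down proportionally
    (proportional scaling is an assumed policy)."""
    total = sum(requests.values())
    if total <= capacity:
        return dict(requests)
    scale = capacity / total
    return {vm: share * scale for vm, share in requests.items()}


# CPU cores requested by three VM agents on a host with 4 cores.
requested = {"vm1": 2.0, "vm2": 4.0, "vm3": 2.0}
final = resolve_allocations(requested, capacity=4.0)
```

In this example the requests add up to 8 cores on a 4-core host, so each VM receives half of what its agent asked for.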

4. Global agent

The global agent decides how to distribute provider resources, using live migration of virtual machines from overloaded or underloaded hosts to other nodes in order to reduce SLA violations and energy consumption. It receives a notification from a host agent when a node is predicted to become overloaded or underloaded, and performs a VM migration if it is worthwhile.

Non-optimized digital system designs, regardless of the implementation base, may have significant redundancy and, therefore, use hardware resources inefficiently [84]. This makes hardware optimization a pressing task, which, in the context of FPGAs, comes down to reducing the utilization of various internal blocks: LUTs (look-up tables), memory modules, and synchronization circuits.

The global agent applies the Power Aware Best Fit Decreasing (PABFD) algorithm for VM placement, with the following adjustments. To detect overload or underload, we use the approaches presented above. To select a virtual machine, the minimum migration time (MMT) policy is used, with the modification that only one virtual machine is selected for migration in each decision round, even if the host may remain overloaded after the migration. This is done to reduce the number of simultaneous live migrations and the costs associated with them.

For the consolidation process, only underloaded hosts are considered, which are detected by the proposed long-term forecasting approaches. From the list of underloaded hosts, those with a lower average CPU load are considered first.
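One decision round of this scheme can be sketched as follows, under stated assumptions: migration time is estimated as the RAM used by the VM divided by the available bandwidth, host power is a linear function of CPU utilization, and the candidate list excludes the source host. The class and function names are hypothetical. In full PABFD the VMs are first sorted by decreasing CPU demand; with one VM per round that sort is trivial and is omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VM:
    name: str
    cpu: float      # CPU demand, MIPS
    ram_mb: float   # RAM to transfer during live migration, MB


@dataclass
class Host:
    name: str
    capacity: float  # CPU capacity, MIPS
    used: float      # CPU currently allocated, MIPS
    p_idle: float    # power at 0% load, W
    p_max: float     # power at 100% load, W

    def power(self, used: float) -> float:
        # Linear power model (an assumption of this sketch).
        return self.p_idle + (self.p_max - self.p_idle) * used / self.capacity


def select_vm_mmt(vms, bandwidth_mb_per_s: float) -> VM:
    """MMT policy, one VM per round: the VM with the shortest estimated migration time."""
    return min(vms, key=lambda vm: vm.ram_mb / bandwidth_mb_per_s)


def place_vm(vm: VM, hosts) -> Optional[Host]:
    """Best-fit step of PABFD: among hosts with enough spare CPU,
    choose the one whose power draw increases the least."""
    best, best_delta = None, float("inf")
    for host in hosts:
        if host.used + vm.cpu > host.capacity:
            continue  # the VM does not fit on this host
        delta = host.power(host.used + vm.cpu) - host.power(host.used)
        if delta < best_delta:
            best, best_delta = host, delta
    return best


vms = [VM("web", 500, 2048), VM("db", 800, 8192), VM("cache", 300, 1024)]
hosts = [
    Host("h1", 2000, 1900, 100, 250),
    Host("h2", 2000, 1000, 100, 250),
    Host("h3", 4000, 1000, 120, 220),
]
chosen = select_vm_mmt(vms, bandwidth_mb_per_s=125.0)
target = place_vm(chosen, hosts)
```

In this example the `cache` VM has the least RAM and is selected; `h1` lacks spare capacity, and `h3` is preferred over `h2` because hosting the VM there adds 7.5 W instead of 22.5 W.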
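The ordering of consolidation candidates can be expressed in a few lines. The function name and the sample load values are illustrative assumptions; which hosts count as underloaded is taken as input, since that decision comes from the forecasting approach.

```python
def consolidation_order(avg_cpu_load: dict, underloaded: set) -> list:
    """Return underloaded hosts sorted by ascending average CPU load,
    so the least loaded host is emptied first (name breaks ties)."""
    return sorted(underloaded, key=lambda h: (avg_cpu_load[h], h))


avg_cpu_load = {"h1": 0.15, "h2": 0.05, "h3": 0.60, "h4": 0.10}
order = consolidation_order(avg_cpu_load, underloaded={"h1", "h2", "h4"})
```

Starting with the least loaded host means fewer VMs have to be migrated before that host can be switched off.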

Findings

Taking into account the uncertainty of forecasting and the additional costs of live migration, and applying optimal decision theory, we obtained the best solution in aggregate terms and improved performance.

There are several directions for future work:

First, the approach is based on a long-term forecasting model. This means that it cannot easily predict a sudden and dramatic increase in load (i.e. load bursts). This question is beyond the scope of the study, but it can be addressed with burst detection methods. An interesting area of future work is to combine burst detection with load prediction in order to handle a wider range of load patterns.

Second, in addition to the currently used scheme for predicting the next CPU load value for local resource allocation, more complex schemes can be studied, based on control theory, Kalman filtering or fuzzy logic.

Third, a resource allocation approach should be explored in which each host agent makes decisions on live migration in collaboration with the closest host agents. This approach looks promising for large-scale cloud infrastructures, where the complexity of centralized optimization and a single point of failure are important factors. In such approaches, the problem lies in how host agents with limited data should coordinate with each other to achieve the global optimization goal.

Finally, long-term forecasting of the allocation of several resources (for example, CPU, RAM, I/O) and of their interdependencies is an interesting area for future work.
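As one possible starting point for the Kalman filtering option, the sketch below predicts the next CPU load value with a scalar Kalman filter. It assumes a random-walk load model, and the noise variances and the function name are hypothetical; it is not the scheme used in the study.

```python
def kalman_predict_next(samples, process_var=1e-3, measurement_var=1e-2):
    """One-step-ahead CPU load forecast with a scalar Kalman filter.

    Random-walk model (an assumption): the next load equals the current
    load plus noise, so the forecast is the last filtered estimate.
    """
    estimate, error = samples[0], 1.0
    for z in samples[1:]:
        # Predict: the state is assumed to persist, uncertainty grows.
        error += process_var
        # Update: blend the prediction with the new measurement z.
        gain = error / (error + measurement_var)
        estimate += gain * (z - estimate)
        error *= 1.0 - gain
    return estimate


history = [0.52, 0.55, 0.61, 0.58, 0.64, 0.66]
forecast = kalman_predict_next(history)
```

The ratio of `process_var` to `measurement_var` controls how quickly the forecast follows new measurements: a larger ratio reacts faster but smooths less.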

List of sources

- Интеллектуальные навигационно-телекоммуникационные системы управления подвижными объектами с применением технологии облачных вычислений; РГГУ - Москва, 2015. - 158 c.

- Карр Николас Великий переход. Революция облачных технологий; Манн, Иванов и Фербер - М., 2015. - 324 c.

- Кузнецова, Т.В.; Санкина, Л.В.; Быкова, Т.А. и др. Делопроизводство. Организация и технологии документационного обеспечения управления; Юнити-Дана - М., 2015. - 359 c.

- Леонов В. Google Docs, Windows Live и другие облачные технологии; Эксмо - М., 2015. - 921 c.

- Тютюнник, А.В.; Шевелев, А.С. Информационные технологии в банке; БДЦ-пресс - М., 2016. - 368 c.

- Е. Гребнева. Облачные сервисы: взгляд из России.— М.: CNews,2011. —282с.

- С. Сейдаметова, С.Н. Сейтвелиева. Облачные сервисы в образовании. -Симферополь, 2012 - 206с.

- Модели облачных технологий. – Режим доступа: http://wiki.vspu.ru/workroom/adb91/index

- Что такое облачные сервисы, и какие бывают облачные технологии, а также их применение – Режим доступа: http://sd-company.su/article/cloud/service

- Клементьев И. П. Устинов В. А. Введение в облачные вычисления. – УГУ, 2009

- Широкова Е. А. Облачные технологии - Уфа: Лето, 2011

- Облачные сервисы для библиотек и образования И. Билан// «Университетская книга» №10, 2011

- «Облачные технологии» в образовательном процессе Т.М. Коробова// «ИТО-Саратов-2013»:V Всероссийская (с международным участием) научно-практическая конференция.

- Beloglazov, A., J. Abawajy, and R. Buyya (2011) “Energy-aware Resource Allocation Heuristics for Efficient Management of Data Centers for Cloud Computing”, Future Generation Computer Systems (28)5, pp. 755- 768, doi: 10.1016/j.future.2011.04.017.

- Shiva S. Introduction to logic design / S. Shiva. – CRC Press, 1998. – 628 pp.

- Singh A. Foundation of switching theory and logic design / A. Singh. – New Age International, 2008. – 412 pp.

- Поляков А.К. Языки VHDL и VERILOG в проектировании цифровой аппаратуры / А.К. Поляков. – М.: СОЛОН-Пресс, 2003. – 320 с.

- Ashenden P. Digital design: an embedded systems approach using Verilog / P. Ashenden. – Morgan Kaufmann Publishers, 2008. – 557 pp.

- Chu P. FPGA prototyping by Verilog examples / P. Chu. – Wiley, 2008. – 488 pp.

- Ciletti M. Advanced digital design with the Verilog HDL / M. Ciletti. – Prentice Hall, 2005. – 986 pp.

- Minns P. FSM-based digital design using Verilog HDL / P. Minns, I. Elliott. – Wiley, 2008. – 391 pp.

- Lee J. Verilog quickstart: a practical guide to simulation and synthesis in Verilog / J. Lee. – Springer, 2002. – 355 pp.

- Lee W. Verilog coding for logic synthesis / W. Lee. – Wiley, 2003. – 336 pp.

- Padmanabhan T. Design through Verilog HDL / T. Padmanabhan, B. Bala Tripura Sundari. – Wiley, 2004. – 455 pp.

- Berl, A., E. Gelenbe, M. di Girolamo, G. Giuliani, H. de Meer, M. Dang, et al. (2010) “Energy-Efficient Cloud Computing,” The Computer Journal (53)7, p. 1045.