Abstract

Contents

- Introduction

- 1. Relevance of the topic

- 2. The purpose and objectives of the study, planned results

- 3. Analysis of existing methods

- 3.1 Detection and recognition of objects in the frame

- 3.1.1 Palm detector (BlazePalm)

- 3.1.2 Defining Key Points

- 3.1.3 Optimization

- 3.2 Preprocessing

- 3.3 Segmentation

- 3.4 Postprocessing

- 3.5 Feature Extraction & Recognition

- 4. Analysis of existing methods

- 5. Generalized scheme of the system

- Conclusions

- List of sources

Introduction

Biometric data is used to identify or distinguish people based on their unique characteristics. Historically, biometrics were mainly used in criminal investigations, and initially the methods were quite simple. Measurements were based on easily visible features of the body, such as scars, birthmarks or distances between individual parts of the body. Although simple (if laborious) to apply, these methods suffered from inaccurate measurements and fuzzy characteristics, which increased the risk of failed identification or false positives.

At the end of the 19th century, the potential of fingerprints as a biometric feature was recognized. It was a much more subtle feature, but with much greater clarity than its predecessors. The fingerprint recognition system soon became the most commonly used in English-speaking countries [1].

1. Relevance of the topic

Currently, there are a very large number of identification systems based on various types of biometrics [1, 2]. They include:

- iris recognition;

- fingerprint recognition;

- retina recognition;

- voice recognition;

- face recognition;

- palm recognition.

The last of these is a fairly new technology in the biometric field, and it is gradually being introduced throughout the world. The idea of using the vascular pattern of the hand was first considered in the early 1990s, but it was only in the early 2000s that a commercial product appeared. It became popular once an application for identifying a person by the vein pattern on the back of the hand was created.

Although biometrics is still an important criminal investigation tool, it is now also used in commercial products that require user authentication, such as access control. Another possible use of biometrics is surveillance based on face recognition. This field of biometrics has been investigated extensively, especially after the 9/11 attacks [3, 4].

2. The purpose and objectives of the study, planned results

The goal is to increase the reliability of contactless biometric systems.

To achieve this goal it is necessary to solve the following tasks:

- Develop an algorithm that determines the area of interest in the selected image.

- Develop an image filtering algorithm.

- Develop a segmentation algorithm.

- Develop a post-processing algorithm.

- Conduct experimental research.

3. Analysis of existing methods

Identifying a person by vein pattern, like any other image processing task, consists of several steps. Figure 1 shows the basic steps involved in image processing.

Figure 1 – The main stages of image processing

An image of the veins of the hand can be obtained in two ways:

- using a thermal camera;

- using an infrared camera.

The disadvantage of the first method is the high price of the camera. The second method is preferable, since with some skill an infrared camera can be made at home from an ordinary webcam [13].

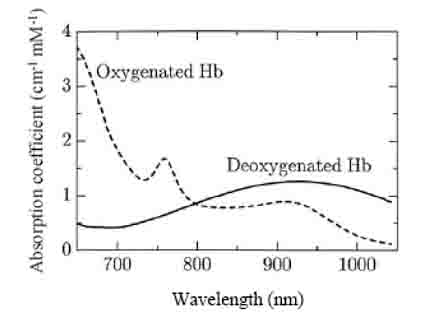

Extraction of this biometric feature is possible because of a property of blood: hemoglobin absorbs IR radiation. As a result, less light is reflected and the veins appear on camera as dark lines.

Figure 2 – Absorption of infrared radiation by oxygenated and deoxygenated blood

There are two methods for obtaining an image of the palm vein pattern. The reflection method allows all components of the device to be placed in one housing, which reduces its size. The psychological barrier is also lower, since the hand does not have to be inserted anywhere. In the transmission method, the infrared illumination is installed on the back of the hand, and the camera with the filter is installed on the palm side and receives the infrared radiation that passes through the entire palm. The transmission method yields more detailed images.

The reflection method will be used for our task.

3.1 Detection and recognition of objects in the frame

First of all, after the next frame is received, the objects of interest must be selected in it.

Hand recognition is a non-trivial task that is nevertheless in wide demand. The technology can be used in augmented reality applications for interacting with virtual objects. It can also serve as the basis for understanding sign language or for building gesture-based control interfaces.

What is the difficulty?

Perceiving hands in real time is a real challenge for computer vision: hands often occlude themselves or each other (crossed fingers, handshakes). While faces have high-contrast patterns, for example around the eyes and mouth, hands lack such features, which makes it difficult to detect them reliably from visual features alone.

Currently, access control systems (ACS) use two methods to extract the region of interest (ROI).

The first method involves fixing the hand in a certain position immediately below the camera [5]. The second method is based on extracting information taken from the captured image. For this, key points of the hand contour are used [6].

In August 2019, GoogleAI researchers presented their approach to tracking hands and identifying gestures in real time [11, 12].

What did GoogleAI do?

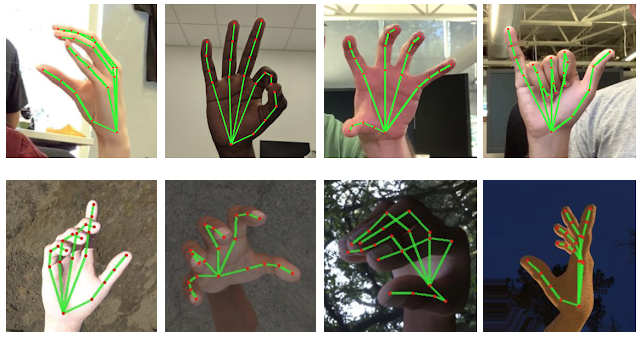

They implemented a technology for precise tracking of hands and fingers using machine learning (ML). The program determines 21 key points of the hand in 3D space (height, length and depth) and classifies the gestures that the hand shows based on these data. All of this is done from a single video frame, works in real time on mobile devices, and scales to several hands. An example of how the technology works is presented in Figure 3.

Figure 3 – Operation of GoogleAI Algorithms (animation: 11 frames, size: 657 kbytes, cycles: not limited)

How did they do it?

The approach is implemented using MediaPipe, an open-source cross-platform framework for building data processing pipelines (video, audio, time series).

The solution consists of 3 main models working together:

- Palm detector (BlazePalm):
  - accepts the full image from the video;
  - returns an oriented bounding box.

- Model for determining key points on the hand:
  - takes a cropped picture of a hand;
  - returns 21 key points of the hand in 3D space and a confidence indicator.

- Gesture recognition algorithm:
  - takes the key points of the hand;
  - returns the name of the gesture that the hand shows.

The architecture is similar to the one used in the pose estimation task. By providing precisely cropped and aligned hand images, the need for data augmentation (rotations, translation, and scaling) is significantly reduced, and instead the model can focus on the accuracy of coordinate prediction.

A gesture recognition model is not needed for extracting the ROI for personal identification, so it is not considered further.

3.1.1 Palm detector (BlazePalm)

The palm is located by a model called BlazePalm, a single-shot detector (SSD) optimized for real-time use on mobile devices.

In the GoogleAI study, a palm detector was trained instead of a detector for the entire hand (the palm here means the base of the hand without the fingers). The advantage of this approach is that a palm or fist is easier to recognize than an entire hand with gesturing fingers. In addition, a palm can be covered by square anchors (bounding boxes), ignoring other aspect ratios, which reduces the number of required anchors by a factor of 3 to 5.

A Feature Pyramid Network (FPN) feature extractor was also used, so that the image context is captured better even for small objects.

Focal loss was taken as the loss function; it copes well with the class imbalance that arises from generating a large number of anchors.

Classical Cross Entropy:

Focal loss:
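For reference, both losses can be written in the notation commonly used in the focal loss literature. The symbols are assumptions, since this text does not define them: $p_t$ is the predicted probability of the true class, $\gamma \ge 0$ is the focusing parameter, and $\alpha_t$ is a class weighting factor.

$$\mathrm{CE}(p_t) = -\log(p_t)$$

$$\mathrm{FL}(p_t) = -\alpha_t \, (1 - p_t)^{\gamma} \, \log(p_t)$$

With $\gamma = 0$ and $\alpha_t = 1$ the focal loss reduces to the cross-entropy; larger $\gamma$ reduces the contribution of well-classified examples.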

Using the above techniques, an average precision of 95.7% was achieved, compared with 86.22% when using plain cross-entropy and no FPN.
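The effect of focal loss on class imbalance can be illustrated numerically. The sketch below is not the training code of the study; the values `gamma = 2.0` and `alpha_t = 0.25` are assumed defaults, and the probabilities are made up for illustration.

```python
import math


def cross_entropy(p_t: float) -> float:
    """Cross-entropy for the probability p_t assigned to the true class."""
    return -math.log(p_t)


def focal_loss(p_t: float, gamma: float = 2.0, alpha_t: float = 0.25) -> float:
    """Cross-entropy scaled by alpha_t * (1 - p_t) ** gamma (assumed parameters)."""
    return alpha_t * (1.0 - p_t) ** gamma * cross_entropy(p_t)


# An easy, confidently classified anchor versus a hard one (illustrative values).
easy_ratio = focal_loss(0.95) / cross_entropy(0.95)
hard_ratio = focal_loss(0.30) / cross_entropy(0.30)

print(f"easy anchor keeps {easy_ratio:.6f} of its cross-entropy loss")
print(f"hard anchor keeps {hard_ratio:.6f} of its cross-entropy loss")
```

Under these assumptions the many easy background anchors are down-weighted far more strongly than the few hard ones, so they no longer dominate the total loss.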

3.1.2 Defining Key Points

Once the palm detector has determined the position of the palm in the full image, the region is shifted upward by a certain factor and expanded to cover the entire hand. A regression problem is then solved on the cropped image: the exact positions of 21 points in 3D space are determined.

For training, 30,000 real images were annotated manually. A realistic 3D model of the hand was also built and used to generate synthetic examples on different backgrounds. Examples are shown in Figure 4.
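The shift-and-expand step can be sketched as follows. This is a minimal illustration, not the MediaPipe implementation: the `Box` type, the function name and the factors `shift_up` and `scale` are hypothetical, and the box rotation is ignored (an upright hand with fingers pointing up is assumed).

```python
from dataclasses import dataclass


@dataclass
class Box:
    cx: float    # center x, pixels
    cy: float    # center y, pixels (image y axis points down)
    size: float  # side length of the square box, pixels


def palm_to_hand_roi(palm: Box, shift_up: float = 0.5, scale: float = 2.6) -> Box:
    """Shift the palm box towards the fingers and enlarge it to cover the hand.

    shift_up and scale are illustrative values, expressed relative to the palm size.
    """
    return Box(
        cx=palm.cx,
        cy=palm.cy - shift_up * palm.size,  # "up" means smaller y
        size=palm.size * scale,
    )


roi = palm_to_hand_roi(Box(cx=100.0, cy=200.0, size=80.0))
print(roi)
```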

Figure 4 – Training examples

Top: real images of hands with marked key points.

Bottom: synthetic images of hands made using a 3D model.

3.1.3 Optimization

The main secret of fast real-time inference lies in one important optimization: the palm detector, which takes the most time, runs only when needed (quite rarely). This is achieved by computing the position of the hand in the next frame from the key points found in the previous one.

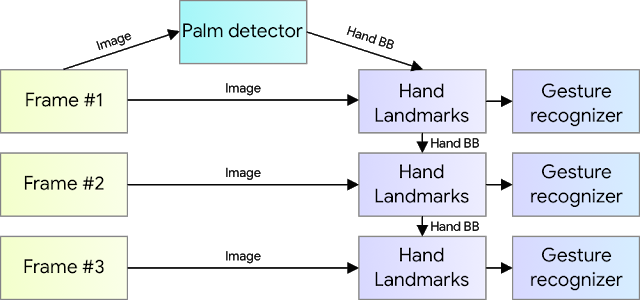

To keep this approach stable, one more output was added to the key point model: a scalar that shows how confident the model is that a hand is present in the cropped image and that it is correctly aligned. When the confidence falls below a certain threshold, the palm detector is restarted and applied to the entire frame. The scheme of this approach is shown in Figure 5.

Figure 5 – Optimization scheme of the detector
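The control flow of this optimization can be sketched as a simple loop. The function names, the threshold value of 0.5 and the stub models below are hypothetical and stand in for the real detector and key point model. As a simplification, the detector is re-run on the frame that follows a loss of confidence.

```python
from typing import Callable, Iterable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max


def box_around(points: Sequence[Point]) -> Box:
    """Bounding box of the key points, used as the ROI for the next frame."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def track(
    frames: Iterable[object],
    detect_palm: Callable[[object], Box],
    predict_key_points: Callable[[object, Box], Tuple[List[Point], float]],
    threshold: float = 0.5,
) -> Tuple[List[Optional[List[Point]]], int]:
    """Return per-frame key points (None when tracking is lost) and detector run count."""
    roi: Optional[Box] = None
    detector_runs = 0
    results: List[Optional[List[Point]]] = []
    for frame in frames:
        if roi is None:  # no reliable ROI: run the slow palm detector
            roi = detect_palm(frame)
            detector_runs += 1
        points, confidence = predict_key_points(frame, roi)
        if confidence < threshold:
            roi = None  # tracking lost: force re-detection on the next frame
            results.append(None)
        else:
            roi = box_around(points)  # reuse the key points instead of detecting again
            results.append(points)
    return results, detector_runs


# Stub models: the hand is "lost" on frame 2 only.
def fake_detector(frame: object) -> Box:
    return (0.0, 0.0, 10.0, 10.0)


def fake_key_points(frame: object, roi: Box) -> Tuple[List[Point], float]:
    return [(1.0, 1.0), (9.0, 9.0)], (0.1 if frame == 2 else 0.9)


results, detector_runs = track(range(5), fake_detector, fake_key_points)
print(detector_runs, [r is not None for r in results])
```

In this run the detector is called for two of the five frames; the remaining frames reuse the ROI derived from the previous key points.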

3.2 Preprocessing

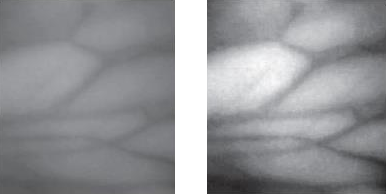

This step reduces noise in the image, which may be caused by a poor-quality camera or by hairs on the hand. At this stage either a high-pass filter (HPF) followed by histogram-based binarization [7] or a Gaussian low-pass filter (LPF) [5] is applied. Then a median filter is applied to remove the noise caused by hairs. Figure 6 [7] shows images before and after this step.

Figure 6 – The result of the application of the preprocessing
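How a median filter suppresses thin, isolated disturbances such as hairs can be shown on a tiny grayscale image. The sketch below is a plain-Python illustration with an assumed 3x3 window and cropped borders, not the filter used in the cited works.

```python
from typing import List

Image = List[List[int]]


def median_filter(image: Image, radius: int = 1) -> Image:
    """Replace each pixel by the median of its neighbourhood (window cropped at borders)."""
    height, width = len(image), len(image[0])
    result = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = sorted(
                image[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            )
            result[y][x] = window[len(window) // 2]  # upper median for even sizes
    return result


noisy = [
    [100, 100, 100, 100],
    [100,   0, 100, 100],  # a single dark "hair" pixel
    [100, 100, 100, 100],
]
smoothed = median_filter(noisy)
print(smoothed)
```

The isolated dark pixel disappears, while a structure wider than half the window would be preserved; this is why the median filter removes hairs without erasing veins.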

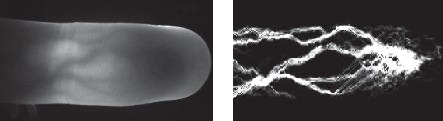

3.3 Segmentation

After noise reduction, the image is segmented in order to obtain a good binary representation of the vein pattern. A common method is local thresholding [5, 8-10], which separates the vein pattern well from the background. Other methods, such as the directional vascular pattern [7] (shown in Figure 7) and edge detection [6], are more complex to implement.

Figure 7 – The result of the application of directional vascular pattern
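Local thresholding can be sketched as comparing each pixel with the mean of its neighbourhood. The window radius and the offset below are assumed values, and the test image is synthetic: an illumination gradient with one darker column playing the role of a vein.

```python
from typing import List

Image = List[List[int]]


def local_threshold(image: Image, radius: int = 2, offset: float = 15.0) -> Image:
    """Mark a pixel as vein (1) if it is darker than its local mean by more than offset."""
    height, width = len(image), len(image[0])
    binary = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [
                image[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            local_mean = sum(window) / len(window)
            binary[y][x] = int(image[y][x] < local_mean - offset)
    return binary


# Brightness grows from left to right; column 2 is 40 levels darker (the "vein").
image = [[100 + 20 * x - (40 if x == 2 else 0) for x in range(5)] for _ in range(5)]
binary = local_threshold(image)
for row in binary:
    print(row)
```

In this image the vein column and the leftmost background column have the same gray level, so no single global threshold could separate them, whereas the local comparison marks only the vein.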

3.4 Postprocessing

This stage is needed because vein diameters change under the influence of various factors, such as ambient temperature and physical exercise. It is also used to isolate the vein pattern by removing blobs that are not part of it.

Post-processing uses the following morphological operations: dilation, erosion, opening and closing. Opening is erosion followed by dilation; closing is dilation followed by erosion.
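The four morphological operations can be sketched on a binary image with a 3x3 structuring element. This is a plain-Python illustration under one assumption about borders: the window is cropped at the image edge, so pixels outside the image are simply ignored.

```python
from typing import List

Image = List[List[int]]


def _window(image: Image, y: int, x: int) -> List[int]:
    """Values in the 3x3 neighbourhood of (y, x), cropped at the image border."""
    height, width = len(image), len(image[0])
    return [
        image[j][i]
        for j in range(max(0, y - 1), min(height, y + 2))
        for i in range(max(0, x - 1), min(width, x + 2))
    ]


def dilate(image: Image) -> Image:
    """A pixel becomes 1 if any pixel in its neighbourhood is 1."""
    return [[int(any(_window(image, y, x))) for x in range(len(image[0]))]
            for y in range(len(image))]


def erode(image: Image) -> Image:
    """A pixel stays 1 only if every pixel in its neighbourhood is 1."""
    return [[int(all(_window(image, y, x))) for x in range(len(image[0]))]
            for y in range(len(image))]


def opening(image: Image) -> Image:
    return dilate(erode(image))  # removes small blobs


def closing(image: Image) -> Image:
    return erode(dilate(image))  # fills small holes and gaps


pattern = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 1, 0],  # the lone 1 on the right is a blob to remove
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
opened = opening(pattern)

ring = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]  # a one-pixel hole
closed = closing(ring)
```

Opening keeps the 3x3 block and removes the isolated pixel; closing fills the hole in the ring.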

3.5 Feature Extraction & Recognition

The last steps of a vein pattern recognition system are feature extraction and recognition. These steps depend heavily on each other, since a given recognition method usually works only with a specific feature. For this reason, the features and the corresponding extraction methods were chosen based on the recognition methods.

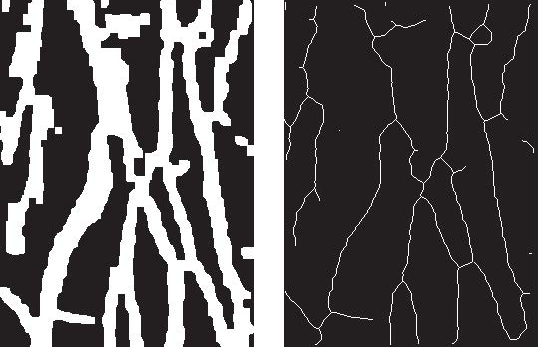

To overcome the instability of the feature caused by the environment, the system needs to analyze the overall shape of the vein pattern. Thinning is a widely used method for extracting this shape: it converts the vein image into lines one pixel thick. The algorithm is often modified to remove unnecessary isolated pixels and small spurious branches [5, 8].

The skeleton obtained by this method (shown in Figure 8) is then used for feature extraction and recognition.

Figure 8 – The result of obtaining the skeleton of the vascular bed

Sometimes the skeleton itself is used as the feature; sometimes features such as crossing points and end points are extracted from it.
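One common way to find end points and crossing points on a one-pixel-wide skeleton is the crossing number: half the number of 0/1 transitions met when walking around the eight neighbours of a pixel. The sketch below uses this technique as an assumption; the cited works may extract the points differently.

```python
from typing import List, Tuple

Image = List[List[int]]
Pixel = Tuple[int, int]  # (row, column)

# The eight neighbours in clockwise order, starting from the pixel above.
RING = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]


def skeleton_points(skeleton: Image) -> Tuple[List[Pixel], List[Pixel]]:
    """Return (end_points, crossing_points) of a binary one-pixel-wide skeleton."""
    height, width = len(skeleton), len(skeleton[0])

    def pixel(y: int, x: int) -> int:
        return skeleton[y][x] if 0 <= y < height and 0 <= x < width else 0

    end_points: List[Pixel] = []
    crossing_points: List[Pixel] = []
    for y in range(height):
        for x in range(width):
            if not skeleton[y][x]:
                continue
            values = [pixel(y + dy, x + dx) for dy, dx in RING]
            transitions = sum(abs(values[i] - values[(i + 1) % 8]) for i in range(8))
            crossing_number = transitions // 2
            if crossing_number == 1:
                end_points.append((y, x))
            elif crossing_number >= 3:
                crossing_points.append((y, x))
    return end_points, crossing_points


cross = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]
end_points, crossing_points = skeleton_points(cross)
print(end_points, crossing_points)
```

For the cross-shaped skeleton this yields the four branch tips as end points and the center as the only crossing point; the pixels next to the center are correctly treated as ordinary line pixels.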

When the skeleton is used, a medial axis representation of the vein pattern is built and then matched against the template using a constrained sequential correlation scheme [8].

When comparing points, Delaunay triangulation or the modified Hausdorff distance (MHD) is most often used.

In this problem, where the positions of the points relative to each other are not stable, Delaunay triangulation is extremely inefficient; it is most effective when the hand is fixed in space.

MHD is a scalar: it is small when comparing samples of the same pattern and large for different ones.

MHD recognition requires a database of extracted patterns assigned to each registered person. To identify an unknown sample, the MHD is computed between it and every image stored for a given person. These distances are then averaged, which gives an estimate of how similar the sample is to that person's pattern. This is repeated for all registered persons. The unknown pattern is assigned to the class with the lowest MHD value, provided that this value is below the specified decision threshold. If no value is below the threshold, the pattern is classified as unknown.
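The identification procedure can be sketched as follows. The MHD is taken here in its common form, the larger of the two mean nearest-neighbour distances between the point sets, which is an assumption about the exact variant used. The database contents, names and threshold are made up for illustration.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def directed_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Mean distance from each point of a to its nearest point of b."""
    return sum(min(math.dist(p, q) for q in b) for p in a) / len(a)


def mhd(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Modified Hausdorff distance between two point sets."""
    return max(directed_distance(a, b), directed_distance(b, a))


def identify(
    sample: Sequence[Point],
    database: Dict[str, List[List[Point]]],
    threshold: float,
) -> Optional[str]:
    """Return the person with the lowest mean MHD below threshold, else None."""
    best_id, best_score = None, math.inf
    for person_id, templates in database.items():
        score = sum(mhd(sample, t) for t in templates) / len(templates)
        if score < best_score:
            best_id, best_score = person_id, score
    return best_id if best_score < threshold else None


database = {
    "alice": [[(0, 0), (4, 0), (2, 3)], [(0, 1), (4, 0), (2, 3)]],
    "bob": [[(10, 10), (14, 12), (11, 15)]],
}
sample = [(0, 0), (4, 1), (2, 3)]

print(identify(sample, database, threshold=2.0))
print(identify([(50, 50), (60, 60)], database, threshold=2.0))
```

The first sample lies close to both stored patterns of one person and is assigned to that person; the second is far from every stored pattern, so it is classified as unknown.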

4. Analysis of existing methods

The performance of biometric systems is important in determining whether the system has the potential to be applied in real-life situations. The table shows the different methods and their effectiveness.

| Name | Subjects | Images used for test (per subject) | Match attempts (access present) | Match attempts (access absent) | FAR | FRR | FTE |
|---|---|---|---|---|---|---|---|
| Cross and Smith [1] | 20 | 2 | 40 | 760 | 0% | 7.5% | 0% |
| Wang and Leedham [8] | 12 | 6 | 72 | 792 | 0% | 0% | 0% |
| Tsinghua University [5] | 13 | 5 | 260 | 3120 | 0% | 4.6% | 0% |
| Harbin University [5] | 48 | 5 | 960 | 45120 | 0% | 0.8% | 0% |
| Miura (2004) [7] | 678 | 1 | 678 | 459006 | 0.145% | 0.145% | 0% |
| Miura (2006) [9] | 678 | 1 | 678 | 459006 | 1% | 0% | 0% |
| Lin and Fan [10] | 32 | 15 | 480 | 14880 | 3.5% | 1.5% | a few [10] |

The table shows three key performance indicators:

- False Acceptance Rate (FAR) – the probability that an unauthorized person is accepted as an authorized person;

- False Rejection Rate (FRR) – the probability that an authorized person is rejected as an unauthorized person;

- Failure To Enroll (FTE) – the probability that a given user will be unable to enroll in a biometric system due to an insufficiently distinctive biometric sample.

Using only two of these three metrics without the third can give a misleading estimate of performance: the metrics depend on one another and together determine the performance of a system.
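How FAR and FRR depend on each other through the decision threshold can be shown with a small calculation. The scores below are invented distance values (lower means more similar), not data from the cited studies, and the function name is hypothetical.

```python
from typing import Sequence, Tuple


def error_rates(
    genuine_scores: Sequence[float],
    impostor_scores: Sequence[float],
    threshold: float,
) -> Tuple[float, float]:
    """Return (FAR, FRR); a comparison is accepted when its distance is below threshold."""
    false_accepts = sum(score < threshold for score in impostor_scores)
    false_rejects = sum(score >= threshold for score in genuine_scores)
    return false_accepts / len(impostor_scores), false_rejects / len(genuine_scores)


genuine = [0.2, 0.4, 0.5, 1.3]        # same-person comparisons (made up)
impostor = [0.9, 2.0, 3.1, 4.0, 5.2]  # different-person comparisons (made up)

strict = error_rates(genuine, impostor, threshold=0.45)
loose = error_rates(genuine, impostor, threshold=1.5)
print("strict threshold: FAR=%.2f FRR=%.2f" % strict)
print("loose threshold:  FAR=%.2f FRR=%.2f" % loose)
```

Tightening the threshold drives FAR down at the cost of a higher FRR, and loosening it does the opposite, which is why a single rate reported alone says little about a system.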

5. Generalized scheme of the system

The simplified access control system consists of three modules:

- biometric data reader module;

- identification module;

- the access module.

The input to such a system is a video stream. A sequence of frames is extracted from it, and the hand detection algorithm is applied to each frame. Once the frame region containing the object has been obtained, the key features of the object are compared with those stored in the database. The result is a reference to a specific person. If there is no match, an attempted access violation is logged. If the identity is confirmed, access rights are checked.

The main limitation is that the system can never measure a given feature with absolute accuracy: good measurements require precise equipment and, as a rule, the object must be positioned in a certain way to achieve useful results. The consequences of a system failure depend on the application. If a user is falsely rejected by the security system, the damage is usually limited, as the user can simply try again. If, on the other hand, an impostor is falsely accepted by the system, the potential damage could be great. For this reason, a low FAR is usually more important to a security system than a high true acceptance rate (TAR), that is, a low FRR.

Conclusions

At this stage of the master's work, a comparative analysis of the existing categories of methods for solving the problem has been carried out, and the directions for solving the problem have been defined. During the study of the subject area, significant problems that may arise when processing a video stream were identified, namely the difficulty of identifying a person when the angle at which the feature is captured changes.

List of sources

- R. M. Bolle, J. H. Connell, S. Pankanti, N. K. Ratha, A. W. Senior. Guide to Biometrics: Transl. from English. – Moscow: Tekhnosfera, 2007. – 369 p.

- V. A. Vorona, V. A. Tikhonov. Access Control and Management Systems: a textbook. – Moscow: Goryachaya Liniya – Telekom, 2010. – 272 p.

- T. Frank. (2007, May) Face recognition next in terror fight. [Online]. Available at: [Link]

- M. Kane. Face recognition grew even before 9/11. [Online]. Available at: [Link]

- L. Wang and G. Leedham, "A thermal hand vein pattern verification system," Lecture Notes in Computer Science, pp. 58-65, 2005.

- K. Fan, C.-L. Lin, and W.-L. Lee, "A study of hand vein recognition method," in 16th IPPR Conference on Computer Vision, Graphics and Image Processing. Kinmen, ROC: IPPR, August 2003.

- Y. Ding, D. Zhuang, and K. Wang, "A study of hand vein recognition method," in International Conference on Mechatronics and Automation. Niagara Falls, Canada: IEEE, 2005.

- J. Cross and C. Smith, "Thermographic imaging of the subcutaneous vascular network of the back of the hand for biometric identification," in Security Technology, 1995. Proceedings. Institute of Electrical and Electronics Engineers 29th Annual 1995 International Carnahan Conference on. Sanderstead, UK: IEEE, 1995.

- A. Nagasaka, T. Miyatake, and N. Miura, "Feature extraction of finger-vein patterns based on repeated line tracking and its application to personal identification," Machine Vision and Applications, vol. 15, pp. 194-203, 2004.

- N. Miura, A. Nagasaka, and T. Miyatake, "Personal identification device and method," US Patent 2005/0047632 A1, 2005.

- On-Device, Real-Time Hand Tracking with MediaPipe. [Online]. Available at: [Link]

- Hand Tracking (GPU). [Online]. Available at: [Link]

- DIY IR camera! [Online]. Available at: [Link]