Abstract

Contents

- Introduction

- 1. Theme urgency

- 2. Purpose and objectives of the study

- 3. General Description of QoS Load Balancing Mechanisms

- 4. Analysis of existing algorithms and methods of load balancing with quality of service

- Conclusion

- References

Introduction

The steady growth in the volume of transmitted traffic, combined with the limited resources of existing infocommunication networks, has given rise to a number of research problems concerning the efficient use of those resources [1].

Resource efficiency is one of the decision-making criteria in network management, and for a telecom operator it is the fundamental one. For the end user, the main criterion when using a particular type of application is that the quality of service (QoS) parameters are met. In general, a network can be managed on the basis of both criteria in MPLS (Multiprotocol Label Switching) networks, or in IP networks that support DiffServ (Differentiated Services) schemes.

1. Theme urgency

Although a large number of load redistribution algorithms exist, they have a number of disadvantages, including uneven distribution of traffic between servers, failure to account for the dynamic nature of traffic, inefficient use of resources, and significant response time. Since the edge device of the IP / MPLS network is a "bottleneck", it is an urgent task to study load balancing methods and to develop a method that takes quality of service parameters into account and increases the efficiency of network resource use.

2. Purpose and objectives of the study

The purpose of the research is to increase the efficiency of resource use in the IP / MPLS network, while ensuring the specified level of quality of service, by developing a load balancing method.

To achieve this goal, it is necessary to solve the following tasks:

- analysis of existing models and methods of resource management in IP / MPLS networks based on load balancing;

- development of an algorithm for efficient redistribution of the load ensuring a given level of quality of service, depending on the parameters of incoming traffic [3];

- development of a method for efficient redistribution of the load ensuring a given level of quality of service, depending on the parameters of incoming traffic [3];

- evaluation of the effectiveness of the developed method by means of simulation and development of recommendations for its practical use.

Research object: the process of traffic control in IP / MPLS networks.

Subject of research: models and algorithms for load redistribution in IP / MPLS networks.

3. General Description of QoS Load Balancing Mechanisms

When studying algorithms and methods of load balancing in telecommunication systems and computer networks, the following quality of service parameters should be noted [2]:

- available bandwidth (B, bandwidth);

- packet loss rate (Pl, Packet Loss);

- delay (D, Delay);

- delay jitter (J, jitter).
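
As an illustration, the four parameters above can be grouped into a single record and compared against the requirements of a traffic class. The sketch below is a minimal example under stated assumptions: the names `QosMetrics` and `satisfies`, the units, and all numeric values are hypothetical and are not taken from the cited sources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QosMetrics:
    """Measured or required QoS values for a path or traffic class."""
    bandwidth_mbps: float  # B: available bandwidth
    packet_loss: float     # Pl: fraction of packets lost, 0.0 to 1.0
    delay_ms: float        # D: delay
    jitter_ms: float       # J: delay jitter


def satisfies(measured: QosMetrics, required: QosMetrics) -> bool:
    """Return True if the measured path meets every requirement."""
    return (
        measured.bandwidth_mbps >= required.bandwidth_mbps
        and measured.packet_loss <= required.packet_loss
        and measured.delay_ms <= required.delay_ms
        and measured.jitter_ms <= required.jitter_ms
    )


# Hypothetical requirement for a voice class (illustrative numbers only).
voice = QosMetrics(bandwidth_mbps=0.1, packet_loss=0.01, delay_ms=150, jitter_ms=30)
path_a = QosMetrics(bandwidth_mbps=10, packet_loss=0.001, delay_ms=40, jitter_ms=5)
path_b = QosMetrics(bandwidth_mbps=10, packet_loss=0.05, delay_ms=40, jitter_ms=5)

print(satisfies(path_a, voice))  # True
print(satisfies(path_b, voice))  # False: packet loss exceeds the limit
```

A balancer that takes QoS into account could use such a check to exclude paths or nodes that cannot meet the requirements of a given traffic class before applying any distribution algorithm.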

The choice of balancing method depends on the conditions under which balancing is performed and on the goals to be achieved, for example:

- predictability: it is necessary to know and understand how the balancing system will behave under certain conditions;

- even loading of system resources;

- scalability;

- efficiency;

- reduction of query execution time;

- reduced response time.

To implement efficient load balancing between nodes in a high-bandwidth network, the algorithm must have the following properties:

- fast data processing and transmission to the network;

- bandwidth reservation;

- implementation of the distribution algorithm based on QoS requirements for certain types of traffic [4];

- flexibility of using queuing algorithms at network nodes.

Recently, the application layer has been increasingly used to solve the load balancing problem [6]. In this case, the decision-making process takes into account the type of client, the requested URL, cookie information, the capabilities of a particular server, and the type of application, which makes it possible to optimize the use of system resources.

Significant advantages can be provided by a GSLB (Global Server Load Balancing) system, which can balance the load across arbitrarily located server farms, taking into account their distance from the client. Such a system can support several load balancing algorithms and serve clients around the world efficiently. For administrators, it makes it possible to define a flexible resource management policy.

One way to speed up service is caching. With a well-configured cache, as many as 40% of requests can be served from the cache, and service can be accelerated by up to 30 times.

Compressing (archiving) data can serve as another way to speed up service, since it reduces the load on network channels.

Load balancing can be combined with an application firewall (70% of successful intrusions exploit application vulnerabilities) and with SSL over a VPN tunnel. SSL is a cryptographic protocol that provides a secure connection between a client and a server.

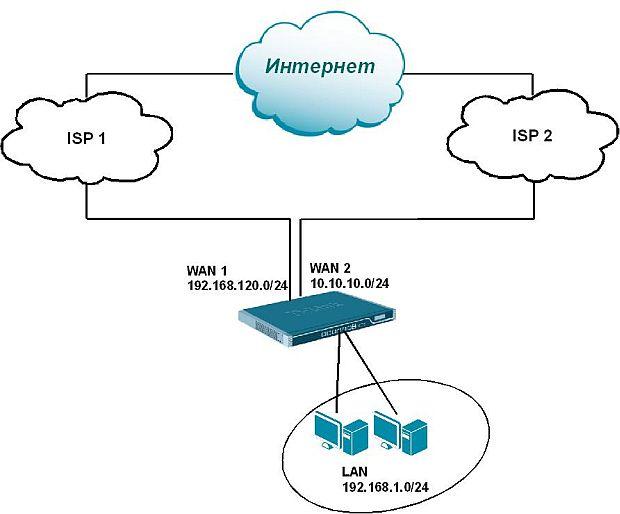

To achieve maximum bandwidth and fault tolerance, firewalls make it possible to distribute, or balance, the load across all available Internet channels (servers) simultaneously. For example, this avoids the situation in which all packets go through one provider while Internet access through another provider sits idle. Services and traffic can also be distributed across all available Internet channels. Load balancing can be configured when connections to providers use different connection types (Static IP, PPPoE, PPTP / L2TP), as well as for traffic passing through VPN tunnels established on different physical interfaces (Figure 1).

Figure 1 - An example of using load balancing in a network segment

The balancing procedure is carried out using a whole set of algorithms and methods corresponding to the following levels of the OSI model:

- network layer;

- transport layer;

- application layer.

Balancing at the network level must solve the following problem: several physical machines have to be responsible for one specific server IP address.

With DNS balancing, several IP addresses are assigned to one domain name. The server that receives a client's request is usually chosen by the Round Robin algorithm (balancing methods and algorithms are described in detail below).

When using an NLB cluster, servers are combined into a cluster consisting of input and compute nodes. Load distribution is carried out using a special algorithm.

Territorial balancing is carried out by placing identical services with identical addresses in geographically different regions of the Internet (this is how Anycast DNS technology works).

Balancing at the transport level is the simplest: the client contacts the load balancer, which forwards the request to one of the servers for processing. The server can be chosen by a variety of algorithms:

- by simple round-robin selection;

- by choosing the least loaded server from the pool, etc.

At the transport level, the connection with the client is terminated at the balancer, which works as a proxy. When balancing at the application level, the load balancer operates in "smart proxy" mode: it analyzes client requests and redirects them to different servers depending on the nature of the requested content.
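
The "smart proxy" behavior can be illustrated with a small routing function that inspects the request before choosing a server pool. This is only a sketch under stated assumptions: the pool names, the addresses, the `pool` cookie, and the URL prefixes are hypothetical and serve solely to show content-based selection.

```python
# Hypothetical server pools; names and addresses are illustrative only.
POOLS = {
    "static": ["10.0.1.1", "10.0.1.2"],
    "api": ["10.0.2.1", "10.0.2.2"],
    "default": ["10.0.3.1"],
}


def choose_pool(path: str, cookies: dict) -> str:
    """Select a pool name from the request path and cookies."""
    # A cookie may pin the client to a pool, but only if that pool exists.
    pinned = cookies.get("pool")
    if pinned in POOLS:
        return pinned
    # Otherwise decide by the nature of the requested content.
    if path.startswith("/static/"):
        return "static"
    if path.startswith("/api/"):
        return "api"
    return "default"


print(choose_pool("/static/logo.png", {}))        # static
print(choose_pool("/index.html", {"pool": "api"}))  # api
```

Once the pool is chosen, any of the distribution algorithms discussed in the next section can select a concrete server inside it.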

4. Analysis of existing algorithms and methods of load balancing with quality of service

As a working assumption for the study of balancing algorithms, we consider traffic flows at the output of the device, distributed among queues served by WFQ (Weighted Fair Queuing), which must satisfy modern QoS requirements [5]. One more assumption is made: the transport layer protocol of the OSI model serves as the criterion for balancing flows. The highest-priority traffic consists of flows that use the RTP / UDP transport protocols (used for real-time voice and video transmission); these flows are assigned to the most demanding class by setting the appropriate values of the TOS field in the IP packet for classification.
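
The classification step described above can be sketched as follows. The text does not specify which TOS values are used, so the choice of the Expedited Forwarding codepoint (DSCP 46) for RTP / UDP flows is an assumption, as are the names `Flow`, `classify`, and `tos_byte`. The only fixed fact used here is that the DSCP value occupies the upper six bits of the former TOS byte.

```python
from dataclasses import dataclass

DSCP_EF = 46          # Expedited Forwarding (assumed marking for RTP / UDP)
DSCP_BEST_EFFORT = 0  # default class for all other traffic


@dataclass(frozen=True)
class Flow:
    transport: str  # "UDP" or "TCP"
    is_rtp: bool    # True if the payload is an RTP stream


def classify(flow: Flow) -> int:
    """Return the DSCP value for a flow based on its transport protocol."""
    if flow.transport == "UDP" and flow.is_rtp:
        return DSCP_EF
    return DSCP_BEST_EFFORT


def tos_byte(dscp: int) -> int:
    """Place the 6-bit DSCP value in the upper bits of the TOS byte."""
    return dscp << 2


voice = Flow(transport="UDP", is_rtp=True)
web = Flow(transport="TCP", is_rtp=False)
print(tos_byte(classify(voice)))  # 184 (0xB8)
print(tos_byte(classify(web)))    # 0
```

With this marking, the WFQ scheduler at the device output can map each value to a queue and serve the most demanding class with the largest weight.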

Load balancing algorithms can be divided into static, dynamic and adaptive. Dynamic load balancing algorithms use information about the state of the system, for example, the load of nodes, to make decisions about load balancing during the system operation.

Figure 2 - Using load balancing between hosts [6]

(Animation: 10 frames, 51 kilobytes)

Static algorithms do not use such information. In static algorithms, decisions about load distribution are implemented in advance, before the system starts operating, and do not change during operation.

The Round Robin algorithm cycles through the nodes in turn: the first request goes to the first node, the second request to the second node, and so on until the last node is reached, after which the cycle starts again. The algorithm balances the load regardless of the protocol used in the packets; the main criterion is that the site is addressed by domain name. Its advantages are low cost, ease of implementation, no need for communication between the participating nodes, and operation that does not depend on server load. Despite these advantages, the algorithm has the following disadvantages: it requires every node to have an equal set of resources; it has no mechanism for checking how busy a server is; it has no mechanism for checking the bandwidth available on the line; and it cannot control flows based on the transport protocol in use.

From this description it cannot be concluded that Round Robin will balance traffic according to the criteria needed to ensure the required level of quality of service under large volumes of traffic. Because of its shortcomings, the algorithm also does not make optimal use of infrastructure resources.
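
A minimal sketch of Round Robin selection is given below. The class name `RoundRobinBalancer` and the node names are hypothetical. The sketch deliberately ignores node load, which is exactly the limitation discussed above.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out nodes in a fixed circular order."""

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("at least one node is required")
        self._nodes = cycle(nodes)

    def pick(self):
        """Return the next node in the cycle, regardless of its load."""
        return next(self._nodes)


balancer = RoundRobinBalancer(["node-1", "node-2", "node-3"])
print([balancer.pick() for _ in range(5)])
# ['node-1', 'node-2', 'node-3', 'node-1', 'node-2']
```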

An improved version, Weighted Round Robin, assigns a weighting factor to each participating node based on its performance: the more capable the node, the larger its factor. This algorithm uses system resources more flexibly, but it still does not solve all the problems of node fault tolerance.
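
The weighted variant of Round Robin can be implemented in several ways. The sketch below uses the so-called smooth weighted scheme, chosen here as an assumption because it interleaves nodes instead of sending bursts to the heaviest one; the class name and the weights are hypothetical.

```python
class WeightedRoundRobin:
    """Smooth weighted round robin over nodes with positive integer weights."""

    def __init__(self, weights: dict):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self._weights = dict(weights)
        self._current = {node: 0 for node in weights}
        self._total = sum(weights.values())

    def pick(self):
        # Every node gains its weight; the node with the highest running
        # score is chosen and then penalized by the sum of all weights.
        for node, weight in self._weights.items():
            self._current[node] += weight
        chosen = max(self._current, key=self._current.get)
        self._current[chosen] -= self._total
        return chosen


wrr = WeightedRoundRobin({"fast": 3, "slow": 1})
print([wrr.pick() for _ in range(4)])
# ['fast', 'fast', 'slow', 'fast']
```

Over any window whose length equals the sum of the weights, each node is selected a number of times equal to its weight, so a node with weight 3 receives three times as many requests as a node with weight 1.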

Because dynamic algorithms use information about the state of the system, they can outperform static algorithms; for example, a simple dynamic algorithm can match the efficiency of a complex static one. However, since dynamic algorithms must collect, store, and analyze system state information, they incur higher balancing overhead than static algorithms.

Adaptive algorithms are a special case of dynamic algorithms: depending on the conditions and on a priori knowledge of the properties of the balancing algorithms, the most suitable algorithm is selected. Information about the state of the system is an essential element of a dynamic algorithm. There are various methods of collecting data on system utilization and constructing utilization characteristics. The load of a node can be defined, for example, as the fraction of processor time spent on solving a task, as the number of tasks in the node's queue, or as the probability of finding the node busy at an arbitrary moment.
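
The three load measures mentioned above can be written down directly. The function names and the idea of estimating the busy probability from periodic samples are assumptions made for this sketch; a real system would obtain these values from its own monitoring.

```python
from collections import deque


def cpu_fraction(busy_seconds: float, interval_seconds: float) -> float:
    """Fraction of processor time spent on tasks during the interval."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    return busy_seconds / interval_seconds


def queue_length(task_queue: deque) -> int:
    """Number of tasks currently waiting in the node's queue."""
    return len(task_queue)


def busy_probability(busy_samples: list) -> float:
    """Share of sampling instants at which the node was found busy."""
    if not busy_samples:
        raise ValueError("at least one sample is required")
    return sum(busy_samples) / len(busy_samples)


print(cpu_fraction(2.0, 8.0))                        # 0.25
print(queue_length(deque(["t1", "t2", "t3"])))       # 3
print(busy_probability([True, False, True, True]))   # 0.75
```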

The Least Connections algorithm has a clear advantage over the two algorithms discussed above: it tracks the number of connections to each node. It sends each request to the node with the fewest active connections, which distributes the load so as to avoid the failure of one or more nodes due to overload.
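
A minimal sketch of Least Connections is shown below. The class and method names are hypothetical, and ties are broken by node order, which is an arbitrary choice made for this example.

```python
class LeastConnectionsBalancer:
    """Sends each new connection to the node with the fewest active ones."""

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("at least one node is required")
        self._active = {node: 0 for node in nodes}

    def acquire(self):
        """Choose a node for a new connection and count the connection."""
        node = min(self._active, key=self._active.get)
        self._active[node] += 1
        return node

    def release(self, node):
        """Record that a connection to the node has been closed."""
        if self._active[node] > 0:
            self._active[node] -= 1


lb = LeastConnectionsBalancer(["node-1", "node-2"])
first = lb.acquire()   # node-1 (both idle, first in order wins)
second = lb.acquire()  # node-2 (node-1 already has one connection)
lb.release(first)
third = lb.acquire()   # node-1 again, since it is now idle
print(first, second, third)
```

Unlike Round Robin, the balancer must be told when a connection ends, which is part of the overhead that dynamic algorithms pay for using state information.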

There is also an improved version of this algorithm, Weighted Least Connections, which takes into account not only the number of active connections but also the weighting factor of each cluster node when forwarding a request. This makes it possible to build a cluster service from nodes with different sets of resources.
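
One common way to combine the two quantities is to pick the node with the smallest ratio of active connections to weight. The sketch below assumes this ratio rule; the function name and the numbers are hypothetical.

```python
def pick_weighted_least_connections(active: dict, weights: dict) -> str:
    """Return the node with the lowest active-connections-to-weight ratio."""
    if not active:
        raise ValueError("at least one node is required")
    return min(active, key=lambda node: active[node] / weights[node])


# The larger node carries more connections but is still relatively less loaded.
active = {"big": 6, "small": 3}
weights = {"big": 4, "small": 1}
print(pick_weighted_least_connections(active, weights))  # big (6/4 < 3/1)
```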

Improved Least Connections algorithms also include Locality-Based Least Connection Scheduling and Locality-Based Least Connection with Replication Scheduling [8]. The first was created specifically for caching proxy servers and works on the following principle: more requests are sent to the nodes with the fewest active connections. Each node has its own block of client IP addresses, and requests from those addresses are directed to the node serving that block. If the "parent" node is loaded, requests are passed to another, less loaded node. The second algorithm extends this approach to groups of nodes: a block of client IP addresses is served not by one node but by a group.
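
The locality-based idea can be sketched as follows, under several assumptions that the text does not fix: a client block is taken to be a /24 network, a node counts as loaded once it reaches a configurable connection threshold, and a block that is moved to a less loaded node stays there afterwards. The class name and parameters are hypothetical.

```python
import ipaddress


class LocalityBasedBalancer:
    """Keeps each client address block on one node unless that node is loaded."""

    def __init__(self, nodes, overload_threshold, prefix_len=24):
        self._active = {node: 0 for node in nodes}
        self._assigned = {}  # client block -> "parent" node
        self._threshold = overload_threshold
        self._prefix_len = prefix_len

    def _block(self, client_ip):
        # strict=False masks the host bits, e.g. 192.0.2.17 -> 192.0.2.0/24
        return ipaddress.ip_network(f"{client_ip}/{self._prefix_len}", strict=False)

    def acquire(self, client_ip):
        block = self._block(client_ip)
        node = self._assigned.get(block)
        if node is None or self._active[node] >= self._threshold:
            # No parent yet, or the parent is loaded: use the least loaded node.
            node = min(self._active, key=self._active.get)
            self._assigned[block] = node
        self._active[node] += 1
        return node


lb = LocalityBasedBalancer(["node-1", "node-2"], overload_threshold=2)
print(lb.acquire("192.0.2.1"))   # node-1 becomes the parent of 192.0.2.0/24
print(lb.acquire("192.0.2.99"))  # node-1 (same block, parent not yet loaded)
print(lb.acquire("192.0.2.50"))  # node-2 (parent reached the threshold)
```

The replication variant would map each block to a set of nodes instead of a single node; that extension is not shown here.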

From the description of the Least Connections algorithm, it appears possible to balance the load so that the resources of the entire system are used as fully as possible while the required level of quality of service is maintained.

The Sticky Sessions algorithm distributes incoming requests so that the same node always serves the same group of clients. It is used, for example, in web servers. Requests are distributed among nodes based on the client's IP address, which is implemented by the IP hash method.
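
The IP hash method can be sketched in a few lines. The use of SHA-256 is an assumption made for this example: any stable hash would do, but Python's built-in `hash()` is avoided because for strings it is randomized between interpreter runs. The function and node names are hypothetical.

```python
import hashlib


def pick_by_ip_hash(client_ip: str, nodes: list) -> str:
    """Map a client IP address to a node in a stable, repeatable way."""
    if not nodes:
        raise ValueError("at least one node is required")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


nodes = ["node-1", "node-2", "node-3"]
# The same client always lands on the same node while the node list is unchanged.
print(pick_by_ip_hash("203.0.113.10", nodes) == pick_by_ip_hash("203.0.113.10", nodes))  # True
```

Note that with this simple modulo mapping, adding or removing a node changes the assignment of most clients, so sessions are sticky only while the set of nodes stays the same.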

Today there are many load balancing algorithms that can provide the required level of quality of service and meet the basic distribution requirements: efficiency; even use of system resources; predictability; reduced query execution time; reduced response time.

Conclusion

Load balancing is essential for optimizing resource utilization and reducing service time. Load balancing algorithms can be applied in server clusters, firewalls, and any "group" type of access to resources of the same kind. The goal of load balancing is to ensure both a certain quality of service for the user and efficient use of the corresponding resource [7].

Although a large number of load redistribution algorithms exist, they have a number of drawbacks, including uneven distribution of traffic between servers, failure to account for the dynamic nature of traffic, inefficient use of resources, and significant response time. Since the edge device of the IP / MPLS network is a "bottleneck", it is an urgent task to study load balancing methods and to develop a method that takes quality of service parameters into account and increases the efficiency of network resource use.

At the time of writing this abstract, the master's thesis has not yet been completed. Final completion: June 2021. The full text of the thesis and materials on the topic can be obtained from the author after that date.

References

- Костюк Д.Е. Анализ методов и алгоритмов балансировки нагрузки / Д.Е. Костюк, В.Н. Лозинская // Информационное пространство Донбасса: проблемы и перспективы : материалы III Респ. с междунар. участием науч.-практ. конф., посв. 100-летию осн-ия ДонНУЭТ. – Донецк:ГО ВПО «ДонНУЭТ» – 2020. – С. 154-157.

- Кучерявый Е.А. Управление трафиком и качество обслуживания в сети Интернет / Е.А. Кучерявый. – СПб.: Наука и Техника, 2004. – 336 с.: ил.

- Елагин, В.С. Аспекты реализации системы законного перехвата трафика в сетях SDN / В.С. Елагин, А.А. Зобнин // Вестник связи. – 2016. – № 12. С. 6 – 9.

- Klampfer Sasa. Influences of Classical and Hybrid Queuing Mechanisms on VoIP's QoS Properties [Electronic resource] / Sasa Klampfer, Amor Chowdhury, Joze Mohorko, Zarko Cucej.

- Braden R. RFC 2309. Recommendations on queue management and congestion avoidance in the Internet [Electronic resource] / D. Clark, J. Crowcroft, B. Davie, S. Deering, D. Estrin, S. Floyd, V. Jacobson, G. Minshall, C. Partridge, L. Peterson, K. Ramakrishnan, S. Shenker, J. Wroclawski, and L. Zhang.

- Van Nieuwpoort Rob V. Adaptive Load Balancing for Divide-and-Conquer Grid Applications [Electronic resource] / Rob V. van Nieuwpoort, Jason Maassen, Gosia Wrzesińska, Thilo Kielmann, Henri E. Bal.

- Jamaloddin Golestani S. A self-clocked fair queuing scheme for broadband applications [Electronic resource] / Golestani S. Jamaloddin.

- Bahaweres R. B. Comparative analysis of LLQ traffic scheduler to FIFO and CBWFQ on IP phone-based applications (VoIP) using Opnet (Riverbed) / R. B. Bahaweres, A. Fauzi and M. Alaydrus // 2015 1st International Conference on Wireless and Telematics (ICWT). – Manado. – 2015. – pp. 1-5.