
Overview

Our research group is working to develop and operate a system that allows a physician to see directly inside a patient, using augmented reality (AR). AR combines computer graphics with images of the real world. This project uses ultrasound echography imaging, laparoscopic range imaging, a video see-through head-mounted display (HMD), and a high-performance graphics computer to create live images that combine computer-generated imagery with the live video image of a patient. An AR system displaying live ultrasound data or laparoscopic range data in real time and properly registered to the part of the patient that is being scanned could be a powerful and intuitive tool that could be used to assist and to guide the physician during various types of ultrasound-guided and laparoscopic procedures.

Head-Mounted Display Research

Head-mounted displays (HMDs) with appropriate characteristics for augmented reality are not commercially available. We have put significant effort into modifying existing HMDs and building our own for use in our augmented reality projects.

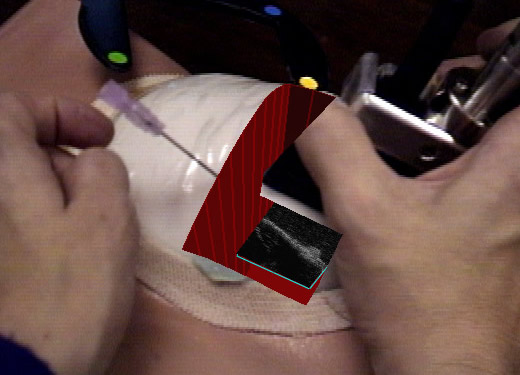

Ultrasound-guided Breast Biopsy

In recent years, ultrasound-guided biopsy of breast lesions has been used for diagnostic purposes, partially replacing open surgical intervention. Ultrasound guidance is also often used for needle localization of some lesions prior to biopsy, as well as for cyst aspiration. Ultrasound guidance for such interventions, however, is difficult to learn and perform. One needs good hand-eye coordination and three-dimensional visualization skills to guide the biopsy needle to the target tissue area with the aid of ultrasound imagery. We believe that the use of computer-augmented vision technology can significantly simplify both learning and performing ultrasound-guided interventions. We are, therefore, targeting our current and near-term future research efforts toward building a system that will aid a physician in performing an ultrasound-guided needle biopsy. Results from preliminary experiments with phantoms and with one human subject have been encouraging.

Early Augmented-Reality Systems

Our initial ultrasound visualization system could display a small number of individual ultrasound slices of a fetus superimposed onto the pregnant patient's abdomen. The system used traditional chroma-keying techniques to combine rendered ultrasound data with a digitized video image from a head-mounted camera. In the initial system, alignment was poor (due to poor tracking), the images were not clear, and the sense of the 3D shape of the fetus was lacking. The first improvement to the system was to reconstruct the individual slices of ultrasound data into a volume. This reconstruction made use of UNC's custom high-performance graphics engine, Pixel-Planes 5. Updates were slow, however, and the images were still not convincing. We thus moved to a system that featured on-line data acquisition and off-line rendering. These images set a quality standard for later systems, though we still wanted to return to a real-time system. As the supporting technologies improved, we incorporated them into the system. Alignment was improved by new camera and ultrasound probe calibration methods, and by improvements in instrument and head tracking.
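The chroma-keying step mentioned above can be illustrated with a minimal sketch. It assumes images are stored as nested lists of RGB tuples, and the function name `chroma_key`, the blue key color, and the tolerance value are all hypothetical choices for illustration; the original system's keying hardware and parameters are not described here.

```python
def chroma_key(rendered, video, key=(0, 0, 255), tol=30):
    """Composite a rendered image over camera video by chroma keying.

    Wherever a rendered pixel is within `tol` of the key color on every
    channel, the camera video pixel shows through; elsewhere the rendered
    (ultrasound) pixel is kept. Key color and tolerance are assumptions.
    """
    out = []
    for r_row, v_row in zip(rendered, video):
        row = []
        for r_px, v_px in zip(r_row, v_row):
            # Largest per-channel difference from the key color.
            dist = max(abs(a - b) for a, b in zip(r_px, key))
            row.append(v_px if dist <= tol else r_px)
        out.append(row)
    return out


# One-row example: the first rendered pixel is key-colored, the second is not.
rendered = [[(0, 0, 255), (200, 200, 200)]]
video = [[(10, 20, 30), (40, 50, 60)]]
composite = chroma_key(rendered, video)
```

In this sketch the renderer would fill every non-ultrasound pixel with the key color, so the video of the patient appears everywhere except where ultrasound data is drawn.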

Intermediate Real-Time System

The ultrasound research group moved to a prototype real-time augmented reality system based on an SGI Onyx InfiniteReality (IR) high-performance graphics workstation equipped with a Sirius Video real-time capture unit. This system made use of the high-speed, image-based texturing capability available in the IR. The Sirius captured both HMD camera video and ultrasound video. The camera video was displayed in the background; the ultrasound video images were transferred into texture memory and displayed on polygons emitted by the ultrasound probe inside a synthetic opening within the scanned patient. The display this system presented to the user resembled the display offered by the earlier on-line volume reconstruction system, but the images obtained were vastly superior to those generated by the initial system. This system could sustain a frame rate of 10 Hz for both display update and ultrasound video grabbing, and it provided high-resolution ultrasound slice display and rendering for up to 16 million ultrasound pixels. It also used a technique designed to correct image errors from the magnetic head tracking system by tracking landmarks in the video imagery. Other techniques, such as prediction, interpolation of past readings, and reordering of computation to reduce apparent latency, proved useful in further reducing registration errors.
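As an illustration of the prediction and interpolation idea mentioned above, the following sketch linearly extrapolates (or interpolates) a tracked position from two timestamped readings. The predictor actually used in the system is not specified here; constant-velocity extrapolation, the function name `estimate_position`, and the sample values are assumptions chosen for simplicity.

```python
def estimate_position(p0, t0, p1, t1, t):
    """Estimate a tracked 3D position at time `t` from two readings.

    Assumes constant velocity between readings (an assumption, not the
    documented method). For t > t1 this predicts ahead to compensate for
    latency; for t0 <= t <= t1 it interpolates between past readings.
    """
    if t1 == t0:
        raise ValueError("readings must have distinct timestamps")
    scale = (t - t1) / (t1 - t0)
    return tuple(b + (b - a) * scale for a, b in zip(p0, p1))


# Two readings 100 ms apart; predict 50 ms past the latest reading.
predicted = estimate_position((0.0, 0.0, 0.0), 0.0, (1.0, 2.0, 3.0), 0.1, 0.15)
```

Predicting to the expected display time rather than using the latest raw reading is one way to reduce the apparent lag between head motion and the rendered overlay.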

Unlike the initial system, the ultrasound slices were not reconstructed into a volume but were displayed directly as translucent polygons with ultrasound video images mapped onto them. A large number of such directly rendered ultrasound slices can give the appearance of a volume data set. Ultrasound probe tracking was performed with a high-precision mechanical tracking device that provided correct registration between individual ultrasound slices.
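To see why a stack of translucent slices can read as a volume, consider how one pixel's intensity accumulates when slices are blended from far to near with the standard "over" operator. This is a minimal sketch under assumed values; the function name `composite_back_to_front` and the single-channel intensities and opacities are hypothetical, and the actual blending was performed by the graphics hardware.

```python
def composite_back_to_front(background, slices):
    """Blend translucent slices over a background at a single pixel.

    `slices` is a list of (intensity, alpha) pairs ordered far to near.
    Each slice is blended with the "over" operator:
        result = alpha * slice + (1 - alpha) * what_is_behind
    """
    color = background
    for intensity, alpha in slices:
        color = alpha * intensity + (1.0 - alpha) * color
    return color


# A bright far slice seen through a dark, half-transparent near slice.
pixel = composite_back_to_front(0.0, [(1.0, 0.5), (0.0, 0.5)])
```

Because every slice contributes in proportion to its opacity, structures on deeper slices remain partially visible through nearer ones, which is what produces the volume-like impression.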

This system was used to demonstrate the possibility of using augmented reality to enhance visualization for laparoscopic surgery.

Current Real-Time System

We recently moved our prototype real-time augmented reality system from an SGI Onyx InfiniteReality to the department's SGI Reality Monster. We make use of the digital video input capabilities of the Reality Monster by simultaneously capturing imagery from the HMD cameras and the ultrasound imager into texture memory. The new system uses only a single opto-electric tracking system with greater overall precision than previous systems.

New applications of our system require advances in tracking, image processing, and display devices. In collaboration with the University of Utah, we designed and built a video see-through head-mounted display and are experimenting with alternatives to HMDs. Currently available tracking systems limit our ability to precisely register real and synthetic imagery, which has led us to research ways of improving tracking systems. As we began to develop our system for laparoscopic surgery, we found a need to develop instruments and algorithms for rapidly acquiring the three-dimensional geometry inside a body through a laparoscope.

