Job-shop scheduling and visibility studies with a hybrid ACO algorithm

Introduction

Manufacturing today has largely been boiled down to an all-out effort to stay profitable. Factories are moved to low-wage countries to ensure that profits are maintained and stockholders are kept happy. Decisions like these are met with debates about morale, ethics and the responsibilities that companies have to society, since losing an entire manufacturing plant can be devastating to a community. An alternative to industrial relocation is to maintain profitability through effective production schedules, better utilization of resources and better overall planning in existing manufacturing plants. The significance of effective planning methods has, in other words, increased and will likely continue to do so.

The focus of this chapter is to solve the MT10 job-shop scheduling problem using four different variants of the Ant Colony Optimization (ACO) algorithm and to try to rank them. A hybrid model, which uses a postprocessing algorithm to improve the resulting schedule, is also tried for all four ACO versions. The term visibility is explained in the context of job-shop scheduling and incorporated into the test runs.

By a job-shop scheduling problem (JSP) we mean a set of machines M, a set of jobs J and a set of operations O. For each operation there is a job to which it belongs, a machine on which it has to be processed, a predetermined processing time on that machine and a predetermined processing order on the machines. The problem is to minimize the makespan while ensuring that no machine processes more than one job at a time and that an operation, once started, runs to completion without interruption.

Numerous algorithms have been applied successfully to job-shop problems. Exact mathematical methods include mixed integer linear programming and branch and bound. Approximation methods include list scheduler algorithms (see Panwalker & Iskander, 1977 for a survey), which assign one operation at a time from a list sorted by some priority rule; Shifting Bottleneck by Adams et al. (1988); Simulated Annealing by van Laarhoven et al. (1988); Tabu search, first used in job-shop scheduling by Taillard (1989); and a genetic algorithm approach by Nakano and Yamada (1991).

A newcomer among these approaches to the JSP, ant colony optimization has become an increasingly popular candidate among algorithms that mimic the behaviour of processes that exist in nature. The first ACO algorithm was introduced by Marco Dorigo in his doctoral thesis (1992) and was called Ant System (AS). Since then AS has matured into an algorithm that does very well on problem types that are formulated as a traveling salesman problem (TSP) as well as on the quadratic assignment problem (QAP). As a result of research into ACO algorithms, some very successful variants have emerged.

We have the Elitist AS (EAS) proposed by Dorigo et al. (1996), in which the pheromone updating rules are biased towards the best solution found so far, the idea being to exploit the solution components within that solution. Ant Colony System (ACS) by Dorigo and Gambardella (1997) has several modifications to the original AS. It uses a modified rule when an ant chooses the next travel node, and it uses a best-so-far pheromone update rule but applies pheromone evaporation only to the trails that belong to solution components in the best-so-far solution. It also uses a local pheromone update rule to decrease the pheromone values on visited solution components, in order to encourage exploration. Rank-based AS (RAS) by Bullnheimer et al. (1999) is a variant where the elite ant as well as a selection of ants with good solutions during that iteration get to update the pheromone trails. MAX-MIN AS (MMAS) by Stutzle and Hoos (2000) is an approach that updates the pheromone trails, according to some convergence measure, with either the iteration-best ant or the best-so-far ant. The algorithm uses a lower bound for the pheromones (>0) and also restricts the maximum amount of pheromone a trail can have. The lower bound encourages exploratory behaviour among the ants, and the upper bound prevents premature convergence caused by the elite solution dominating the other solutions. Hypercube Framework (HCF) by Blum and Dorigo (2004) is more of a framework for implementing ACO algorithms. Among its benefits is automatic scaling of pheromone values to the interval [0,1].

In a paper by Colorni et al. (1993), AS was applied to job-shop scheduling and proved to be a noteworthy candidate when faced with the task of choosing a suitable algorithm for scheduling problems. The conclusions in that paper were that AS is one of the most easily adaptable population-based heuristics so far proposed and that its computational paradigm is indeed effective under very different conditions.
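To make the role of the pheromone bounds concrete, the following is a minimal sketch of an MMAS-style global update: every trail evaporates, the arcs of one chosen tour (iteration-best or best-so-far) are reinforced, and each value is clamped to the interval between a lower and an upper bound. The function name, the dictionary layout of the trails and the parameter values are assumptions made for illustration and are not taken from the chapter.

```python
def mmas_update(tau, chosen_tour, chosen_cost, rho=0.1, tau_min=0.01, tau_max=1.0):
    """Return new pheromone values after one MMAS-style global update.

    tau          -- dict mapping an arc (i, j) to its pheromone value (assumed layout)
    chosen_tour  -- node sequence of the iteration-best or best-so-far ant
    chosen_cost  -- cost (e.g. makespan) of that tour
    rho          -- evaporation rate (illustrative value)
    """
    reinforced = set(zip(chosen_tour, chosen_tour[1:]))
    new_tau = {}
    for arc, value in tau.items():
        value *= (1.0 - rho)                      # evaporation on every trail
        if arc in reinforced:
            value += 1.0 / chosen_cost            # deposit only on the chosen tour
        # Clamping keeps every arc selectable (lower bound) and stops one
        # solution from dominating the colony (upper bound).
        new_tau[arc] = min(tau_max, max(tau_min, value))
    return new_tau
```

A trail that is never reinforced decays only as far as the lower bound, so it always keeps a nonzero chance of being chosen.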

As an example of ACO robustness, Jayaraman et al. (2000) used an ACO algorithm to solve a combinatorial optimization problem of multiproduct batch scheduling as well as the continuous function optimization problem for the design of a multiproduct plant with single product campaigns and horizon constraints. Further real-world applications of ACO algorithms include solving an established set of vehicle routing problems, as done by Bell and McMullen (2004), and a dynamic regional nurse-scheduling problem in Austria by Gutjahr and Rauner (2005). The former paper concluded that the results were competitive, and in the latter paper ACO was compared to a greedy assignment algorithm and achieved highly significant improvements. Kuo-Ching Ying et al. (2004) applied the ant colony system to permutation flow-shop sequencing and effectively solved the n/m/P/Cmax problem, commenting that this suggests the ant colony system metaheuristic is well worth exploring in the context of solving different scheduling problems. A more recent example of ACO applied to flow shops is a paper by Gajpal and Rajendran (2006), who used a new ACO algorithm (NACO) to minimize the completion variance of jobs, showing that work on ACO algorithms is an ongoing process of modifying and improving the original AS and applying it to a variety of scheduling problems. For two of the top performing ACO algorithms, ACS and MMAS, convergence to the optimal solution has been proved (Dorigo and Stutzle, 2004 as well as Stutzle and Dorigo, 2002). It is worth remembering that convergence results do not allow prediction of how quickly an optimal solution can be found.

Problem description

The Job-Shop Scheduling Problem (JSP) can be characterized as n jobs to be processed on m machines. In general it is a set of concurrent and conflicting goals to be satisfied using a finite set of resources, where resources are called machines and basic tasks are called jobs. Each job is a request for scheduling a set of operations according to a process plan which specifies precedence restrictions. We have a set of machines M, a set of jobs J and a set of operations O.

For each operation uij there is a job Ji to which it belongs, a machine Mj on which it has to be run and a processing time pij, where pij is a nonnegative integer. Every job is a chain of operations and every operation has to be processed on a given machine for a given time. The task is to find the starting times of all operations such that the completion time of the very last operation is minimal. The chain order of each job has to be maintained and each machine can only process one job at a time. No job can be preempted; once an operation starts it must be completed. The solution s to an instance of the n x m JSP specifies a processing order for all of the jobs on each machine and implicitly defines an earliest start time and earliest completion time for each operation. The maximum of the completion times is called the makespan, and most research addresses the problem of makespan minimization.

Given an instance of JSP we can associate with it a disjunctive graph G = (V, A, E), where V is the node set, A is the conjunctive arc set and E is the disjunctive arc set. The nodes V correspond to all of the operations and two dummy nodes, a source and a sink. The conjunctive arcs A represent the precedence relationships between the operations of a single job and the disjunctive arcs E represent all pairs of operations to be performed on the same machine. All arcs emanating from a node have the processing time of the operation performed at that node as their length. The source has conjunctive arcs of length zero emanating to the first operation of every job, and the sink has conjunctive arcs coming from the last operation of every job. A feasible schedule corresponds to a selection of one arc from each disjunctive arc pair such that the resulting directed graph is acyclic, i.e. no loops can be found. The problem of minimizing the makespan reduces to finding a set of disjunctive arcs which minimizes the length of the critical path in the directed graph. An in-depth description of disjunctive graphs with regard to job-shop problems can be found, for instance, in the article about AS and JSP by Colorni et al. (1993).
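As an illustration of how a processing order implicitly defines earliest start times and the makespan, here is a minimal sketch that decodes a precedence-feasible operation sequence into a schedule. The data layout (`jobs[j][k] = (machine, duration)`), the function name `decode` and the tiny two-job instance are assumptions chosen for the example; they are not the chapter's notation or the MT10 data. The recurrence used for each start time is the longest-path computation in the directed graph obtained once the disjunctive arcs are oriented by the sequence.

```python
def decode(jobs, tour):
    """Compute earliest start times and the makespan for an operation sequence.

    jobs -- jobs[j][k] = (machine, duration) for the k-th operation of job j
    tour -- list of (j, k) pairs in which every job's operations appear in chain order
    """
    job_ready = [0] * len(jobs)      # completion time of each job's latest operation
    machine_ready = {}               # completion time of each machine's latest operation
    start = {}
    for j, k in tour:
        machine, duration = jobs[j][k]
        # An operation waits for its job predecessor and for its machine to be free.
        t = max(job_ready[j], machine_ready.get(machine, 0))
        start[(j, k)] = t
        job_ready[j] = machine_ready[machine] = t + duration
    return start, max(job_ready)


# Hypothetical 2 x 2 instance: job 0 visits machine 0 then 1, job 1 visits 1 then 0.
example_jobs = [[(0, 3), (1, 2)], [(1, 2), (0, 4)]]
example_tour = [(0, 0), (1, 0), (0, 1), (1, 1)]
example_start, example_makespan = decode(example_jobs, example_tour)  # makespan is 7
```

Because each operation is placed as early as its two predecessors allow, no preemption or overlap on a machine can occur as long as the sequence respects the chain order of every job.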

The hybrid-ACO algorithm

The algorithm consists of two parts. The first is the ACO part, where ants crawl over the search space trying to construct a feasible tour. When all ants have constructed their tours, the timestamps of the individual operations in the schedule defined by each tour have also been calculated, which allows us to calculate the makespan. The postprocessing part springs to life when there is a complete schedule to operate on. The (global) pheromone update of the ACO occurs only after the postprocessing has finished, because the postprocessing affects the makespan of the schedule formed by the tour of the ant. After the pheromone update, ACO continues with the next iteration.
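The order of the steps can be summarized in a short skeleton. This is only a sketch of the control flow described above: the four callables (`construct_tour`, `postprocess`, `makespan_of`, `update_pheromone`) are hypothetical placeholders supplied by the caller, and how each of them works depends on the ACO variant in use.

```python
def hybrid_aco(n_iterations, n_ants, construct_tour, postprocess, makespan_of,
               update_pheromone):
    """Skeleton of the hybrid loop; all four callables are caller-supplied placeholders."""
    best_tour, best_makespan = None, float("inf")
    for _ in range(n_iterations):
        # 1. ACO part: every ant constructs a feasible tour.
        tours = [construct_tour() for _ in range(n_ants)]
        # 2. Postprocessing part: local search may change a tour and its makespan.
        tours = [postprocess(tour) for tour in tours]
        scored = [(makespan_of(tour), tour) for tour in tours]
        # 3. Keep track of the best-so-far solution.
        it_makespan, it_tour = min(scored, key=lambda pair: pair[0])
        if it_makespan < best_makespan:
            best_makespan, best_tour = it_makespan, it_tour
        # 4. Global pheromone update, performed only after postprocessing,
        #    so that it sees the improved makespans.
        update_pheromone(scored, best_tour, best_makespan)
    return best_tour, best_makespan
```

Passing the scored tours of the iteration together with the best-so-far solution lets the same skeleton serve variants that update with the iteration-best ant, the best-so-far ant, or a ranked selection of ants.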

The postprocessing algorithm

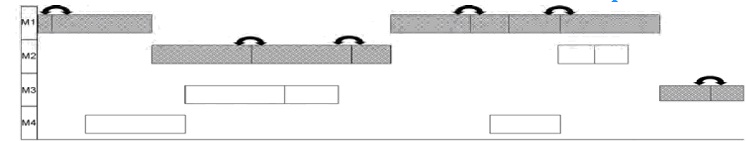

After all ants have constructed their tours, a postprocessing algorithm is applied. This algorithm is effectively a local search procedure, based upon the approach of Nowicki and Smutnicki (1996). The local search begins by identifying the critical path in the constructed schedule. The critical path can be decomposed into a number of blocks, where a block is a maximal sequence of adjacent operations that require the same machine. Block length can vary from just one operation to all operations that are scheduled on one machine. Within a block, operations are swapped. We start from the last block in the critical path that has a size larger than 1, and from the last operation in that block. The block size must be larger than 1 since otherwise no swap can be made. The identified operation is swapped with its predecessor in the same block, and the necessary changes are made to the tour of the ant as well as to the timestamps of the scheduled operations. If the swap improves the makespan, it is accepted; otherwise the swap is undone and the next pair in the block is up for swapping. If a block contains no more swaps, we move to the preceding block. Note that an accepted swap means that the critical path may change, so a new critical path must be identified. If no swap of operations in the critical path improves the makespan, the local search ends. This means that the tour of an ant may change in the postprocessing part of the algorithm. The tours of the ants after the very first completed postprocessing run may differ radically from those produced by the first iteration of the ACO, but subsequent postprocessing runs are much easier on the ants and do not disturb the pheromone trails too much. Figure 1 shows a critical path and possible swaps for an example schedule.
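The procedure described above can be sketched as follows. This is a minimal illustration, not the chapter's implementation: it stores the schedule as one operation sequence per machine rather than as an ant tour, and it assumes that every job visits each machine at most once (as in MT10) and that processing times are positive, so that swapping two adjacent critical operations keeps the schedule acyclic. All function names and the small test instance are hypothetical.

```python
def evaluate(jobs, seqs):
    """Return (start, makespan) for machine sequences seqs[m] = [(job, k), ...]."""
    next_k = [0] * len(jobs)                 # next unscheduled operation of each job
    next_i = {m: 0 for m in seqs}            # next unscheduled slot on each machine
    job_ready = [0] * len(jobs)
    mach_ready = {m: 0 for m in seqs}
    start = {}
    total = sum(len(seq) for seq in seqs.values())
    while len(start) < total:
        progressed = False
        for m, seq in seqs.items():
            # Schedule an operation once it is next on its machine and in its job.
            while next_i[m] < len(seq) and seq[next_i[m]][1] == next_k[seq[next_i[m]][0]]:
                j, k = seq[next_i[m]]
                t = max(job_ready[j], mach_ready[m])
                start[(j, k)] = t
                job_ready[j] = mach_ready[m] = t + jobs[j][k][1]
                next_k[j] += 1
                next_i[m] += 1
                progressed = True
        if not progressed:
            raise ValueError("machine sequences contain a cycle")
    return start, max(job_ready)


def critical_blocks(jobs, seqs, start):
    """Trace one critical path backwards and split it into same-machine blocks."""
    end = {(j, k): t + jobs[j][k][1] for (j, k), t in start.items()}
    mpred = {seq[i]: seq[i - 1] for seq in seqs.values() for i in range(1, len(seq))}
    op = max(end, key=end.get)               # an operation finishing at the makespan
    path = [op]
    while start[op] > 0:
        mp = mpred.get(op)
        # Follow the machine predecessor if it is tight, else the job predecessor.
        op = mp if mp is not None and end[mp] == start[op] else (op[0], op[1] - 1)
        path.append(op)
    blocks = []
    for op in reversed(path):
        m = jobs[op[0]][op[1]][0]
        if blocks and blocks[-1][0] == m:
            blocks[-1][1].append(op)
        else:
            blocks.append((m, [op]))
    return blocks


def local_search(jobs, seqs):
    """Swap adjacent operations in critical blocks, last block first, until no gain."""
    seqs = {m: list(seq) for m, seq in seqs.items()}
    start, best = evaluate(jobs, seqs)
    improved = True
    while improved:
        improved = False
        for m, block in reversed(critical_blocks(jobs, seqs, start)):
            for b in range(len(block) - 1, 0, -1):      # empty for blocks of size 1
                i = seqs[m].index(block[b])
                seqs[m][i - 1], seqs[m][i] = seqs[m][i], seqs[m][i - 1]
                new_start, new_best = evaluate(jobs, seqs)
                if new_best < best:                     # accept, then re-trace the path
                    start, best, improved = new_start, new_best, True
                    break
                seqs[m][i - 1], seqs[m][i] = seqs[m][i], seqs[m][i - 1]  # undo
            if improved:
                break
    return seqs, best
```

On a hypothetical instance with `jobs = [[(0, 3), (1, 2)], [(0, 2), (1, 4)]]` and starting sequences `{0: [(0, 0), (1, 0)], 1: [(1, 1), (0, 1)]}`, the initial makespan is 11 and a single accepted swap on machine 1 reduces it to 9, after which no further swap on the new critical path helps.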

What is visibility?

An additional problem when working with ant systems is that of visibility. There are similarities between the priority rules used in heuristic approaches and the visibility of a single ant: both try to evaluate and choose where to go next from a specific node. Usually visibility is referred to as the neighbourhood of the ant, i.e. the nodes that are close to the node the ant is currently on. It is a measure of what nodes the ant can see around it when standing on a specified node. In equation 1, the parameter ηij is our measure of visibility. In TSP problems the meaning is clear and all values of ηij can be computed a priori, since the node distances are known. No matter which node the ant stands on, the distance to all other nodes can be fetched from a pre-calculated distance table. When it comes to schedules, it is not entirely straightforward what visibility is and what effect it has on computations with regard to ACO. The distance in time units from a node in the tour to the next is not known until the timestamps for the entire tour so far have been calculated. Another issue with ACO and the MT10 problem is that the tabu list (already visited nodes) alone is not enough. Since the tasks in every job have to be done in the correct order, that is, task A3 has to be done before A4 and so on, a candidate list is needed. The candidate list contains all the legal node choices an ant can make from the node it is currently standing on. This means that only the selection probabilities for the nodes in the candidate list need to be calculated, which speeds up the algorithm. In this case visibility for an ant is restricted to only the nodes in the candidate list.
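A minimal sketch of a candidate list and of selection probabilities restricted to it is given below. It uses the standard AS random-proportional rule, in which each candidate is weighted by its pheromone raised to a power alpha times its visibility raised to a power beta. The visibility used here, 1 / (1 + processing time), is only one illustrative choice that favours short operations; it is an assumption, as are the function names, the pheromone dictionary layout and the default parameter values.

```python
import random


def candidate_list(jobs, next_k):
    """Legal next operations: the first unscheduled operation of every unfinished job.

    jobs   -- jobs[j][k] = (machine, duration)
    next_k -- next_k[j] = index of job j's first unscheduled operation
    """
    return [(j, k) for j, k in enumerate(next_k) if k < len(jobs[j])]


def selection_probabilities(current, cands, tau, jobs, alpha=1.0, beta=1.0, tau0=1.0):
    """Random-proportional rule evaluated over the candidate list only."""
    weights = []
    for j, k in cands:
        duration = jobs[j][k][1]
        eta = 1.0 / (1.0 + duration)   # assumed visibility; +1 avoids division by zero
        pheromone = tau.get((current, (j, k)), tau0)
        weights.append(pheromone ** alpha * eta ** beta)
    total = sum(weights)
    return [w / total for w in weights]


def choose_next(current, cands, tau, jobs, rng=random):
    """Draw the next operation according to the selection probabilities."""
    probs = selection_probabilities(current, cands, tau, jobs)
    return rng.choices(cands, weights=probs, k=1)[0]
```

Because the probabilities are normalized over the candidate list alone, operations whose job predecessors are still unscheduled can never be drawn, which is how the precedence constraints are enforced during tour construction.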