
SmartSnap: addressing 3D pointing anisotropy in Virtual Reality CAD application

Authors: Michele Fiorentino, Giuseppe Monno, Antonio E. Uva

Source: http://www.graphicon.ru/2004/Proceedings/Technical/s1[5].pdf

Abstract

Developing an industry-compliant virtual reality CAD application is difficult because of the limited understanding of interaction techniques in virtual environments. The aim of this paper is to give a qualitative and quantitative evaluation of human precision with 3D direct spatial input during a VR modeling session. In particular, we focused on a set of frequent tasks performed in a 3D CAD system: pointing, picking and line sketching. For this purpose, we developed a specific application called SpaceXperiment to support our experiments. All the tests performed show that the user loses precision more easily along the direction perpendicular to the projection screen, which we call the depth direction. The pointing precision and accuracy values measured by SpaceXperiment allowed the design of drawing aids such as ‘snaps’ and ‘grips’, which are essential to assist the user during modeling sessions in a 3D CAD environment. The quantitative results of the tests led to the development of an innovative ellipsoid-shaped snap called “SmartSnap”, which compensates for the high pointing anisotropy while preserving high snap resolution. The presented results offer a significant contribution for developers of modeling applications in Virtual Reality.

1. INTRODUCTION

One of the most limiting factors for 3D modeling, as established by many studies [24] [12], is the use of two-degree-of-freedom devices (monitor, keyboard and mouse) for creating 3D forms.

Nowadays, virtual reality technology provides an enhanced interface based on stereographic vision, head tracking, real-time interaction and six-degree-of-freedom (6DOF) input. This VR-based interface is thus a candidate to be the ideal workspace for next-generation 3D modeling applications.

The virtual environment interaction paradigm is often based upon direct manipulation, which allows an effective transfer of object manipulation skills developed in the physical world into human-computer interaction (HCI). Direct object manipulation generally involves three elements: a controller, the physical device held in the user's hand; a cursor, which is the virtual representation of the user's finger; and a target, which is a particular hot spot in the virtual environment [21].

Thanks to recent developments in tracking system technology, new insights into HCI in virtual reality can be gained. Previous tracking systems, such as magnetic and acoustic ones, suffered from drawbacks in precision, latency, resolution and repeatability of measurements [15]. For this reason, much of the research effort was directed towards tracking error reduction, filtering and position prediction. Newly developed high-precision optical systems [1] now allow a better understanding of human interaction through spatial input, because different factors can be isolated and analyzed: limb posture, speed, and direction.

The term spatial input or 3D input refers in this work to interfaces based upon free-space technologies such as camera-based or magnetic trackers, as opposed to desktop devices such as the mouse or the Spaceball [10].

In particular, interaction in Virtual Environments (VE) has been shown to be strictly application- and hardware-dependent [3]. The literature on the specific modeling purpose is limited to isolated experimental implementations, and the results are far from systematic or generally applicable. The not yet fully explored 3D input/output interface currently restricts the use of Virtual Reality (VR) to the academic and research world.

In order to develop a virtual reality CAD application that really takes advantage of stereoscopic visualization and six-degree-of-freedom (6DOF) input, a contribution is needed to the understanding of the principles and rules that govern modeling tasks in a virtual environment.

The authors' ongoing development of the Spacedesign application [6], a VR-based CAD system (VRAD), has raised several issues concerning interface design optimization, such as widget and snap dimensions, tracking filtering and recognition of the user's intention. A VR application called SpaceXperiment has therefore been developed to provide a configurable and systematic test bed for 3D modeling interaction. The influencing parameters and the correlations among them are analyzed and discussed in order to improve the VRAD Spacedesign application.

The purpose of this work is to examine human bias, consistency and individual differences when pointing, picking and sketching lines in a virtual environment, and thereby to provide useful information for future computer interface design.

The goal of this work is to study these elements specifically for a modeling application. We do this by collecting significant data from user sessions and by configuring parameters and tools to improve the effectiveness of the interface. The literature on this topic is wide but very scattered, as discussed in the next section.

2. RELATED WORK

Human-computer interaction within a 2D environment has been an object of study since the introduction of the computer. The simplest form of interaction, pointing, has been investigated for different devices by many authors using Fitts' law in various forms [7] [13].

Hinckley [9] presents an interesting survey of design issues for developing three-dimensional user interfaces, providing suggestions and examples. The main contribution is the synthesis of scattered results, observations and examples from the available literature into a common framework. This framework is intended as a guide for researchers or system builders who may not be familiar with spatial input.

Graham et al. [8] explore 3D spatial pointing by comparing virtual and physical interaction, using a semi-transparent monoscopic display and a 3D tracking system. Two different approaches are tested. In the virtual mode, only computer-generated images are displayed. In the physical mode, the graphics display is turned off and the subjects can see through the mirror to the workspace below. The results suggest that movement planning and kinematic features are similar in both conditions, but the virtual task takes more time, especially for small targets. Moreover, changes in target distance and width affect the spatio-temporal characteristics of the pointing movement.

Bowman et al. [3] develop a test bed to compare different basic VR interaction techniques for pointing, selection and manipulation. The authors note that performance depends on a complex combination of factors, including the specific task, the virtual environment and the user. Therefore, applications with different requirements may need different interaction techniques.

Poupyrev et al. [17] develop a test bed that evaluates manipulation tasks in VR in an application-independent way. The framework provides a systematic task analysis of immersive manipulation and suggests a user-specific, non-Euclidean system for measuring VR spatial relationships.

Mine et al. [14] explore manipulation in immersive virtual environments using the user's body as the reference system. They present a unified framework for VE interaction based on proprioception, a person's sense of the position and orientation of his or her body and limbs. Tests are carried out on the body-relative interaction techniques presented.

Wang et al. [25] investigate the combined effects of controller, cursor and target size on multidimensional object manipulation in a virtual environment. Tests revealed that equal controller and cursor sizes improved object manipulation speed. Equal cursor and target sizes generally improved object manipulation accuracy, regardless of their absolute sizes.

Paljic [16] reports two studies on the Responsive Workbench. The first study investigates the influence of manipulation distance on performance in a 3D location task. The results indicate that direct manipulation and manipulation at a 20 cm distance are more efficient than manipulation at 40 and 55 cm. The second study investigates the effect of two factors. The first factor is the presence or absence of a visual clue. The second is the scale value (1 or 1.5) used to map the user's movements to the pointer. Task performance is significantly lower when using the visual clue and when using the 1.5 scale.

Boritz [2] investigates the ability to interactively locate points in a three-dimensional computer environment using a six-degree-of-freedom input device. Four different visual feedback modes are tested: fixed-viewpoint monoscopic perspective, fixed-viewpoint stereoscopic perspective, head-tracked monoscopic perspective and head-tracked stereoscopic perspective. The results indicate that stereoscopic performance is superior to monoscopic performance and that asymmetries exist both across and within axes. Head tracking had no appreciable effect on performance.

Zhai [28] presents an empirical evaluation of a three-dimensional interface, decomposing tracking performance into six dimensions (three in translation and three in rotation). Tests revealed that subjects' tracking errors in the depth dimension were about 45% (with no practice) to 35% (with practice) larger than those in the horizontal and vertical dimensions. Subjects also initially had larger tracking errors along the vertical axis than along the horizontal axis, likely due to their attention allocation strategy. Analysis of rotation errors yielded a similar anisotropic pattern.

Moreover, many authors [19][4][11][5] have developed virtual reality based CAD applications, describing the specific implementation and the results achieved. However, such contributions are often single, isolated studies that lack a systematic performance evaluation and do not define precise guidelines.

The related work presented shows that the literature offers several approaches to understanding human interaction in virtual reality. Previous work has also shown that interaction techniques in virtual environments are complex to analyze and evaluate. This complexity comes from the variety of hardware configurations (immersive VR, semi-immersive VR, desktop VR, type of input devices) and of specific applications. Some approaches try to be more general by decomposing each interaction into smaller tasks, but application-specific studies are still necessary. This paper gives a practical and substantial contribution for CAD modeling applications.

In the next section we illustrate our experimental approach to interaction evaluation within our VR CAD system. In particular, we analyze the pointing, picking and line sketching interaction tasks.

3. EXPERIMENT DESIGN

The aim of this paper is to give a qualitative and quantitative evaluation of human performance in a virtual environment while performing modelling tasks. For our tests we selected a set of the most frequent tasks performed in a CAD system: pointing, picking and line sketching. These tasks are similar for both 2D and 3D CAD systems. Using a stereoscopic display and a 6DOF tracked pointer, the following tests were carried out:

- the measurement of the user's ability to point at a fixed point;

- the analysis of the lines sketched by the user when following a virtual geometry, in order to discover preferred sketching methods and modalities;

- the user's ability to pick points in 3D space, in order to evaluate human performance in object selection.

International Conference Graphicon 2004, Moscow, Russia, http://www.graphicon.ru/

The SpaceXperiment application was used for these tests. The position, orientation and timestamp of the pointer (pen tip) were recorded in every test for subsequent analysis.

3.1 Participants

Volunteer students from the faculty of mechanical engineering and architecture were recruited for the tests. All participants were regular users of a Windows interface (mouse and keyboard), and none had been in a VR environment before. All users were given a demonstration of the SpaceXperiment system. They were then allowed to interact in the virtual workspace for approximately 20 minutes to become acquainted with the perception of the virtual 3D space. Moreover, all users performed the set of tests twice: the first set was considered a practice session and the second a data collection session. All subjects were right-handed, had normal or corrected-to-normal vision, and had experience using a computer. Informed consent was obtained before the experiment.

3.2 Apparatus

The experiments were conducted in the VR3lab at the Cemec of the Politecnico di Bari, on the VR facility that normally runs the Spacedesign application. Our experimental test bed comprises a hardware system and a software application called SpaceXperiment.

3.2.1 Hardware

The virtual reality system used for the experiments is composed of a vertical screen of 2.20 m x 1.80 m, two polarized projectors and an optical 3D tracking system by ART [1]. Horizontal and vertical polarized filters, in conjunction with the user's glasses, provide the so-called passive stereo vision. The tracking system uses two infrared (IR) cameras and IR-reflective spheres, the markers, and calculates the position and orientation of the markers in space by triangulation. The markers are 12 mm in diameter. They are attached to each interaction device in a unique pattern that allows the system to identify the device. The user holds a transparent Plexiglas pen with 3 buttons, which is represented in VR by a virtual simulacrum. The user is also provided with a virtual palette (a Plexiglas sheet) that can be used to retrieve information and to display the virtual menus and buttons (Figure 1, 2).

A DTrack motion analysis system, based on two ARTtrack1 cameras, records the three-dimensional position of the infrared markers placed on the user's devices and stores the results in data files for further analysis.

A stereoscopic, head-coupled graphical display was presented on the screen and viewed through orthogonally polarized glasses. The experiment was conducted in a semi-dark room.

3.2.2 Software implementation

SpaceXperiment is an application dedicated to testing 3D interaction in a virtual reality environment. It is built upon the Studierstube library [20], which provides the VR interface through the so-called pen-and-tablet metaphor. The non-dominant hand holds the transparent palette with virtual menus and buttons, while the other hand holds the pen for application-related tasks.

The incoming data from the tracking system are sent directly over an Ethernet network to the SpaceXperiment application via the OpenTracker library. OpenTracker is an open software platform based on an XML configuration syntax. It handles tracking data from different sources and controls their transmission and filtering.

The system is set up so that the size of the virtual objects displayed on the screen corresponds to their real dimensions. Because SpaceXperiment and Spacedesign share the same platform, test results from the former can easily be applied to the latter.

3.2.3 Tracking system calibration

After following the calibration procedure for the tracking system described by the manufacturer, we performed a series of tests to verify the precision and accuracy of the ART tracking system. We fixed the markers in 10 different positions within the tracking volume and recorded the system's measurements.

We found that the system achieves an average precision of 0.8 mm in the position of the target. This result is consistent with the manufacturer's specification (0.4 mm), because our system has only two cameras and the technical specification refers to four. In any case, this error is far lower than the expected measured values. We can therefore be confident that our subsequent evaluations will be free of systematic measurement error.
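The calibration check above can be sketched as follows. The text does not say how the 0.8 mm figure was computed. This sketch therefore assumes that precision is the mean Euclidean distance of repeated samples of a static marker from their centroid, averaged over all marker placements. The function names and the sample data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # position in mm


def centroid(samples: Sequence[Point]) -> Point:
    """Mean position of repeated samples of one static marker."""
    n = len(samples)
    return (
        sum(p[0] for p in samples) / n,
        sum(p[1] for p in samples) / n,
        sum(p[2] for p in samples) / n,
    )


def static_precision(samples: Sequence[Point]) -> float:
    """Mean distance (mm) of the samples from their own centroid (assumed metric)."""
    c = centroid(samples)
    return sum(math.dist(p, c) for p in samples) / len(samples)


def average_precision(placements: Sequence[Sequence[Point]]) -> float:
    """Average of the per-placement precision over all marker placements."""
    return sum(static_precision(s) for s in placements) / len(placements)


# Two hypothetical marker placements (mm), four samples each.
placement_a: List[Point] = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
placement_b: List[Point] = [(100.0, 50.0, 20.0)] * 4
print(round(average_precision([placement_a, placement_b]), 3))  # 0.354
```

Using the centroid rather than a surveyed reference position measures only repeatability (precision), not accuracy. Accuracy would require knowing the true marker positions.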

4. EXPERIMENT 1: POINTING STATIONARY MARKERS

In this first experiment we investigated the ability of the user to be ‘accurate’ in a pointing task. This precision is evaluated statistically while the user points at a marker fixed in space for a limited amount of time.

4.1 Procedure

The user is presented with a virtual marker in the 3D workspace and asked to place the tip of the virtual pen as close as possible to the centre of the marker. Once the pen tip is stable at the centre of the marker, the user is asked to press the pen button and keep the pen in the same position for 5 seconds. The pointing task is repeated for 3 points in different positions in space:

4.2 Results

Recording a position for 5 seconds on our system yields approximately 310 sample points. We therefore applied a statistical analysis to the recorded data to evaluate the mean, the variance and the deviation from the target point. To detect any anisotropy in the error values, the position vectors are projected onto three orthogonal reference directions:

From Figure 3 it is possible to notice that:

Table 1 - Statistical error values (mm) for the pointing test

| | Total deviance | Horiz. range (95%) | Vert. range (95%) | Depth range (95%) |
|---|---|---|---|---|
| Max Error | 17.31 | 7.28 | 9.53 | 19.50 |
| Mean Error | 6.21 | 4.81 | 5.29 | 10.12 |

Table 2 - Average ratios between error ranges along different directions

| | Depth/Vertical | Depth/Horizontal | Horizontal/Vertical |
|---|---|---|---|
| Max Error | 2.0 | 2.7 | 0.8 |
| Mean Error | 1.9 | 2.7 | 0.9 |
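The projection step described in Section 4.2 can be sketched as below. The sketch assumes a screen-aligned frame with x horizontal, y vertical and z along the depth direction. It interprets the ‘range (95%)’ of Table 1 as the spread between the 2.5th and 97.5th percentiles of the projected error. Both are assumptions, and the function names are hypothetical.

```python
import statistics
from typing import Dict, Sequence, Tuple

Vec = Tuple[float, float, float]

# Assumed screen-aligned reference frame (unit vectors).
DIRECTIONS: Dict[str, Vec] = {
    "horizontal": (1.0, 0.0, 0.0),
    "vertical": (0.0, 1.0, 0.0),
    "depth": (0.0, 0.0, 1.0),  # perpendicular to the projection screen
}


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def percentile(values: Sequence[float], q: float) -> float:
    """Percentile with linear interpolation between order statistics, q in [0, 100]."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def directional_stats(samples: Sequence[Vec], target: Vec) -> Dict[str, Dict[str, float]]:
    """Mean, variance and 95% range of the pen-tip error along each reference direction."""
    errors = [(p[0] - target[0], p[1] - target[1], p[2] - target[2]) for p in samples]
    stats: Dict[str, Dict[str, float]] = {}
    for name, axis in DIRECTIONS.items():
        proj = [dot(e, axis) for e in errors]  # signed error component along this axis
        stats[name] = {
            "mean": statistics.fmean(proj),
            "variance": statistics.pvariance(proj),
            "range95": percentile(proj, 97.5) - percentile(proj, 2.5),
        }
    return stats
```

A ratio in the style of Table 2 then follows from dividing two ranges, for example `stats["depth"]["range95"] / stats["vertical"]["range95"]`.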

5. EXPERIMENT 2: SKETCHING LINES

The intention of this test is to evaluate the user's ability to sketch a reference geometry visualised in the 3D environment as closely as possible.

5.1 Procedure

The user must follow a virtual geometry displayed in the 3D workspace as accurately as possible. Moving the pen with its button pressed traces a 3D freehand sketch. As soon as the button is released, a new geometry is shown and the tracing task is repeated. The sequence of geometries is a horizontal line, a vertical line, a depth line (a line drawn ‘out of’ the screen), and a rectangular frame aligned with the screen plane. The user is required to perform the experiment five times with different modalities, as follows:

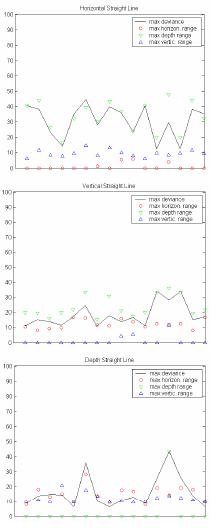

5.2 Results

The deviation of the sketched line from its reference geometry shows how sketching precision and accuracy vary with the sketching direction. As the error metric we used the deviance, which is the distance between the pen tip and its closest point on the reference geometry. The range of the deviance error is evaluated in each reference direction: horizontal range, vertical range and depth range (Figure 4, Table 3).
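The deviance metric can be sketched for references made of straight segments. Straight lines are single segments, and the rectangular frame can be passed as a closed polyline by repeating the first vertex at the end. This sketch assumes that the per-axis range is the spread (max minus min) of the component of the vector from the closest reference point to the pen tip along x (horizontal), y (vertical) and z (depth). The frame and all function names are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def closest_on_segment(p: Vec, a: Vec, b: Vec) -> Vec:
    """Closest point to p on segment a-b (projection parameter clamped to [0, 1])."""
    ab = sub(b, a)
    denom = dot(ab, ab)
    t = 0.0 if denom == 0.0 else max(0.0, min(1.0, dot(sub(p, a), ab) / denom))
    return (a[0] + t * ab[0], a[1] + t * ab[1], a[2] + t * ab[2])


def closest_on_polyline(p: Vec, vertices: Sequence[Vec]) -> Vec:
    """Closest point on a polyline given by at least two vertices."""
    candidates = [closest_on_segment(p, a, b) for a, b in zip(vertices, vertices[1:])]
    return min(candidates, key=lambda q: math.dist(p, q))


def sketch_errors(stroke: Sequence[Vec], reference: Sequence[Vec]) -> Dict[str, float]:
    """Maximum deviance and per-axis ranges of the deviation vectors (mm)."""
    deviations = [sub(p, closest_on_polyline(p, reference)) for p in stroke]
    ranges = [max(d[i] for d in deviations) - min(d[i] for d in deviations) for i in range(3)]
    return {
        "max_deviance": max(math.hypot(*d) for d in deviations),
        "horizontal_range": ranges[0],
        "vertical_range": ranges[1],
        "depth_range": ranges[2],
    }
```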

The results obtained support the following considerations:

5.2.1 Anisotropic error

The higher error along the depth direction, already noticed in Experiment 1, is confirmed. The error along the depth direction is about 1.8-2.6 times the error along the horizontal and vertical directions (Table 4).

Table 3 - Error values (mm) for Mode A

| | Total deviance | Horiz. range | Vert. range | Depth range |
|---|---|---|---|---|
| Max Value | 40.8 | 22.6 | 17.8 | 41.9 |
| Mean Value | 21.8 | 13.7 | 10.8 | 28.6 |

Table 4 - Average ratios between error ranges along different directions for Mode A

| | Depth/Vertical | Depth/Horizontal | Horizontal/Vertical |
|---|---|---|---|
| Max Error | 2.3 | 1.8 | 1.3 |
| Mean Error | 2.6 | 2.1 | 1.3 |

5.2.2 Direction influence

Each user is more comfortable sketching the same line in his or her favourite direction. If the user sketches the line with the starting and ending points inverted, the errors are clearly larger along all three reference directions. In our tests, inverting the direction increases the error magnitude by an average factor of 1.9 (see Table 5).

Table 5 - Error ratios for reversed-direction sketching (Mode B) over normal sketching (Mode A)

| | Total deviance | Horiz. range | Vert. range | Depth range |
|---|---|---|---|---|
| Reversed/Normal | 1.9 | 1.2 | 1.3 | 2.1 |

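A noticeable result is that the inversion affects the error along the depth direction more than along the other reference directions. The depth error roughly doubles (ratio 2.1), whereas the horizontal and vertical errors grow by a factor of only 1.2 to 1.3. This is additional confirmation that the user loses precision more easily along the depth direction.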

5.2.3 Speed influence

Our results show that sketching speed does not influence the error in a predictable way. We tested the usual sketching patterns at low, normal and high speed (Modes C, D and E). For most users the error magnitude increases at both high and low speed. An increase in error can be expected at high speed, but not at low speed. This behaviour may be explained by the fact that a moderate speed tends to stabilize the vibrations of the human hand.
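The sketching speeds compared here (and reported in Table 8) can be estimated from the recorded timestamps and pen-tip positions. The text does not describe how speed was computed. This sketch assumes that the maximum is the largest speed between consecutive samples and that the average is the total path length divided by the total duration. It also assumes timestamps in seconds and positions in mm. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]
Sample = Tuple[float, Vec]  # (timestamp in s, pen-tip position in mm): assumed units


def speed_profile(samples: Sequence[Sample]) -> Tuple[float, float]:
    """Return (maximum, average) pen-tip speed in mm/s for one stroke."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    path = 0.0
    peak = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0.0:
            raise ValueError("timestamps must be strictly increasing")
        step = math.dist(p0, p1)
        path += step                   # accumulate path length
        peak = max(peak, step / dt)    # finite-difference speed between samples
    duration = samples[-1][0] - samples[0][0]
    return peak, path / duration
```

Finite differences amplify tracking jitter, so on real data the maximum would likely be computed after some smoothing.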

6. EXPERIMENT 3: PICKING CROSS HAIR MARKERS

The intention of this test is to evaluate the user's ability to pick a three-dimensional cross-hair target fixed at a random position. We analyse both precision and time performance.

6.1 Procedure

A semi-transparent [26] cross-hair appears at a random position in the workspace, together with a highlighted parallelepiped representing the target bounding box, as shown in Figure 2. The user picks the centre of the target using the pen button. The picking operation is repeated for ten different points, and the user must return to a ‘home’ position before picking the next target. Different sounds accompany each step and guide the user during the test. The picking position, the time to pick and the time to enter the target's bounding box are recorded in a text file during every test session.

6.2 Results

The time interval to move from the ‘home’ position to the target bounding box is related to the reaction time and indicates the maximum velocity of the user's movements. The time to click the centre of the marker shows how fast the user can perform accurate movements. An analysis of these parameters yielded the following results:

6.2.1 Deviance:

The error values are shown in Table 6. As in Experiments 1 and 2, the error along the depth direction is considerably higher than the error along the other directions.

Table 6 - Statistical error values for the picking test

| | Deviance (mm) | Horiz. error (mm) | Vert. error (mm) | Depth error (mm) | Depth error/Horiz. error | Depth error/Vert. error |
|---|---|---|---|---|---|---|
| Max Value | 24.04 | 12.90 | 16.23 | 32.25 | 2.5 | 2.0 |
| Avg. Value | 7.26 | 1.69 | 2.32 | 2.97 | 1.8 | 1.3 |

6.2.2 Time considerations:

The time interval needed to perform the picking operation can be split into two contributions:

Time to pick = Time to reach the bounding box + Time spent inside the bounding box

The corresponding average times were evaluated by statistical analysis and are shown in Table 7.

Table 7 - Time values (milliseconds) for the picking test

| | Average | Max | Min |
|---|---|---|---|
| Time to reach target bounding box | 1207 | 2448 | 640 |
| Time inside target bounding box | 1914 | 3271 | 750 |
| Time to pick (total) | 3121 | 5016 | 1703 |
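The decomposition "time to pick = time to reach the bounding box + time spent inside the bounding box" can be computed from a logged trial as sketched below. The sketch makes four assumptions. The bounding box is axis-aligned. Timing starts at the first logged sample (the user leaving ‘home’). Timestamps are integer milliseconds. The entry time is the first sample found inside the box, so a later exit and re-entry still count as time inside. All names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec = Tuple[float, float, float]
Sample = Tuple[int, Vec]  # (timestamp in ms, pen-tip position in mm)


def inside_box(p: Vec, box_min: Vec, box_max: Vec) -> bool:
    """True if p lies inside the axis-aligned bounding box (boundary included)."""
    return all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))


def split_pick_time(
    samples: Sequence[Sample], box_min: Vec, box_max: Vec, t_click: int
) -> Tuple[int, int, int]:
    """Return (time to reach box, time inside box, total time to pick) in ms."""
    t_start = samples[0][0]
    t_enter: Optional[int] = next(
        (t for t, p in samples if inside_box(p, box_min, box_max)), None
    )
    if t_enter is None or t_enter > t_click:
        raise ValueError("bounding box not entered before the click")
    reach = t_enter - t_start
    inside = t_click - t_enter
    return reach, inside, reach + inside
```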

Our tests have shown, as expected, that the time needed to reach the bounding box of the target grows with the distance of the target from the ‘home position’, in accordance with the previously mentioned Fitts' law. Moreover, the error magnitude decreases with the time spent inside the bounding box more than with the total time to pick. This can be explained by the fact that the user moves quickly to the bounding box and then, once inside, points precisely at the target.
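For reference, Fitts' law [7] can be written in the Shannon formulation discussed by MacKenzie [13]. It relates the movement time $MT$ to the target distance $D$ and the target width $W$ through two empirically fitted constants $a$ and $b$. It predicts that movement time grows logarithmically, rather than strictly linearly, with distance:

$$MT = a + b \log_2\left(\frac{D}{W} + 1\right)$$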

7. DISCUSSION

The high tracking precision available nowadays with optical systems allowed us to evaluate the human interaction process in VR.

All the tests performed show that the user loses precision more easily along one particular direction: the direction perpendicular to the projection screen, which we call the depth direction. The first experiment, on static pointing, validated the anisotropy hypothesis. It showed an average error of 10.1 mm along the depth direction, compared with 4.8 mm and 5.3 mm along the horizontal and vertical directions, respectively.

The measured error is considerably larger than the tracking precision evaluated in Section 3.2.3. We can therefore assume that our results are not affected by systematic measurement error.

The second experiment, on line sketching, confirmed the results of the previous experiment. As expected, the error increases (28.6 mm, 13.7 mm and 10.8 mm along the depth, horizontal and vertical directions), because the user's hand is now moving. However, the ratio between the error along the depth direction and the errors along the vertical and horizontal directions does not change considerably.

In our opinion the third experiment is the most significant, because it concerns the most frequently performed task in a VR environment: picking, or selection. This experiment also validated the error anisotropy hypothesis, confirming the error ratios between the different directions.

8. APPLICATION OF RESULTS: SMARTSNAP

The main goal of the SpaceXperiment application is first to test and then to improve interfaces in order to increase the performance of VRAD systems.

The measured pointing precision and accuracy allow the optimization and calibration of smart drawing aids such as ‘snaps’ and ‘grips’. These aids are essential to assist the user during modeling sessions in a 3D CAD environment, because direct input tasks lack a physical support plane (like the mouse pad).

SpaceDesign already implemented ‘snaps’ as the natural extension of the usual 2D CAD ‘snap’. The original shape was a cube whose semi-edge was set empirically to 35 mm.

After this set of experiments we implemented a new tool based on the idea that the overall dimensions of this aid should be proportional to the average and maximum pointing error. Since our tests revealed that the pointing error is below 24 mm in 95% of cases, we introduced in SpaceDesign a ‘calibrated spherical snap’ (Fig. 7) with Radius = k x 24 (mm), where k > 1 is a ‘comfort’ multiplying factor. A reasonable value that seems to work well with our system is k = 1.2. This new design reduces the snap volume from 343000 mm³ to 100061 mm³, so the ‘calibrated spherical snap’ occupies 29% of the original ‘cube snap’ volume. This volume decrease translates into a finer resolution of the snap system. A finer resolution is very useful for complex models with a high density of possible snapping points.
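The volumes quoted above can be checked with a few lines of arithmetic. The check uses a cube of semi-edge 35 mm and a sphere of radius k x 24 mm with k = 1.2. The constant names are illustrative only.

```python
import math

K = 1.2                   # 'comfort' factor used in the text
ERROR_95_MM = 24.0        # pointing error not exceeded in 95% of cases
CUBE_SEMI_EDGE_MM = 35.0  # empirical semi-edge of the original cube snap


def cube_volume(semi_edge: float) -> float:
    """Volume of a cube given its semi-edge (half the edge length)."""
    return (2.0 * semi_edge) ** 3


def sphere_volume(radius: float) -> float:
    return 4.0 / 3.0 * math.pi * radius ** 3


cube = cube_volume(CUBE_SEMI_EDGE_MM)
sphere = sphere_volume(K * ERROR_95_MM)
print(round(cube), round(sphere), round(100 * sphere / cube))  # 343000 100061 29
```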

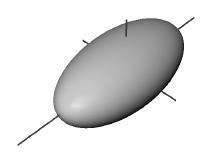

The next step was the design of an aid that takes into account the high pointing anisotropy encountered in all our experiments. Although VR offers the advantage of 3D perception, the results showed that the user judges the added depth dimension less well than the other two dimensions. We therefore introduced a modified ‘calibrated ellipsoid snap’ with the major axis aligned along the depth direction (Fig. 8). In this case, we define the three semi-axis lengths as follows:

RadiusDepth = k x 24(mm)

RadiusVertical = 0.5 x RadiusDepth = k x 12(mm)

RadiusHorizontal ≈ 0.4 x RadiusDepth = k x 10(mm)

With these dimensions, the volume of the snap (the volume of the ellipsoid) drops to 20846 mm³. This is 21% of the ‘calibrated spherical snap’ volume and 6% of the original ‘cube snap’ volume.
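The snap test implied by the ellipsoid definition can be sketched as follows. The sketch makes three assumptions: the ellipsoid is expressed in the screen frame (x horizontal, y vertical, z depth), it is centred on the pen tip, and among several candidate points the one with the smallest normalized ellipsoidal distance wins. The selection rule and all names are illustrative assumptions, not the SpaceDesign implementation.

```python
import math
from typing import Optional, Sequence, Tuple

Vec = Tuple[float, float, float]

K = 1.2
# Semi-axes (mm) from the text, in an assumed screen frame: x horizontal, y vertical, z depth.
SEMI_AXES: Vec = (K * 10.0, K * 12.0, K * 24.0)


def ellipsoid_volume(axes: Vec) -> float:
    a, b, c = axes
    return 4.0 / 3.0 * math.pi * a * b * c


def ellipsoidal_distance(cursor: Vec, point: Vec, axes: Vec = SEMI_AXES) -> float:
    """Normalized distance: <= 1 when `point` lies inside the ellipsoid centred on the cursor."""
    return math.sqrt(sum(((p - c) / r) ** 2 for p, c, r in zip(point, cursor, axes)))


def smart_snap(cursor: Vec, candidates: Sequence[Vec], axes: Vec = SEMI_AXES) -> Optional[Vec]:
    """Return the candidate closest in ellipsoidal distance, or None if none is inside."""
    best = min(candidates, key=lambda q: ellipsoidal_distance(cursor, q, axes), default=None)
    if best is None or ellipsoidal_distance(cursor, best, axes) > 1.0:
        return None
    return best
```

With these semi-axes, a candidate 25 mm in front of the pen tip along the depth direction is still captured, whereas one 20 mm to the side is not. This is the intended anisotropy.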

Finally, we performed a new set of tests to verify the effectiveness of the new snap design. We repeated the ‘picking cross-hair markers’ experiment, measuring the time needed to pick a marker with the snap activated. As expected, the time needed to reach the target grows with the distance of the target from the ‘home position’. However, the main result is that switching among the three snaps produces no significant time difference, even though the snap volume changes considerably, as illustrated above.

These results validate the introduction of the ‘calibrated ellipsoid snap’, called SmartSnap, in SpaceDesign.

9. CONCLUSIONS AND FUTURE WORK

High-precision tracking and inexpensive VR setups are becoming more and more widespread in industry and academia. In this paper we have developed the SpaceXperiment interaction test bed in order to improve the interaction techniques within our VR CAD system, SpaceDesign. The results achieved can be directly extended to other similar applications, since their context is general. We introduced the ‘calibrated ellipsoid snap’ to take into account the high pointing anisotropy while keeping a high resolution in the snap system.

We are currently testing a new SmartSnap design in which the axes and dimensions of the ellipsoid vary dynamically according to the position of the user's head and hands. We also intend to use other results of the experiments described in this paper, including the maximum and average speed values recorded during Experiment 2 (see Table 8). These results will help calibrate further aids and tools for sketching, such as filters to discard scattered tracking errors, line segmentation algorithms and user intention interpretation.

Table 8 - Average speed values (mm/s) for the three sketching speeds: slow, medium and fast

| | Mode C (slow) | Mode D (medium) | Mode E (fast) |
|---|---|---|---|
| Max speed for each user | 212.7 | 423.0 | 695.8 |
| Avg. speed for each user | 66.8 | 153.4 | 379.5 |

Acknowledgement

This work has been made possible thanks to the funding of the ‘Centro di Eccellenza di Meccanica Computazionale’ CEMEC (Bari, Italy).

10. REFERENCES

- [1] ART, Advanced Realtime Tracking GmbH, ARTtrack1 & DTrack IR Optical Tracking System, www.ar-tracking.de.

- [2] Boritz J., Booth K. S., “A Study of Interactive 3D Point Location in a Computer Simulated Virtual Environment”, in Proc. of the ACM Symposium on Virtual Reality Software and Technology '97, Lausanne, Switzerland, Sept. 15-17, pp. 181-187.

- [3] Bowman D., Johnson D., Hodges L. F., “Testbed evaluation of immersive virtual environments”, Presence: Teleoperators and Virtual Environments, Vol. 10, No. 1, 2001, pp. 75-95.

- [4] Dani T. H., Wang L., Gadh R., “Free-Form Surface Design in a Virtual Environment”, in Proc. of ASME '99 Design Engineering Technical Conferences, Las Vegas, Nevada, 1999.

- [5] Deisinger J., Blach R., Wesche G., Breining R., “Towards Immersive Modelling - Challenges and Recommendations: A Workshop Analysing the Needs of Designers”, Eurographics 2000.

- [6] Fiorentino M., De Amicis R., Stork A., Monno G., “Spacedesign: conceptual styling and design review in augmented reality”, in Proc. of ISMAR 2002, IEEE and ACM International Symposium on Mixed and Augmented Reality, Darmstadt, Germany, 2002, pp. 86-94.

- [7] Fitts P. M., “The information capacity of the human motor system in controlling the amplitude of movement”, Journal of Experimental Psychology, Vol. 47, No. 6, 1954, pp. 381-391.

- [8] Graham E. D., MacKenzie C. L., “Physical versus virtual pointing”, in Proc. of the SIGCHI Conference on Human Factors in Computing Systems: Common Ground, Vancouver, British Columbia, Canada, 1996, pp. 292-299.

- [9] Hinckley, Pausch, Goble, Kassell, “A Survey of Design Issues in Spatial Input”, in Proc. of ACM UIST'94 Symposium on User Interface Software & Technology, 1994, pp. 213-222.

- [10] http://www.3dconnexion.com/products.htm

- [11] Hummels C., Paalder A., Overbeeke C., Stappers P. J., Smets G., “Two-Handed Gesture-Based Car Styling in a Virtual Environment”, in Proc. of the 28th International Symposium on Automotive Technology and Automation (ISATA '97), D. Roller, 1997, pp. 227-234.

- [12] Deisinger J., Blach R., Wesche G., Breining R., Simon A., “Towards Immersive Modeling - Challenges and Recommendations: A Workshop Analyzing the Needs of Designers”, in Proc. of the 6th Eurographics Workshop on Virtual Environments, Amsterdam, June 2000.

- [13] MacKenzie I. S., “Fitts' law as a research and design tool in human-computer interaction”, Human-Computer Interaction, 7, 1992, pp. 91-139.

- [14] Mine M. R., Brooks F. P., Sequin C. H., “Moving objects in space: exploiting proprioception in virtual-environment interaction”, in Proc. of the 24th Annual Conference on Computer Graphics and Interactive Techniques, 1997.

- [15] Meyer K., Applewhite H., Biocca F., “A Survey of Position Trackers”, Presence: Teleoperators and Virtual Environments, Vol. 1, No. 2, 1992, pp. 173-200.

- [16] Paljic A., Burkhardt J.-M., Coquillart S., “A Study of Distance of Manipulation on the Responsive Workbench”, IPT 2002 Symposium (Immersive Projection Technology), Orlando, US, 2002.

- [17] Poupyrev I., Weghorst S., Billinghurst M., Ichikawa T., “A framework and testbed for studying manipulation techniques for immersive VR”, in Proc. of the ACM Symposium on Virtual Reality Software and Technology, Lausanne, Switzerland, 1997, pp. 21-28.

- [18] Purschke F., Schulze M., Zimmermann P., “Virtual Reality - New Methods for Improving and Accelerating the Development Process in Vehicle Styling and Design”, Computer Graphics International 1998, 22-26 June 1998, Hannover, Germany.

- [19] Sachs E., Roberts A., Stoops D., “3Draw: A Tool for Designing 3D Shapes”, IEEE Computer Graphics and Applications, 11, 1991, pp. 18-26.

- [20] Schmalstieg D., Fuhrmann A., Szalavari Z., Gervautz M., “Studierstube - An Environment for Collaboration in Augmented Reality”, in Proc. of CVE 96 Workshop, Nottingham, GB, 1996, pp. 19-20.

- [21] Wang Y., MacKenzie C. L., Summers V. A., “Object manipulation in virtual environments: human bias, consistency and individual differences”, in Proc. of ACM CHI'97 Conference on Human Factors in Computing Systems, 1997, pp. 349-350.

- [22] Wang Y., MacKenzie C. L., “Object Manipulation in Virtual Environments: Relative Size Matters”, in Proc. of CHI 1999, pp. 48-55.

- [23] Wesche G., Droske M., “Conceptual Free-Form Styling on the Responsive Workbench”, in Proc. of VRST 2000, Seoul, Korea, 2000, pp. 83-91.

- [24] Wesche G., Droske M., “Conceptual free-form styling on the responsive workbench”, in Proc. of VRST 2000, Seoul, Korea, 2000, pp. 83-91.

- [25] Wang Y., MacKenzie C. L., “Object Manipulation in Virtual Environments: Relative Size Matters”, CHI 1999, pp. 48-55.

- [26] Zhai S., Buxton W., Milgram P., “The ‘Silk Cursor’: investigating transparency for 3D target acquisition”, in Proc. of the SIGCHI Conference on Human Factors in Computing Systems: Celebrating Interdependence, 1994.

- [27] Zhai S., Milgram P., “Anisotropic human performance in six degree-of-freedom tracking: An evaluation of three-dimensional display and control interfaces”, IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans, Vol. 27, No. 4, 1997, pp. 518-528.

- [28] Zhai S., Milgram P., Rastogi A., “Anisotropic Human Performance in Six Degree-of-Freedom Tracking: An Evaluation of 3D Display and Control Interfaces”, IEEE Transactions on Systems, Man and Cybernetics, Part A: Systems and Humans, Vol. 27, No. 4, pp. 518-528, July 1997.