Source: http://www.generation5.org/

Machine vision is an incredibly difficult task: something that seems relatively trivial to humans is enormously complex for a computer to perform. This essay provides a simple introduction to computer vision and the sort of obstacles that have to be overcome.

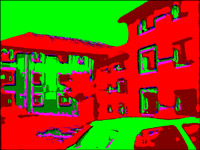

We will be looking at the picture above throughout the essay, with one change: we will treat it as a 640x480 image with 8 bits per colour channel (rather than the 200x150 image it actually is), since this is the "standard" size and colour depth of a computer image.


Why is this important? The first problem any vision system faces is the sheer size of the data it has to deal with. Doing the math, we have 640x480 = 307,200 pixels to begin with. This is multiplied by three to account for the red, green and blue (RGB) data, giving 921,600 bytes. So a single image is about 900KB of data!
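The arithmetic above can be checked with a few lines of Python. This is a minimal sketch that assumes, like the essay, one byte (8 bits) per colour channel and counts 1KB as 1,024 bytes, which is what makes 921,600 bytes come out at exactly 900KB. The helper name `frame_bytes` is our own.

```python
def frame_bytes(width, height, channels=3):
    """Bytes in one uncompressed frame, assuming one byte (8 bits) per channel."""
    return width * height * channels


pixels = 640 * 480
size = frame_bytes(640, 480)  # three channels: red, green and blue
print(f"{pixels:,} pixels -> {size:,} bytes = {size / 1024:.0f}KB")
# prints: 307,200 pixels -> 921,600 bytes = 900KB
```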

So, if we are looking at video at this resolution, we would be dealing with about 23MB/sec of information at 25 frames per second (or roughly 27.6MB/sec at the 30 frames per second used in the US)! We simply cannot process data at this resolution, colour depth and speed, so the obvious solution is to reduce them. Most vision systems work with greyscale video (one byte per pixel) at a resolution of 200x150. This greatly reduces the data rate, from about 23MB/sec to 0.75MB/sec, which most modern computers can manage very easily.
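The same arithmetic extends to video. This sketch assumes 25 frames per second for the first figure and 30 frames per second for the US figure, one byte per channel, and counts 1MB as 1,000,000 bytes; `data_rate_mb` is a hypothetical helper of our own.

```python
def data_rate_mb(width, height, channels, fps):
    """Uncompressed data rate in MB/sec (1MB = 1,000,000 bytes, 1 byte per channel)."""
    return width * height * channels * fps / 1_000_000


print(f"{data_rate_mb(640, 480, 3, 25):.2f}")  # 640x480 colour at 25 fps: 23.04
print(f"{data_rate_mb(640, 480, 3, 30):.2f}")  # 640x480 colour at 30 fps: 27.65
print(f"{data_rate_mb(200, 150, 1, 25):.2f}")  # 200x150 greyscale at 25 fps: 0.75
```

Dropping colour and lowering the resolution shrinks each frame from 921,600 to 30,000 bytes, a reduction of roughly 30 times.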

Of course, receiving the data is the smallest problem that vision systems face; it is processing it that takes the time. So how can we simplify the data further? I'll present two simple methods: edge detection and prototyping.

The process of edge detection is surprisingly simple. You look for large changes in intensity between the pixel you are studying and the surrounding pixels, using a filter matrix. Two common edge detection matrices are the Laplacian and Laplacian Approximation matrices. I'll use the Laplacian matrix here since its entries are all integers. The Laplacian matrix looks like this:

$$
\begin{bmatrix} 1 & 1 & 1 \\ 1 & -8 & 1 \\ 1 & 1 & 1 \end{bmatrix}
$$

Now, let us imagine we are looking at a pixel in a region bordering a black-to-white block. The pixel and its 8 surrounding neighbours would have the following values, where 255 is white and 0 is black:

$$
\begin{bmatrix} 255 & 255 & 255 \\ 255 & 255 & 255 \\ 0 & 0 & 0 \end{bmatrix}
$$

We then multiply each value by the corresponding entry of the Laplacian matrix:

$$
\begin{bmatrix} 255 & 255 & 255 \\ 255 & -2040 & 255 \\ 0 & 0 & 0 \end{bmatrix}
$$

We then add all of the values together and take the absolute value, giving |1275 - 2040| = 765. If this value is above our threshold (normally around 20-30, so this is well above it!), we say that the point denotes an edge. Try the same calculation on a neighbourhood in which every pixel is 255: the eight surrounding 255s exactly cancel the -2040, giving 0, so no edge is reported. The ED256 program lets you experiment with either the Laplacian or the Laplacian Approximation matrix, or even create your own.
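The Laplacian calculation above can be sketched in Python. This is a minimal illustration, assuming a greyscale image stored as a list of rows of 0-255 values; the function names and the default threshold of 25 (picked from the 20-30 range mentioned above) are our own choices. Border pixels, which lack a full 3x3 neighbourhood, are simply skipped.

```python
# Laplacian filter matrix from the text. Its entries sum to zero, so a
# perfectly uniform neighbourhood always gives a response of 0.
LAPLACIAN = [
    [1, 1, 1],
    [1, -8, 1],
    [1, 1, 1],
]


def laplacian_response(window):
    """Multiply a 3x3 window by the Laplacian entry by entry, sum, and take |total|."""
    total = 0
    for r in range(3):
        for c in range(3):
            total += LAPLACIAN[r][c] * window[r][c]
    return abs(total)


def edge_map(image, threshold=25):
    """Return a grid of booleans that is True wherever the response exceeds threshold.

    `image` is a list of rows of greyscale values (0-255). Border pixels have no
    full 3x3 neighbourhood, so they are left as False.
    """
    height, width = len(image), len(image[0])
    edges = [[False] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            window = [row[x - 1:x + 2] for row in image[y - 1:y + 2]]
            edges[y][x] = laplacian_response(window) > threshold
    return edges


# Worked example from the text: white above, black below.
border = [[255, 255, 255], [255, 255, 255], [0, 0, 0]]
uniform = [[255, 255, 255] for _ in range(3)]
print(laplacian_response(border))   # 765
print(laplacian_response(uniform))  # 0
```

Running `edge_map` on a sharp black-to-white step marks the pixels on both sides of the border, because each of them has three neighbours of the opposite colour.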

The second method, prototyping, came from somebody applying this sort of technique to a whole image to see whether there are data patterns within it. The results are different for every image, but on the whole, areas of an image can be classified very well using this technique. Here is a more detailed overview of the algorithm:

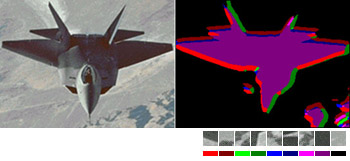

For another example, look at this picture of an F-22 Raptor. Notice how the red corresponds to the edges on the right wing (and, for some reason, the left too!) and the dark green to the left trailing edges/intakes and the right vertical tail. Dark blue marks horizontal edges, purple the dark aircraft body and black the land.
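The essay's own algorithm is given in the figure and is not reproduced here. As a rough illustration of the general idea only, the sketch below assumes that prototypes are found by plain k-means clustering of 3x3 greyscale neighbourhoods, seeded by farthest-first selection so the result is deterministic. The clustering method, the seeding and every function name are our assumptions, not the essay's method.

```python
# Hypothetical sketch: k-means prototypes over 3x3 greyscale neighbourhoods.
# This is an assumed stand-in for the essay's algorithm, not a reproduction of it.


def patches(image):
    """Yield ((y, x), patch) for each interior pixel, patch being its 3x3 window flattened."""
    height, width = len(image), len(image[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            patch = [image[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            yield (y, x), patch


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length patches."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def nearest(prototypes, patch):
    """Index of the prototype closest to `patch` (first one wins on ties)."""
    return min(range(len(prototypes)), key=lambda i: sq_dist(prototypes[i], patch))


def learn_prototypes(image, k=4, iterations=10):
    """Find k prototype patches with k-means, seeded by farthest-first selection."""
    data = [patch for _, patch in patches(image)]
    # Farthest-first seeding: start from the first patch, then repeatedly add
    # the patch that is farthest from every prototype chosen so far.
    prototypes = [list(data[0])]
    while len(prototypes) < k:
        farthest = max(data, key=lambda p: min(sq_dist(p, q) for q in prototypes))
        prototypes.append(list(farthest))
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for patch in data:
            groups[nearest(prototypes, patch)].append(patch)
        for i, group in enumerate(groups):
            if group:  # an empty group keeps its previous prototype
                prototypes[i] = [sum(values) / len(group) for values in zip(*group)]
    return prototypes


def classify(image, prototypes):
    """Label each interior pixel with its nearest prototype; border pixels get -1."""
    labels = [[-1] * len(image[0]) for _ in range(len(image))]
    for (y, x), patch in patches(image):
        labels[y][x] = nearest(prototypes, patch)
    return labels


# Example: an 8x6 image, black on the left half and white on the right.
image = [[0] * 4 + [255] * 4 for _ in range(6)]
labels = classify(image, learn_prototypes(image, k=4))
for row in labels[1:-1]:
    print(row[1:-1])  # each line prints [0, 0, 2, 3, 1, 1]
```

Here the four classes are flat black, flat white and the two kinds of neighbourhood on either side of the border. Mapping each label to a display colour gives a class picture in the style of the F-22 example.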

In general, edge detection helps when you need to fit a model to a picture, for example when spotting people in a scene. Prototyping helps to classify images by detecting their prominent features, and it has many uses since it can "spot" traits of an image that humans do not.