Boryslav Larin

Faculty of Computer Science and Technology

Department of Applied Mathematics and Informatics

Software engineering

Object tracking method

in distributed surveillance system

Scientific supervisor: Ph.D. Yuri Ladyzhensky

Abstract

The general formulation of the problem

Introduction

Sporting events play an important part in modern society. The sports industry involves millions of people around the world, and considerable funds are spent every year on preparing, holding and supporting sporting events. Sporting achievements raise the prestige of a country in its region. Therefore, developing technical tools that help improve the quality of athletes' training is an important task for sports clubs, federations and related organizations. Analyzing the behavior and style of play of the opposing team can help develop the right tactics for a match.

In Ukraine, one of the most popular sports is football. Therefore there is a need for systems that analyze the behavior of players during a football match. This question has already been the subject of research [1], [2]. However, those works considered methods for analyzing video from a single camera. Such an approach has a significant disadvantage: low accuracy of object detection under partial or complete occlusion.

A possible solution to this problem is to use multiple cameras that view the same areas of the field from different sides. This report analyzes existing methods for tracking and analyzing sporting events using multiple cameras.

Analysis of the architecture of a system for tracking players

In [3], an architecture and algorithms are presented that use multiple cameras to track the movements of players during a match. The authors introduce several restrictions that simplify the problem. The system does not recognize specific players; it only determines which of five categories each person belongs to, based on uniform: first team field player, first team goalkeeper, second team field player, second team goalkeeper, or referee. Tracking the movement of the ball is not considered in that work.

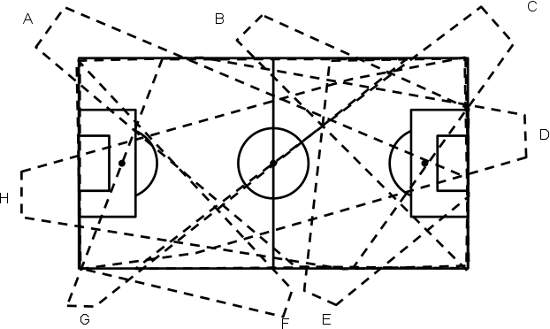

The hardware of the system consists of 8 cameras located in different parts of the stadium (see Figure 1) and 9 computers that process the incoming information. Eight of the computers are each connected directly to a camera; the ninth combines their information and generates an output file. The output file contains the coordinates of the trajectories of the objects detected during the game and the category to which each object belongs.

Fig. 1. Location of cameras A-H and their coverage of the football field.

The location and direction of the cameras are chosen with regard to the size and other features of the stadium and the need for the best possible view of the football field. A computer connected to a camera is called a "Feature Server". The computer that processes information from the Feature Servers is called the "Tracker".

Analysis of the methods of systems for tracking football players

The system (see Figure 2) operates as follows. The Tracker sends a broadcast request to the Feature Servers. Each server reads a frame from its camera and determines the objects in the frame and their features. These features are then sent to the Tracker, which integrates the information into a single picture of what is happening on the field. The Tracker receives only the computed features, without any visual information.

Because the Tracker has no video, the features must carry enough information to fully reconstruct the situation on the football field. Each feature generated by a Feature Server consists of the object's position on the two-dimensional plan of the field, the bounding box of the tracked object, the measurement error of the object, and the estimate of its category membership. The identification number of the Feature Server is also transmitted, so that trajectories can be linked between the different cameras.

Implementing communication between the Tracker and the Feature Servers on the "request-response" principle solves the problem of synchronizing all the cameras in the system. Features from different servers are synchronized using the Tracker's timestamps, which are recorded when the request is sent and included in all server responses.
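The timestamp-based synchronization can be illustrated with a minimal sketch. The `Response` record and the `group_by_request` helper are hypothetical names introduced here for illustration; [3] does not prescribe this data layout. The only idea taken from the text is that every server response echoes the Tracker's request timestamp, so responses can be grouped by it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Response:
    server_id: int       # which Feature Server answered (hypothetical field)
    request_time: float  # Tracker timestamp, echoed back unchanged
    features: list       # features computed for the corresponding frame


def group_by_request(responses):
    """Group Feature Server responses by the Tracker timestamp they echo."""
    grouped = defaultdict(list)
    for r in responses:
        grouped[r.request_time].append(r)
    return dict(grouped)


# Two servers answer the request stamped 0.00, one answers the next request.
responses = [
    Response(server_id=1, request_time=0.00, features=["a"]),
    Response(server_id=2, request_time=0.00, features=["b"]),
    Response(server_id=1, request_time=0.04, features=["c"]),
]
frames = group_by_request(responses)
```

Because the key is the Tracker's own clock value, the cameras do not need synchronized clocks in this sketch.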

The task of a Feature Server is to obtain the video stream from its camera, find the parts of the frame that have changed, and determine their features and the categories to which the detected objects belong.

First, changes in the frame are detected. The background model of the frame is built in advance using a mixture of Gaussians. This background is then subtracted from each new video frame, which yields the changed fragments of the frame. Next, individual objects in the frame are recognized [4]. A Kalman filter is used for this purpose; its parameters are the bounding box and the centroid coordinates of each player.
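The background subtraction step can be sketched as follows. This is a deliberate simplification: it assumes a single Gaussian per pixel (a mean and a standard deviation) instead of the mixture of Gaussians mentioned in the text, and the function name `foreground_mask` and the threshold `k` are assumptions made for illustration.

```python
def foreground_mask(frame, bg_mean, bg_std, k=2.5):
    """Mark pixels that deviate from the background model by more than k sigma.

    frame, bg_mean and bg_std are lists of rows of grey-level values.
    Simplification: one Gaussian per pixel, not a mixture.
    """
    return [
        [abs(p - m) > k * s for p, m, s in zip(row, mean_row, std_row)]
        for row, mean_row, std_row in zip(frame, bg_mean, bg_std)
    ]


# A 2x3 background of grey level 100 with standard deviation 4.
bg_mean = [[100, 100, 100], [100, 100, 100]]
bg_std = [[4, 4, 4], [4, 4, 4]]
# The middle column is much brighter than the background: a changed fragment.
frame = [[101, 180, 99], [100, 175, 103]]
mask = foreground_mask(frame, bg_mean, bg_std)
```

Connected regions of `True` pixels in the mask would then be passed on as candidate objects.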

For an isolated object, the measurements are obtained directly from background subtraction, without additional Kalman filters that would add uncertainty to the model. In such cases the measurement error is taken to be constant, since background subtraction is a per-pixel operation.
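To show what a constant measurement error means for the filter, here is a minimal Kalman filter sketch for one coordinate of an isolated object. It assumes a constant-velocity motion model, a position-only measurement, and illustrative values for the process noise `q` and the measurement variance `R`; none of these are taken from [3] or [4].

```python
def predict(x, P, dt=1.0, q=0.01):
    """Predict step for state x = [position, velocity]."""
    pos, vel = x
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    x_new = [pos + dt * vel, vel]
    # P' = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I
    P_new = [
        [p00 + dt * (p01 + p10) + dt * dt * p11 + q, p01 + dt * p11],
        [p10 + dt * p11, p11 + q],
    ]
    return x_new, P_new


def update(x, P, z, r):
    """Update step with a position measurement z of constant variance r."""
    innovation = z - x[0]          # H = [1, 0]: only position is measured
    s = P[0][0] + r                # innovation variance
    k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain
    x_new = [x[0] + k0 * innovation, x[1] + k1 * innovation]
    # P' = (I - K H) P
    P_new = [
        [(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
        [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]],
    ]
    return x_new, P_new


x, P = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
R = 0.25  # constant measurement variance (assumed value)
for z in range(1, 21):  # object moving one unit per frame
    x, P = predict(x, P)
    x, P = update(x, P, float(z), R)
```

After the loop, `x` holds the estimated position and velocity; with these noise-free measurements the estimate approaches position 20 and velocity 1.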

For groups of players, the measurements are obtained from estimates of their positions, which increases the error in each player's coordinates. As the last step, an estimate of the object's category is added to the data on the object's size. This is implemented using histogram intersection [5].
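Histogram intersection can be sketched in a few lines. The four-bin colour histograms and the template names below are invented for illustration; a real system would use histograms learned from the teams' uniforms.

```python
def histogram_intersection(observed, template):
    """Sum of bin-wise minima, normalized by the size of the template."""
    return sum(min(a, b) for a, b in zip(observed, template)) / sum(template)


# Hypothetical 4-bin colour histograms.
templates = {
    "team_a_player": [9, 1, 0, 0],
    "referee": [0, 0, 5, 5],
}
observed = [8, 2, 0, 0]

scores = {name: histogram_intersection(observed, t) for name, t in templates.items()}
best = max(scores, key=scores.get)
```

The score lies between 0 (no overlap) and 1 (the observed histogram covers the template completely), so the scores can be used directly as category estimates.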

For each object, the Feature Server outputs a category vector of seven elements. Five elements correspond to the types of uniform worn by people on the field (two for field players, two for goalkeepers, and one for referees). One element corresponds to the object "ball", and the last element, "other", covers noise and other unidentified objects on the pitch.
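One possible in-memory representation of a feature with its seven-element category vector is sketched below. The class name, field names and category labels are assumptions made for illustration and are not taken from [3].

```python
from dataclasses import dataclass

# Order of the seven elements (labels are hypothetical).
CATEGORIES = (
    "team1_player", "team1_goalkeeper",
    "team2_player", "team2_goalkeeper",
    "referee", "ball", "other",
)


@dataclass
class Feature:
    server_id: int          # identification number of the Feature Server
    position: tuple         # (x, y) on the two-dimensional plan of the field
    bbox: tuple             # bounding box in the image: (left, top, width, height)
    position_var: float     # measurement error, here a single variance
    category_scores: list   # seven estimates, one per entry of CATEGORIES

    def __post_init__(self):
        if len(self.category_scores) != len(CATEGORIES):
            raise ValueError("expected one score per category")

    def best_category(self):
        """Return the label of the highest-scoring category."""
        i = max(range(len(CATEGORIES)), key=lambda k: self.category_scores[k])
        return CATEGORIES[i]


f = Feature(
    server_id=3,
    position=(52.0, 30.5),
    bbox=(410, 220, 18, 42),
    position_var=0.4,
    category_scores=[0.10, 0.00, 0.70, 0.05, 0.05, 0.00, 0.10],
)
```

Here `f.best_category()` picks the third element, the second team's field player.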

To match the data on several players from multiple cameras, the Tracker also uses a three-step algorithm. In the first step, the received features are compared with the object trajectories already established, and these trajectories are updated. In the second step, new trajectories are created for the features that were not matched to existing trajectories in the first step. Finally, the fixed number of people in each category is used to identify the members of each category.

In [3], each object on the field is described by a state that includes its position on the field and the acceleration with which it is moving at the given moment, as well as the error and the category estimate. Where possible, the state is updated by merging measurements from different cameras. At most one feature per camera can contribute to a single measurement, since one person (or object) cannot appear twice in the view of one camera. The merged measurement includes the position of the object, the combined uncertainty and the aggregate category estimate. If no measurements are available for an object, its updated state is taken to be its previous state.
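A common way to merge measurements with different uncertainties is inverse-variance weighting, sketched below. This is an assumption about the merging rule, not a transcription of [3]: each camera's error is reduced to a single variance, and the function name `fuse` is hypothetical.

```python
def fuse(measurements, previous=None):
    """Merge per-camera measurements of one object.

    measurements: list of ((x, y), variance), at most one entry per camera.
    previous: ((x, y), variance) returned unchanged when nothing was measured.
    """
    if not measurements:
        return previous
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    x = sum(w * pos[0] for w, (pos, _) in zip(weights, measurements)) / total
    y = sum(w * pos[1] for w, (pos, _) in zip(weights, measurements)) / total
    # The combined variance is smaller than that of any single camera.
    return (x, y), 1.0 / total


# Camera A is four times more precise than camera C.
merged_pos, merged_var = fuse([((10.0, 20.0), 1.0), ((12.0, 20.0), 4.0)])
kept = fuse([], previous=((3.0, 4.0), 2.0))
```

The merged position lies closer to the more precise camera, and an object with no measurements keeps its previous state, as described in the text.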

Object trajectories are updated with measurements from different cameras as follows. For each camera, an association matrix is built between the set of objects and the set of measurements. Different association methods can be used, such as the nearest neighbor method or joint probabilistic data association [6]. The single measurement for each object then integrates the measurements from the individual cameras, weighted by the precision of each camera's estimate.
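The nearest neighbor method mentioned above can be sketched as a greedy association for one camera. The gate distance and the function name are assumptions; a full implementation would typically use a statistical distance that accounts for the measurement error rather than plain Euclidean distance.

```python
import math


def nearest_neighbour(tracks, measurements, gate=3.0):
    """Greedy association: each track takes its closest unused measurement.

    tracks: dict of track id -> predicted (x, y).
    measurements: list of (x, y) from one camera.
    Returns (track id -> measurement index, indices left unmatched).
    """
    assigned, used = {}, set()
    for track_id, track_pos in tracks.items():
        best, best_d = None, gate  # ignore anything farther than the gate
        for j, meas_pos in enumerate(measurements):
            d = math.dist(track_pos, meas_pos)
            if j not in used and d < best_d:
                best, best_d = j, d
        if best is not None:
            assigned[track_id] = best
            used.add(best)
    unmatched = [j for j in range(len(measurements)) if j not in used]
    return assigned, unmatched


tracks = {"p1": (10.0, 10.0), "p2": (30.0, 5.0)}
measurements = [(30.5, 5.2), (10.4, 9.8), (50.0, 50.0)]
assigned, unmatched = nearest_neighbour(tracks, measurements)
```

The unmatched measurement at (50, 50) is a candidate for starting a new trajectory.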

After the measurements have been matched with existing trajectories, some measurements may remain that do not correspond to any trajectory. All such measurements from different cameras are checked in pairs to search for new objects. If the distance between two measurements is smaller than a certain threshold, a new object is considered to have been found.
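The pairwise check for new objects can be sketched as follows. The threshold value, the camera labels and the choice of the midpoint as the initial position of the new object are assumptions made for illustration.

```python
import math
from itertools import combinations


def new_objects(unmatched, limit=1.0):
    """Pair unmatched measurements from different cameras.

    unmatched: list of (camera_id, (x, y)).
    Returns the midpoints of all pairs closer than `limit`.
    """
    found = []
    for (cam_a, a), (cam_b, b) in combinations(unmatched, 2):
        # Two measurements from the same camera cannot confirm each other.
        if cam_a != cam_b and math.dist(a, b) < limit:
            found.append(((a[0] + b[0]) / 2, (a[1] + b[1]) / 2))
    return found


unmatched = [("A", (5.0, 5.0)), ("C", (5.4, 5.2)), ("A", (40.0, 10.0))]
candidates = new_objects(unmatched)
```

Only the pair seen by cameras A and C is close enough, so one new object is reported near (5.2, 5.1).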

At the last stage, if more than 25 objects have been detected, the 25 most plausible ones are selected and presented to the user as the result. The likelihood that an object is included in the result set depends on how long the system has been tracking it, its category estimate, and the duration of its overlaps with other objects.
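The selection of the 25 most plausible objects can be sketched as a ranking. The scoring formula below is purely hypothetical: the text only states which three quantities influence the result, not how they are combined.

```python
def select_most_plausible(tracks, limit=25):
    """Keep the `limit` tracks with the highest plausibility score."""

    def plausibility(t):
        # Hypothetical score: long, confidently classified tracks rank high,
        # tracks that spent many frames overlapping other objects rank low.
        return t["age"] * t["category_score"] / (1 + t["overlap_frames"])

    return sorted(tracks, key=plausibility, reverse=True)[:limit]


# 25 well-established tracks plus 5 short, uncertain ones.
tracks = [
    {"id": i, "age": 100, "category_score": 0.9, "overlap_frames": 0}
    for i in range(25)
] + [
    {"id": 100 + i, "age": 5, "category_score": 0.3, "overlap_frames": 10}
    for i in range(5)
]
selected = select_most_plausible(tracks)
```

In this example the five short-lived tracks are dropped and the result contains exactly 25 objects.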

Several observations can be made about how the method works in its current implementation. To work correctly, it needs high-quality images from the cameras. Errors on even a few cameras may significantly degrade the quality of recognition.

In the current implementation, when two overlapping players enter a camera's field of view, the camera recognizes a single object rather than two. Such an object will most likely be discarded by the system, because its weight when data from different cameras are merged will be small. Nevertheless, this behavior always introduces additional error. It can be corrected by organizing feedback from the Tracker to the Feature Servers.

Game situations with a large cluster of players in one place produce inaccurate estimates and erroneous initialization of new trajectories when the players move apart again. In the extreme case, such situations may be intractable and require manual correction by the user.

A system with feedback from the central computer to the video processors is considered in [7]. As in [3], the hardware contains separate computers that process the video stream from each camera and a central computer.

The central computer holds information about the objects in the system and about which camera's field of view each object is in. When data about the objects arrive at the next time step, the central computer updates the state of the tracked objects by combining the information on the same object from different cameras.

The nodes that process information from the cameras can detect when an object leaves the camera's field of view. In such cases, the nodes continue to update the data on the tracked object based on its previous state: position, direction and speed. However, this increases the error in the state of the tracked object. Therefore, when a camera loses sight of an object, its node informs the central computer, which then stops taking into account data from the node that has lost the object. When the object returns to the camera's field of view, its state at the node is updated from the video, and the central computer resumes accepting data on the object from that camera.
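The behavior of a node that loses sight of an object can be sketched as follows. The class and function names are hypothetical, the node state is reduced to a position and a velocity, and the central computer simply averages the visible nodes; [7] uses error-based weights, which this sketch omits.

```python
from dataclasses import dataclass


@dataclass
class NodeTrack:
    position: tuple
    velocity: tuple
    visible: bool = True

    def step(self, measurement=None, dt=1.0):
        """Advance one frame; `measurement` is None when the object is out of view."""
        if measurement is None:
            # Extrapolate from the previous state and flag the loss of sight.
            self.position = (
                self.position[0] + self.velocity[0] * dt,
                self.position[1] + self.velocity[1] * dt,
            )
            self.visible = False
        else:
            self.position = measurement
            self.visible = True
        return self.visible


def central_update(node_tracks):
    """Combine only the nodes that currently see the object."""
    seen = [t.position for t in node_tracks if t.visible]
    if not seen:
        return None
    return (
        sum(p[0] for p in seen) / len(seen),
        sum(p[1] for p in seen) / len(seen),
    )


a = NodeTrack(position=(0.0, 0.0), velocity=(1.0, 0.0))
b = NodeTrack(position=(0.0, 0.0), velocity=(1.0, 0.0))
a.step((1.0, 0.0)); b.step(None)   # camera b loses the object
a.step((2.0, 0.2)); b.step(None)
while_lost = central_update([a, b])  # only node a contributes
a.step((3.0, 0.3)); b.step((3.0, 0.1))  # object returns to camera b
after_return = central_update([a, b])
```

While node `b` is extrapolating, its data is ignored; once the object is visible again, both nodes contribute to the combined state.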

To distinguish different objects, the system under consideration uses an algorithm based on the MPEG-7 Angular Radial Transformation [8]. The required objects are found using a knowledge base that contains the contours of the tracked objects. A found object is then converted to curves and processed by a Kalman filter, which updates the object's state at the node that handles the information from the camera.

The data are combined by merging object states using weights determined from the state errors. Data can be merged by two methods: sensor-to-sensor, which takes into account only data from the nodes that process the video streams, and sensor-to-system, which takes into account data from those nodes as well as the state previously generated by the model.

In this system, the weights used when merging data from the video-processing nodes must be chosen correctly. Incorrectly chosen weights can degrade the track; in particular, the resulting track may be of lower quality than one obtained with a single camera.

Conclusion

The analysis of architectures and algorithms for tracking objects with multiple cameras showed that such a system must meet certain requirements. It needs high-quality video equipment that introduces no additional image noise and provides high-speed transfer of video data from the cameras to the processing devices. The algorithms of the system must effectively suppress noise during capture and have mechanisms for screening out implausible data caused by erroneous detections when many objects cluster in one place.

Bibliography

- Sereda A. A., Ladyzhensky Yu. V. "Development of an automated system for analyzing video recordings of sports competitions." Paper at the regional student scientific and technical conference "Інформатика та комп'ютерні технології", DonNTU, Donetsk, 2005.

- Ladyzhensky Yu. V., Sereda A. O. "Tracking objects in a video stream based on tracking the movement of object fragments", Scientific Works of Donetsk National Technical University. Series: "Computing and Automation". Issue 17 (148). Donetsk: DonNTU, 2009. pp. 127-134.

- Xu, M.; Orwell, J.; Lowey, L.; Thirde, D.; ‘Architecture and algorithms for tracking football players with multiple cameras’, Digital Imaging Res. Centre, Kingston Univ., Kingston Upon Thames, UK, pp. 232-241, (2005).

- Xu, M.; Ellis, T.; ‘Partial observation vs. blind tracking through occlusion’, Proc.BMVC, pp.777-786, (2002).

- Kawashiima, T.; Yoshino, K.; Aoki, Y.; ‘Qualitative Image Analysis of Group Behavior’, CVPR, pp.690-3, (1994).

- Bar-Shalom, Y.; Li, X.R.; ‘Multitarget-Multisensor Tracking: Principles and Techniques’, YBS, (1995)

- M.K. Bhuyan, Brian C. Lovell, Abbas Bigdeli, "Tracking with Multiple Cameras for Video Surveillance," dicta, pp.592-599, 9th Biennial Conference of the Australian Pattern Recognition Society on Digital Image Computing Techniques and Applications, 2007

- Julien Ricard, David Coeurjolly, Atilla Baskurt, Generalizations of angular radial transform for 2D and 3D shape retrieval, Pattern Recognition Letters, Volume 26, Issue 14, 15 October 2005, Pages 2174-2186

- Wei Du, Jean-Bernard Hayet, Justus Piater, and Jacques Verly "Collaborative Multi-Camera Tracking of Athletes in Team Sports", 2006, pp.2-13

- Toshihiko Misu and Seiichi Gohshi and Yoshinori Izumi and Yoshihiro Fujita and Masahide Naemura, "Robust Tracking of Athletes", 2004

Note

At the time of writing this abstract, the master's work had not yet been completed. Final completion: December 2011. The full text of the work and materials on the subject can be obtained from the author or his supervisor after that date.