Abstract

During the last 5 years, research on Human Activity Recognition (HAR) has reported systems with good overall recognition performance. As a consequence, HAR has been considered a potential technology for e-health systems. Here, we propose a machine-learning-based HAR classifier. We also provide a full experimental description that covers the setup of the HAR wearable devices and a public domain dataset comprising 165,633 samples. We consider 5 activity classes, gathered from 4 subjects wearing accelerometers mounted on their waist, left thigh, right arm, and right ankle. As basic input features to our classifier we use 12 attributes derived from a time window of 150 ms. Finally, the classifier uses an AdaBoost committee that combines ten decision trees. The observed classifier accuracy is 99.4%.

1. Introduction

With rising life expectancy and an ageing population, developing new technologies that enable a more independent and safer life for the elderly and the chronically ill has become a challenge [1]. Ambient Assisted Living (AAL) is one way to increase independence and reduce treatment costs, but it is still imperative to generate further knowledge in order to develop ubiquitous computing applications that support home care and enable collaboration among physicians, families and patients.

Human Activity Recognition (HAR) is an active research area, results of which have the potential to benefit the development of assistive technologies in order to support care of the elderly, the chronically ill and people with special needs. Activity recognition can be used to provide information about patients’ routines to support the development of e-health systems, like AAL. Two approaches are commonly used for HAR: image processing and use of wearable sensors.

The image processing approach does not require equipment on the user’s body, but it imposes limitations such as restricting operation to indoor environments, requiring cameras in every room, raising lighting and image quality concerns and, mainly, affecting users’ privacy [2]. Wearable sensors minimize these problems, but require the user to wear the equipment for extended periods of time. Hence, the use of wearable sensors may lead to inconveniences with battery charging, positioning, and calibration of the sensors [3].

In this project we built a wearable device with 4 accelerometers positioned on the waist, thigh, ankle and arm. The design of the wearable, details on the sensors used, and other information necessary to reproduce the device are given in Section 3. We collected data from 4 people in different static postures and dynamic movements, with which we trained a classifier using the AdaBoost method and C4.5 decision trees [4, 5]. Data collection, feature extraction and selection, and the results obtained with our classifier are described in Section 4. Conclusions and future work are discussed in Section 5.

2. Literature Review

The results presented in this section are part of a more comprehensive systematic review of HAR with wearable accelerometers. The procedures followed are those of a traditional systematic review: we defined a specific research question, ran a search string against the database, applied exclusion criteria, and reviewed the resulting publications qualitatively and quantitatively. For the quantitative analysis, we collected metadata from the articles and used descriptive statistics to summarize the data. The method was applied as follows:

- Research Question: What are the research projects conducted in recognition of human activities and body postures with the use of accelerometers?

- Search string: (((("Body Posture") OR "Activity Recognition")) AND (accelerometer OR acceleration)). Refined by: publication year: 2006 – 2012;

- Results in IEEE database: 144 articles;

- Exclusion criteria:

  - Use of accelerometers in smartphones;

  - HAR by image processing;

  - Not related to human activity (robots, in general);

- Result: 69 articles for quantitative and qualitative analysis.

- For each accelerometer: Euler angles of roll and pitch and the length (module) of the acceleration vector (called as total_accel_sensor_n);

- Variance of roll, pitch and module of acceleration for all samples in the 1 second window (approximately 8 reads per second), with a 150ms overlapping;

- A column discretizing the module of acceleration of each accelerometer, defined after a statistic analysis comparing the data of 5 classes;

- A comparative table of the researches in the HAR from wearable accelerometers;

- A wearable device for data collection of human activities;

- The offer of a public domain dataset with 165,633 samples and 5 classes, in order to enable other authors to continue the research and compare the results.

For the quantitative analysis, the metadata drawn from the articles were: research title, year, number of accelerometers, use of other sensors, accelerometer positions, classes, machine learning technique (or threshold-based algorithm), number of subjects and samples, test mode (training dataset + test dataset, or cross-validation in k-fold, leave-one-example-out, or leave-one-subject-out mode), and percentage of correctly classified samples. Regarding publication year, the number of publications on HAR with wearable accelerometers has been growing, as shown in Figure 1, which is evidence of the importance of the approach for the Human Activity Recognition community.

Fig. 1. IEEE publications based on wearable accelerometers’ data for HAR.

In the set of articles assessed, subject independent analysis has been less explored: just 3 out of 69 articles presented one. The primary alternatives proposed by the authors to improve prediction performance in subject independent tests are: (1) enlarging the dataset by collecting data from subjects with different profiles; (2) adapting the learning to a subject using data collected from subjects with similar physical characteristics [11]; and (3) investigating features that are subject independent and more informative of the classes [22].
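The subject independent test mode discussed above can be illustrated with a minimal sketch of a leave-one-subject-out split. The function name `leave_one_subject_out` and the subject labels are hypothetical and are not taken from any of the reviewed articles; the sketch only shows that every sample of the held-out subject is kept out of training.

```python
from collections import defaultdict


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) once per subject."""
    by_subject = defaultdict(list)
    for index, subject in enumerate(subject_ids):
        by_subject[subject].append(index)
    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        # Training indices come from every subject except the held-out one.
        train = sorted(
            i for subject, indices in by_subject.items()
            if subject != held_out
            for i in indices
        )
        yield held_out, train, test


# Hypothetical subject label for each of six samples.
subjects = ["A", "A", "B", "C", "B", "C"]
splits = list(leave_one_subject_out(subjects))
```

Because no sample from the held-out subject is seen during training, accuracy measured this way reflects how the classifier might behave on a new user, which k-fold cross-validation over pooled samples does not guarantee.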

Among the articles assessed, we observed a discussion on the importance of the location of the accelerometers on the body. The positions where wearable accelerometers are most commonly mounted are the waist, next to the center of mass, and the chest. One study specifically investigates the development of classifiers adaptable to different numbers of accelerometers [20].

The main problem found was the unavailability of datasets, which hinders the comparison of results between studies. Most studies also lack information about the orientation of the sensor axes, although the location is usually well described. Some studies did not report the sensor model used. The absence of information about orientation and sensor model impairs the reproduction of the wearable devices.

In order to enable the reproduction of the literature review discussed in this article, all publications assessed in this paper are available in RIS format at the following web address: http://groupware.les.inf.puc-rio.br/har. The bibliographic management and publishing solution used in this research was EndNote X5™. The library in EndNote format is also available at the project web address.

3 Building Wearable Accelerometers for Activity Recognition

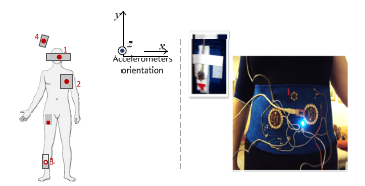

Our wearable device comprised 4 tri-axial ADXL335 accelerometers connected to an ATmega328V microcontroller. All modules were from the LilyPad Arduino toolkit. The wearable device and the accelerometers’ positioning and orientation diagram are illustrated in Figure 2.

Fig. 2. Wearable device built for data collection

The accelerometers were positioned on the waist (1), left thigh (2), right ankle (3), and right arm (4), respectively. All accelerometers were calibrated prior to data collection. The calibration consists of positioning the sensors and reading the values to be considered as “zero”. After calibration, each axis reading taken during data collection is offset by the value recorded for that axis at calibration time.

The purpose of the calibration was to attenuate the inaccuracy issues peculiar to this type of sensor. For this reason, the sensors were calibrated on top of a flat table, all in the same position. Another common type of calibration is calibration by subject [3], in which the accelerometers are read and calibrated after being positioned on the subjects’ bodies. Calibration by subject may benefit data collection because it yields more homogeneous data. However, it makes the wearable more complex to use once it is finished.
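The flat-table calibration described above can be sketched as a per-axis zero offset. This is a minimal illustration, not the firmware used in the device: the function names, the averaging of several resting readings, the sign convention (reading minus zero), and the raw values are all assumptions made for the example.

```python
def calibrate(flat_readings):
    """Estimate the per-axis 'zero' from readings taken while the sensor rests flat.

    Averaging several readings is an assumption of this sketch.
    """
    count = len(flat_readings)
    return tuple(sum(reading[axis] for reading in flat_readings) / count
                 for axis in range(3))


def apply_offset(reading, zero):
    """Offset one (x, y, z) reading by the calibration values (reading minus zero)."""
    return tuple(value - reference for value, reference in zip(reading, zero))


# Hypothetical raw ADC values captured on the table, then one reading in use.
zero = calibrate([(512, 508, 620), (514, 510, 618)])
corrected = apply_offset((520, 500, 619), zero)
```

With these invented values, `zero` is `(513.0, 509.0, 619.0)` and `corrected` is `(7.0, -9.0, 0.0)`.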

4 Building a Classifier for Wearable Accelerometers’ Data

We took the following steps to develop a classifier for the data obtained from the 4 accelerometers: data collection, data pre-processing, feature extraction, feature selection, and 10-fold cross-validation tests to assess the accuracy of the resulting classifier.
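The 10-fold cross-validation step can be sketched as follows. The function name `k_fold_splits` is hypothetical, and assigning samples to folds by interleaving, without shuffling or stratification, is an assumption of this sketch; the source does not say how the folds were formed.

```python
def k_fold_splits(n_samples, k=10):
    """Yield (train_indices, test_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    # Sample i goes to fold i % k, so fold sizes differ by at most one.
    folds = [list(range(start, n_samples, k)) for start in range(k)]
    for held_out in range(k):
        test = folds[held_out]
        train = sorted(
            i for fold_number, fold in enumerate(folds)
            if fold_number != held_out
            for i in fold
        )
        yield train, test


# Small illustrative run: 25 samples split into 10 folds.
splits = list(k_fold_splits(25, k=10))
```

Each sample is used for testing exactly once and for training in the remaining nine folds.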

4.1 Data Collection

We collected data during 8 hours of activities, 2 hours with each one of the 4 subjects: 2 men and 2 women, all adults and healthy. The protocol was to perform each activity separately.

Although the number of subjects is small, the amount of data collected is reasonable (2 hours for each subject) and the profile is diverse: women, men, young adults and one elderly subject. In total, 165,633 samples were collected for the study; the distribution of the samples between the classes is illustrated in Figure 3.

Fig. 3. Frequency of classes between collected data

4.2 Feature Extraction

The data collected from the tri-axial accelerometers were pre-processed following some of the instructions in [24]. We generated 1-second time windows with a 150 ms overlap. The samples were grouped, and descriptive statistics were used to generate part of the derived features. The features derived from the acceleration in the x, y, and z axes and from the grouped samples are: for each accelerometer, the Euler angles of roll and pitch and the length (module) of the acceleration vector; the variance of roll, pitch, and module of acceleration over all samples in each window; and, for each accelerometer, a column discretizing the module of acceleration.
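As a minimal sketch of this kind of feature extraction, the code below derives roll, pitch, and the module of the acceleration vector for each sample and then takes their variance over a window. The roll and pitch formulas are a common accelerometer tilt convention and are an assumption here, since the text does not give the exact expressions; the function names, the use of population variance, and the window parameters (8 samples with a step of 7, leaving one sample of overlap as a rough stand-in for 150 ms at about 8 reads per second) are likewise illustrative choices.

```python
import math
from statistics import pvariance


def roll_pitch_module(x, y, z):
    """Return roll and pitch in degrees and the module of one (x, y, z) sample."""
    roll = math.degrees(math.atan2(y, z))
    pitch = math.degrees(math.atan2(-x, math.hypot(y, z)))
    module = math.sqrt(x * x + y * y + z * z)
    return roll, pitch, module


def sliding_windows(samples, size, step):
    """Split samples into windows of `size` items that start every `step` items."""
    return [samples[i:i + size]
            for i in range(0, len(samples) - size + 1, step)]


def window_variances(window):
    """Population variance of roll, pitch, and module over one window."""
    rolls, pitches, modules = zip(*(roll_pitch_module(*s) for s in window))
    return pvariance(rolls), pvariance(pitches), pvariance(modules)


# Hypothetical readings from one accelerometer, in units of g.
readings = [(0.0, 0.0, 1.0 + 0.01 * i) for i in range(15)]
windows = sliding_windows(readings, size=8, step=7)
features = [window_variances(w) for w in windows]
```

In this example the sensor only changes along z, so the roll and pitch variances are zero and only the module variance is positive.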

4.3 Feature Selection

To reduce redundant features and select those more informative of the classes, we used Mark Hall’s correlation-based feature selection algorithm [25]. The algorithm was configured to use the “Best First” search method, a greedy strategy with backtracking. The 12 features selected through this procedure were: (1) sensor on the waist (belt): discretization of the module of the acceleration vector, variance of pitch, and variance of roll; (2) sensor on the left thigh: module of the acceleration vector, discretization, and variance of pitch; (3) sensor on the right ankle: variance of pitch, and variance of roll; (4) sensor on the right arm: discretization of the module of the acceleration vector; (5) from all sensors: average acceleration and standard deviation of acceleration.
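Correlation-based feature selection scores a candidate subset of k features with the merit k·r_cf / sqrt(k + k(k−1)·r_ff), where r_cf is the mean feature-class correlation and r_ff the mean feature-feature correlation. The sketch below computes only this merit; the function name is hypothetical, the correlation values are invented, and neither the way correlations are measured nor the Best First search over subsets is implemented.

```python
import math


def cfs_merit(feature_class_corr, feature_feature_corr):
    """Merit of a feature subset under correlation-based feature selection.

    feature_class_corr: one correlation with the class per feature in the subset.
    feature_feature_corr: one correlation per pair of features in the subset.
    """
    k = len(feature_class_corr)
    mean_cf = sum(feature_class_corr) / k
    mean_ff = (sum(feature_feature_corr) / len(feature_feature_corr)
               if feature_feature_corr else 0.0)
    return k * mean_cf / math.sqrt(k + k * (k - 1) * mean_ff)


# Two equally predictive features: uncorrelated versus fully redundant.
independent_pair = cfs_merit([0.5, 0.5], [0.0])
redundant_pair = cfs_merit([0.5, 0.5], [1.0])
single_feature = cfs_merit([0.5], [])
```

The redundant pair scores no better than a single feature (0.5), while the uncorrelated pair scores higher (about 0.707), which is how the measure discourages redundant features.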

4.4 Classifier for Activity Recognition

Ross Quinlan’s C4.5 decision tree [4] was used together with the AdaBoost ensemble method [5] for the classification task. C4.5 is an evolution of the ID3 algorithm (Iterative Dichotomiser 3), also proposed by Ross Quinlan, and its main advantage over ID3 is more efficient pruning. The AdaBoost boosting method “tends to generate distributions that concentrate on the harder examples, thus challenging the weak learning algorithm to perform well on these harder parts of the sample space” [5]. Put simply, with AdaBoost the C4.5 algorithm was trained on a different distribution of the samples in each iteration, favoring the “hardest” samples.
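The reweighting that shifts attention to the “hardest” samples can be sketched with a single AdaBoost.M1 round. This is an illustration of the update rule from [5] under simple assumptions, not the training code used in this work: the function name is hypothetical, the base learner is left out, and the input is just a flag per sample saying whether the current tree classified it correctly.

```python
import math


def adaboost_m1_reweight(weights, correct):
    """Run one AdaBoost.M1 weight update.

    weights: current sample weights, summing to 1.
    correct: True where the current base classifier was right.
    Returns the new normalized weights and the classifier's voting weight.
    """
    error = sum(w for w, ok in zip(weights, correct) if not ok)
    if not 0 < error < 0.5:
        raise ValueError("AdaBoost.M1 needs a weighted error in (0, 0.5)")
    beta = error / (1 - error)
    # Correctly classified samples are scaled down by beta; errors keep their weight.
    updated = [w * beta if ok else w for w, ok in zip(weights, correct)]
    total = sum(updated)
    return [w / total for w in updated], math.log(1 / beta)


# Four equally weighted samples; the base classifier misses the last one.
new_weights, vote = adaboost_m1_reweight([0.25] * 4, [True, True, True, False])
```

After the update the single misclassified sample carries half of the total weight, so the next tree is trained on a distribution that concentrates on it.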

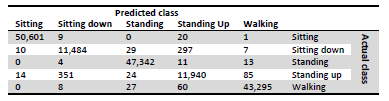

We used AdaBoost with 10 iterations and configured the C4.5 tree with a confidence factor of 0.25. The overall recognition performance was 99.4% (weighted average) using 10-fold cross-validation, with the following accuracies per class: “sitting” 100%, “sitting down” 96.9%, “standing” 99.8%, “standing up” 96.9%, and “walking” 99.8%. The confusion matrix is presented in Table 1.

Table 1. Confusion Matrix
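To show how per-class figures and a weighted average relate to a confusion matrix, here is a minimal sketch. It assumes rows are actual classes and columns are predicted classes, and it takes per-class accuracy to mean the fraction of each actual class that was predicted correctly; both are assumptions of the sketch. The 3-class matrix is invented for illustration and does not reproduce the values of Table 1.

```python
def per_class_and_weighted_accuracy(confusion):
    """Return per-class accuracies and their average weighted by class size.

    confusion[i][j] counts samples of actual class i predicted as class j.
    """
    supports = [sum(row) for row in confusion]
    per_class = [row[i] / support
                 for i, (row, support) in enumerate(zip(confusion, supports))]
    weighted = (sum(acc * support for acc, support in zip(per_class, supports))
                / sum(supports))
    return per_class, weighted


# Invented 3-class confusion matrix, for illustration only.
example = [
    [50, 0, 0],
    [2, 45, 3],
    [0, 1, 99],
]
per_class, weighted = per_class_and_weighted_accuracy(example)
```

Weighting by class size means that large classes dominate the overall figure, so a high weighted average can coexist with lower accuracy on the smaller classes.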

The results obtained in this research are very close to the top results in the literature (99.4% in [14], and 99.6% in [15]), although it is hard to compare them: each study used a different dataset, a different set of classes, and different test modes.

5 Conclusion and Future Work

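This work presented a literature review on activity recognition using data from wearable accelerometers, a wearable device consisting of 4 accelerometers, and the data collection procedure and the extraction and selection of features for the development of a classifier for human activities. The main contributions of this article are a comparative table of HAR research based on wearable accelerometers, a wearable device for collecting human activity data, and a public domain dataset with 165,633 samples and 5 classes, which enables other authors to continue the research and compare results.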

In future work we want to include new classes in the dataset and investigate the classifier’s performance with accelerometers in different positions and in different quantities. Another line of future work is the qualitative recognition of activities, which consists in recognizing different specifications for the performance of the same activity, such as different specifications for weight lifting.

References

1. Yu-Jin, H., Ig-Jae, K., Sang Chul, A., Hyoung-Gon, K.: Activity Recognition Using Wearable Sensors for Elder Care. In: Proceedings of Second International Conference on Future Generation Communication and Networking, FGCN 2008, vol. 2, pp. 302–305 (2008), doi:10.1109/FGCN.2008.165

2. Gjoreski, H., Lustrek, M., Gams, M.: Accelerometer Placement for Posture Recognition and Fall Detection. In: 7th International Conference on Intelligent Environments, IE (2011)

3. Lei, G., Bourke, A.K., Nelson, J.: A system for activity recognition using multi-sensor fusion. In: Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC (2011)

4. Salzberg, S.L.: C4.5: Programs for Machine Learning by J. Ross Quinlan. Morgan Kaufmann Publishers, Inc. (1993) Machine Learning 16(3), 235–240 (1994) ISSN: 0885-6125, doi: 10.1007/bf00993309

5. Freund, Y., Schapire, R.E.: Experiments with a New Boosting Algorithm. In: International Conference on Machine Learning, pp. 148–156 (1996)

6. Liu, S., Gao, R.X., John, D., Staudenmayer, J.W., Freedson, P.S.: Multisensor Data Fusion for Physical Activity Assessment. IEEE Transactions on Biomedical Engineering 59(3), 687–696 (2012) ISSN: 0018-9294

7. Yuting, Z., Markovic, S., Sapir, I., Wagenaar, R.C., Little, T.D.C.: Continuous functional activity monitoring based on wearable tri-axial accelerometer and gyroscope. In: 5th International Conference on Pervasive Computing Technologies for Healthcare, PervasiveHealth (2011)

8. Sazonov, E.S., et al.: Monitoring of Posture Allocations and Activities by a Shoe-Based Wearable Sensor. IEEE Transactions on Biomedical Engineering 58(4), 983–990 (2011)

9. Reiss, A., Stricker, D.: Introducing a modular activity monitoring system. In: Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC