Formal description and modeling of facial expressions of emotions

Contents

- Introduction

- 1. PROBLEM STATEMENT

- 1.1 Relevance of the topic

- 1.2 Purpose and Objectives of the Study, Expected Results

- 1.3 Expected scientific novelty and practical significance

- 2. REVIEW OF RESEARCH AND DEVELOPMENT

- 2.1 Review of international sources

- 2.2 Review of national sources

- 3. DESCRIPTION OF THE USED METHODS AND ALGORITHMS

- 3.1 Description of the stage of obtaining the contours of the main parts of the face

- Conclusions

- References

Introduction

A person's face is a kind of mirror that displays, to a greater or lesser degree, the dynamics of the person's actual experiences. Thanks to this, the face performs signaling and regulatory functions, acting as one of the channels of non-verbal communication.

In recent years, non-verbal (mimic) human behavior has been the subject of intensive research. These studies have made it possible to propose a kind of "formula" for some facial expressions. This was not only an important step towards a rigorous experimental study of expression, but also raised the problem of the differentiated perception of facial expressions.

The lack of research on the differentiated perception of facial expressions contrasts sharply with practical needs (forensic science, virtual reality, the "imaginative computer", etc.), and this motivated the present study.

In [1], the authors proposed a formal psychological model of emotions. The same work proposes using NURBS curves to represent the contours of the main parts of the face. Since this task does not require individually weighted control vertices, the NURBS curves can be simplified to (non-rational) B-spline curves. Based on the results obtained in [1], it is possible to create a system for restoring facial fragments from information obtained from a person's mimic images.
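The simplification can be checked numerically. The sketch below, assuming NumPy, evaluates curves with the standard Cox-de Boor recursion and shows that a NURBS curve whose weights are all equal to 1 coincides with the ordinary B-spline built on the same control points and knot vector, because the B-spline basis functions sum to one. The knot vector and control points are hypothetical values chosen for illustration, not data from [1].

```python
import numpy as np

def basis(i: int, p: int, u: float, knots: np.ndarray) -> float:
    """Cox-de Boor recursion for the i-th B-spline basis function of degree p."""
    if p == 0:
        return 1.0 if knots[i] <= u < knots[i + 1] else 0.0
    value = 0.0
    left_span = knots[i + p] - knots[i]
    if left_span > 0:
        value += (u - knots[i]) / left_span * basis(i, p - 1, u, knots)
    right_span = knots[i + p + 1] - knots[i + 1]
    if right_span > 0:
        value += (knots[i + p + 1] - u) / right_span * basis(i + 1, p - 1, u, knots)
    return value

def nurbs_point(u, ctrl, weights, knots, p=3):
    """Rational (NURBS) curve point: weighted average of the control points."""
    n = np.array([basis(i, p, u, knots) for i in range(len(ctrl))])
    nw = n * weights
    return nw @ ctrl / nw.sum()

def bspline_point(u, ctrl, knots, p=3):
    """Non-rational B-spline curve point."""
    n = np.array([basis(i, p, u, knots) for i in range(len(ctrl))])
    return n @ ctrl

# Hypothetical clamped cubic knot vector and eyebrow-like control polygon.
knots = np.array([0, 0, 0, 0, 0.5, 1, 1, 1, 1], dtype=float)
ctrl = np.array([[0, 0], [1, 1], [2, 1.5], [3, 1], [4, 0]], dtype=float)
unit = nurbs_point(0.3, ctrl, np.ones(len(ctrl)), knots)
plain = bspline_point(0.3, ctrl, knots)
print(np.allclose(unit, plain))  # unit weights: NURBS equals B-spline
```

With non-uniform weights the two curves differ, which is exactly the extra freedom that the contour task does not need.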

1. PROBLEM STATEMENT

1.1 Relevance of the topic

Methods and algorithms for analyzing and synthesizing the emotional state of a person's face are an integral part of artificial intelligence systems and tools aimed at researching, building and implementing algorithmic and hardware-software systems with artificial intelligence elements based on modeling human intellectual activity. Modeling and recognition of emotions, which act as one of the channels of non-verbal signaling and regulatory communication and reflect the dynamics of a person's actual experiences, is a relevant and important area of research aimed at computer recognition and synthesis of visual images. Non-verbal mimic transmission of information by humans has become the subject of intensive research. These studies have identified several approaches to the formalization of emotions: models of emotions in psychology, Darwin's evolutionary theory of emotions, Wundt's "associative" theory, the James-Lange "peripheral" theory, the Cannon-Bard theory, the psychoanalytic theory of emotions, Weinbaum's vascular theory of emotional expression and its modification, Anokhin's biological theory of emotions, frustration theories of emotions, cognitive theories of emotions, Simonov's information theory of emotions, Izard's differential emotions theory, and the Facial Action Coding System (FACS) proposed by Ekman and others. As a result, an important step was taken towards a rigorous experimental study of expression, and the problem of the differentiated perception of facial expressions was posed.

Tools for analyzing and synthesizing the human face and the emotions it expresses are researched and developed at leading scientific organizations worldwide, in particular at the Massachusetts Institute of Technology, Oxford, Cambridge, Stanford, Moscow and St. Petersburg universities and others. In Ukraine, such problems are studied at the Glushkov Institute of Cybernetics, the International Scientific and Training Center for Information Technologies and Systems, Taras Shevchenko National University of Kyiv and other institutions.

In this master's thesis, the formal model of emotions proposed by psychologists is used to recognize emotions on a person's face and to model facial contours with a neutral expression. The results of this study can be used to simulate human intellectual activity in artificial intelligence systems.

1.2 Purpose and objectives of the study, expected results

The purpose of this master's thesis is to research and develop methods, algorithms and software for restoring facial fragments from a person's facial expressions. To achieve this goal, the following tasks were formulated:

- analyze national and international studies that address the recognition and modeling of facial expressions on a person's face;

- consider approaches to formalizing emotions and analyze their mimic manifestations on a person's face;

- develop methods and algorithms for automatically finding the contours of the main parts of the face, determining the emotion in an image, and converting the facial contours, represented as B-spline curves, to a neutral facial expression;

- on the basis of the developed methods and algorithms, develop software for restoring facial fragments from a person's facial expression.

Subject of research: methods, algorithms and software for the analysis and subsequent synthesis of mimic expressions of emotions in a photographic image of a person’s face.

Object of study: photographic image of a person's face with various mimic manifestations of emotions.

1.3 Expected scientific novelty and practical significance

The scientific novelty of this work lies in the development of a multimedia technology and an artificial intelligence tool for analyzing and synthesizing mimic expressions of emotions, as well as a software product for restoring facial fragments from mimic images.

The software developed in this master's thesis can be used in state and private security services and law enforcement agencies, for example, to track people who are angry or aggressive in order to prevent crimes. The developed artificial intelligence tools can also increase the effectiveness of personal identification systems by converting an image to a neutral expression, which is easier to compare with a database of reference images. The software can also be used to monitor the work of dispatch system operators and to model emotions on 3D models of a human face.

2. REVIEW OF RESEARCH AND DEVELOPMENT

2.1 Review of international sources

Most international methods for analyzing facial expressions are based on FACS. After the set of FACS action units has been obtained, a set of rules and a dictionary of emotions are used to interpret the emotional expression of the face.
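The rule-based step can be illustrated with a toy dictionary that maps emotions to sets of action units (AUs) and reports every emotion whose required AUs are all present. The AU combinations below roughly follow prototypes often cited in the FACS literature, but they are an assumption for illustration, not the rule base of any particular system.

```python
# Illustrative rule dictionary (assumption): emotion -> AUs that must all be present.
EMOTION_RULES: dict[str, frozenset[int]] = {
    "happiness": frozenset({6, 12}),        # cheek raiser + lip corner puller
    "sadness": frozenset({1, 4, 15}),       # inner brow raiser, brow lowerer, lip corner depressor
    "surprise": frozenset({1, 2, 5, 26}),   # brow raisers, upper lid raiser, jaw drop
}

def match_emotions(observed_aus: set[int]) -> list[str]:
    """Return emotions whose required AUs are a subset of the observed AUs,
    most specific rule (largest AU set) first."""
    matches = [(len(aus), name) for name, aus in EMOTION_RULES.items()
               if aus <= observed_aus]
    return [name for _, name in sorted(matches, reverse=True)]

print(match_emotions({6, 12, 25}))
```

Real systems also use AU intensities and conflict resolution between overlapping rules; the subset test here is the simplest possible form.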

However, analyzing and modeling facial expressions requires understanding the mechanism that produces them. The facial muscles are the main mechanism that determines facial expression, and the expression of the face is determined by eleven main muscles. In fact, more than twenty muscles form the face, but many of them only play a supporting role and have no direct effect on facial expression. The main facial muscles include:

- chewing muscle;

- a muscle that raises the upper lip;

- greater zygomatic muscle;

- a muscle that lowers a corner of the mouth;

- a muscle that lowers the lower lip;

- chin muscle;

- circular muscle of the mouth;

- a muscle that wrinkles the brow;

- circular muscle of the eye;

- frontal muscle;

- muscle of laughter (subcutaneous muscle of the neck).

In [10], the author, having studied the anatomy of the human face, determined exactly which muscles take part in particular expressive changes of the face and how. To model the emotional expression of the face, one must first determine in more detail how it depends on the movements of the facial muscles. In [2, 3], a system is described for coding all visually noticeable facial movements. This system, called the Facial Action Coding System (FACS), is based on enumerating all the facial "action units" that cause facial movements. Some muscles produce more than one action unit, so the correspondence between action units and muscle movements is approximate.

FACS has 46 action units that record changes in facial expression and 12 units that describe changes in head orientation and gaze. FACS coding is performed by people trained to classify facial expressions on the basis of the anatomy of facial movement, that is, trained to determine how the muscles change the facial expression separately and in combination. FACS coding decomposes a facial expression into the specific action units that produced it. The action units in FACS are defined descriptively, independently of the emotions expressed on the face.

2.2 Review of national sources

It is worth paying attention to studies conducted in the CIS countries, in particular in Russia and Ukraine. Many scientific works and dissertations are devoted to tools for analyzing and synthesizing the human face and emotions, including works by Y. Krivonos, Y. Krak, O. Barmak, V. Leont'ev, T. Luguev and others.

Based on the classification of emotions [4], a formal psychological model of emotions was proposed in [1]. In [5], this model was extended to simulate and recognize the mimic manifestations of a person’s emotional states.

To formalize emotions while avoiding the ambiguity of their phenomenological description, [4] proposes studying the situations in which these emotions arise. That is, an emotion is defined by the most general description of the situation in which it arises.

The author represents the basic emotions by three binary features:

- the feature ξ1 indicates the sign of the emotion (positive 1 or negative 0);

- the feature ξ2 indicates when the emotion arises relative to the event (predicting 0 or stating 1);

- the feature ξ3 indicates the direction of the emotion (directed at oneself 1 or at external objects 0).
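Three binary features give 2³ = 8 combinations, which is consistent with the eight basic emotions used later in this section. A minimal sketch packs ξ1, ξ2, ξ3 into a 3-bit code; which code corresponds to which named emotion is defined in [4] and is not reproduced here, and the function names are hypothetical.

```python
from itertools import product

def encode(xi1: int, xi2: int, xi3: int) -> int:
    """Pack the binary features (sign, time, direction) into a 3-bit code."""
    for bit in (xi1, xi2, xi3):
        if bit not in (0, 1):
            raise ValueError("each feature must be 0 or 1")
    return (xi1 << 2) | (xi2 << 1) | xi3

def decode(code: int) -> tuple[int, int, int]:
    """Recover (xi1, xi2, xi3) from a 3-bit code."""
    return (code >> 2) & 1, (code >> 1) & 1, code & 1

# Every combination of the three features yields a distinct code 0..7.
all_codes = [encode(*bits) for bits in product((0, 1), repeat=3)]
print(all_codes)
```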

In the model proposed in [6], the basis of the space of mimic features of emotional states is built from the experimenter's own judgment. This requires specific expertise and, accordingly, gives inconsistent results, since different people assess the same muscle manifestation differently.

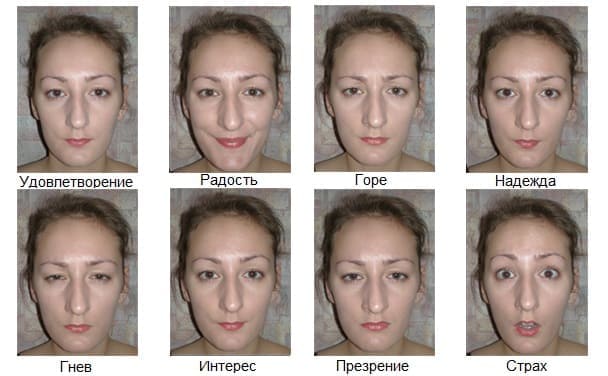

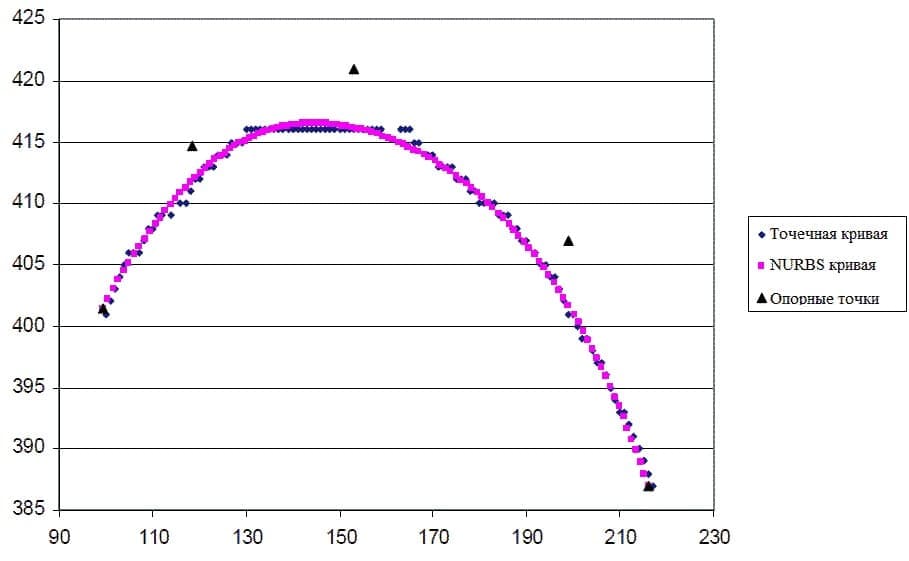

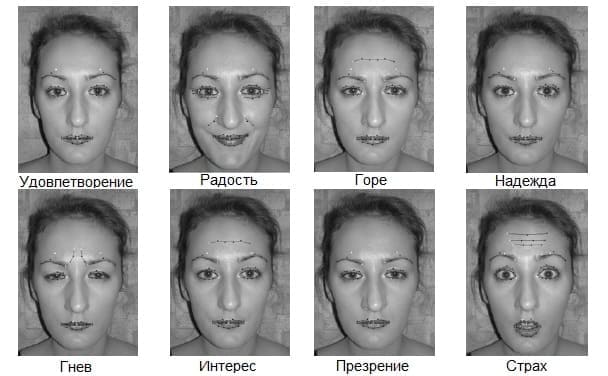

To move from a phenomenological definition of the characteristic mimic features to a formal one, [1] proposed using NURBS curves [7]. The contours of the eyebrows, eyelids and lips were stored and processed as such curves. Picture 2.1 presents eight basic emotions for a particular person. Picture 2.2 shows an example of a NURBS curve for the right eyebrow with the emotion of joy. Since, for a fixed degree and knot vector, the control points of a NURBS curve (together with their weights) uniquely determine the curve, only the vectors of control points were taken into consideration. This representation greatly simplifies processing. Picture 2.3 shows the eight basic emotions for a particular person with superimposed NURBS curves.

Picture 2.1 - Eight basic emotions for a particular person

Picture 2.2 - Example of a NURBS curve for the right eyebrow with emotion joy
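Because only control points are retained, each emotional state of a person can be stored as a single numeric vector. The sketch below, assuming NumPy, concatenates the control points of all contours in a fixed order; the contour names and their order are hypothetical, and any consistent order would do.

```python
import numpy as np

# Hypothetical fixed ordering of the facial contours.
CONTOUR_ORDER = ("left_brow", "right_brow",
                 "left_upper_lid", "left_lower_lid",
                 "right_upper_lid", "right_lower_lid",
                 "upper_lip", "lower_lip")

def feature_vector(control_points: dict[str, np.ndarray]) -> np.ndarray:
    """Concatenate the (k, 2) control-point arrays of all contours into one vector."""
    return np.concatenate([np.asarray(control_points[name], dtype=float).ravel()
                           for name in CONTOUR_ORDER])

# Example: eight contours with five control points each give 8 * 5 * 2 = 80 values.
contours = {name: np.full((5, 2), k, dtype=float) for k, name in enumerate(CONTOUR_ORDER)}
v = feature_vector(contours)
print(v.shape)
```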

Having obtained the coordinates of all the necessary control points for all eight basic emotions of a particular person, one can automatically determine an arbitrary emotional state of that person using the mathematical model proposed in [1, 6]. For example, for the emotional state of guilt, the following coefficients of the combination of basic states were obtained: α1 = α3 = α4 = α6 = α7 = α8 = 0, α2 = 0.7, α5 = 0.3, where the αi correspond to the following basic emotions: joy, sorrow, hope, fear, satisfaction, anger, interest, contempt. According to [4], a state that combines satisfaction (α5 = 0.3) and sorrow (α2 = 0.7) corresponds to the emotion of guilt.

Picture 2.3 - Eight basic emotions for a particular person with superimposed NURBS curves
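The coefficients αi in the guilt example are non-negative and sum to one, so finding them amounts to a convex decomposition of an observed feature vector over the eight basic-emotion vectors. The computational details of the model in [1, 6] are not reproduced here; as one possible way to obtain such coefficients, the sketch below minimizes the squared reconstruction error over the probability simplex with projected gradient descent, assuming NumPy. The random basis is a stand-in for real control-point vectors, and the function names are hypothetical.

```python
import numpy as np

def project_to_simplex(v: np.ndarray) -> np.ndarray:
    """Euclidean projection onto {a : a_i >= 0, sum(a) = 1} (sort-based algorithm)."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u)
    k = np.arange(1, v.size + 1)
    rho = k[u - (css - 1.0) / k > 0][-1]
    theta = (css[rho - 1] - 1.0) / rho
    return np.maximum(v - theta, 0.0)

def convex_coefficients(basis: np.ndarray, x: np.ndarray, iters: int = 5000) -> np.ndarray:
    """Find alpha >= 0 with sum(alpha) = 1 minimizing ||basis @ alpha - x||^2.
    `basis` holds one column (feature vector) per basic emotion."""
    step = 1.0 / np.linalg.norm(basis, 2) ** 2  # 1 / Lipschitz constant of the gradient
    alpha = np.full(basis.shape[1], 1.0 / basis.shape[1])
    for _ in range(iters):
        gradient = basis.T @ (basis @ alpha - x)
        alpha = project_to_simplex(alpha - step * gradient)
    return alpha

EMOTIONS = ("joy", "sorrow", "hope", "fear", "satisfaction", "anger", "interest", "contempt")
rng = np.random.default_rng(0)
basis_vectors = rng.normal(size=(40, len(EMOTIONS)))  # stand-in feature vectors
observed = 0.7 * basis_vectors[:, 1] + 0.3 * basis_vectors[:, 4]  # guilt-like mixture
alpha = convex_coefficients(basis_vectors, observed)
for name, a in zip(EMOTIONS, alpha):
    print(f"{name:>12}: {a:.3f}")
```

On this synthetic mixture the sketch should recover approximately 0.7 for sorrow, 0.3 for satisfaction and zero for the rest. Projecting onto the simplex enforces the convexity constraints directly instead of normalizing the coefficients afterwards.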

3. DESCRIPTION OF THE USED METHODS AND ALGORITHMS

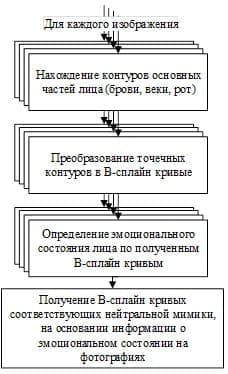

Recognizing and modeling emotions on a face is a complex task involving a number of subtasks [8, 9]. Restoring facial fragments from a person's facial expression involves four main stages: finding the contours of the main parts of the face, converting these contours to B-spline curves, determining the emotion on the face, and bringing the contours of the parts of the face to a neutral state. Picture 3.1 shows the sequence of actions for solving the problem at a high level. Each step is considered in detail below.

Picture 3.1 - Sequence of actions to solve the problem

3.1 Description of the stage of obtaining the contours of the main parts of the face

Since the position of the head in the images is rarely constant, the input image must first be normalized. Normalization brings the entire set of photos into the same coordinate system with the same grid step. Normalization is usually based on the centers of the pupils, with the distance between them serving as the unit of measurement. There are quite a few methods for finding the coordinates of the pupil centers. However, most of them aim to find the edge of the pupil, and finding the center is secondary. Finding the pupil edge is one of the intermediate steps in identifying a person by the iris. For recognizing and modeling emotions, however, the pupil edge is not needed; its center is enough. Therefore, a method was developed that finds only the center of the pupil, which somewhat reduces the computational complexity of the problem.

Knowing the coordinates of the pupil centers, one can determine the inclination of the head relative to the horizon line and get an approximate idea of the position of the face in the image. The angle of head rotation θ is calculated from the difference Δy between the ordinates of the pupil centers relative to their horizontal separation Δx, θ = arctan(Δy/Δx), and the image is then rotated by this angle. This rotation avoids errors in the subsequent localization of the parts of the face.
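The normalization can be sketched on landmark coordinates rather than on pixels. The sketch below, assuming NumPy and (x, y) image coordinates, moves the origin to the midpoint between the pupils, undoes the head tilt θ = arctan(Δy/Δx) and divides by the interpupillary distance. Rotating the image itself would use the same angle with an image-rotation routine; the function name and return convention are hypothetical.

```python
import numpy as np

def normalize_landmarks(points, left_pupil, right_pupil):
    """Map image points into a pupil-based frame: origin at the midpoint between
    the pupils, x-axis through both pupils, interpupillary distance = 1.
    Returns the normalized points and the tilt angle in degrees."""
    points = np.asarray(points, dtype=float)
    left = np.asarray(left_pupil, dtype=float)
    right = np.asarray(right_pupil, dtype=float)
    dx, dy = right - left
    angle = np.arctan2(dy, dx)      # head tilt (sign follows the image's y-axis direction)
    distance = np.hypot(dx, dy)     # interpupillary distance, the unit of length
    c, s = np.cos(-angle), np.sin(-angle)
    rotation = np.array([[c, -s], [s, c]])  # rotation by -angle undoes the tilt
    centered = points - (left + right) / 2.0
    return centered @ rotation.T / distance, np.degrees(angle)

pts, tilt = normalize_landmarks([(100, 100), (140, 130)], (100, 100), (140, 130))
print(pts, tilt)
```

After this transform the pupils always land at (-0.5, 0) and (0.5, 0), so landmark sets from different photos become directly comparable.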

The interpupillary distance is also used to scale the original image. Based on this distance, the main parts of the face are localized in the image, which reduces the complexity of searching for their contours. Localization relies on a priori knowledge of the approximate structure of the human face. The main steps of the algorithm developed to find the pupil centers are shown in Picture 3.2.

Picture 3.2 - Basic steps of the pupil search algorithm
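The steps of the developed algorithm are given only in Picture 3.2, so they are not reproduced here. As a much simpler stand-in, the sketch below, assuming NumPy, estimates the pupil center as the centroid of the darkest pixels in an eye region. It assumes the pupil is the darkest blob in the region; eyelashes, shadows or glasses frames can bias the result, and the `dark_fraction` default is a hypothetical value.

```python
import numpy as np

def pupil_center(eye_region: np.ndarray, dark_fraction: float = 0.02) -> tuple[float, float]:
    """Estimate the pupil center as the centroid of the darkest pixels.

    `dark_fraction` is the share of pixels treated as pupil (hypothetical default).
    Returns (row, col) in region coordinates.
    """
    region = np.asarray(eye_region, dtype=float)
    threshold = np.quantile(region, dark_fraction)  # intensity of the darkest pixels
    rows, cols = np.nonzero(region <= threshold)
    return float(rows.mean()), float(cols.mean())

# Synthetic eye region: bright background with a dark disc centered at (20, 35).
yy, xx = np.mgrid[:40, :60]
eye = np.full((40, 60), 200.0)
eye[(yy - 20) ** 2 + (xx - 35) ** 2 <= 36] = 10.0
print(pupil_center(eye))
```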

Once the pupil centers have been obtained, one can start searching for the contours of the main parts of the face, namely the eyelids, eyebrows and mouth (Picture 3.3).

Picture 3.3 - Finding control points on the face

The eyelid contours are found separately for each eye: first the contour of the upper eyelid, then that of the lower one. The region is first smoothed with Gaussian and median filters, which removes noise and partially suppresses the eyelashes. The contour of the upper eyelid is obtained with the Sobel differential operator. The lower border is difficult to obtain from an analysis of image edges, so it is approximated by two straight line segments. First, the edge point at the center of the lower eyelid is found, again using the Sobel operator. Then, connecting the leftmost and rightmost points of the upper eyelid with this center point gives a fairly accurate approximation of the lower eyelid.
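The two operations described above can be sketched as follows, assuming NumPy and SciPy's `ndimage` module for the Gaussian filter, median filter and Sobel operator. `vertical_edges` returns the magnitude of the vertical intensity gradient, which responds to roughly horizontal borders such as an eyelid; `lower_eyelid` builds the two-segment approximation from the eye corners and the lower-eyelid center. Filter parameters and function names are assumptions.

```python
import numpy as np
from scipy import ndimage

def vertical_edges(region: np.ndarray, sigma: float = 1.0, median_size: int = 3) -> np.ndarray:
    """Gaussian + median smoothing followed by the vertical Sobel gradient magnitude."""
    smoothed = ndimage.gaussian_filter(np.asarray(region, dtype=float), sigma=sigma)
    smoothed = ndimage.median_filter(smoothed, size=median_size)
    return np.abs(ndimage.sobel(smoothed, axis=0))  # axis=0: derivative along rows

def lower_eyelid(left_corner, right_corner, lower_center, xs):
    """Two straight segments joining the eye corners (extreme points of the upper
    eyelid) to the lower-eyelid center point; points are (x, y) and the
    x coordinates must increase from left corner to center to right corner."""
    knots_x = [left_corner[0], lower_center[0], right_corner[0]]
    knots_y = [left_corner[1], lower_center[1], right_corner[1]]
    return np.interp(xs, knots_x, knots_y)

# Synthetic horizontal border between rows 9 and 10.
img = np.zeros((20, 30))
img[10:, :] = 1.0
edges = vertical_edges(img)
print(int(np.argmax(edges[:, 15])))
print(lower_eyelid((0, 10), (40, 12), (20, 20), [0, 10, 20, 30, 40]))
```

For the upper eyelid, the strongest response of `vertical_edges` in each column would serve as a contour point; selecting it robustly on real images needs more care than shown here.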

Eyebrow contours are also found separately. Gaussian and median filters are applied first, since the structure of the eyebrow is often heterogeneous. The Sobel operator is then used to obtain the eyebrow contour. However, this contour is rather thick and redundant, so it must be skeletonized (reduced to a line 1 pixel wide): the center line between the upper and lower borders of the eyebrow is taken as its contour.
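The skeletonization step, taking the center line between the upper and lower borders, can be sketched column by column. The sketch assumes NumPy and an already available binary mask of the eyebrow; how that mask is produced from the Sobel response is not specified here, and the function name is hypothetical.

```python
import numpy as np

def eyebrow_midline(mask: np.ndarray) -> np.ndarray:
    """Reduce a binary eyebrow mask to a 1-pixel-wide line.

    In every column that contains eyebrow pixels, the line passes through the
    midpoint between the topmost and bottommost pixel (upper and lower border).
    Returns an (n, 2) array of (x, y) points.
    """
    mask = np.asarray(mask, dtype=bool)
    points = []
    for x in range(mask.shape[1]):
        rows = np.flatnonzero(mask[:, x])
        if rows.size:
            points.append((x, (rows[0] + rows[-1]) / 2.0))
    return np.array(points, dtype=float).reshape(-1, 2)

mask = np.zeros((10, 6), dtype=bool)
mask[2:7, 1:5] = True  # eyebrow band spanning rows 2..6
print(eyebrow_midline(mask))
```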

The contour of the mouth is not distinct enough, so methods based on edge analysis are not applicable here. To find the mouth, an approach based on color segmentation is used. In the RGB color space it is difficult to achieve a clean separation of the image into lip and non-lip classes. Therefore, after applying the Gaussian and median filters, the R and G channels are normalized and the image is converted to the R/G color space. Lips are much easier to find in the resulting color space, and threshold segmentation is used for this.
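A minimal sketch of the lip/non-lip threshold segmentation, assuming NumPy and an RGB image. Normalizing the channels is interpreted here as chromaticity normalization, r = R/(R+G+B) and g = G/(R+G+B), which removes much of the brightness variation; this interpretation, the function name and both threshold values are assumptions that would need tuning on real images. Smoothing is omitted for brevity.

```python
import numpy as np

def lip_mask(rgb: np.ndarray, r_min: float = 0.5, g_max: float = 0.25) -> np.ndarray:
    """Threshold segmentation of lips in normalized r/g chromaticity space.
    Thresholds are hypothetical defaults."""
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum(axis=-1) + 1e-9  # avoid division by zero on black pixels
    r = rgb[..., 0] / total
    g = rgb[..., 1] / total
    return (r > r_min) & (g < g_max)

# One lip-like pixel, one skin-like pixel and one black pixel.
img = np.array([[[180, 60, 80], [200, 150, 120], [0, 0, 0]]], dtype=np.uint8)
print(lip_mask(img))
```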

Conclusions

A mathematical model and an integrated information technology have been proposed for automatically determining an arbitrary emotional state of a particular person as a convex combination of certain basic states. To do this, the mathematical model and the original software are used to build a basis space of emotional states for that person. An arbitrary emotional manifestation of the person is then decomposed as a convex combination of the emotional states in this space. To build the basis of the space of emotional states, flexible templates of the contours of the main facial zones are used; these templates are described by NURBS curves. Fitting a template to the point contour of a particular image is performed by B-spline approximation, that is, by solving an overdetermined inhomogeneous system of linear equations. The proposed technology has practical value in systems for visual monitoring of operators in complex industries (nuclear power, etc.) to automatically track their emotional state.
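The B-spline approximation step can be illustrated as a linear least-squares problem: with a fixed degree, knot vector and curve parameters, each contour point gives one linear equation in the unknown control points, and with more points than control points the system N·P ≈ Q is overdetermined. The sketch below assumes NumPy, a clamped cubic knot vector with uniform interior knots and chord-length parametrization; these choices and the function names are assumptions, not necessarily those of the original software.

```python
import numpy as np

def basis(i: int, p: int, u: float, knots: np.ndarray) -> float:
    """Cox-de Boor recursion for the i-th B-spline basis function of degree p."""
    if p == 0:
        return 1.0 if knots[i] <= u < knots[i + 1] else 0.0
    value = 0.0
    if knots[i + p] > knots[i]:
        value += (u - knots[i]) / (knots[i + p] - knots[i]) * basis(i, p - 1, u, knots)
    if knots[i + p + 1] > knots[i + 1]:
        value += ((knots[i + p + 1] - u) / (knots[i + p + 1] - knots[i + 1])
                  * basis(i + 1, p - 1, u, knots))
    return value

def basis_matrix(params, n_ctrl: int, knots: np.ndarray, p: int = 3) -> np.ndarray:
    """N[j, i] = N_{i,p}(u_j): one row (equation) per contour point."""
    end = knots[-1] - 1e-12  # spans are half-open, so keep u = 1 inside the last span
    return np.array([[basis(i, p, min(u, end), knots) for i in range(n_ctrl)]
                     for u in params])

def fit_bspline(points, n_ctrl: int, p: int = 3, params=None):
    """Least-squares fit of a clamped B-spline with n_ctrl control points."""
    points = np.asarray(points, dtype=float)
    if params is None:
        # Chord-length parametrization (an assumption).
        steps = np.linalg.norm(np.diff(points, axis=0), axis=1)
        params = np.concatenate([[0.0], np.cumsum(steps)]) / steps.sum()
    interior = np.linspace(0.0, 1.0, n_ctrl - p + 1)[1:-1]
    knots = np.concatenate([np.zeros(p + 1), interior, np.ones(p + 1)])
    n = basis_matrix(params, n_ctrl, knots, p)
    ctrl, *_ = np.linalg.lstsq(n, points, rcond=None)  # solve the overdetermined system
    return ctrl, knots, np.asarray(params, dtype=float)

# Fit five control points to 30 samples of a straight contour.
line = np.column_stack([np.linspace(0, 10, 30), 2 * np.linspace(0, 10, 30)])
ctrl, knots, params = fit_bspline(line, n_ctrl=5)
print(np.abs(basis_matrix(params, 5, knots) @ ctrl - line).max())
```

Using fewer control points than contour points is what makes the template smooth: noise in individual edge pixels is averaged out by the least-squares solution.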

References

- Krivonos Yu.G. Modeling and analysis of mimic manifestations of emotions / Yu.G. Krivonos, Yu.V. Krak, A.V. Barmak // Reports of the National Academy of Sciences of Ukraine, 2011. – No. 12. – P. 51-55.

- Ekman P. Learning to Make Facial Expressions / P. Ekman, W.V. Friesen. – Part II. – Palo Alto, 2009. Available at: http://mplab.ucsd.edu/wp-content/uploads/wu_icdl20091.pdf

- Ekman P. Facial Action Coding System / P. Ekman, W.V. Friesen. – Part II. – Palo Alto, 2006. Available at: https://pdfs.semanticscholar.org/99bf/8ac8c131291d771923d861b188510194615e.pdf

- Leont'ev V.O. Classification of emotions / V.O. Leont'ev. – Odessa : IIC, 2006. – 84 p.

- Efimov A.N. Modeling and recognition of mimic manifestations of emotions on a human face / G.M. Efimov // Artificial Intelligence, 2009. – P. 532-542.

- Krak Yu.V. Synthesis of mimic expressions of emotions based on a formal model / Yu.V. Krak, A.V. Barmak, M. Efimov // Artificial Intelligence, 2007. – No. 2. – P. 22-31.

- Piegl L. The NURBS Book / Les Piegl, Wayne Tiller. – [2nd Edition]. – Berlin : Springer-Verlag, 1996. – 646 p.

- Ekman P. Cross-cultural studies of facial expression / P. Ekman, W.V. Friesen // Darwin and facial expression: A century of research in review. – N.Y. : Academic, 2010. – P. 196-222.

- Ekman P. Measuring facial movement / P. Ekman, W. Friesen // Environmental Psychology and Nonverbal Behavior, 1976. – P. 56-75. Available at: https://link.springer.com/article/10.1007/BF01115465

- Izard C. Human Emotions / C. Izard. – Moscow : Moscow State University Press, 2010. – 439 p.

- Olsson L. From unknown sensors and actuators to actions grounded in sensorimotor perceptions / L. Olsson, C. Nehaniv, D. Polani // Connection Science, 2006. – Vol. 18, No. 2. – P. 121-144.