General-purpose computing on GPU with CUDA

Authors: Komarichev R. E., Girovskaya I. V.

Source: Young scientists' researches and achievements in science: proceedings of the scientific and technical conference for young scientists (Donetsk, 16 April 2020) / ed. E. N. Kushnirenko. Donetsk: DonNTU, 2020. pp. 56-62.

Abstract

Komarichev R. E., Girovskaya I. V. General-purpose computing on GPU with CUDA. The article describes the main differences between CPUs and GPUs and reviews the basics of how CUDA works. Two common GPGPU tasks are analyzed and explained with examples.

Everyone who has ever tried programming started with small scripts that solved simple tasks. Such programs do not need much computing power and run fine on a single CPU core. You can go a long way without ever running into a lack of computing power. But there are many cases where a sequential solution is not fast enough for real-world use.

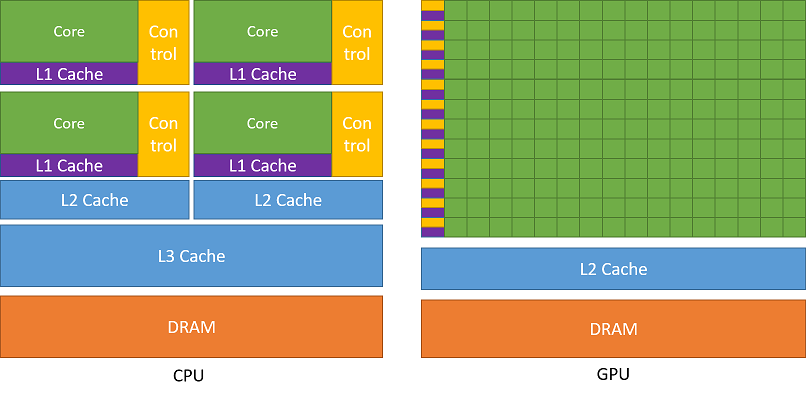

As you might know, the CPU (central processing unit) and the GPU (graphics processing unit) are both processors, but they are optimised for different kinds of work.

The main task of a CPU is to execute chains of instructions as quickly as possible. It is designed to run several chains at the same time, or to split one chain of instructions into several and then merge them back together. Each instruction depends on the previous ones, which is why a CPU has so few computing cores. All the emphasis is on execution speed and on reducing idle time, which is achieved with caches and pipelines.

The main task of a GPU is rendering graphics and visual effects. In practice, its work consists of a huge number of independent tasks, so it has much more memory than a CPU has, although that memory is not as fast. A modern GPU also has thousands of computing cores, whereas a CPU most often has 2-8.

Multithreading support also differs considerably. A CPU executes 1-2 threads per core, while a GPU can launch several thousand threads on each of its multiprocessors. Switching between threads costs a CPU hundreds of clock cycles, whereas a GPU can switch several threads in a single clock cycle. On a CPU most of the chip area is occupied by instruction buffers, hardware branch prediction and large caches, while on a GPU most of the area is taken by execution units (Fig. 1) [1].

Figure 1. CPU and GPU chips

One of the most common operations that runs much faster on a GPU than on a CPU is matrix multiplication. Say we have two square NxN matrices A and B, and their product is the matrix C. By the rules of matrix multiplication, each element of C is the sum of the products of the corresponding elements of a row of A and a column of B.
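Written out as a formula, the rule reads as follows. The indices i, j and k are notation introduced here for illustration and do not come from the source:

$$
C_{ij} = \sum_{k=1}^{N} A_{ik} B_{kj}, \qquad i, j = 1, \dots, N
$$

The right-hand side for a given pair (i, j) uses only row i of A and column j of B, and never another element of C.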

For N=100 we have to perform (100+99)*100*100=1990000 arithmetic operations (100 multiplications and 99 additions for each of the 100*100 elements), not counting index increments. That is a lot to compute sequentially, and the larger N is, the longer it takes. The point is that each element of C is independent of every other, so we do not have to wait for the result of C1,1 to start computing C1,2 or any other element. Thus we can parallelise these computations efficiently.
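As a rough illustration of the sequential baseline, here is a minimal C++ sketch of the triple loop together with the operation count used above. The names `Matrix`, `multiply_sequential` and `operation_count`, and the flat row-major storage, are assumptions made for this sketch and not the authors' code.

```cpp
#include <cstddef>
#include <vector>

// Row-major N x N matrix stored in a flat vector (a layout chosen for this sketch).
using Matrix = std::vector<double>;

// Sequential product C = A * B: three nested loops, one output element at a time.
Matrix multiply_sequential(const Matrix& a, const Matrix& b, std::size_t n) {
    Matrix c(n * n, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            double sum = 0.0;
            for (std::size_t k = 0; k < n; ++k) {
                sum += a[i * n + k] * b[k * n + j];
            }
            c[i * n + j] = sum;  // depends only on row i of A and column j of B
        }
    }
    return c;
}

// Operation count from the text: N multiplications and N - 1 additions
// for each of the N * N elements (valid for n >= 1).
unsigned long long operation_count(unsigned long long n) {
    return (n + (n - 1)) * n * n;
}
```

The two outer loops never read `c`, which is what makes every (i, j) iteration a candidate for its own thread.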

A popular technology for this purpose today is CUDA, a parallel computing architecture developed by NVIDIA Corporation that can significantly increase computing performance through the use of GPUs. It is widely used by software developers, scientists and researchers in fields such as video and image processing, computational biology and chemistry, fluid dynamics modeling, reconstruction of computed tomography images, seismic analysis and more. To use this technology you need an NVIDIA GeForce 400 series video card or later and C/C++ programming skills. Specifications of the latest cards are given below (Table 1) [2][3][4].

Table 1. Specifications of latest NVIDIA video cards

| Model | Number of CUDA cores | Base clock (MHz) | Memory amount |
|---|---|---|---|
| GTX 1050 | 640 / 768 | 1354 / 1392 | 2 GB GDDR5 / 3 GB GDDR5 |
| GTX 1050 Ti | 768 | 1290 | 4 GB GDDR5 |
| GTX 1060 | 1152 / 1280 | 1506 / 1506 | 3 GB GDDR5 / 6 GB GDDR5X |
| GTX 1070 | 1920 | 1506 | 8 GB GDDR5 |
| GTX 1070 Ti | 2432 | 1607 | 8 GB GDDR5 |
| GTX 1080 | 2560 | 1607 | 8 GB GDDR5X |
| GTX 1080 Ti | 3584 | 1481 | 11 GB GDDR5X |
| GTX 1650 | 896 / 896 | 1485 / 1410 | 4 GB GDDR5 / 4 GB GDDR6 |
| GTX 1650 SUPER | 1280 | 1530 | 4 GB GDDR6 |
| GTX 1660 | 1480 | 1530 | 6 GB GDDR5 |
| GTX 1660 Ti | 1536 | 1500 | 6 GB GDDR6 |
| GTX 1660 SUPER | 1408 | 1530 | 6 GB GDDR6 |
| RTX 2060 | 1920 | 1365 | 6 GB GDDR6 |
| RTX 2070 | 2304 | 1410 | 8 GB GDDR6 |
| RTX 2070 SUPER | 2560 | 1605 | 8 GB GDDR6 |
| RTX 2080 | 2944 | 1515 | 8 GB GDDR6 |
| RTX 2080 SUPER | 3072 | 1650 | 8 GB GDDR6 |

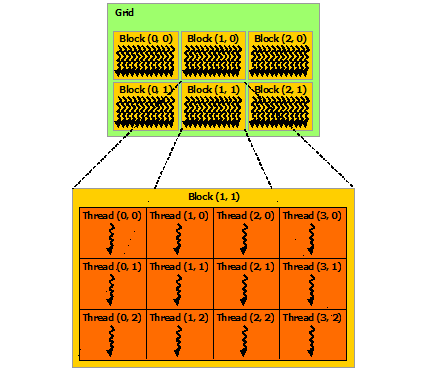

CUDA C++ extends C++ and allows us to define C++ functions, called kernels, that are executed N times in parallel by N different CUDA threads. To set the number of parallel threads and distribute data among them, CUDA uses the concepts of blocks and grids. A kernel call launches a grid of blocks, and each block contains many parallel threads (Fig. 2).

Figure 2. Grid of thread blocks
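To make the grid, block and thread hierarchy more concrete, the following sketch emulates a one-dimensional kernel launch in plain C++ so that it runs without a GPU. `ThreadContext`, `scale_kernel` and `launch_scale` are hypothetical names for this illustration. The struct fields stand in for CUDA's built-in `blockIdx.x`, `blockDim.x` and `threadIdx.x`, and the nested loops stand in for threads that real hardware would run in parallel.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for CUDA's built-in blockIdx.x, blockDim.x and threadIdx.x.
struct ThreadContext {
    std::size_t block_idx;
    std::size_t block_dim;
    std::size_t thread_idx;
};

// Body of the "kernel": each thread handles the single element it owns.
void scale_kernel(const ThreadContext& ctx, std::vector<int>& data, int factor) {
    std::size_t i = ctx.block_idx * ctx.block_dim + ctx.thread_idx;  // global thread index
    if (i < data.size()) {  // the last block may contain threads with no element
        data[i] *= factor;
    }
}

// Emulated launch: visit every thread of every block in the grid.
// On a GPU these iterations run in parallel; here they run one after another.
void launch_scale(std::vector<int>& data, int factor, std::size_t block_dim) {
    std::size_t grid_dim = (data.size() + block_dim - 1) / block_dim;  // blocks needed, rounded up
    for (std::size_t b = 0; b < grid_dim; ++b) {
        for (std::size_t t = 0; t < block_dim; ++t) {
            scale_kernel(ThreadContext{b, block_dim, t}, data, factor);
        }
    }
}
```

For example, calling `launch_scale(v, 3, 4)` on a 10-element vector uses 3 blocks of 4 threads. The last two threads of the final block fall outside the data and do nothing.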

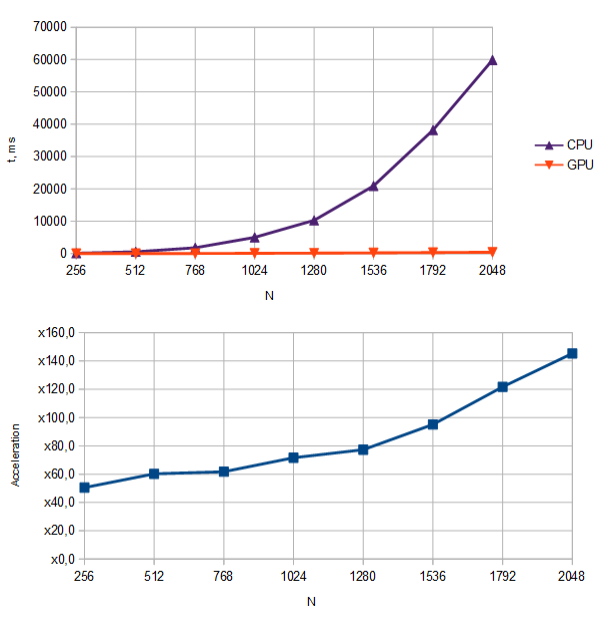

Let's run some experiments and see how much we gain with CUDA. The hardware is an Intel Core i7-8750H versus an NVIDIA GeForce RTX 2060. In each experiment we multiply two square NxN matrices on the CPU (single thread) and on the GPU and measure the elapsed time (Table 2). Blocks always have dimension 32x32, and the grid size is calculated from the block size and the matrix size so that each element of C is computed by its own thread.
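The grid-size calculation and the one-thread-per-element scheme could look roughly like the sketch below. It is a CPU emulation in plain C++ rather than the authors' kernel, which the source does not show. `grid_side`, `matmul_thread` and `matmul_grid` are hypothetical names, and the four nested loops stand in for the parallel threads of a real launch.

```cpp
#include <cstddef>
#include <vector>

constexpr std::size_t kBlockSide = 32;  // 32 x 32 threads per block, as in the experiment

// Blocks needed along one dimension so that the grid covers all n rows (or columns).
std::size_t grid_side(std::size_t n) {
    return (n + kBlockSide - 1) / kBlockSide;  // ceiling of n / 32
}

// Work of one thread: compute the single element C[row][col] of the product.
void matmul_thread(const std::vector<float>& a, const std::vector<float>& b,
                   std::vector<float>& c, std::size_t n,
                   std::size_t row, std::size_t col) {
    if (row >= n || col >= n) return;  // threads outside the matrix do nothing
    float sum = 0.0f;
    for (std::size_t k = 0; k < n; ++k) {
        sum += a[row * n + k] * b[k * n + col];
    }
    c[row * n + col] = sum;
}

// Emulated launch of a grid_side(n) x grid_side(n) grid of 32 x 32 blocks.
std::vector<float> matmul_grid(const std::vector<float>& a,
                               const std::vector<float>& b, std::size_t n) {
    std::vector<float> c(n * n, 0.0f);
    const std::size_t g = grid_side(n);
    for (std::size_t by = 0; by < g; ++by)
        for (std::size_t bx = 0; bx < g; ++bx)
            for (std::size_t ty = 0; ty < kBlockSide; ++ty)
                for (std::size_t tx = 0; tx < kBlockSide; ++tx)
                    matmul_thread(a, b, c, n,
                                  by * kBlockSide + ty,   // global row
                                  bx * kBlockSide + tx);  // global column
    return c;
}
```

Every N used in the experiment is a multiple of 32, so the grid covers the matrix exactly: `grid_side(256)` is 8 and `grid_side(2048)` is 64. The bounds check only matters for other sizes.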

Table 2. Time costs of matrix multiplication on CPU and GPU

| N | CPU, ms | GPU, ms | Acceleration |
|---|---|---|---|
| 256 | 101 | 2 | x50.5 |
| 512 | 542 | 9 | x60.2 |
| 768 | 1789 | 29 | x61.7 |
| 1024 | 5013 | 70 | x71.6 |
| 1280 | 10278 | 177 | x77.3 |
| 1536 | 20925 | 220 | x95.1 |
| 1792 | 38214 | 314 | x121.7 |
| 2048 | 59879 | 420 | x145.3 |

As we can see, the operation takes much less time on the GPU. Figure 3 illustrates the resulting acceleration.

Figure 3. Matrix multiplication on CPU and GPU. Time vs N (above) and acceleration vs N (below)

To be fair, it should be noted that computing on a GPU carries additional overhead for memory transfers. Every time we want to perform a GPU computation, we have to send the input data from host memory to device memory and, once it is done, send the output data back, whereas with the CPU all data stays on the host the whole time. Luckily, this experiment involves fairly little data, so the transfers took almost no time and the results remain valid. But we should keep this point in mind.

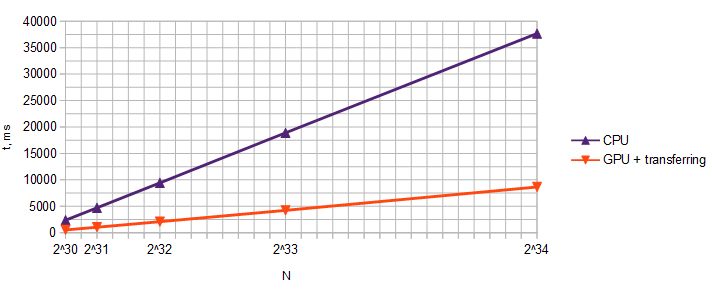

To see the influence of memory transfers, let's do another experiment. We have two enormous arrays of numbers A and B and want to find the array C of the same size where Ci = Ai + Bi. Here the number of operations per element is 1 (a single addition), unlike the previous experiment, where it was 2N-1 (N products and N-1 additions to sum them). Each element is therefore faster to compute, and we can take a much larger N without increasing the duration of the experiment (Table 3).
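A minimal C++ sketch of this second task is given below, together with the per-element operation counts of both experiments. The function names are hypothetical, and the element type `int` is an assumption, since the source only speaks of arrays of numbers.

```cpp
#include <cstddef>
#include <vector>

// Element-wise sum: C[i] = A[i] + B[i]. Assumes a and b have the same size.
std::vector<int> add_arrays(const std::vector<int>& a, const std::vector<int>& b) {
    std::vector<int> c(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        c[i] = a[i] + b[i];  // one addition, independent of every other element
    }
    return c;
}

// Operations per output element in the two experiments.
unsigned long long ops_per_element_sum() { return 1; }

// N multiplications plus N - 1 additions (valid for n >= 1).
unsigned long long ops_per_element_matmul(unsigned long long n) { return 2 * n - 1; }
```

For N=100 the matrix product costs 199 operations per element against a single operation for the array sum, while both tasks still have to move every input and output element between host and device.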

Table 3. Time costs of array summing on CPU and GPU

| N | CPU, ms | GPU without transfers, ms | One transfer of N elements, ms | GPU with transfers, ms | Acceleration |
|---|---|---|---|---|---|
| 2^30 = 1073741824 | 2374 | 42 | 165 | 537 | x4.4 |
| 2^31 = 2147483648 | 4714 | 90 | 316 | 1038 | x4.5 |
| 2^32 = 4294967296 | 9431 | 176 | 640 | 2096 | x4.5 |
| 2^33 = 8589934592 | 18907 | 366 | 1290 | 4236 | x4.5 |
| 2^34 = 17179869184 | 37678 | 706 | 2650 | 8656 | x4.4 |

As we can see, execution time grows linearly with N (Fig. 4), because N does not affect the amount of work per element. The acceleration is now many times lower because of the need to transfer a huge amount of data between host and device.
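The relation between the columns of Table 3 can be sketched as a small timing model. The source does not state how the total was obtained. The assumption here is that it is the kernel time plus three transfers of N elements (A and B to the device, C back to the host), which reproduces every row of the table. The function names are hypothetical.

```cpp
// Total GPU time under the assumption stated above: kernel time plus three
// transfers of N elements each (A and B to the device, C back to the host).
double gpu_total_ms(double kernel_ms, double one_transfer_ms) {
    return kernel_ms + 3.0 * one_transfer_ms;
}

// Speedup of the GPU over the CPU for the same task.
double speedup(double cpu_ms, double gpu_ms) {
    return cpu_ms / gpu_ms;
}
```

For the first row, `gpu_total_ms(42, 165)` gives 537 ms and `speedup(2374, 537)` is about 4.4. Without the transfers the same row would give 2374 / 42, a speedup of more than 56, so in this task the transfers account for most of the GPU time.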

Figure 4. Time costs of arrays summing on CPU and GPU

The conclusion is that the efficiency of GPU-accelerated computing is directly proportional to the number of operations performed on each piece of data independently of the others.

References

- CUDA C++ Programming Guide [Electronic resource] // CUDA Toolkit Documentation. URL: https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html (accessed 15.04.2020).

- GeForce 10 Series Graphics Cards [Electronic resource] // NVIDIA. URL: https://www.nvidia.com/en-us/geforce/10-series/ (accessed 15.04.2020).

- GeForce GTX 16 Series Graphics Card [Electronic resource] // NVIDIA. URL: https://www.nvidia.com/en-us/geforce/graphics-cards/16-series/ (accessed 15.04.2020).

- GeForce RTX 20 Series and 20 SUPER Graphics Cards [Electronic resource] // NVIDIA. URL: https://www.nvidia.com/en-us/geforce/20-series/ (accessed 15.04.2020).