Abstract of the graduate work

Contents

- Introduction

- 1. Analysis of non-contact gesture control systems

- 1.1 Relevance of the study

- 1.2 Overview of non-contact gesture control systems

- 1.3 Purpose and objectives of the system

- 2. Concepts for solving the problem of object detection and tracking

- 3. Algorithms of the main programme and subprogrammes

- Conclusions

- List of Sources

Introduction

Touch interfaces have virtually taken over developed markets, which has changed user expectations and UX professionals' views on human-machine interfaces (Human-Computer Interaction, HCI). Now, following touch technology, contactless gesture interfaces and Natural Language Interaction (NLI) are slowly beginning to penetrate the industry. The proliferation of these technologies promises to change the UX industry, from the heuristics we use to the design patterns and end results. Touch interfaces have made user interaction with computing devices more natural and intuitive, and with the widespread adoption of touch technologies, new concepts of interaction have begun to emerge. Thanks to the efforts of Microsoft and Apple, respectively, touchless gesture interfaces and natural language interfaces, which have long been waiting for their time, are now finally beginning to enter the industry.

Touchless gesture technology reduces the number of interface elements in productivity applications by treating the objects displayed on the screen as if they were real physical objects. It also makes it possible to use a computer in environments where touching it is undesirable for any reason, such as a kitchen or an operating theatre. Hence the task of developing non-contact (proximity) control systems.

1. Analysis of non-contact gesture control systems

1.1 Relevance of the study

Non-contact gesture control systems are a technology that allows devices to be controlled without classic interfaces (mouse, keyboard, touchscreen, etc.). Instead, the user controls technical systems with gestures such as hand or finger movements. Such systems provide a more convenient and natural way for a person to interact with a technical device than traditional means. In particular, they can be useful for people with disabilities who have difficulty using traditional controls.

Advantages of non-contact gesture control systems include:

- Better control: the user can use gestures that are natural to them, making interaction more comfortable and convenient.

- Safety: contactless gesture control systems can be used in technical devices where traditional buttons and switches may be dangerous to the user, for example in medical equipment used during surgery.

- Increased productivity: non-contact gesture control systems can reduce the time it takes to complete a task because the user can perform actions quickly using gestures.

Research in the field of non-contact gesture control of technical systems is relevant and in demand nowadays. This is due to a number of reasons:

- The increasing popularity of devices that use touchless gesture control. This applies not only to smartphones and tablets, but also to cars, televisions, game consoles, medical devices and other devices.

- Increased interest in virtual and augmented reality. Non-contact gesture control is one of the most natural and intuitive ways to interact with virtual objects, which makes it particularly important for the development of virtual and augmented reality.

- The need to improve the user experience. Touchless gesture control can improve the user experience by making it more natural and simple.

- The development of new technologies, such as artificial intelligence and machine learning, which allow for more accurate gesture recognition and more precise control.

Therefore, research in the field of non-contact gesture control of technical systems is not only relevant but will continue to evolve over the next few years. This will improve the user experience, create more user-friendly and intuitive interfaces, and expand the applications of touchless gesture control.

1.2 Overview of non-contact gesture control systems

Non-contact gesture control systems can be divided into two main categories: those based on image analysis and those that obtain information in other ways, for example from gloves, bracelets or biomedical sensors.

Image-analysis-based systems use cameras and computer vision algorithms to recognise and interpret gestures. Cameras capture video of the user's space, and computer vision algorithms then process the resulting images: they isolate gestures, identify them and convert them into control signals for technical systems. These systems in turn differ in how the image is captured; for example, the following types of cameras can be used:

- RGB cameras: record the intensity of light reflected from objects in the scene in each of the three colour channels (red, green and blue); by combining these values, the camera forms a colour image. This is the most common method of image capture owing to its wide availability and low cost relative to other methods.

- IR cameras (infrared cameras): register infrared radiation, i.e. electromagnetic radiation with a wavelength longer than that of visible light. The main advantage of infrared cameras is the ability to record thermal radiation of different levels, so the results of video capture with such cameras do not depend on the illumination of the scene.

- RGB-D cameras (depth cameras): unlike the cameras discussed above, which provide only a two-dimensional image, they also provide information about the depth of the scene. Such cameras use different methods: stereo vision, structured light, and time of flight (measuring the time it takes light to reach a scene object).

Stereo vision uses two or more cameras (either RGB or IR) placed at some distance from each other. By analysing the parallax between the frames (the difference between the images caused by the difference in camera positions), the system can determine the depth of each point in the scene.
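For a rectified stereo pair, the parallax (disparity) d of a point in pixels, its depth Z, the focal length f in pixels and the baseline B between the cameras are related by the textbook formula Z = f·B/d. The sketch below illustrates only this relation; the focal length and baseline used in the example are assumed values, not the parameters of any particular camera.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z (metres) of a point seen by a rectified stereo pair.

    Z = f * B / d, where f is the focal length in pixels, B the distance
    between the two cameras in metres and d the horizontal shift (disparity)
    of the point between the left and right images, in pixels.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity (or a failed match).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Assumed example values: f = 700 px, B = 6 cm.
for d in (42.0, 21.0, 7.0):
    print(f"disparity {d:>4} px -> depth {depth_from_disparity(700.0, 0.06, d):.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters more for distant points, which is one reason depth cameras have a limited working range.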

Structured light method: the camera projects a pattern, such as a grid or a series of infrared beams, onto the scene and then registers the distortions of this pattern caused by the geometry of objects in the scene. Analysing the obtained data makes it possible to determine the depth of each point in the scene.

Time-of-flight method (ToF camera): the camera measures the time it takes light to travel from the source to a scene object and back to the camera.
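Because the light covers the distance twice, a measured round-trip time t gives d = c·t/2. Many ToF sensors do not time individual pulses but instead measure the phase shift of amplitude-modulated light, in which case d = c·φ/(4π·f_mod). The sketch shows both relations; the timing, phase and modulation-frequency values are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance_pulsed(round_trip_s: float) -> float:
    """Distance from the measured round-trip time of a light pulse.

    Light travels to the object and back, so the one-way distance is half
    of the total path: d = c * t / 2.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def tof_distance_phase(phase_shift_rad: float, modulation_hz: float) -> float:
    """Distance for a continuous-wave ToF sensor that measures a phase shift.

    d = c * phi / (4 * pi * f_mod); the result is unambiguous only up to
    c / (2 * f_mod), after which the phase wraps around.
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)


# A 10 ns round trip corresponds to roughly 1.5 m.
print(f"{tof_distance_pulsed(10e-9):.3f} m")
# Assumed 20 MHz modulation: a phase shift of pi is half the ~7.5 m ambiguity range.
print(f"{tof_distance_phase(math.pi, 20e6):.3f} m")
```

The nanosecond scale of these times shows why ToF cameras need specialised sensors, which contributes to their cost.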

Depth cameras make it possible to determine the distance and position of objects in the scene more accurately. However, they have the following limitations: limited range, interference between cameras (in multi-camera systems), complex data processing and high cost.

Non-contact gesture control systems are applied in various fields:

Medical devices: non-contact gesture control systems allow medical personnel to operate devices without touching them, reducing the risk of infection (for example, during minimally invasive surgery), and can support other medical tasks. The Leap Motion Controller is used as a simulator for rehabilitation after injuries or surgery: it recognises hand and finger gestures and uses them to control virtual objects [5]. Intel RealSense, which uses depth cameras to collect diagnostic data on the patient's movements, pulse and breathing, is used to diagnose, monitor and treat various diseases (Parkinson's disease, sleep disorders, etc.).

Figure 1.1 – Intel RealSense Appearance

Automotive industry: touchless gesture control systems can be used in cars to control the navigation system, radio and other functions without distracting the driver from the road. An example of such a system is Cadillac User Experience (CUE), which allows drivers to control various car functions using hand gestures [5]: the navigation system, radio, air conditioning and other functions can be operated without pressing physical buttons or switches. The CUE system uses a high-resolution camera located at the top of the car interior to track the driver's gestures. Gestures, such as hand and finger movements, are recognised and converted into commands that control vehicle functions. The advantage of such a system is that it allows drivers to operate vehicle functions without taking their eyes off the road and without reducing their concentration on driving.

Interactive entertainment: touchless gesture control systems can be used to control characters in computer games, making games more interactive. Perhaps the best example in this area is Microsoft Kinect, since it was originally designed for such tasks. Kinect recognises the player's movements and translates them into the game, allowing the player to control characters and objects without touching a controller. This lets players control the game more naturally and creates a more immersive gaming environment [5]. Microsoft Kinect uses a depth camera (a ToF camera in the second-generation Kinect; the first generation used structured light) together with an RGB camera that supplements the depth information for more accurate recognition. To recognise user movements, Kinect relies on computer vision and machine learning algorithms: computer vision algorithms recognise body shapes and determine their position in space, while machine learning accounts for the variety of movements and gestures that users can make. Thus, Microsoft Kinect combines a depth camera, computer vision algorithms and machine learning to recognise movements.

Intel RealSense likewise uses a combination of technologies for gesture recognition, including a depth camera, an RGB camera, an infrared camera, microphones and software. Data from all cameras is combined and processed by software that uses machine learning algorithms for gesture and motion recognition. Machine learning makes it possible to analyse data about the movement, shape and location of objects and determine the corresponding gestures.

1.3 Purpose and objectives of the system

The aim of the work is to research and develop an intelligent system for non-contact gesture control of technical systems.

The main objective is to develop a machine vision system capable of real-time video analysis, in which image segmentation is performed to recognise objects (hand gestures) and generate a control action. The images to be processed and analysed are obtained from an RGB camera (webcam or video camera).

2. Concepts for solving the problem of object detection and tracking

The problem of detecting and tracking a human hand with a video camera can be solved using various computer vision and machine learning techniques. Let us consider some of them.

Computer vision methods for solving this problem can be described by the following sequence of operations:

Colour segmentation. This method uses skin colour information, since human skin colour usually falls within a certain range of RGB or HSV values. First, the colour range corresponding to skin is determined, either experimentally or from skin colour data in a colour space (e.g. HSV). A threshold filter is then used to select pixels whose colour lies within this range. Morphological operations such as erosion and dilation can help remove noise and improve the contours of the detected hand [6].
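The threshold filter described above can be sketched in plain Python. The HSV bounds are an assumed starting range for light-to-medium skin tones, not values from this work, and would need tuning for a given camera and lighting. HSV is scaled the way OpenCV stores 8-bit images (H 0-179, S and V 0-255); on real frames the OpenCV functions cv2.cvtColor (with cv2.COLOR_BGR2HSV) and cv2.inRange perform the same conversion and thresholding much faster.

```python
import colorsys

# Assumed skin range in OpenCV-style HSV units (H: 0-179, S and V: 0-255).
SKIN_LOWER = (0, 48, 80)
SKIN_UPPER = (20, 255, 255)


def rgb_to_hsv_opencv(r: int, g: int, b: int) -> tuple[int, int, int]:
    """Convert an 8-bit RGB pixel to HSV scaled like OpenCV (H 0-179, S/V 0-255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def skin_mask(image, lower=SKIN_LOWER, upper=SKIN_UPPER):
    """Threshold filter: 1 where the pixel's HSV lies inside [lower, upper], else 0.

    `image` is a list of rows, each row a list of (R, G, B) tuples.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            hsv = rgb_to_hsv_opencv(r, g, b)
            inside = all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))
            mask_row.append(1 if inside else 0)
        mask.append(mask_row)
    return mask


frame = [
    [(224, 172, 138), (0, 0, 255)],   # skin-like tone, pure blue
    [(0, 255, 0), (198, 134, 66)],    # pure green, darker skin-like tone
]
print(skin_mask(frame))
```

HSV is used instead of RGB because hue separates colour from brightness, so a single hue interval tolerates moderate lighting changes better than fixed RGB bounds.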

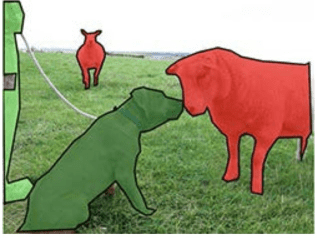

Figure 2.1 – Semantic segmentation of an image

Morphological operations. After colour segmentation, morphological operations are applied to improve the result. Erosion shrinks a region, while dilation, on the contrary, enlarges it. These operations are useful for removing small details or filling gaps in contours [8].
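A minimal sketch of these operations on a binary mask (1 = skin pixel), assuming a 3x3 square structuring element and ignoring neighbours outside the image. OpenCV provides the same operations as cv2.erode, cv2.dilate and cv2.morphologyEx, which are the practical choice on real frames; the plain-Python version only shows what they compute.

```python
def _apply(mask, reducer):
    """Slide a 3x3 window over a binary mask and reduce each neighbourhood.

    Neighbours outside the image are ignored, so pixels are not eroded just
    because they touch the frame edge.
    """
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            window = [
                mask[ny][nx]
                for ny in range(max(0, y - 1), min(rows, y + 2))
                for nx in range(max(0, x - 1), min(cols, x + 2))
            ]
            out[y][x] = reducer(window)
    return out


def erode(mask):
    """Erosion: a pixel stays 1 only if its whole neighbourhood is 1 (regions shrink)."""
    return _apply(mask, min)


def dilate(mask):
    """Dilation: a pixel becomes 1 if any neighbour is 1 (regions grow, gaps close)."""
    return _apply(mask, max)


def opening(mask):
    """Erosion followed by dilation: removes specks smaller than the window."""
    return dilate(erode(mask))


def closing(mask):
    """Dilation followed by erosion: fills small holes inside regions."""
    return erode(dilate(mask))


noisy = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 0],  # isolated noise pixel
    [0, 0, 0, 0, 0, 0, 0],
]
for row in opening(noisy):
    print(row)
```

Opening keeps the 3x3 hand region but removes the isolated noise pixel; closing would be used instead to fill small holes inside the detected region.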

Unlike the computer vision methods described above, deep learning methods use labelled datasets. Let us consider some popular deep learning algorithms for solving the problem of object detection and tracking [2].

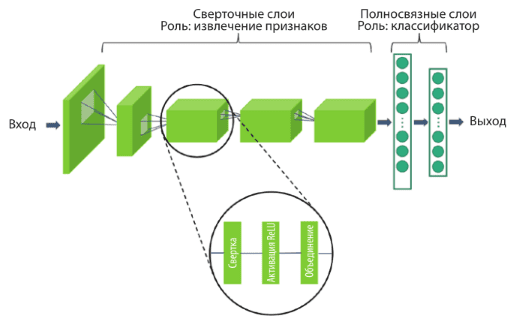

Convolutional neural networks (CNNs) are a class of neural networks designed specifically for image processing and analysis. They have been used successfully in many computer vision tasks, including the detection of objects such as human hands.

Main components of a CNN:

Convolutional layers: The main feature of CNNs is the convolutional layer. These layers perform the convolution operation by scanning the input image with convolution filters (kernels). The filters extract local features such as edges, textures and shapes from different parts of the image, so convolutional layers help the neural network learn the characteristics of objects automatically [1].

Pooling layers: Convolutional layers are often followed by pooling layers (e.g., max pooling layers). They reduce the size of the feature maps by keeping only the strongest (max pooling) or the average (average pooling) response within small local areas, discarding redundant detail. This reduces the amount of computation and the number of parameters in the subsequent layers and improves invariance to scale and small changes in objects.

Fully connected layers: At the end of the CNN, fully connected layers perform object classification. They take the features extracted by the previous layers and predict whether the object in the image is a hand or some other object.

Training with labelled data: CNN training requires labelled datasets containing images of hands and labels indicating their positions. During training, the weights of the convolutional and fully connected layers are adjusted to minimise the error on the training dataset.

Data augmentation: To make the model more robust and better at generalising, data augmentation can be used. It applies random transformations to the training images, such as rotations, lighting changes and zooming.
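The convolution and pooling steps can be illustrated with a minimal, dependency-free sketch of one CNN stage: convolution, a ReLU activation and 2x2 max pooling. The edge-detecting kernel is hand-picked for illustration (a trained network learns its kernels), and the ReLU activation is an assumption, since the text does not name an activation function. Real networks are built with deep learning frameworks rather than nested lists.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs (technically cross-correlation:
    the kernel is not flipped). Output size is (H - kh + 1) x (W - kw + 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(fmap):
    """Element-wise non-linearity applied after convolution."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the strongest response in each size x size cell."""
    return [
        [
            max(fmap[y + i][x + j] for i in range(size) for j in range(size))
            for x in range(0, len(fmap[0]) - size + 1, size)
        ]
        for y in range(0, len(fmap) - size + 1, size)
    ]


# A 6x6 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-crafted vertical-edge filter; in a trained CNN these weights are learned.
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

features = relu(conv2d(image, kernel))  # 4x4 feature map
pooled = max_pool(features)             # 2x2 after pooling
print(features)
print(pooled)
```

The 6x6 input shrinks to a 4x4 feature map after the 3x3 convolution and to 2x2 after pooling, while the strong response along the edge is preserved, which is the size reduction attributed to pooling layers above.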

CNNs are powerful tools for detecting and classifying objects in images, including hands. They can extract high-level features from images and be trained on large and diverse datasets. Their successful application, however, requires hyperparameter optimisation and computational resources for training and inference.

Figure 2.2 – Generalised representation of a convolutional neural network (CNN)

Hybrid methods for human hand detection and tracking combine the advantages of computer vision and deep learning techniques to provide a more accurate and robust solution. They can be used in complex scenarios where a single method may not be effective enough.

A hybrid method may include the following steps:

Segmentation and pre-filtering: First, colour segmentation can be applied to highlight areas that may contain a hand. This can be useful for quick and coarse detection.

Using a deep neural network: Then, on segmented areas, we can apply a pre-trained convolutional neural network (CNN) that is trained on an object detection task. This neural network will look for features characteristic of hands and try to detect them accurately in the image.

Filtering and post-processing: The results obtained from a deep neural network can be subjected to filtering and post-processing. These can include removing false positives, combining close detected hands, or other filtering techniques to improve accuracy.
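The filtering and post-processing step is often implemented as a confidence threshold followed by non-maximum suppression (NMS), which merges overlapping detections of the same hand using the intersection-over-union (IoU) measure. The sketch below is one such implementation with assumed thresholds of 0.5; it is not necessarily the post-processing used inside MediaPipe or any specific detector.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, score_threshold=0.5, iou_threshold=0.5):
    """Drop low-confidence boxes (likely false positives), then apply greedy NMS
    so that overlapping boxes of the same hand collapse into the best-scoring one.

    `detections` is a list of (box, score) pairs.
    """
    candidates = sorted(
        (d for d in detections if d[1] >= score_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, score in candidates:
        if all(iou(box, k_box) < iou_threshold for k_box, _ in kept):
            kept.append((box, score))
    return kept


raw = [
    ((100, 100, 200, 220), 0.92),  # hand
    ((105, 98, 205, 215), 0.80),   # duplicate detection of the same hand
    ((400, 120, 480, 230), 0.75),  # second hand
    ((300, 300, 310, 310), 0.20),  # low-confidence false positive
]
print(filter_detections(raw))
```

The duplicate box overlaps the first one with an IoU of about 0.86 and is suppressed, the second hand does not overlap and is kept, and the low-score box is discarded by the threshold.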

3. Algorithms of the main programme and subprogrammes

The touchless gesture control system uses a hybrid method for hand detection and gesture recognition in a real-time camera image. The hybrid method is implemented with the MediaPipe framework, which includes many methods and functions for image processing. In addition to MediaPipe, the OpenCV library (its Python module cv2) is an indispensable tool.

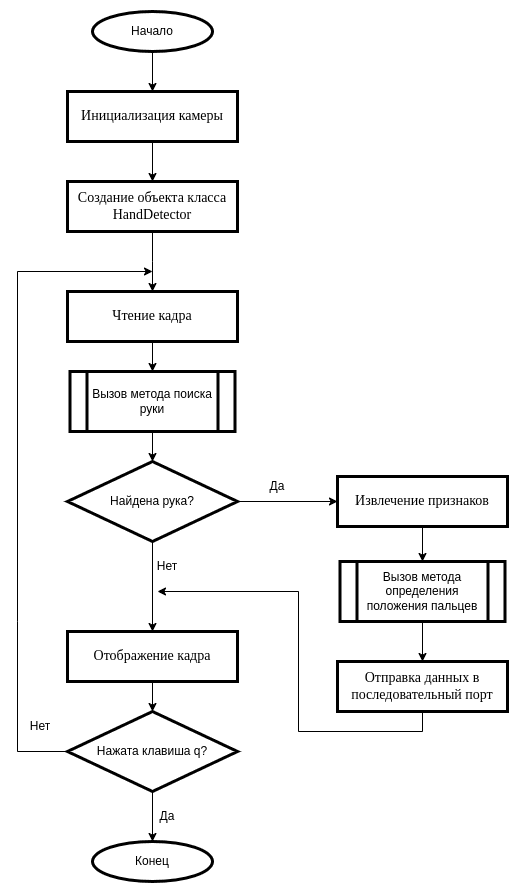

In the main programme (Fig. 3.1), the cv2 module is imported to work with images: reading frames from the video stream, displaying the processed image and drawing the detected object points on it. In addition to cv2, the HandDetectorModule, which contains the hand detection class and its methods, is imported. After that, an object of the HandDetector class is created and the camera is initialised.

Figure 3.1 – Algorithm of operation of the main programme

In the main loop of the programme, a frame is read from the video stream and processed by the findHands method, which finds the hands in the image; the results are saved to the variables hands and img. The programme then checks whether the hands variable contains data, and if it does (a hand has been detected), the extracted attributes are processed by the fingersUp method, which determines the position of the fingers. The method outputs binary values: 0 - finger down, 1 - finger up.

The finger data is sent to the serial port for further processing by the controller.
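The text does not specify the message format used on the serial port. As a hypothetical example, the five finger states could be sent as a line of ASCII digits terminated by a newline, which is simple to parse on a microcontroller. The function names and the format below are assumptions; with the pyserial package, the resulting bytes would be written through a serial.Serial object's write method, as shown in the commented lines.

```python
def encode_fingers(fingers):
    """Encode finger states [thumb, index, middle, ring, pinky] (0 = down, 1 = up)
    as ASCII digits followed by a newline, e.g. [0, 1, 1, 0, 0] gives "01100"."""
    if len(fingers) != 5 or any(f not in (0, 1) for f in fingers):
        raise ValueError("expected five binary finger states")
    return ("".join(str(f) for f in fingers) + "\n").encode("ascii")


def decode_fingers(line):
    """Inverse operation, as the receiving side might implement it."""
    text = line.decode("ascii").strip()
    return [int(ch) for ch in text]


message = encode_fingers([0, 1, 1, 0, 0])
print(message)
print(decode_fingers(message))

# Sending with pyserial (port name and baud rate are assumptions):
# import serial
# with serial.Serial("COM3", 9600, timeout=1) as port:
#     port.write(message)
```

A newline-terminated text format costs a few extra bytes per frame but lets the controller resynchronise after a lost byte by simply waiting for the next newline.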

This is followed by the frame output. If a hand has been detected in the frame, the output image shows the 21 points found by the detector, and the hand is outlined with a frame labelled with the hand type (left or right).

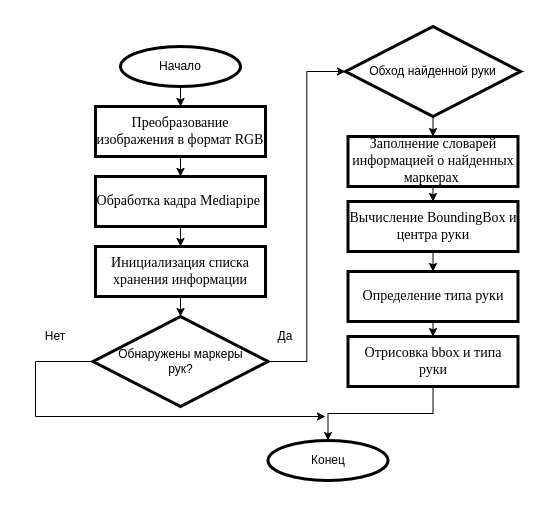

The hand-finding algorithm findHands (Fig. 3.2) performs one of the most important tasks: detecting the hand in the image. First, the frame is converted from BGR, OpenCV's default channel order, to RGB. The converted frame is then processed by the pretrained model of the MediaPipe library, whose output is the coordinates of 21 markers on the hand (Fig. 3.3). Next, the object boundaries are computed (the BoundingBox coordinates and the hand type), and the results are drawn on the frame. At the end of the algorithm, lists and dictionaries containing the detected markers and their coordinates are returned to the main programme.
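MediaPipe's hand model returns landmark x and y coordinates normalised to the [0, 1] range relative to the image width and height, so they must be scaled to pixels before a bounding box is computed. A minimal sketch of this step follows; the function name, the 20-pixel margin and the (x, y) tuple input format are assumptions, not the actual interface of HandDetectorModule.

```python
def landmarks_to_bbox(landmarks, width, height, margin=20):
    """Convert normalised landmarks to pixel coordinates and compute a bounding box.

    landmarks: iterable of (x, y) pairs in [0, 1] (21 points for MediaPipe Hands).
    Returns (pixel_points, (x, y, w, h)), with the box enlarged by `margin`
    pixels on every side and clipped to the image.
    """
    points = [(int(x * width), int(y * height)) for x, y in landmarks]
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min = max(0, min(xs) - margin)
    y_min = max(0, min(ys) - margin)
    x_max = min(width - 1, max(xs) + margin)
    y_max = min(height - 1, max(ys) + margin)
    return points, (x_min, y_min, x_max - x_min, y_max - y_min)


# Three of the 21 landmarks, for brevity, on an assumed 640x480 frame.
pts, bbox = landmarks_to_bbox([(0.5, 0.5), (0.75, 0.25), (0.25, 0.75)], 640, 480)
print(pts)
print(bbox)
```

The pixel points are what gets drawn on the frame, and the (x, y, w, h) box is the frame drawn around the hand together with its type label.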

Figure 3.2 – Algorithm of the findHands method

Figure 3.3 – System operation principle

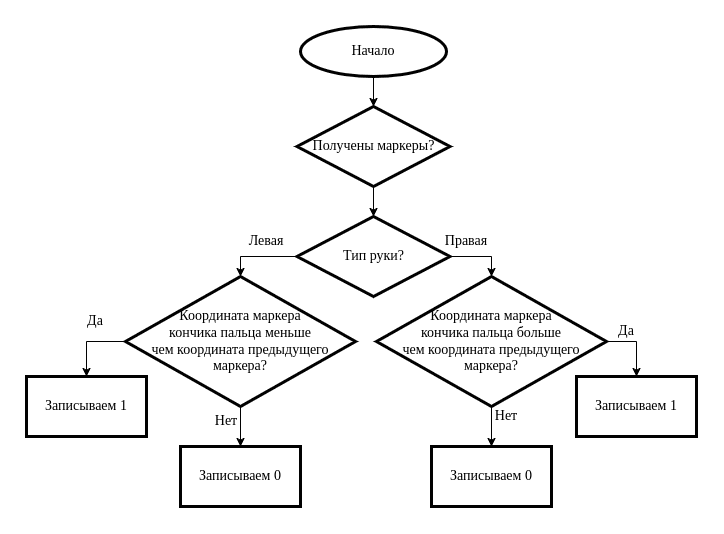

The fingersUp algorithm (Fig. 3.4) determines whether each finger of the hand is raised or lowered. Since every detected marker has its own identification number and coordinates, it is possible to determine whether one marker lies above or below another.
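The comparison described above can be sketched as follows. The sketch assumes pixel coordinates in MediaPipe's 21-landmark order (4, 8, 12, 16 and 20 are the fingertips) and compares each fingertip with the joint two indices below it. This mirrors a widely used heuristic, but the exact rules of the author's fingersUp method are not given in the text, so the function below is a hypothetical reconstruction.

```python
# MediaPipe Hands fingertip indices: thumb, index, middle, ring, little finger.
TIP_IDS = (4, 8, 12, 16, 20)


def fingers_up(points, hand_type="Right"):
    """Return [thumb, index, middle, ring, pinky] with 1 = raised, 0 = lowered.

    points: 21 (x, y) pixel coordinates in MediaPipe landmark order.
    Image y grows downwards, so a finger counts as raised when its tip has a
    smaller y than the joint two landmarks below it.
    The thumb moves sideways, so its tip (4) is compared with the joint below
    it (3) along x. The direction depends on the hand type and on whether the
    frame is mirrored; the convention used here is an assumption.
    """
    fingers = []
    thumb_tip_x, thumb_joint_x = points[4][0], points[3][0]
    if hand_type == "Right":
        fingers.append(1 if thumb_tip_x > thumb_joint_x else 0)
    else:
        fingers.append(1 if thumb_tip_x < thumb_joint_x else 0)
    for tip in TIP_IDS[1:]:
        fingers.append(1 if points[tip][1] < points[tip - 2][1] else 0)
    return fingers
```

For example, if only the index fingertip (landmark 8) lies above its joint and the thumb tip lies to the right of landmark 3, the function returns [1, 1, 0, 0, 0] for a right hand. Comparing landmarks of the same hand makes the check independent of where the hand is in the frame.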

Figure 3.4 – Algorithm of thumb condition identification

Conclusions

Non-contact gesture control systems are already on the market and are used experimentally in various fields, but they have not become widespread because of their high cost and the complexity of integration, both stemming from the use of expensive depth cameras.

The purpose and objectives of the control system have been formulated, and the main requirements for the system under development have been put forward.

The main advantage of hybrid methods lies in their ability to combine the strengths of computer vision methods (e.g., fast, coarse detection) and deep learning methods (high accuracy and the ability to learn from large datasets). This allows high accuracy and reliability to be achieved in scenarios where a single method may not be effective enough. Therefore, it is advisable to use hybrid methods to solve the problem at hand.

Software algorithms have been developed.

List of Sources

1. Hurbans R. Grokking Artificial Intelligence Algorithms. St. Petersburg: Piter, 2023. 368 p.: ill. (Programmer's Library series). ISBN 978-5-4461-2924-9. (In Russian.)

2. Trask A. Grokking Deep Learning. St. Petersburg: Piter, 2019. 352 p.: ill. (Programmer's Library series). ISBN 978-5-4461-1334-7. (In Russian.)

3. Fundamentals of Artificial Intelligence in Python Examples: A Self-Study Guide. St. Petersburg: BHV-Petersburg, 2021. 448 p.: ill. (Self-Study Guide series). (In Russian.)

4. Python: Artificial Intelligence, Big Data and Cloud Computing. St. Petersburg: Piter, 2020. 864 p. (In Russian.)

5. Leap Motion review [Electronic resource]. URL: https://habr.com/ru/companies/rozetked/articles/190404/ (In Russian.)

6. Yakupova V. V., Kagirov I. I. An approach to solving the problem of hand gesture recognition based on intelligent methods. In: Mavlyutov Readings: Proceedings of the XV All-Russian Youth Scientific Conference (in 7 volumes), Ufa, October 26–28, 2021. Vol. 4. Ufa: Ufa State Aviation Technical University, 2021. Pp. 193–197. EDN DYGQFD. (In Russian.)

7. Elakkiya R., Grif M. G., Prikhodko A. L., Bakaev M. A. Recognition of Russian and Indian sign languages used by the deaf people. Science Bulletin of the Novosibirsk State Technical University, 2020, no. 2-3(79), pp. 57–76. DOI 10.17212/1814-1196-2020-2-3-57-76. EDN BQFODJ.

8. Artificial Intelligence with Python Examples. Translated from English. St. Petersburg: Dialektika, 2019. 448 p. (In Russian.)