Abstract

Content

- Introduction

- 1. Theme urgency

- 2. Goal and tasks of the research

- 3. Overview of researches and developments

- 4. The analysis of augmented reality construction solution in real time for mobile devices.

- Conclusion

- References

Introduction

Augmented reality (AR) is a live, direct or indirect, view of a physical, real-world environment whose elements are augmented by computer-generated sensory input such as sound, video, graphics or GPS data. It is related to a more general concept called mediated reality, in which a view of reality is modified (possibly even diminished rather than augmented) by a computer. As a result, the technology functions by enhancing one’s current perception of reality. By contrast, virtual reality replaces the real world with a simulated one. Augmentation is conventionally in real-time and in semantic context with environmental elements, such as sports scores on TV during a match. With the help of advanced AR technology (e.g. adding computer vision and object recognition) the information about the surrounding real world of the user becomes interactive and digitally manipulable. Artificial information about the environment and its objects can be overlaid on the real world [1].

1. Theme urgency

Augmented reality can be applied in a wide range of areas of science and technology. The foremost are the following:

- medicine;

- engineering and design;

- cartography and GIS;

There are also a number of methods for implementing augmented reality, but the use of three-dimensional augmented reality on mobile platforms is currently a pressing problem. Mobile devices have limited computing resources, so current methods cannot be fully applied to them. This creates a need for research in this area and for the development of new methodologies for building augmented reality on portable devices.

2. Goal and tasks of the research

The aim of this research is to develop an approach to the construction of three-dimensional augmented reality that reduces hardware costs on the target mobile platforms.

Main objectives of the study:

- Analysis of real-time augmented reality solutions for mobile devices.

- Evaluation of ways to reduce hardware costs by tracking augmented reality markers.

- Identification of the characteristics of methods for real-time integration of three-dimensional objects into images, and assessment of their applicability to mobile devices.

- Analysis of the application of various video frame pre-processing methods on the ARM processor architecture.

- Synthesis of an optimal image pre-processing algorithm.

Object of study: real-time embedding of three-dimensional models in video.

Subject of study: application of augmented reality methods on mobile platforms.

Within the framework of the master's research, the following scientific results are planned:

- Development of an image pre-processing algorithm focused on reducing hardware costs on mobile platforms.

- Application of the latest advances in AR marker tracking to improve the performance of mobile augmented reality systems.

3. Overview of researches and developments

Today, there are many projects for mobile augmented reality platforms. Most of them solve a specific problem, using augmented reality as a tool and delivering an augmented reality product to the end user.

These projects are good examples of how augmented reality is used and thus confirm the relevance of the problem, but the implementation of their algorithms and methods remains proprietary. It is therefore necessary to consider solutions that provide development tools to the end user.

ARToolKit from The Human Interface Technology Lab at the University of Washington.

The most interesting research was carried out at the Human Interface Technology Lab [3]. Its basic principles are as follows:

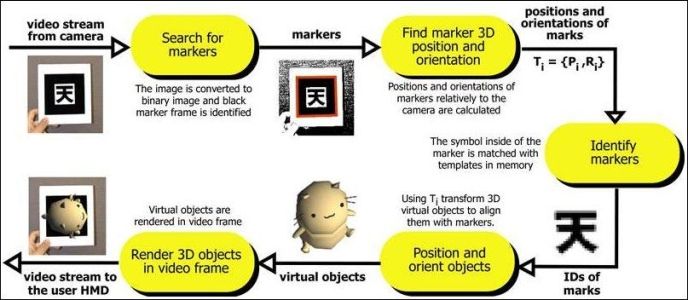

ARToolKit applications allow virtual imagery to be superimposed over live video of the real world. Although this appears magical it is not. The secret is in the black squares used as tracking markers. The ARToolKit tracking works as follows:

- The camera captures video of the real world and sends it to the computer.

- Software on the computer searches through each video frame for any square shapes.

- If a square is found, the software uses some mathematics to calculate the position of the camera relative to the black square.

- Once the position of the camera is known a computer graphics model is drawn from that same position.

- This model is drawn on top of the video of the real world and so appears stuck on the square marker.

- The final output is shown back in the handheld display, so when the user looks through the display they see graphics overlaid on the real world.

Figure 1 summarizes these steps. ARToolKit is able to perform this camera tracking in real time, ensuring that the virtual objects always appear overlaid on the tracking markers.

Figure 1 – ARToolKit Basic Principles [16]

Limitations

There are some limitations to purely computer vision based AR systems. Naturally the virtual objects will only appear when the tracking marks are in view. This may limit the size or movement of the virtual objects. It also means that if users cover up part of the pattern with their hands or other objects the virtual object will disappear.

There are also range issues. The larger the physical pattern, the further away it can be detected, and so the greater the volume in which the user can be tracked. Table 1 shows some typical maximum ranges for square markers of different sizes. These results were gathered by making marker patterns of a range of different sizes (length on a side), placing them perpendicular to the camera and moving the camera back until the virtual objects on the squares disappeared.

| Pattern Size (inches) | Usable Range (inches) |
| --- | --- |
| 2.75 | 16 |
| 3.5 | 25 |
| 4.25 | 34 |
| 7.37 | 50 |

This range is also affected somewhat by pattern complexity. The simpler the pattern the better. Patterns with large black and white regions (i.e. low frequency patterns) are the most effective. Replacing the 4.25 inch square pattern used above, with a pattern of the same size but much more complexity, reduced the tracking range from 34 to 15 inches.

Tracking is also affected by the marker orientation relative to the camera. As the markers become more tilted and horizontal, less and less of the center patterns are visible and so the recognition becomes more unreliable.

Finally, the tracking results are also affected by lighting conditions. Overhead lights may create reflections and glare spots on a paper marker and so make it more difficult to find the marker square. To reduce the glare, patterns can be made from less reflective material, for example by gluing black velvet fabric to a white base. The 'fuzzy' velvet paper available at craft shops also works very well.

4. The analysis of augmented reality construction solution in real time for mobile devices.

The goal is to make a computing device able to embed three-dimensional models in video in real time. One of the simplest ways is to place markers in the filmed scene; the device then performs the necessary calculations and places an object on them. Two-dimensional barcodes can be used for this purpose.

The barcode will be based on Reed-Solomon error-correcting codes.

The video will be treated as a sequence of static images, each of which is processed separately. Image processing proceeds in stages:

- Conversion of the image to grayscale

- Binarization

- Closed area detection

- Edge detection

- Marker corner extraction

- Coordinate transformation

- Projection of the object in the desired position

- Combining the object projection with the source image

Figure 2 - Step by step image processing

(Animation: 8 shots, 7 cycles of repetition, 160 KB)

The color image is converted to grayscale by the luminosity criterion, defined by the formula:

GS = 0.21 × R + 0.72 × G + 0.07 × B,

where GS is the resulting gray level of the pixel, and R, G, B are the color components of the original image.
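The luminosity formula can be illustrated with a minimal Python sketch. The function name `to_grayscale` and the representation of a frame as nested lists of (R, G, B) tuples are assumptions made for illustration only; a real mobile implementation would work on camera buffers.

```python
def to_grayscale(pixels):
    """Convert rows of (R, G, B) tuples to rows of integer gray levels.

    Uses the weights from the formula above: 0.21, 0.72 and 0.07.
    """
    return [
        [int(round(0.21 * r + 0.72 * g + 0.07 * b)) for (r, g, b) in row]
        for row in pixels
    ]


# A tiny 2x2 test frame: white, black, pure red, pure green.
frame = [[(255, 255, 255), (0, 0, 0)],
         [(255, 0, 0), (0, 255, 0)]]
gray = to_grayscale(frame)
```

Green contributes most to the result, which matches the large weight of the G component in the formula.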

Binarization can be performed either by local adaptive thresholding or by Otsu's method [10]. Given the characteristics of the selected markers, and since both approaches are close in performance, Otsu's method is appropriate.
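As an illustration of Otsu's method, the sketch below picks the threshold that maximizes the between-class variance of the gray-level histogram. It is a plain Python sketch under the assumption that the image is a nested list of integers in the range 0..255; the names `otsu_threshold` and `binarize` are hypothetical and not taken from the source.

```python
def otsu_threshold(gray):
    """Return the threshold (0..255) that maximizes between-class variance."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with level <= t
    sum0 = 0  # sum of gray levels of those pixels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(gray, threshold):
    """Map pixels above the threshold to 1 (light) and the rest to 0 (dark)."""
    return [[1 if v > threshold else 0 for v in row] for row in gray]


sample = [[10, 12, 200],
          [11, 210, 205]]
t = otsu_threshold(sample)
mask = binarize(sample, t)
```

A single global threshold needs only one pass over the histogram per frame, which fits the goal of keeping the per-frame cost low on a mobile device.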

To detect enclosed areas on a white background, a combination of algorithms is usually used.
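The source does not name the algorithms in this combination. One common building block, shown here purely as an assumption, is connected-component labeling: every group of touching dark pixels in the binarized image receives its own label, and each labeled region becomes a marker candidate. The function name `label_regions` is hypothetical.

```python
from collections import deque


def label_regions(binary):
    """Label 4-connected regions of dark pixels (value 0).

    Returns (labels, count), where labels has the same shape as the input
    and holds 0 for background or a region number starting at 1.
    """
    h = len(binary)
    w = len(binary[0]) if h else 0
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if binary[y][x] != 0 or labels[y][x] != 0:
                continue
            # Start a new region and flood-fill it breadth-first.
            count += 1
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx),
                               (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and binary[ny][nx] == 0
                            and labels[ny][nx] == 0):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


image = [[1, 1, 1, 1, 1],
         [1, 0, 0, 1, 0],
         [1, 0, 0, 1, 0],
         [1, 1, 1, 1, 1]]
labels, count = label_regions(image)
```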

Image contours are extracted with the Sobel operator [11]. Corners are then extracted with the Douglas-Peucker algorithm [12].
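Both steps can be sketched in plain Python. The two functions below are independent illustrations, not the author's implementation: `sobel_magnitude` computes the gradient magnitude with the standard 3x3 Sobel kernels, and `douglas_peucker` simplifies an open polyline so that only significant vertices (corner candidates) remain. The step that traces a contour from the edge image into an ordered list of points is omitted, and all names are assumptions.

```python
import math


def sobel_magnitude(gray):
    """Gradient magnitude from the 3x3 Sobel kernels; border pixels stay 0."""
    h = len(gray)
    w = len(gray[0]) if h else 0
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            out[y][x] = math.hypot(gx, gy)
    return out


def _point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def douglas_peucker(points, epsilon):
    """Simplify an open polyline; drop points closer than epsilon to the chord."""
    if len(points) < 3:
        return list(points)
    dmax, index = 0.0, 0
    for i in range(1, len(points) - 1):
        d = _point_line_distance(points[i], points[0], points[-1])
        if d > dmax:
            dmax, index = d, i
    if dmax <= epsilon:
        return [points[0], points[-1]]
    left = douglas_peucker(points[:index + 1], epsilon)
    right = douglas_peucker(points[index:], epsilon)
    return left[:-1] + right  # the split point appears in both halves


step = [[0, 0, 255, 255]] * 3  # vertical step edge
edges = sobel_magnitude(step)
noisy = [(0, 0), (1, 0.1), (2, -0.1), (3, 0), (4, 0), (4, 1), (4.1, 2), (4, 3)]
corners = douglas_peucker(noisy, 0.5)
```

For a closed marker contour, one would typically split the contour at two distant points and simplify each half, so that a square marker reduces to its four corners.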

The sides joining the marker corners may not be perpendicular in the image. The sides of the square formed by the corners serve as the coordinate axes. This makes it possible to determine the position of the "camera" relative to the marker, and hence the position of the embedded object and the origin of the coordinate system [13].
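One way to express the relation between the marker's own coordinate axes and the image, given here as an assumption rather than as the method of [13], is a planar homography: a 3x3 matrix that maps the unit square of the marker to the four detected corners. The sketch solves the eight linear equations directly; the names `solve_linear`, `homography_from_unit_square` and `project` are hypothetical. Recovering the full camera pose would additionally require the camera's intrinsic parameters.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_unit_square(corners):
    """3x3 matrix mapping (0,0), (1,0), (1,1), (0,1) to the four image corners."""
    src = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    a, b = [], []
    for (x, y), (u, v) in zip(src, corners):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def project(hm, x, y):
    """Map a point from marker coordinates to image coordinates."""
    w = hm[2][0] * x + hm[2][1] * y + hm[2][2]
    return ((hm[0][0] * x + hm[0][1] * y + hm[0][2]) / w,
            (hm[1][0] * x + hm[1][1] * y + hm[1][2]) / w)


# Corners as they might be detected in a frame (illustrative values only).
quad = [(100, 50), (300, 60), (320, 260), (90, 240)]
hq = homography_from_unit_square(quad)
center = project(hq, 0.5, 0.5)  # image position of the marker's center
```

Once the matrix is known, any point given in marker coordinates can be placed in the frame, which is what the projection of the embedded object relies on.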

The Hough transform is used for coordinate transformation [14].

The rationale for these methods and algorithms is discussed in detail in [15].

In this work, the time cost of each augmented reality stage was measured. Testing was conducted on the following devices:

Table 2 - Test stand

| | Milestone | NexusOne |
| --- | --- | --- |
| CPU (MHz) | 550 | 998 |
| RAM (MB) | 256 | 512 |
| Camera (MP) | 5.02 | 4.92 |

The time spent on each step:

Table 3 - Test results

| | Imaging | Marker recognition | Object projection | Total |
| --- | --- | --- | --- | --- |
| Milestone | 249 | 289 | 30 | 684 |
| NexusOne | 40 | 78 | 13 | 162 |

It can be seen that marker detection has the highest cost. The number of recognition operations therefore needs to be kept to a minimum. This requires an additional step: tracking of an already detected marker. This task will require significantly less time, since all the initial steps up to the projection are simplified.
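The relative weight of each stage can be checked with a short calculation over the figures of Table 3. The sketch below is illustrative only; the dictionary layout and the name `stage_shares` are assumptions. Note that the three listed stages do not add up to the reported totals, so the shares are taken relative to the total as given in the table.

```python
# Stage timings copied from Table 3 (units as reported in the source).
timings = {
    "Milestone": {"imaging": 249, "marker recognition": 289,
                  "object projection": 30, "total": 684},
    "NexusOne": {"imaging": 40, "marker recognition": 78,
                 "object projection": 13, "total": 162},
}


def stage_shares(row):
    """Return each stage's share of the reported total, in percent."""
    total = row["total"]
    return {stage: round(100.0 * value / total, 1)
            for stage, value in row.items() if stage != "total"}


shares = {device: stage_shares(row) for device, row in timings.items()}
for device, result in shares.items():
    print(device, result)
```

On both devices marker recognition takes the largest share of the frame time and object projection the smallest, which supports replacing repeated recognition with tracking.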

Conclusion

The master's thesis is devoted to the topical scientific task of real-time embedding of three-dimensional models in video. The research carried out so far has produced the following results:

- An analysis of existing solutions showed that the field of augmented reality is still under development and has no universal solutions.

- Image pre-processing methods were selected on the basis of an analysis of methods for solving the augmented reality problem.

- The time cost of the various stages was measured. The results showed that the cost of projecting and inserting objects can be neglected.

- Possible methods for improving image processing were proposed.

Further research is focused on the following aspects:

- Qualitative improvement of the proposed approach to real-time embedding of three-dimensional models in video.

- Identification of the characteristics of methods for real-time integration of three-dimensional objects into images, and assessment of their applicability to mobile devices.

- Analysis of the application of various video frame pre-processing methods on the ARM processor architecture and synthesis of an optimal image pre-processing algorithm.

- Application of the latest advances in AR marker tracking to improve the performance of mobile augmented reality systems.

At the time of writing this abstract, the master's work is not yet complete. Final completion: December 2013. The full text of the work and materials on the topic can be obtained from the author or his scientific adviser after that date.

References

- Boychenko I.V., Lezhankin A.V. Augmented reality: state of the art, problems and solutions (in Russian) // Doklady TUSURa, No. 1 (21) – 2010. – part 2. – pp. 161-165.

- Sood R. Pro Android Augmented Reality // Apress. – 2012. – 346 p.

- Kipper G. Augmented Reality: An Emerging Technologies Guide to AR // Syngress – 2012. – 208 p.

- Ukrainian startup AR23D Studio [Electronic resource]. – Access mode: startupline.com.ua – In Russian.

- Augmented reality from MTS [Electronic resource]. – Access mode: http://today.mts.com.ua/ – In Russian.

- Augmented reality: present and future (a conference by Microsoft) [Electronic resource]. – Access mode: http://www.optimization.com.ua/reports/augmented-reality-conference-2012.html – In Russian.

- Akchurin V.A. "Development of an augmented reality system for modeling three-dimensional scenes" (in Russian). Supervisor: Cand. Sc. (Eng.), Assoc. Prof. K.A. Ruchkin

- Todoraki M.I. "Overlaying three-dimensional objects on a video sequence in augmented reality technology" (in Russian). Supervisor: Assoc. Prof., dean of the KNT faculty A.Ya. Anoprienko

- Dudenko M.V. "Object positioning in augmented reality technology" (in Russian). Supervisor: Assoc. Prof., dean of the KNT faculty A.Ya. Anoprienko

- Otsu's method [Electronic resource]. – Access mode: http://en.wikipedia.org/... – In English.

- Fisher R., Perkins S., Walker A., Wolfart E. Feature Detectors – Sobel Edge Detector // HIPR2 – Image Processing Learning Resources – 12 pp.

- Ramer-Douglas-Peucker algorithm [Electronic resource]. – Access mode: http://en.wikipedia.org/... – In Russian.

- Kato H., Billinghurst M. Marker Tracking and HMD Calibration for a Video-based Augmented Reality Conferencing System // Faculty of Information Sciences, Hiroshima City University – 2002. – 10 pp.

- Hough transform [Electronic resource]. – Access mode: http://en.wikipedia.org/... – In Russian.

- Augmented reality marker recognition [Electronic resource]. – Access mode: http://habrahabr.ru – In Russian.

- How does ARToolKit work? [Electronic resource]. – Access mode: www.hitl.washington.edu – In English.