Abstract

Contents

- Introduction

- 1. Theme actuality

- 2. Goal and tasks of research

- 3. Overview of research and development

- 4. Analysis of methods and identification of the most effective one

- Conclusion

- References

Introduction

Nowadays, research and development of human-computer interaction based on pattern recognition and visual representation of multimedia data is at the forefront of modern mathematical software development. Developers of such interfaces seek to use natural means of human communication with computers: gestures, voice, facial expressions and other modalities. Gestures are particularly promising for building hardware and software user interfaces for computers and robots, and for expanding interface options for people with hearing and speech impairments.

1. Theme actuality

From a theoretical point of view, the relevance of the graduation project's theme is dictated by the need to develop methods, models and algorithms for capturing, tracking and recognizing hand gestures in real time for interaction with a computer.

From a practical point of view, the relevance of the topic is determined by the need to create software systems that provide a real-time, gesture-based interface to a computer.

The master's work addresses the problem of gesture recognition based on information obtained from available visual sensors such as depth cameras.

The literature examines various methods for recognizing individual classes of gestures. In particular, many works are devoted to determining static hand postures using an RGB camera as the sensor. However, the proposed solutions either handle only simple single gestures or have high computational complexity, which prevents their use in real-time systems.

The appearance of the Kinect sensor in 2010, the first depth camera available to a wide audience, opened up opportunities for creating gesture recognition systems and increased the relevance of gesture recognition with depth cameras. The software developed for Kinect determines the positions of the main joints of the human body.

In general, the recognition of complex dynamic gestures is still at an early stage. The variety of gestures and the human capacity to understand them are so great that the problem of their recognition by computer will remain relevant for a long time.

2. Goal and tasks of research

The aim of the study is to analyze and develop existing methods of gesture recognition using a depth camera, to increase their efficiency, and to implement them on the Microsoft Kinect platform.

Main research tasks:

- Analysis of depth-camera-based methods for hand gesture recognition and identification of the most accurate of them.

- Development of modified methods for gesture recognition.

- Implementation on the Microsoft Kinect platform.

- Experimental evaluation of the characteristics of the methods.

Research object: methods, algorithms and programs for capturing, tracking and recognizing human hand gestures.

Research subject: modification of gesture recognition methods to improve their accuracy and performance.

3. Overview of research and development

The first widely available depth sensor appeared only recently (in 2010), so little research has yet been done on hand gesture recognition using depth cameras.

The first such sensor was released by Microsoft: Kinect, originally presented for the Xbox 360 in 2010 and later for Windows PCs in 2012 [1]. Alternative depth sensors also exist: Xtion Pro [2], Leap Motion [3], DUO 3D [4] and Intel Perceptual Computing [5].

Hand gesture recognition comprises two complex problems: hand detection and gesture recognition (see Figure 3.1).

Figure 3.1 - Hand gesture recognition scheme
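As a minimal sketch of the first stage (hand detection), the snippet below segments the hand from a single depth frame under the common assumption that the hand is the object closest to the sensor. The depth frame is modelled as a plain list of rows in millimetres with 0 marking invalid pixels; the function names and the 100 mm depth band are illustrative choices, not part of the cited works or of the Kinect SDK.

```python
from typing import List, Optional, Tuple

DepthImage = List[List[int]]  # depth in millimetres; 0 marks an invalid pixel


def segment_nearest_hand(depth: DepthImage, band_mm: int = 100) -> List[List[bool]]:
    """Mark pixels lying within band_mm of the closest valid depth value.

    Assumption: the hand is the object nearest to the camera. This is a
    common heuristic, not the detection method of any cited work.
    """
    valid = [d for row in depth for d in row if d > 0]
    if not valid:
        return [[False] * len(row) for row in depth]
    nearest = min(valid)
    # Keep valid pixels whose depth is at most band_mm behind the nearest one.
    return [[0 < d <= nearest + band_mm for d in row] for row in depth]


def mask_centroid(mask: List[List[bool]]) -> Optional[Tuple[float, float]]:
    """Return the (row, col) centroid of the segmented region, or None if empty."""
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        return None
    return (sum(r for r, _ in pts) / len(pts), sum(c for _, c in pts) / len(pts))
```

The centroid can serve as a rough hand position for the second stage; a real system would also remove the forearm and smooth the mask.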

There are various methods for detecting hands in an image. They can be divided into two groups: appearance-based (vision-based) methods and methods based on a 3D hand model. Appearance-based methods [6-19] use parameters of two-dimensional images, which are compared with the same parameters extracted from the input image. Methods based on 3D models [20-26] provide a greater degree of freedom (they can simultaneously detect and identify different hand shapes), but they require a large amount of computation and a large database that includes all hand poses.
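A toy illustration of the appearance-based idea: extract a few 2D parameters from a segmented hand mask and compare them with the same parameters stored for known gestures. The two features (fill ratio and width/height ratio of the bounding box), the template labels and values, and the nearest-neighbour rule are hypothetical stand-ins; the methods in [6-19] use much richer features.

```python
import math
from typing import Dict, List, Sequence, Tuple


def mask_features(mask: List[List[bool]]) -> Tuple[float, float]:
    """Hypothetical 2D features: fill ratio and width/height ratio of the bounding box."""
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask contains no hand pixels")
    rows = [r for r, _ in pts]
    cols = [c for _, c in pts]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    return len(pts) / (height * width), width / height


def classify_by_appearance(
    features: Sequence[float], templates: Dict[str, Sequence[float]]
) -> str:
    """Return the label of the template whose feature vector is nearest (Euclidean)."""
    return min(templates, key=lambda label: math.dist(features, templates[label]))
```

For example, a fully filled square mask gives features (1.0, 1.0) and would be matched to whichever stored template lies closest in this two-dimensional feature space.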

In [27,28], a method is presented that synchronizes a virtual hand (Figure 3.2), assembled from geometric primitives, with the real hand. The virtual hand is represented by 27 parameters, and the task is to find the parameter values that most accurately describe the pose of the real hand. The search is based on particle swarm optimization (PSO) [29-31]. A software implementation can be downloaded from [35]; the library is available for non-commercial use only. In [32], the same method is applied to the simultaneous tracking of two hands.
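The optimization step can be sketched with a basic global-best PSO [31]. This is a deliberate simplification: in [27,28] each particle is a 27-parameter hand hypothesis and the objective compares a rendered depth map of that hypothesis with the observed one, whereas here a squared distance to a known target vector stands in for that objective and the dimension is 3. The inertia and acceleration coefficients are commonly used defaults, not values taken from [27,28].

```python
import random
from typing import Callable, List, Sequence, Tuple


def pso_minimize(
    objective: Callable[[Sequence[float]], float],
    lower: Sequence[float],
    upper: Sequence[float],
    n_particles: int = 30,
    n_iters: int = 200,
    w: float = 0.7298,   # inertia weight
    c1: float = 1.49618,  # attraction to the particle's own best position
    c2: float = 1.49618,  # attraction to the swarm's best position
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Global-best particle swarm optimization inside a bounding box."""
    rng = random.Random(seed)
    dim = len(lower)
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (
                    w * vel[i][d]
                    + c1 * r1 * (pbest[i][d] - pos[i][d])
                    + c2 * r2 * (gbest[d] - pos[i][d])
                )
                # Move the particle and clamp it to the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

Usage: with `target = [0.3, -0.5, 0.8]` and `objective = lambda p: sum((a - b) ** 2 for a, b in zip(p, target))`, calling `pso_minimize(objective, [-1.0] * 3, [1.0] * 3)` returns a parameter vector close to `target`. In frame-by-frame tracking the swarm would usually be initialised near the previous frame's estimate rather than uniformly over the box.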

Figure 3.2 - Virtual 3D model of hand

In [33], hand shapes are recognized with the Chamfer Matching method, and 3D trajectories are recognized using a finite state machine (FSM).
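The Chamfer Matching step of [33] can be illustrated by its core score: the mean distance from each template edge point to the nearest edge point in the image. This brute-force version is only a sketch; practical implementations use a distance transform, search over template positions and scales, and often symmetrise the score. The template labels are hypothetical, and the trajectory FSM of [33] is not covered here.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def chamfer_distance(template: Sequence[Point], edges: Sequence[Point]) -> float:
    """Mean distance from each template edge point to its nearest image edge point."""
    if not template or not edges:
        raise ValueError("both point sets must be non-empty")
    return sum(min(math.dist(t, e) for e in edges) for t in template) / len(template)


def best_shape(
    edges: Sequence[Point], templates: Dict[str, Sequence[Point]]
) -> Tuple[str, float]:
    """Return the template label with the lowest chamfer score and that score."""
    scores = {label: chamfer_distance(pts, edges) for label, pts in templates.items()}
    label = min(scores, key=scores.get)
    return label, scores[label]
```

A lower score means the template's edges lie closer to the observed edges, so the best-scoring template is taken as the recognized hand shape.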

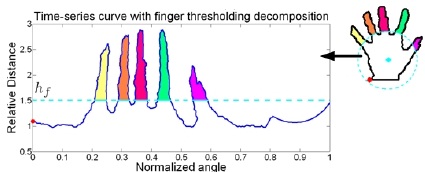

The paper [34] presents the Finger-Earth Mover's Distance (FEMD) method for hand gesture recognition. The method represents the hand data obtained from the depth camera as a curve (Figure 3.3) and performs further processing on this curve.

Figure 3.3 - Curve representation used by the Finger-Earth Mover's Distance method
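The curve in [34] plots, for each hand contour point, its angle relative to an initial point (measured around the palm centre) against its distance to the palm centre. The sketch below builds such a curve from a contour and a centre point. Normalising by the maximum distance and counting finger segments with a fixed threshold are simplifying assumptions, not details from [34], and the Finger-Earth Mover's Distance matching itself is not implemented.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def time_series_curve(contour: Sequence[Point], center: Point) -> List[Tuple[float, float]]:
    """Return (angle in degrees from the first contour point, normalised distance) pairs.

    Assumption: distances are normalised by their maximum. The angle is measured
    around the centre, so it is monotonic only for star-shaped contours.
    """
    cx, cy = center
    x0, y0 = contour[0]
    start = math.atan2(y0 - cy, x0 - cx)
    raw = []
    for x, y in contour:
        angle = math.degrees(math.atan2(y - cy, x - cx) - start) % 360.0
        raw.append((angle, math.hypot(x - cx, y - cy)))
    max_d = max(d for _, d in raw)
    if max_d == 0:
        raise ValueError("all contour points coincide with the centre")
    return [(a, d / max_d) for a, d in raw]


def count_segments_above(curve: Sequence[Tuple[float, float]], threshold: float) -> int:
    """Count cyclic runs of points whose distance is at least threshold (finger candidates).

    Returns 0 if every point is above the threshold, since no run has a start.
    """
    flags = [d >= threshold for _, d in curve]
    # A run starts where a point is above the threshold and its cyclic predecessor is not.
    return sum(1 for i in range(len(flags)) if flags[i] and not flags[i - 1])
```

Each peak of the curve that rises above the threshold corresponds to a candidate finger; FEMD then compares such finger parts between the observed hand and gesture templates.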

4. Analysis of methods and identification of the most effective one

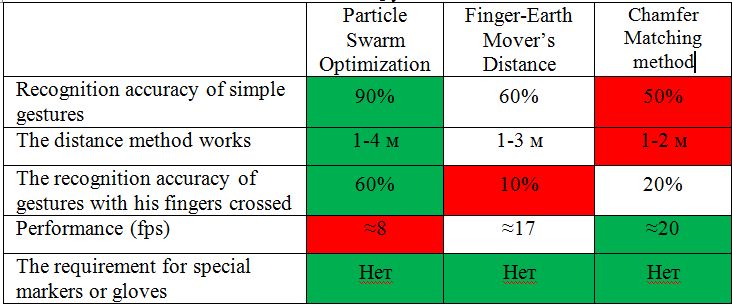

Several hand gesture recognition methods were investigated: particle swarm optimization (PSO) [27,28], Finger-Earth Mover's Distance [34] and Chamfer Matching [33]. Several key characteristics of the methods were identified and a comparative table was built (Table 5.1). In Table 5.1, the best values are highlighted in green and the worst in red.

Table 5.1 – Comparative characteristics of methods for hand gesture recognition

In [45], a software implementation of particle swarm optimization was made that tracks the hand, including all five of its fingers.

Conclusion

An analysis of hand gesture recognition methods was carried out, and the most accurate method was identified: the approach based on particle swarm optimization (PSO) [34,35].

The master's work is dedicated to research on accurate methods of hand gesture recognition based on three-dimensional scanning. The work carried out so far includes:

- Analysis of methods for hand gesture recognition based on a depth camera.

- Comparative evaluation of the methods.

- Identification of the most accurate of them.

Further research will focus on the following aspects:

- Development of modified methods for gesture recognition.

- Implementation on the Microsoft Kinect platform.

- Evaluation of the results obtained.

This master's work is not yet completed. Expected completion: January 2014. The full text of the work and materials on the topic can be obtained from the author or his supervisor after this date.

References

- Kinect - Wikipedia [Electronic resource]. – Access mode: http://ru.wikipedia.org/wiki/Kinect

- Asus Xtion Pro [Electronic resource]. – Access mode: http://ru.asus.com/Multimedia/Motion_Sensor/Xtion_PRO/

- Leap Motion [Electronic resource]. – Access mode: https://www.leapmotion.com/

- DUO 3D [Electronic resource]. – Access mode: http://duo3d.com/

- Intel Perceptual Computing [Electronic resource]. – Access mode: https://perceptualchallenge.intel.com/

- C. Chua, H. Guan, and Y. Ho. Model-based 3d hand posture estimation from a single 2d image. Image and Vision Computing, 20:191 - 202, 2002.

- N. Shimada, Y. Shirai, Y. Kuno, and J. Miura. Hand gesture estimation and model refinement using monocular camera-ambiguity limitation by inequality constraints. In Proc. of Third IEEE International Conf. on Face and Gesture Recognition, 1998.

- B. Stenger, A. Thayananthan, P. Torr, and R. Cipolla. Filtering using a tree-based estimator. In Proc. of IEEE ICCV, 2003.

- Moeslund, T.B., Hilton, A., Kruger, V.: A survey of advances in vision-based human motion capture and analysis. CVIU 104 (2006) 90–126

- Erol, A., Bebis, G., Nicolescu, M., Boyle, R.D., Twombly, X.: Vision-based hand pose estimation: A review. CVIU 108 (2007) 52–73

- K. Oka, Y.Sato and H. Koike, “Real-Time Fingertip Tracking and Gesture Recognition”, IEEE Computer Graphics and Applications, November-December, 2002, pp. 64-71.

- WU Y., HUANG T. S. Non-stationary color tracking for vision-based human computer interaction // IEEE Trans. Neural Networks. 2002. V. 13, N 4. P. 948–960.

- MANRESA C., VARONA J., MAS R., PERALES F. J. Hand tracking and gesture recognition for human-computer interaction // Electron. Lett. Comput. Vision Image Anal. 2005. V. 5, N 3. P. 96–104.

- MCKENNA S., MORRISON K. A comparison of skin history and trajectory-based representation schemes for the recognition of user-specific gestures // Pattern Recognition. 2004. V. 37. P. 999–1009.

- CHEN F., FU C., HUANG C. Hand gesture recognition using a real-time tracking method and hidden Markov models // Image Vision Comput. 2003. V. 21, N 8. P. 745–758.

- NG C. W., RANGANATH S. Gesture recognition via pose classification // Proc. of the 15th Intern. conf. on pattern recognition, Barcelona (Spain), 3–7 Sept. 2000. Washington DC: IEEE Computer Soc., 2000. V. 3. P. 699–704.

- HUANG C., JENG S. A model-based hand gesture recognition system // Machine Vision Appl. 2001. V. 12, N 5. P. 243–258.

- CUTLER R., TURK M. View-based interpretation of real-time optical flow for gesture recognition // Proc. of the 3rd IEEE conf. on face and gesture recognition, Nara (Japan), 14–16 Apr. 1998. Washington DC: IEEE Computer Soc., 1998. P. 416–421.

- LU S., METAXAS D., SAMARAS D., OLIENSIS J. Using multiple cues for hand tracking and model refinement // Proc. of the IEEE conf. on computer vision and pattern recognition, Madison (USA), 16–22 June 2003. Washington DC: IEEE Computer Soc., 2003. P. 443–450.

- Athitsos, V., Sclaroff, S.: Estimating 3d hand pose from a cluttered image. CVPR 2 (2003) 432

- Rosales, R., Athitsos, V., Sigal, L., Sclaroff, S.: 3d hand pose reconstruction using specialized mappings. In: ICCV. (2001) 378–385

- Romero, J., Kjellstrom, H., Kragic, D.: Monocular real-time 3D articulated hand pose estimation. IEEE-RAS Int’l Conf. on Humanoid Robots (2009) 87–92

- Rehg, J.M., Kanade, T.: Visual tracking of high dof articulated structures: An application to human hand tracking. In: ECCV, Springer-Verlag (1994) 35–46

- Stenger, B., Mendonca, P., Cipolla, R.: Model-based 3D tracking of an articulated hand. CVPR (2001) II–310–II–315

- Sudderth, E., Mandel, M., Freeman, W., Willsky, A.: Visual hand tracking using nonparametric belief propagation. In: CVPR Workshop. (2004) 189189

- de la Gorce, M., Paragios, N., Fleet, D.: Model-based hand tracking with texture, shading and self-occlusions. In: CVPR. (2008) 1–8

- Iason Oikonomidis, Nikolaos Kyriazis, and Antonis A. Argyros. Full DOF Tracking of a Hand Interacting with an Object by Modeling Occlusions and Physical Constraints. In ICCV, 2011. To appear.

- I. Oikonomidis, N. Kyriazis, and A. Argyros, "Efficient model-based 3D tracking of hand articulations using Kinect", in BMVC 2011, 2011.

- Sabine Helwig and Rolf Wanka. Particle Swarm Optimization in High-Dimensional Bounded Search Spaces. In Swarm Intelligence Symposium, pages 198–205. IEEE, 2007.

- Vijay John, Spela Ivekovic, and Emanuele Trucco. Articulated Human Motion Tracking with HPSO. International Conference on Computer Vision Theory and Applications, 2009.

- James Kennedy and Russ Eberhart. Particle Swarm Optimization. In International Conference on Neural Networks, volume 4, pages 1942–1948. IEEE, January 1995.

- Iason Oikonomidis, Nikolaos Kyriazis, and Antonis A. Argyros. Tracking the Articulated Motion of Two Strongly Interacting Hands, 2012.

- Zhi Li, Ray Jarvis. Real time Hand Gesture Recognition using a Range Camera. ACRA, 2009.

- Zhou Ren, Junsong Yuan, Zhengyou Zhang. Robust Hand Gesture Recognition Based on Finger-Earth Mover’s Distance with a Commodity Depth Camera. Published by ACM 2011.

- Kinect 3D Hand Tracking [Electronic resource]. – Access mode: http://cvrlcode.ics.forth.gr/handtracking/