At the time of publishing of this abstract the work on master's thesis is still in progress. The final version of the work will be available in December 2014. The text of the dissertation and materials on the topic of research can be obtained from author or his supervisor.

Novadays the most popular rendering algorithms are those based on rasterization principles. These algorithms perfectly fit SIMD architecture basis which underlies GPUs and are therefore used widely in interactive graphics. The main disadvantage of these algorithms is that pictures they synthesize are lack of realism and, especially, of physical accuracy.

Ray tracing algorithms are used to physically model the propagation of the light in virtual environments. This modeling process is extremely resource-intensive which is the main reason for that ray tracing is mostly used for static visualization. However, with the continuous growth of hardware performance, modern ray tracers are able to reach interactive frame rates.

Despite the fact that today there are many visualisation systems that allow ray tracing virtual scenes interactively or even in real-time, they often impose some restrictions on them. For example, the restrictions may be related to the support of the scene animation, to the prevalence of certain types of materials (as the rest are processed slower or not supported at all by the algorithm), to the scene geometry representation, to the number of objects in the scene and their complexity, to the support of the interior and exterior scenes.

Aim — to study and to analyze existing ray tracing algorithms and ways to improve their efficiency for GPU implementation.

Research objectives:

Object of the research: ray tracing algorithms.

The subject of the research: improving efficiency of the ray tracing algorithms and their CUDA-based implementation on GPU.

First of all, it should be noted that there are an ambiguity in the notions of forward and backward ray tracing in the papers studied. In this paper, the backward ray tracing means tracing rays from the sensor (camera, eye) to the light source.

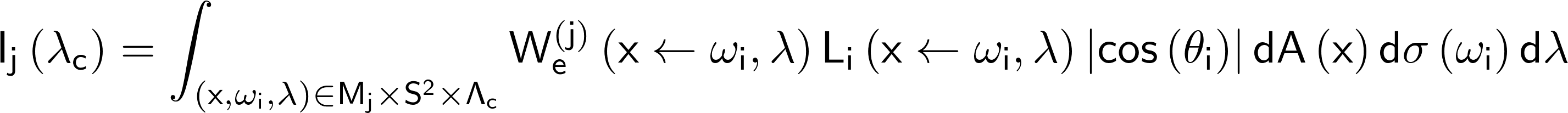

Let image plane Μs be a part of the rendered scene. The intensity of channel λc of the j-th pixel of the synthesised image associated with some region Μj on the image plane is defined by the next equation [4]:

The integration domain on the right-hand side of the equation is defined by Cartesian product of the sets denoted as follows:

The integrand uses following notations:

(x ωi, λ) — sensor responsivity function for the j-th pixel which scales Li(xωi, λ);

(x ωi, λ) — sensor responsivity function for the j-th pixel which scales Li(xωi, λ);

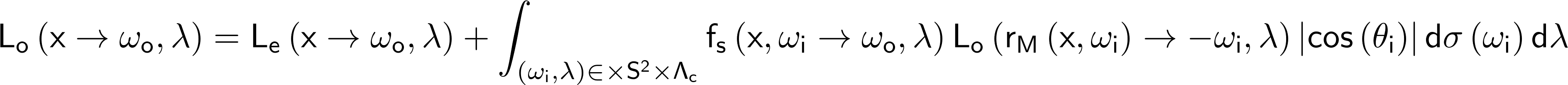

Next notations are used in equation (2):

The equation (2) is an integral equation of the second kind as the unknown function Lo(x ωo, λ) occurs both under the integral sign and outside the integral operator. The equation (2) assumes the following assertion to be true: in the absence of obstacles that photons can interact with, the radiance on the path from one surface to another does not change, that is Li(x ωi, λ)=Lo(rΜ(x, ωi) -ωi, λ). Thus, equation (2) assumes that photons can interact only with the surfaces of the scene, but not with the space inside or around them. In other words, the surfaces of the scene are surrounded by vacuum.

Ray tracing algorithms provide methods of solving equation (2). In [1] noted that while it is possible to simulate a variety of global illumination effects using equation (2), some of the more simple effects, such as direct lighting without shadows, can not be synthesized. If the path between a point on the surface of the scene and the light source is blocked by opaque surfaces, this point will lie in the shadow. On the other hand, if the overlapping light will be totally transparent, the point on the surface will not be obscured but transparent surfaces themselves will not be percieved by the sensor (because transparent surface cannot reflect or refract light). To increase the flexibility of the model (2), the authors propose using not scalar, but vector radiometric functions that include two scalar functions describing the visible and invisibleillumination

.

When the rendered scene totally consists of light sources (in this case, the integral operator on the right-hand side of equation (2) disappears completely) or when the direct illumination is rendered (light-emitting surfaces are known beforehand, so Lo(rΜ(x, ωi) -ωi, λ)=Le(rΜ(x, ωi) -ωi, λ) for all the points x, lying on the surface of light sources, for the other surfaces it can be assumed that Lo(rΜ(x, ωi) -ωi, λ)=0 or the set S2 in the integration domain can be replaced with a set of points on light surfaces), the equation (2) can be solved analytically. In general, finding partial solution to the equation requires the use of numerical methods. Various ray tracing algorithms are used to find a partial solution to the equation (2).

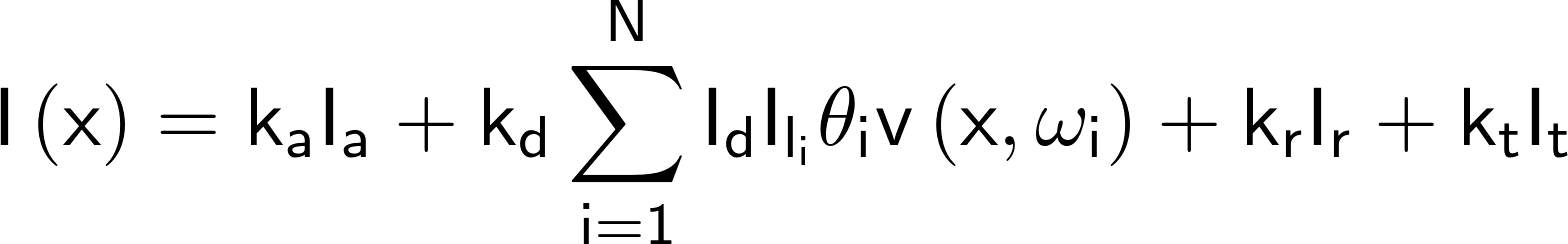

Backward ray tracing algorithm proposed by Turner Whitted in [5] is considered a classic. To approximate the instensity of the j-th pixel, this pixel is projected on the image plane and ray with an origin at the viewer's position is then traced through the center of this pixel. Each interaction of the ray with the scene surfaces requires computation of the Phong local illumination model and mixing the result with reflected and refracted components. Thus, at each point x of intersection of the ray with the surface the following global illumination model is computed:

The notation used in equation (3) is described below:

The following notation is for indices in equation (3):

Reflected and refracted components are calculated by casting two rays in the reflected and refracted directions and solving the equation (3) in each subsequent point of intersection. To render realistic reflections and refractions, corresponding coefficients must be chosen with respect to the physical laws (Fresnel's law or its approximations).

An animation below demonstrates the process of solving light transport problem using Whitted's algorithm (fig. 1). Depending on the local illumination model used, objects are denoted D (diffuse) and S(specular), and indices r and t are of the same meaning as in equation (3). L stands for light sources. Primary rays are colored in green, shadow rays (shadow feelers) are black, reflectef rays are blue and transmitted rays are magenta. На рисунке 2 также отмечены проекции точек пересечения теневых лучей с поверхностями сцены (в трёхмерном пространстве).

Light propagation model implemented by a classic backward ray tracing algorithm

(animation: number of frames: 10; frame rate: 1 frame/s; loop count: 7; size: 25.6 Kb)

It may be noticed that the secondary diffuse reflections are not modeled by the classic ray tracing algorithm (actually, the same applies to the diffuse refractions).

Path tracing algorithm was proposed in [3]. It relies on the idea that the most significant contribution into the approximation (estimate) of radiance make primary and shadow rays, that is rays lying at the beginning or at the end of the path between the sensor and the light source.

Since the classic ray tracing algorithm and its extended stochastic version named Distributed (distribution) ray tracing [2], build binary (in case of classic ray tracing) or N-ary (in case of Distribution ray tracing) trees, which include mainly intermediate rays, the computational resources are wasted (to compute illumination, which is usually has less impact on the final result, as compared to the direct illumination).

This abstract provides an overview of the basic ray tracing algorithms and their theoretical foundations. Ray tracing algorithm proposed in [5] is considered a classic and is capable only of the specular reflections and specular refractions while other indirect illumination effects are modeled loosly. To extend the variety of effectes modeled by the classic algorithm Distributed (distribution) ray tracing is proposed in [2]. Path tracing proposed in [3] improves rendering efficiency by reducing a branching factor present in previous algorithms.

Further research objectives: