Abstract

- Introduction

- 1. Theme urgency

- 2. Research goals and objectives

- 3. Methods of automatically extracting relations

- Conclusion

- References

Introduction

The Internet contains a huge variety of texts written by ordinary users: blog articles, product reviews, posts on social networks, and so on. This content carries a great deal of valuable information.

On the one hand, the global network, together with search engines such as Google, greatly simplifies searching for information about a product or service. On the other hand, Horrigan reports [19] that 58% of Internet users find online search difficult and tedious. The volume of information on the Internet today is so large that relevant data is simply lost in a sea of information noise.

Thus, there is a need for a tool that helps consumers make the right decisions when buying goods or services. Simply put, we need a system that analyzes the opinions of members of the online community on various subjects of discussion.

Research in this area is ongoing, and there is currently no optimal solution to the problem of automatic opinion analysis.

1. Theme urgency

In recent years the Internet, including its Russian-speaking segment, has grown rapidly. As the number of Internet users grows, so does the amount of content they generate: people leave messages on forums, write blog posts, comment on products on online store pages and post on social networks. According to research by the All-Russian Public Opinion Research Center, the share of the Russian-speaking population that uses the Internet regularly (at least once a month) rose from 38% in 2010 to 55% in 2012. The share of users registered on social networks also increased significantly over these two years (from 2010 to 2012), from 53% to 82% [1].

All of this content carries a great deal of information that could and should be used. A separate field of artificial intelligence and mathematical linguistics, natural language processing (computational linguistics), makes it possible to extract various kinds of information from natural-language text. One promising area of computational linguistics is sentiment (tonality) analysis of text.

Sentiment analysis makes it possible to identify emotionally charged language in a text and the emotional attitude of its authors toward the objects mentioned in it. Most modern systems use a binary evaluation (positive or negative sentiment), but some systems also distinguish the strength of sentiment.

2. Research goals and objectives

The objective is to develop and study an algorithm for analyzing the emotional content of natural-language messages in blogs and forums.

To achieve this goal, the following tasks must be solved:

- review existing methods of text sentiment analysis;

- analyze existing algorithms for text sentiment analysis;

- determine the requirements for the algorithmic support of an intelligent module for emotional content analysis;

- develop a data mining algorithm for a module that analyzes the emotional content of natural-language messages in blogs and forums.

3. Methods of automatically extracting relations

For the extraction task, the most effective methods are unsupervised learning and statistical methods. These methods do not need labeled training data, which is not publicly available for this task, unlike, for example, the linguistic corpora created for classical computational linguistics problems such as part-of-speech tagging and lemmatization.

Unsupervised learning is a machine learning method in which the system being tested learns to perform a task spontaneously, without intervention from the experimenter. It is usually suitable only for problems in which descriptions of a set of objects (the training sample) are known and the goal is to discover internal relationships, dependencies and patterns among these objects. Unsupervised learning can be contrasted with supervised learning, in which the correct answer is given for each object in the sample and the task is to find the relationship between the objects and the answers.

Let us analyze one of the unsupervised learning algorithms and apply it to the problem of extracting aspects. Bootstrapping (propagation) methods are based on the following idea: starting from a small, manually specified set of examples of a certain class, iteratively extract similar text units, gradually expanding the set.

Only n-grams of 1 to 3 words consisting solely of nouns, adjectives, verbs and adverbs are considered as term candidates. The proximity of an n-gram ng to the current term set U_i is determined by the RlogF metric, which is based on Freq(ng, U), the frequency of co-occurrence of the n-gram ng with terms from U within text fragments consisting of a fixed number of words.
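The bootstrapping loop described above can be sketched in Python. This is a minimal sketch, not the author's implementation. It assumes the standard RlogF form from Riloff's bootstrapping work, RlogF(ng, U) = Freq(ng, U) / Freq(ng) · log2 Freq(ng, U), where Freq(ng) is the number of fragments containing ng. It also assumes that part-of-speech filtering has already produced the candidate set. The function names, the fragment size `window` and the number of terms added per iteration are hypothetical parameters.

```python
import math
from collections import Counter


def fragment_ngrams(fragment, max_n=3):
    """All 1- to max_n-word n-grams (as word tuples) in one text fragment."""
    return {
        tuple(fragment[i:i + n])
        for n in range(1, max_n + 1)
        for i in range(len(fragment) - n + 1)
    }


def rlogf(freq_with_terms, freq_total):
    """RlogF(ng, U) = Freq(ng, U) / Freq(ng) * log2 Freq(ng, U) (assumed form)."""
    if freq_with_terms == 0:
        return 0.0
    return freq_with_terms / freq_total * math.log2(freq_with_terms)


def bootstrap_terms(texts, seeds, candidates, window=8, per_iteration=1, iterations=3):
    """Grow the term set U from `seeds`, adding the best-scoring candidates each round.

    texts: tokenized texts; seeds, candidates: sets of word tuples.
    """
    terms = set(seeds)
    for _ in range(iterations):
        with_terms = Counter()  # Freq(ng, U): fragments where ng co-occurs with a term of U
        total = Counter()       # Freq(ng): all fragments containing ng
        for tokens in texts:
            # split each text into fragments of a fixed number of words
            for start in range(0, len(tokens), window):
                grams = fragment_ngrams(tokens[start:start + window])
                has_term = bool(grams & terms)
                for gram in (grams & candidates) - terms:
                    total[gram] += 1
                    if has_term:
                        with_terms[gram] += 1
        ranked = sorted(
            ((rlogf(with_terms[g], total[g]), g) for g in total), reverse=True
        )
        new_terms = [g for score, g in ranked[:per_iteration] if score > 0]
        if not new_terms:
            break  # no remaining candidate is close enough to U
        terms.update(new_terms)
    return terms


texts = [
    "the screen and battery are good".split(),
    "battery and screen last long".split(),
    "the price is fine".split(),
    "screen price".split(),
]
candidates = {("screen",), ("battery",), ("price",)}
print(sorted(bootstrap_terms(texts, {("screen",)}, candidates)))
# [('battery',), ('screen',)]
```

Because log2 1 = 0, a candidate that co-occurs with the term set only once scores zero. The metric therefore favors n-grams that repeatedly appear next to known terms and penalizes those that are also frequent elsewhere.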

The problem of extracting relations can be regarded as the problem of extracting the terms that opinion authors use frequently [13,20].

The authors of [13] suggested that the terms denoting aspects can be single nouns and noun phrases that occur frequently in opinions about objects of the same type. Of all n-grams satisfying this requirement, those whose frequency exceeds one percent of the corpus are selected.
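The frequency filter can be sketched as follows. The sketch assumes that candidate nouns and noun phrases have already been extracted for each sentence, so the part-of-speech tagging step is omitted. It also interprets "one percent of the corpus" as the share of sentences that mention a candidate, which is an assumption. `frequent_candidates` and `min_support` are hypothetical names.

```python
from collections import Counter


def frequent_candidates(sentence_candidates, min_support=0.01):
    """Keep candidates mentioned in more than `min_support` of all sentences.

    sentence_candidates: one collection of candidate nouns / noun phrases per sentence.
    """
    counts = Counter()
    for candidates in sentence_candidates:
        counts.update(set(candidates))  # count each candidate once per sentence
    n_sentences = len(sentence_candidates)
    return {c for c, k in counts.items() if k / n_sentences > min_support}


# 200 sentences: "screen" appears in 3 of them (1.5%), "case" in 2 (exactly 1%).
corpus = [{"screen"}] * 3 + [{"case"}] * 2 + [set()] * 195
print(frequent_candidates(corpus))  # {'screen'}
```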

The selected n-grams of two or more words are tested for compactness. If an n-gram is compact in at least two sentences, it is added to the list of aspects.

Compactness is defined as follows:

- let f be an n-gram of n words, and s a sentence containing all the words of f (possibly not consecutively);

- if, in sentence s, the distance between any two words that are adjacent in f is not more than three words, then f is compact in this particular sentence.
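The compactness test can be sketched as below. It assumes that the words of f must appear in s in the same order, takes the first matching occurrence of each word, and measures distance as the difference between word positions. These are interpretation choices, not details given in the text. The names `is_compact_in` and `is_compact` are hypothetical.

```python
def is_compact_in(phrase, sentence, max_gap=3):
    """True if the words of `phrase` occur in `sentence` in order, with
    words adjacent in the phrase at most `max_gap` positions apart."""
    positions, start = [], 0
    for word in phrase:
        try:
            pos = sentence.index(word, start)  # first occurrence after the previous word
        except ValueError:
            return False
        positions.append(pos)
        start = pos + 1
    return all(b - a <= max_gap for a, b in zip(positions, positions[1:]))


def is_compact(phrase, sentences, min_sentences=2, max_gap=3):
    """An n-gram enters the aspect list if it is compact in at least two sentences."""
    hits = sum(1 for s in sentences if is_compact_in(phrase, s, max_gap))
    return hits >= min_sentences


sentences = [
    "the battery life is great".split(),
    "battery really lasts a long life".split(),
    "good battery and life".split(),
]
print(is_compact(("battery", "life"), sentences))  # True
```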

Single-word terms are additionally tested for purity by a statistical test. All sentences containing the term are found. Among them, the sentences that do not contain any of the n-grams that passed the compactness test and include the term are counted. If the number of such sentences reaches an experimentally determined threshold, the term is added to the list of aspects.
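The purity test can be sketched in the same style. Hu and Liu call this count p-support. Here a sentence is taken to contain a longer n-gram if it contains all of its words, in line with the compactness definition. The default threshold of 3 and the function names are hypothetical.

```python
def p_support(term, sentences, compact_phrases):
    """Count sentences that contain `term` but none of the compact n-grams
    that include it (a sentence 'contains' an n-gram if it has all its words)."""
    longer = [p for p in compact_phrases if term in p]
    count = 0
    for sentence in sentences:
        words = set(sentence)
        if term in words and not any(set(p) <= words for p in longer):
            count += 1
    return count


def passes_purity(term, sentences, compact_phrases, threshold=3):
    """A single-word term is kept if its count reaches the threshold."""
    return p_support(term, sentences, compact_phrases) >= threshold


sentences = [s.split() for s in [
    "the battery is great", "battery life is long", "battery life again",
    "nice battery", "battery died",
]]
compact = {("battery", "life")}
print(p_support("battery", sentences, compact))      # 3
print(passes_purity("battery", sentences, compact))  # True
```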

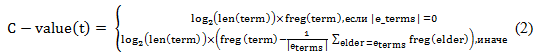

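A similar statistical method for identifying aspect terms consisting of two or more words is used in [20] and is called C-value [19]. For all n-grams in a collection of documents that contain only certain parts of speech, the measure is calculated by formula (2):

$$
\text{C-value}(term) =
\begin{cases}
\log_2 \mathrm{len}(term) \cdot f(term), & \text{if } term \text{ is not contained in a higher-order n-gram},\\
\log_2 \mathrm{len}(term) \cdot \left( f(term) - \dfrac{1}{|e\text{-terms}|} \displaystyle\sum_{elder \in e\text{-terms}} f(elder) \right), & \text{otherwise,}
\end{cases}
\tag{2}
$$

where term is the n-gram, f(·) is the frequency of an n-gram in the collection, e-terms is the set of all higher-order n-grams containing term, |e-terms| is the cardinality of this set, elder is an element of this set, and len(term) is the term length in characters.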

Consider an example illustrating the C-value method. Suppose that in a corpus of opinions about cell phones the bigram "retina display" occurs 8 times, and the trigrams containing it, "great retina display" and "retina display worse", occur 3 and 2 times respectively. Then, according to formula (2):

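$$
\begin{aligned}
\text{C-value}(\text{retina display}) &= \log_2 13 \cdot \left(8 - \tfrac{1}{2}(2+3)\right) \approx 20,\\
\text{C-value}(\text{great retina display}) &= \log_2 18 \cdot 3 \approx 13,\\
\text{C-value}(\text{retina display worse}) &= \log_2 18 \cdot 2 \approx 8.
\end{aligned}
\tag{3}
$$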

If the experimentally established C-value threshold in this case is 15, only the n-gram "retina display" falls into the set of aspect terms.
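The calculation can be reproduced with a short Python sketch of formula (2). Following the worked example, it assumes a base-2 logarithm and measures len(term) in characters without spaces. This gives 13 for "retina display" and 18 for both trigrams. The original C-value method measures length in words instead. The n-gram frequencies are passed in as a dictionary. "Higher-order n-grams containing the term" is interpreted as longer n-grams that contain it as a contiguous word sequence. The function names are hypothetical.

```python
import math


def term_len(term):
    """Length in characters, ignoring spaces (matches the worked example)."""
    return len(term.replace(" ", ""))


def contains(longer, shorter):
    """True if word list `longer` is longer than `shorter` and contains it contiguously."""
    n = len(shorter)
    return len(longer) > n and any(
        longer[i:i + n] == shorter for i in range(len(longer) - n + 1)
    )


def c_value(term, freq):
    """C-value of `term` given a dict mapping each n-gram to its corpus frequency."""
    words = term.split()
    # e-terms: higher-order n-grams that contain the term
    e_terms = [b for b in freq if contains(b.split(), words)]
    weight = math.log2(term_len(term))
    if not e_terms:
        return weight * freq[term]
    return weight * (freq[term] - sum(freq[b] for b in e_terms) / len(e_terms))


freq = {"retina display": 8, "great retina display": 3, "retina display worse": 2}
for term in freq:
    print(term, round(c_value(term, freq)))
# retina display 20
# great retina display 13
# retina display worse 8

threshold = 15  # the experimentally chosen threshold from the example
print([t for t in freq if c_value(t, freq) > threshold])  # ['retina display']
```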

Both supervised learning algorithms and dictionary-based methods are effective for determining the polarity of sentences and short messages.
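To illustrate the supervised approach, the sketch below trains a multinomial Naive Bayes classifier with add-one smoothing. This is one common supervised method for sentence polarity. The choice of classifier, the toy training data and the function names are assumptions made for illustration and are not a method described in this work.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs):
    """docs: list of (tokens, label) pairs; returns class and word counts."""
    class_docs = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for tokens, label in docs:
        class_docs[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_docs, word_counts, vocab


def classify_nb(model, tokens):
    """Return the label with the highest log-posterior (add-one smoothing)."""
    class_docs, word_counts, vocab = model
    total_docs = sum(class_docs.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_docs.items():
        score = math.log(n_docs / total_docs)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in tokens:
            if word in vocab:  # words unseen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy labeled sentences (hypothetical training data).
train = [
    ("great screen".split(), "pos"),
    ("love this phone".split(), "pos"),
    ("terrible battery".split(), "neg"),
    ("screen broke terrible".split(), "neg"),
]
model = train_nb(train)
print(classify_nb(model, "great phone".split()))      # pos
print(classify_nb(model, "terrible screen".split()))  # neg
```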

The drawback of supervised learning is the need to build a training corpus with examples from the domain in which the classifier will be used. However, dictionary methods have a similar problem: term weights in a dictionary compiled for one domain can be ineffective for another.
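A minimal lexicon-based scorer illustrates the domain dependence of dictionary methods. The polarity of a message is the sign of the sum of the dictionary weights of its words, and the magnitude can serve as a rough measure of sentiment strength. The two dictionaries and their weights are hypothetical. They show how the same word can carry opposite weights in different domains.

```python
def lexicon_score(tokens, lexicon):
    """Sum of the dictionary weights of the words found in the message."""
    return sum(lexicon.get(word, 0.0) for word in tokens)


def polarity(score):
    """Binary evaluation; a zero score (e.g. no known words) is reported as neutral."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Hypothetical dictionaries for two domains.
movie_lexicon = {"great": 1.0, "boring": -1.0, "unpredictable": 0.8}
car_lexicon = {"great": 1.0, "noisy": -1.0, "unpredictable": -0.8}

message = "quite unpredictable".split()
print(polarity(lexicon_score(message, movie_lexicon)))  # positive
print(polarity(lexicon_score(message, car_lexicon)))    # negative
```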

Conclusion

Based on the analysis, we can conclude that all methods of analysis can be classified as supervised learning. Their reported results differ depending on the performance metric used.

These methods usually achieve more than 70% accuracy. Researchers often combine approaches to achieve the best results. For example, the work of V. G. Vasiliev, S. Davydov and M. V. Khudyakova [6] uses a linguistic approach complemented by machine learning methods, which correct individual classification rules through training.

The linguistic approach is more popular, because rule-based algorithms give more accurate results: these methods are closely tied to the semantics of words, unlike machine learning methods, which rely on statistics and probability theory.

References

- Turney P. Thumbs up or thumbs down? Semantic orientation applied to unsupervised classification of reviews // Proceedings of ACL–02, 40th Annual Meeting of the Association for Computational Linguistics, Association for Computational Linguistics, 2002, pp. 417–424.

- Vasiliev V. G., Khudyakova M. V., Davydov S. Classification of user reviews using fragment rules // Computational Linguistics and Intellectual Technologies: Proceedings of the Annual International Conference "Dialogue". Issue 11 (18), Moscow: RSUH Publishing House, 2012, pp. 66–76 (in Russian).

- Kotelnikov E. V. Automatic sentiment analysis of texts based on machine learning methods // Computational Linguistics and Intellectual Technologies: Proceedings of the Annual International Conference "Dialogue". Issue 11 (18), Moscow: RSUH Publishing House, 2012, pp. 27–36 (in Russian).

- Esuli A., Sebastiani F. Determining the Semantic Orientation of Terms through Gloss Classification // Conference on Information and Knowledge Management (CIKM), Bremen. ACM, New York, NY, 2005, pp. 617–624.

- Hu M., Liu B. Mining and Summarizing Customer Reviews // KDD, Seattle, 2004, pp. 168–177.

- Khudyakova M. V., Davydov S., Vasiliev V. G. Classification of user reviews using fragment rules // ROMIP 2011, pp. 87–102 (in Russian).

- Klekovkina M. V. A method for sentiment classification of texts based on a dictionary of emotional vocabulary // Computational Linguistics and Intellectual Technologies: Proceedings of the Annual International Conference "Dialogue". Issue 10 (18), Moscow: RSUH Publishing House, 2011, pp. 51–67 (in Russian).

- Vishnevskaya N. I. A program for sentiment analysis of texts based on machine learning methods // Diploma thesis, Moscow, 2013, pp. 9–17 (in Russian).

- Chisholm E., Kolda T. G. New term weighting formulas for the vector space method in information retrieval. Technical Report Number ORNL-TM-13756, Oak Ridge National Laboratory, Oak Ridge, TN, March 1999, pp. 105–120.

- Debole F., Sebastiani F. Supervised term weighting for automated text categorization. Proceedings of the 2003 ACM Symposium on Applied Computing (SAC '03), 2003, Vol. 138(Ml), pp. 784–788.

- Joachims T. A probabilistic analysis of the Rocchio algorithm with TFIDF for text categorization. Proceedings of 14th International Conference on Machine Learning, Nashville, TN, 1997, pp. 143–151.

- Joachims T. Text categorization with support vector machines: learning with many relevant features. Proceedings of 10th European Conference on Machine Learning, Chemnitz, Germany, 1998, pp. 137–142.

- Lan M. (2007) A New Term Weighting Method for Text Categorization. PhD thesis, pp. 35–113.

- Lan M., Tan C. L., Su J., Lu Y. (2009), Supervised and Traditional Term Weighting Methods for Automatic Text Categorization, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 31, no. 4, pp. 721–735.

- Lewis D. D. Naive (Bayes) at forty: The independence assumption in information retrieval. Proceedings of 10th European Conference on Machine Learning, Chemnitz, Germany, 1998, pp. 4–15.

- LIBSVM — A Library for Support Vector Machines, available at: http://www.csie.ntu.edu.tw/~cjlin/libsvm/.

- Masand B., Linoff G., Waltz D. Classifying news stories using memory-based reasoning. Proceedings of SIGIR-92, 15th ACM International Conference on Research and Development in Information Retrieval, Copenhagen, Denmark, 1992, pp. 59–65.

- Mihalcea R., Tarau P. Textrank: Bringing order into texts. Proceedings of the Conference on Empirical Methods in Natural Language Processing, Barcelona, Spain, 2004, pp. 404–411.

- Pang B., Lee L. (2008), Opinion Mining and Sentiment Analysis, Foundations and Trends® in Information Retrieval, Vol. 2, no. 1–2, pp. 1–135.

- Pang B., Lee L., Vaithyanathan S. Thumbs up? Sentiment classification using machine learning techniques. Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), 2002, pp. 79–86.

- Salton G., Buckley C. (1988), Term–weighting approaches in automatic text retrieval, Information Processing & Management, Vol. 24, no. 5, pp. 513–523.

- Sebastiani F. (2002), Machine learning in automated text categorization, ACM Computing Surveys, Vol. 34, no. 1, pp. 1–47.

- Svyatogor L., Gladun V. Semantic analysis of natural language texts: goals and means // XVth International Conference "Knowledge-Dialogue-Solution" KDS-2 2009, Kyiv, Ukraine, October 2009 (in Russian).

- Kushnarev A. V. Semantic models of natural-language methods in testing systems // Abstract of master's thesis, Faculty of Computer Science and Informatics, DonNTU, 2012 (in Russian).

- Arbuzova O. V. Development and study of algorithms for improving the efficiency of web content mining // Abstract of master's thesis, Faculty of Computer Science and Informatics, DonNTU, 2013 (in Russian).

- Voronoy S. M., Egoshina A. A. Formalization of word-formation synthesis based on the semantic properties of formants [Electronic resource]. Access mode: Data mining techniques.htm (in Russian).

This master's work is not yet completed. Final completion: December 2014. The full text of the work and materials on the topic can be obtained from the author or his supervisor after this date.