New programming language makes turning GPUs into supercomputers a snap

Author: R. Whitwam

Source: http://www.extremetech.com/...

Most of the bits you’ve ever crunched were run through a CPU, but your computer’s graphics processing unit (GPU) is increasingly being used for general computing tasks. The problem has always been designing applications that can take advantage of the raw computing power of a GPU. Eric Holk, a computer science Ph.D. candidate at Indiana University, has created a new programming language called Harlan to make the process easier.

CPUs and GPUs are both important for modern computing, and each is better suited to different tasks. Most CPUs have several cores, each capable of running a few processing threads. A core runs each thread very fast, then moves on to the next one. A GPU typically has a large number of slower processing cores (sometimes called stream processors), which together can run many more threads simultaneously. In other words, GPU computing is inherently more parallel than the CPU variety.
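To make the contrast concrete, here is a minimal Python sketch of the two ways of dividing the same job. It only models how the work is split and says nothing about real hardware speed. The names `cpu_style` and `gpu_style` and the default of four cores are illustrative assumptions, not part of any real API.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x):
    return x * x


def cpu_style(data, cores=4):
    """A few workers, each processing one large contiguous chunk in order."""
    chunk = max(1, -(-len(data) // cores))  # ceiling division, at least 1
    chunks = [data[i:i + chunk] for i in range(0, len(data), chunk)]
    with ThreadPoolExecutor(max_workers=cores) as pool:
        # pool.map returns results in the same order as the input chunks
        parts = pool.map(lambda c: [square(x) for x in c], chunks)
        return [y for part in parts for y in part]


def gpu_style(data):
    """One logical thread per element, each doing a tiny piece of work."""
    out = [None] * len(data)

    def thread(i):
        out[i] = square(data[i])

    # On a GPU these calls would run side by side; here they run in turn.
    for i in range(len(data)):
        thread(i)
    return out


data = list(range(10))
assert cpu_style(data) == gpu_style(data)
```

Both functions return the same list. What changes is the shape of the work: a handful of long tasks in the first case, and as many tiny tasks as there are elements in the second.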

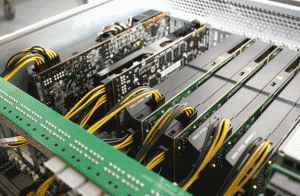

Running calculations on a single GPU is troublesome enough, but pulling multiple GPUs together in an impromptu network makes matters dramatically more complicated. Still, researchers are increasingly turning to GPU computing in hopes of realizing the benefits of massively parallel computing. Stick enough GPUs together, and you’ve got a makeshift supercomputer at a fraction of the price.

Programming for GPUs requires the programmer to spend a lot of brain cycles dealing with low-level details, which distract from the main purpose of the code. Harlan is interesting because it can take care of all the grunt work of programming for GPUs.
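As an illustration of the kind of bookkeeping involved, the sketch below simulates in plain Python what a hand-written GPU kernel launch typically asks of the programmer: picking a block size, rounding the number of blocks up, computing each thread's global index, and guarding against threads that fall past the end of the data. It is a CPU-only simulation. `launch`, `add_kernel` and `BLOCK_SIZE` are hypothetical names, and none of it is Harlan, OpenCL or CUDA code.

```python
BLOCK_SIZE = 4  # threads per block; an arbitrary value chosen for illustration


def add_kernel(block_id, thread_id, a, b, out):
    """Body executed by one simulated GPU thread."""
    i = block_id * BLOCK_SIZE + thread_id  # global index of this thread
    if i < len(out):  # the last block may overshoot the data
        out[i] = a[i] + b[i]


def launch(kernel, n, *args):
    """Run the kernel once per thread, sequentially standing in for the GPU."""
    num_blocks = (n + BLOCK_SIZE - 1) // BLOCK_SIZE  # round up to cover n items
    for block_id in range(num_blocks):
        for thread_id in range(BLOCK_SIZE):
            kernel(block_id, thread_id, *args)
    return num_blocks


a = [1, 2, 3, 4, 5, 6]
b = [10, 20, 30, 40, 50, 60]
out = [0] * len(a)
blocks = launch(add_kernel, len(a), a, b, out)
print(out, blocks)
```

Only the line `out[i] = a[i] + b[i]` expresses what the program is meant to compute. Everything else is index arithmetic and launch configuration, which is the sort of detail a higher-level language could take off the programmer's hands.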

There are certainly other GPU programming languages out there, with OpenCL and Nvidia’s CUDA being perhaps the best known. Both are rooted in languages originally designed for CPUs, though. Holk’s project aims to see if a language designed from the ground up to support GPUs can do a better job.

Harlan can be compiled to OpenCL, but it can also make use of higher-level languages like Python and Ruby. The syntax is based on Scheme, itself a dialect of the much-beloved Lisp programming language. Originally developed in 1958, Lisp has influenced some of the most popular modern languages, including Perl, JavaScript, and Ruby. Perhaps unsurprisingly, Lisp’s “lots of irritating superfluous parentheses” didn’t make the jump to these newer languages.

Harlan is all about pushing the limits of what programmers can do with GPU computing. Its compiler generates streamlined GPU code to run on the hardware, and that code may look very different from what the programmer wrote. This differs from the approach of a similar language called Rust, a Mozilla project that instead helps programmers craft a program suited to the underlying hardware themselves.

Harlan is a bit less conventional in its approach, but it has the potential to make GPU computing more accessible by handling complicated low-level tasks. The new language is open source and can be downloaded, along with complete documentation, from GitHub.