Research and development of a remote data backup system

Contents

- Introduction

- 1. Data backup

- 1.1 Backup

- 1.2 Types of backup

- 2. Distributed File Systems

- 2.1 General properties of distributed file systems

- 2.2 Development issues

- 3. Software implementation of data backup system

- 3.1 Three-tier architecture

- 3.2 Data storage

- Conclusion

- References

Introduction

Currently, most data is stored on hard disk drives, which contain magnetic platters, read/write heads and other mechanical components. Because of voltage fluctuations, wear of moving parts, external physical damage and human error, it is necessary to create copies of important data and store them on other drives.

Data backup is a critical problem for most people and businesses today, and many people already use some kind of backup system. Enterprises, however, differ in how they store data (databases or regular files) and in the servers, computers and operating systems they run, so each enterprise needs backup software that matches its own storage structure. It is also worth noting that few free, open-source backup systems exist.

There are also very few systems that are managed from a server, where several computers can be linked to one user profile and file backups configured for each of them, and that work inside a corporate network without an Internet connection. Few systems can send backups to the user's own server on a schedule and control the number of copies kept there. For these reasons, an open-source backup system with this functionality is highly relevant today [1-2].

1. Data backup

1.1 Backup

Before describing the software, it is useful to define several terms that clarify its structure and implementation [3].

Backup is the process of creating a copy of data on a storage medium (hard disk, floppy disk, etc.) so that the data can be restored to the original or a new location if it is damaged or destroyed.

Backup makes it possible to recover information (documents, programs, settings, etc.) quickly and inexpensively if the working copy is lost for any reason.

1.2 Types of backup

Only a few types of backup are in common use today.

A full backup usually covers the entire system and all files. Weekly, monthly and quarterly backups typically create a full copy of all data. A full backup is usually run at a time when copying a large amount of data does not interfere with the work of the organization. To reduce the resources consumed, compression algorithms are applied and full backups are combined with other types: differential or incremental. A full backup is indispensable when a system has to be restored quickly from scratch.
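A full backup with compression can be sketched in a few lines. This is a minimal illustration using Python's standard `tarfile` module, not the implementation used in the described software; the function name `full_backup` and its parameters are assumptions made for the example.

```python
import tarfile
from pathlib import Path


def full_backup(source_dir: str, archive_path: str) -> int:
    """Write every file under source_dir into one gzip-compressed tar archive.

    Returns the number of regular files stored.
    (Hypothetical helper, for illustration only.)
    """
    source = Path(source_dir)
    count = 0
    with tarfile.open(archive_path, "w:gz") as archive:
        for path in sorted(source.rglob("*")):
            if path.is_file():
                # Store paths relative to the source root so the archive
                # can be restored to the original or to a new location.
                archive.add(path, arcname=path.relative_to(source).as_posix())
                count += 1
    return count
```

The archive should be written outside `source_dir`, otherwise the partially written archive would itself be picked up by the directory walk.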

A differential backup copies, each time, every file that has been modified since the last full backup. Differential backups speed up recovery, because only the last full backup and the latest differential backup have to be restored. Every copy reflects the state of the files at a known point in time, which matters, for example, when recovering from a virus infection.

An incremental backup copies only the files that have changed since the last full or incremental backup; each subsequent incremental backup adds only the files changed since the previous one. Incremental backups take less time because fewer files are copied. Recovery, however, takes longer, since the last full backup must be restored first, followed by every subsequent incremental backup. Unlike in differential backup, changed or new files do not replace old ones but are added to the medium independently.
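The difference between the three types comes down to which reference time a file's modification time is compared against. The following sketch makes that explicit; the `FileInfo` type, the `select_files` function and the numeric timestamps are hypothetical and serve only to illustrate the definitions above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileInfo:
    path: str
    modified_at: float  # modification time, seconds since the epoch


def select_files(files, kind, last_full_at, last_backup_at):
    """Return the paths that a backup of the given kind would copy.

    last_full_at   -- time of the most recent full backup
    last_backup_at -- time of the most recent full or incremental backup
    """
    if kind == "full":
        return [f.path for f in files]
    if kind == "differential":
        threshold = last_full_at      # everything changed since the full copy
    elif kind == "incremental":
        threshold = last_backup_at    # only what changed since the last run
    else:
        raise ValueError(f"unknown backup kind: {kind}")
    return [f.path for f in files if f.modified_at > threshold]


files = [FileInfo("a.txt", 5), FileInfo("b.txt", 15), FileInfo("c.txt", 25)]
print(select_files(files, "differential", last_full_at=10, last_backup_at=20))
print(select_files(files, "incremental", last_full_at=10, last_backup_at=20))
```

With a full backup at time 10 and an incremental backup at time 20, the differential run selects `b.txt` and `c.txt`, while the incremental run selects only `c.txt`.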

The next type of data backup is cloning. Cloning copies an entire partition or medium (device), with all files and directories, to another partition or medium. If the source partition is bootable, the cloned partition is bootable as well.

Another type of data backup is image backup. An image is an exact copy of an entire partition or medium (device) stored in a single file.

Real-time backup creates copies of files, directories and volumes without interrupting work and without restarting the computer.

With a cold backup, the database is shut down or closed to users. The data files do not change during copying, so the copy of the database is in a consistent state the next time it is started.

With a hot backup, the database is running and open to users. The copy of the database is brought to a consistent state by automatically applying the backup logs to it after the data files have been copied.

2. Distributed File Systems

2.1 General properties of distributed file systems

The data backup software includes elements of distributed data storage and reading, so it is useful to review the structure and implementation of distributed file systems [4].

Such file systems have their own partition management mechanisms that simplify the storage of shared information. They also support replication: copies of partitions can be made and stored on other file servers. If a single file server becomes unavailable, the data stored on its partitions can still be accessed through the available copies of those partitions.

Modern distributed file systems include special services for managing partitions. These services mount partitions of various file servers into a central directory hierarchy maintained by the file system. The directory hierarchy is available to all clients of the distributed file system and looks the same on every client workstation, so users can work with their files in the same way on any computer. If a desktop computer fails, the user can safely switch to any other one, because all files remain on the server [5].

A distributed file system is a software layer that manages the connection between traditional operating systems and file systems. This layer integrates with the operating systems of the network hosts and provides a distributed file access service for systems that have a centralized core.

Distributed file systems have a number of important properties. A particular system may have all of them or only some, and this provides the basis for comparing different architectures.

- Network transparency - clients must be able to access remote files using the same operations as for local files.

- Location transparency - the name of a file should not reveal its location on the network.

- Location independence - the name of a file should not change when its physical location changes.

- User mobility - users should be able to access shared files from any node on the network.

- Resilience to failures - the system should continue to function when a single component (a server or a network segment) fails, although this may degrade performance or make some part of the file system inaccessible.

- Scalability - the system must be able to scale as the load increases, and it should be possible to grow the system gradually by adding individual components.

- File mobility - it should be possible to move files from one location to another on a running system.

2.2 Development issues

Several important issues have to be considered when developing a distributed file system. They relate to functionality, semantics and performance. Different file systems can be compared by how they resolve these issues:

- Namespace - some distributed file systems provide a uniform namespace, so that every client uses the same pathname to access a given file. Other systems allow each client to build its own namespace by mounting shared subtrees on arbitrary directories in its file hierarchy.

- Stateful and stateless operation - a stateful server stores information about client operations between requests and uses this state to service subsequent requests correctly. Requests such as open or seek change state, since someone has to remember which files the client has opened and the current offsets in those files. In a stateless system, each request is self-contained and the server keeps no persistent state about clients. For example, instead of maintaining the offset in an open file, the server may require the client to specify an offset in every read or write operation. Stateful servers are faster because they can use their knowledge of the client state to reduce network traffic significantly. However, they also need a whole range of mechanisms for keeping the system state consistent and for recovering it after a failure. Stateless servers are simpler to design and implement, but do not provide such high performance.

- Sharing semantics - a distributed file system must define the semantics that apply when multiple clients access a single file simultaneously. UNIX semantics require that all changes made by one client are visible to other clients as soon as they issue the next read or write system call. Some file systems provide "session semantics", in which changes become visible to other clients at the granularity of the open and close system calls. Some systems provide even weaker guarantees, for example, a time interval that must pass before changes are likely to reach other clients.

- Remote access methods - in a simple client-server model, the remote service method is used: every action is initiated by the client, and the server is simply an agent that fulfills the client's requests. In many distributed systems, especially stateful ones, the server plays a much more active role. It not only serves client requests but also takes part in the cache coherence mechanism, notifying clients whenever data they have cached becomes invalid.
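The stateful and stateless approaches described in the list above can be contrasted with a small in-memory sketch. Both classes are hypothetical and omit networking, locking and failure recovery; they only show where the file offset is kept.

```python
class StatelessFileServer:
    """Every request carries the path and the offset; no per-client state."""

    def __init__(self, files: dict[str, bytes]):
        self._files = files

    def read(self, path: str, offset: int, length: int) -> bytes:
        # The client supplies the offset, so any request can be served
        # (or retried after a server restart) without prior context.
        return self._files[path][offset:offset + length]


class StatefulFileServer:
    """Remembers open files and their current offsets between requests."""

    def __init__(self, files: dict[str, bytes]):
        self._files = files
        self._open = {}          # handle -> [path, current offset]
        self._next_handle = 0

    def open(self, path: str) -> int:
        if path not in self._files:
            raise FileNotFoundError(path)
        handle = self._next_handle
        self._next_handle += 1
        self._open[handle] = [path, 0]
        return handle

    def read(self, handle: int, length: int) -> bytes:
        path, offset = self._open[handle]
        data = self._files[path][offset:offset + length]
        # The server advances the offset, so requests are shorter,
        # but this table is lost if the server fails.
        self._open[handle][1] = offset + len(data)
        return data

    def close(self, handle: int) -> None:
        del self._open[handle]
```

In the stateless variant a sequential read is a series of `read(path, offset, length)` calls with an offset tracked by the client; in the stateful variant the client calls `open` once and then repeats `read(handle, length)`.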

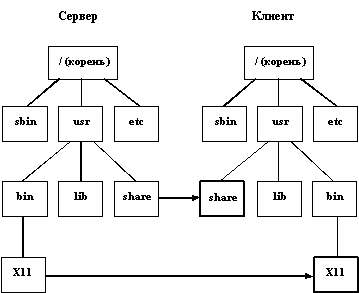

Figure 2.1 shows an example in which two subdirectories of a remote server's file system (share and X11) are mounted on two empty directories of the client's file system.
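The client-side view of such mounts can be modelled as a table that maps local mount points to remote subtrees. The sketch below is only an illustration: the server name `fileserver`, the export paths and the local mount points are assumptions, not values taken from the figure.

```python
from pathlib import PurePosixPath


def resolve(mounts, client_path):
    """Map a client path to (server, remote path), or None if it is local.

    mounts -- dict: local mount point -> (server name, remote root directory)
    """
    path = PurePosixPath(client_path)
    # Check longer mount points first so that nested mounts win.
    for mount_point in sorted(mounts, key=len, reverse=True):
        mp = PurePosixPath(mount_point)
        if path == mp or mp in path.parents:
            server, remote_root = mounts[mount_point]
            remote = PurePosixPath(remote_root) / path.relative_to(mp)
            return server, str(remote)
    return None


# Hypothetical mount table for a client with two remote subtrees.
mounts = {
    "/usr/local/share": ("fileserver", "/export/share"),
    "/usr/X11": ("fileserver", "/export/X11"),
}
print(resolve(mounts, "/usr/X11/bin/xterm"))
print(resolve(mounts, "/etc/hosts"))
```

A path under a mount point is translated to the corresponding path on the server, while any other path stays local, which is what lets each client build its own namespace.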

3. Software implementation of data backup system

3.1 Three-tier architecture

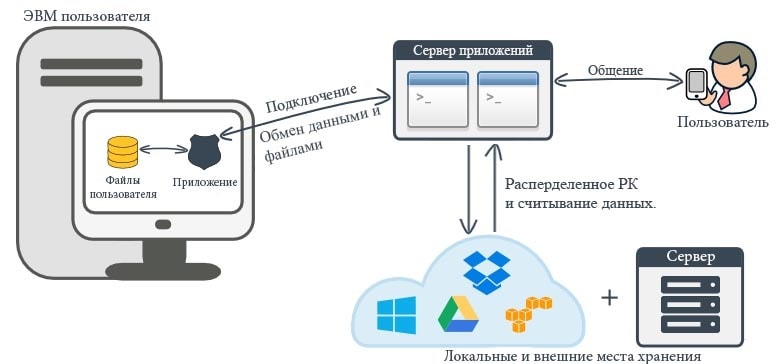

The software package uses a modified three-tier architecture [6-7]. It consists of the following components (layers): the client, the application server (to which the client application connects) and the database server (with which the application server works). The modification consists in the ability to deploy the software in a local network and in distributed data storage and reading. In addition, each layer has its own modifications. Figure 4.1 shows the three-tier software architecture.

In this architecture, the first layer is the client. The client is an interface component of the package: it has no direct connection to the database, does not store the state of the application and does not process business logic (the rules and restrictions of the automated operations). The user's computer is linked to the user's account on the application server, receives tasks from it and, according to them, sends a copy of the data to the storage specified by the user, with user-defined settings. It also informs the application server about the status of each task as the task is executed. The modification of this layer is that messages for the application server are stored locally while there is no network connection and are sent once the connection is restored. This modification is necessary because the client works according to a schedule of actions.

The second layer (middle tier, middleware) is the application server, where most of the business logic is concentrated. It is responsible for processing data when communicating with the client and the database [8]. The software is designed so that new functionality can be added without changes to the existing application code, while horizontal scaling of performance is preserved. The modification of this layer is the ability to manage data storage: it offers a choice of storage location (on the database server or in Internet storage) and supports distributed storage and reading across different data stores. The server part is accessible via URLs within the network. In addition to the application logic, it provides the Web interface through which all backup tasks are managed and configured. The user can also link the necessary computers to the account and create backup tasks on each of them. A task has the following settings: file selection, compression, selection of storage locations, schedule, status notification and encryption. It also keeps the task history and the saved data itself, which the user can download.

The database server (data layer) provides data storage and forms a separate, third tier. In the software it is implemented with the MongoDB database management system, and connections to it are accepted only from the application server tier. The modification of this layer is distributed data storage, which provides greater reliability and faster data reading [9].
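The client-side modification, storing status messages while the network is unavailable and sending them later, can be sketched as an outbox queue. The class below is a hypothetical illustration: the `send` callable stands in for the real transport to the application server and is assumed to raise `ConnectionError` when the server cannot be reached. A real client would also persist the queue to disk so that messages survive a restart.

```python
from collections import deque


class StatusOutbox:
    """Queue task-status messages while offline and flush them in order."""

    def __init__(self, send):
        self._send = send        # callable(message); raises ConnectionError offline
        self._pending = deque()  # oldest message first

    def report(self, message):
        """Queue a message and try to deliver everything that is pending."""
        self._pending.append(message)
        return self.flush()

    def flush(self):
        """Deliver pending messages in order; return how many were sent."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break            # still offline: keep the rest for later
            self._pending.popleft()
            sent += 1
        return sent

    def pending_count(self):
        return len(self._pending)
```

A message is removed from the queue only after `send` returns, so a failure in the middle of a flush never loses a status update or reorders the remaining ones.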
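The task settings listed above map naturally onto a small record type. The following sketch shows one possible shape of such a record; all field names, the schedule format and the `record_run` helper are assumptions for illustration and do not describe the actual data model of the software.

```python
from dataclasses import dataclass, field


@dataclass
class BackupTask:
    computer_id: str               # computer linked to the user's account
    paths: list[str]               # file selection
    storages: list[str]            # selected storage locations
    schedule: str                  # schedule expression; the format is an assumption
    compress: bool = True
    encrypt: bool = False
    notify_on_status: bool = True
    history: list[dict] = field(default_factory=list)

    def record_run(self, status: str, finished_at: str) -> None:
        """Append one entry to the task history."""
        self.history.append({"status": status, "finished_at": finished_at})


task = BackupTask(
    computer_id="office-pc-1",
    paths=["/home/user/documents"],
    storages=["local-server"],
    schedule="daily 02:00",
)
task.record_run("success", "2018-05-01T02:03:00")
```

Using `default_factory` for `history` gives every task its own list, so runs recorded for one task never leak into another.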

3.2 Data storage

Today there are many cloud services that let users store data on the provider's storage media. The developed software supports distributed data storage both on the user's own disk space and in popular cloud storage services (Dropbox, Google Drive, Mega, OneDrive, etc.). Within the software, the disk space of all services selected by the user is presented as a single storage. Elements of artificial intelligence were also designed to track the data that is uploaded and read and to automatically move the most frequently used files to the service with the best data exchange rate and the less frequently used files to the service with the worst data exchange rate [10].
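The placement idea can be illustrated with a simple ranking heuristic: sort the files by how often they are accessed, sort the services by measured data exchange rate, and assign the files in equal groups from the fastest service to the slowest. This is only a sketch of the principle, not the method designed in the work; the function name, the input dictionaries and the equal-group split are assumptions, and storage capacity limits are ignored.

```python
def assign_files(access_counts, service_rates):
    """Map each file to a service: most-used files go to the fastest service.

    access_counts -- dict: file name -> number of recorded accesses
    service_rates -- dict: service name -> measured data exchange rate
    """
    if not service_rates:
        raise ValueError("at least one storage service is required")
    services = sorted(service_rates, key=service_rates.get, reverse=True)
    files = sorted(access_counts, key=access_counts.get, reverse=True)
    # Ceiling division: size of the group of files placed on each service.
    per_service = -(-len(files) // len(services))
    return {f: services[i // per_service] for i, f in enumerate(files)}


placement = assign_files(
    {"report.docx": 100, "db.dump": 50, "old.zip": 10, "archive.tar": 1},
    {"slow-cloud": 1.0, "fast-cloud": 10.0},
)
print(placement)
```

In this example the two most frequently accessed files are placed on `fast-cloud` and the other two on `slow-cloud`.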

Conclusion

This master's thesis is devoted to a relevant scientific task: creating a software system for remote data backup in corporate networks. Within the research carried out:

- The functionality that users need was identified from an analysis of the advantages and disadvantages of existing data backup software.

- The software architecture was designed and described.

- Modern tools for developing all of the required functionality were selected and justified.

- The software itself was developed.

The thesis identified and justified the need to develop this software and estimated its future relevance on the basis of statistics for alternative software.

References

- Ольшевский А.И. Исследование и проектирование программного комплекса удаленного резервного копирования данных [Текст] / А.И. Ольшевский, В.С. Нестеренко // Сб. мат. «Информатика, управляющие системы, математическое и компьютерное моделирование» (ИУСМКМ – 2018). – Донецк : ДонНТУ, 2017. – С. 61-64

- Ольшевский А.И. Система удаленного резервного копирования данных для корпоративных сетей [Текст] / А.И. Ольшевский, В.С. Нестеренко // Сб. мат. «Программная инженерия: методы и технологии разработки информационно-вычислительных систем» (ПИИВС – 2018). – Донецк : ДонНТУ, 2018. – С. 88-92

- Бычкова Е.В. Программное средство создания резервных копий данных [Текст] / Е.В. Бычкова, В.С. Нестеренко // Сб. науч. тр. «Информатика, управляющие системы, математическое и компьютерное моделирование» в рамках III форума «Инновационные перспективы Донбасса» (ИУСМКМ – 2017). – Донецк : ДонНТУ, 2017. – С. 381-384

- Silberschatz A. Operating System Concepts / Silberschatz A., Galvin P.B., Gagne G. – Salt Lake City : John Wiley & Sons, 1994. – 780 p.

- Gupta M. Storage Area Network Fundamentals / Gupta M. – Indianapolis : Cisco Press, 2002. – 320 p.

- Jepsen T.C. Distributed Storage Networks: Architecture, Protocols and Management / Jepsen T.C. – Raleigh : John Wiley & Sons, 2003. – 338 p.

- El-Rewini H. Advanced Computer Architecture and Parallel Processing / El-Rewini H., Abd-El-Barr M. – Hoboken : John Wiley & Sons, 2005. – 288 p.

- Столлингс В. Компьютерные сети, протоколы и технологии Интернета / В. Столлингс – СПб. : БХВ-Петербург, 2005. – 752 с.

- Хокинс С. Администрирование Web-сервера Apache и руководство по электронной коммерции / С. Хокинс – СПб. : Вильямс, 2001. – 336 с.

- Джордж Ф. Искусственный интеллект. Стратегии и методы решения сложных проблем / Ф. Джордж – СПб. : Вильямс, 2001. – 864 с.