Vavrinyuk Igor

Faculty of Institute of informatics and Artificial Intelligence

Department of artificial intelligence systems

Speciality “Artificial intelligence systems”

Development and research of model caching in control systems Intelligent Systems

Scientific adviser: k.t.n., Babakov Roman

Summary on the theme of master's work

Introduction

1. Statement of the problem.

2. Analysis of the literature.

3. The purpose of the study

4. Statement of the research problem.

5. Solution of the problem and the results of research.

6. Printsip work

Conclusion

References

Introduction

One of the important factors increasing the performance of the processor, is the presence of cache memory, or rather its size, speed of access and distribution levels.

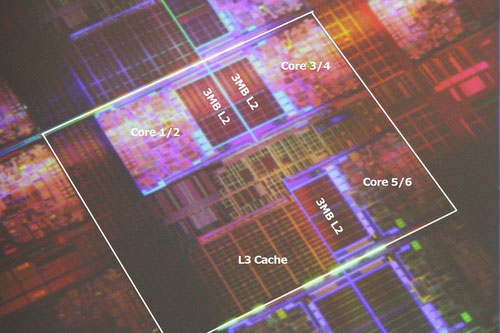

Figure 1 – Presentation Kesh memory

For a long time almost all of the processors are equipped with this type of memory, which again proves the usefulness of its presence. In this article, we will talk about the structure, purpose and practical levels of cache memory as a very important characteristic protsessora.Kesh memory - it's super-fast processor, memory used to temporarily store data, which are most frequently used. That's it, in short, can be described this type of memory. It is based on a trigger that, in turn, consist of transistors. Group transistor occupies much more space than the same capacitor, which consists of the RAM. It pulls a lot of difficulties in production, as well as limitations in volume. That is why the cache memory is a very expensive memory, while having negligible volumes. But from such a structure, it follows the main advantage of this memory - speed. Since flip-flops do not require the regeneration valve and the delay time at which they are collected is small, while the trigger switching from one state to another takes place very quickly. This allows the cache to operate on the same frequencies as the modern protsessory.Takzhe, the important factor is the placement of the cache. Placed it at the processor chip, which significantly reduces the time to access it. Previously, the cache memory of some levels, was placed outside the processor chip, a special chip SRAM somewhere on the motherboard. Now, almost all processors, cache memory is available on the processor die.

Figure 2 – Distribution Kesh memory practically by all contemporary processor

1. Statement of the problem

Algorithms of the cache memory in modern digital intelligent systems are quite complex. At the same time, to the efficiency of the cache memory rather high demands put forward, due to its relatively high cost in the digital device. Ability to modify the parameters of the module cache allows them to pick up such a value at which the cache will have a maximum efficiency for a given firmware. This optimization characteristics of the module cache will improve the performance of digital control and the potential to expand the scope of its application.

2. Analysis of the literature

In [1] discussed the organization and functioning of the digital control unit on the basis of compositional microprogram control unit for which a [2-5] proposed a method for increasing the performance by using the module cache microcode, as well as the issues of architectural organization of the cache module memory. In rabotak [6-8] developed a heuristic algorithm to improve the efficiency of the cache module in the compositional microprogram control code division based on addressing specific operational linear chains. A number of strategies for combining multiple operator chains in the same block of memory, allowing a general increasethe probability of cache hits for the flow-chart of the algorithm implemented upravleniya.Rassmotren example of the use of the proposed heuristic algorithm. As in [9] presented a method of synthesis of the basic structure of compositional microprogram control unit with the division of codes and caching microcode. The method is based heuristic distribution of operational linear chains in the control memory address space, providing maximum control algorithm implemented for the probability of cache hits. The sources [10-11] the systems architecture-based processors Intel ® Xeon ® processor 5600 series and their performance. As in the case of processors 5500 series, 5600 series processor architecture creates custom-Chicama some difficulties due to the high flexibility and a wide array of configurations, the proposed Mykh this new platform. Performance analysis in this review covers parameters such as latency of main memory, memory bandwidth and performance. Then, in the review examines the analytical results ofprevious technical reviews for the 5500 series and new analytical results and performance issues associated with the frequency of memory and filling the memory slots in a particular system. In addition, in this data, we analyze the optimal memory configuration, and outlines the best standard procedures and guidelines for con-gurirovaniyu related platforms IBM.

3. The purpose of the study

Formulate requirements for the development of computer simulation and analytical model of cache microcode in the digital control unit intelligent system.

4. Statement of the research problem

In this paper we solve the problem of constructing a scientific computer simulation and analytical model of cache memory module, allowing to determine the effectiveness of the cache memory for the given parameters. To solve this problem, do the following:

- a list of parameters to define the module cache affecting the amount of efficiency;

- to obtain analytical expressions for the performance;

- to formulate the basic requirements for the program model.

5. Solution of the problem and the results of research

Cache memory is used to store microcode sequences that can be configured compositional microprogram control unit (CMCU) in the near future. Statistical analysis of modern computers shows that about 90% of the data requested by the computing device, typically located in the cache memory. This value is called the probability or cache hit.

Since the cache is faster than the control memory, which is based in element basis ROM, you can determine the average waiting time of the entire system memory (average memory access time relative to the combination of CMCU).

following notation:

- Ph - the probability of a cache hit;

- tUP - time access control memory;

- tc - the search in the cache memory and a choice of data required in the event of a cache hit;

- tm - time access to data in case of a cache miss, while tm = tUP + ts, where ts - the so-called time losses in the cache miss it takes to search for the requested data in the cache and the data from the control room cache memory. Then

average waiting time tE of the memory system including a cache memory, is defined as: Te = R * t + (1-P) * tm

of expression implies that, to achieve high-speed access, which is close to the speed of accessing the cache hit rate will require about 90%.

Another way of assessing the effectiveness of cache memory is to determine how the cache memory increases the speed of access to the system memory. Assuming that generally tc Expression (3) clearly shows that at tUP = tm value of E> 1 for any Ph> 0, then there is a constant gain in speed. Since tm = tUP + ts, the obvious is the desire to reduce ts to come as close to tm tUP. If tUP >> tc, then the Ph> 0,9 have multiple winning E. For example, if tUP = 200 ns, tc = 10 ns and Ph = 0.9 gain is 6.9, which is almost 700%. Based on the above it can be concluded that the increase in performance gain in the system cache memory compared to the system without a cache contribute: - increasing the likelihood of cache hits Ph; - reducing the time loss in the cache miss ts; - reducing the access time to the cache tc.

In terms of magnitude tc and ts are defined element base and functional organization of equipment. The specific values ??of these variables can be determined if the time and order of operation of electronic circuit components cache and cache controller. most difficult to determine the probability of cache hits Ph, because this value depends on the selected characteristics of the cache memory and from the control algorithm implemented CMCU. The value Ph may vary over the entire range of probability - from 0 to 1 - and thus significantly affect the efficiency of the cache CMCU. If quantities tc and tm can easily obtain accurate values, even approximate determination of the value Ph is difficult, because the situation of cache hits and cache misses are not apparent from the firmware, and predetermined characteristics of the cache memory. To determine the probability of cache hits for a given flow-chart of the control algorithm and the characteristics of the module cache invited to perform computer simulation and analytical modeling of the structure CMCU with cache memory for different types of settings and architectural module cache. The model must implement process and the functioning of CMCU module cache by collecting statistical information on situations of cache hits and cache misses. In this case, it is obligatory to implement replacement algorithms of data in the cache module. input data to determine the likelihood of cache hits assume Ph: - graph chart of a control circuit interpreted CMCU; - transition probabilities in logical terms, the analyte in the GSA; - addressing microcode that defines the location of microcode in the control memory address space; - by lines and words in the cache. Count-chart will be considered for the implementation of the transformed circuit control unit with a natural addressing of microinstructions. Also, we assume that the first and last vertices in the GSA are always top of the operator (in their absence, they must be added artificially.) This condition is necessary to establish the start and end points of the control algorithm. transition probabilities logical conditions known because we believe that these values ??may depend not only on the results of the micro-ops, but also on external factors (eg, sensors). These values ??can not be derived analytically, but only by experience in the operation of CMCU that implements the algorithm in question, in the real world. microinstruction addresses and cache sizes are factors which change may increase or decrease the probability of a cache hit for the predetermined algorithm. The initial assignment of addresses microcode can be caused by various reasons, such as pre-oiled with sophisticated algorithm microprogram transitions or implemented automatically with "hard" logic. Content microcode operating at the stage of determining the probability of a cache hit does not matter. The actions performed in them, or does not affect the execution of the algorithm, or affect the transition probabilities of Latvia, which is assumed to be given. Therefore analyzed the control algorithm takes the abstract. Thus, we formulate the basic requirements for the developed model: 1. Using as input the values of the following information: - firmware corresponding to the predetermined control algorithm; - the probabilities of logical conditions; - parameters for the cache (architectural organization, the number of rows and columns in the data). 2. Simulation of the operation of CMCU with cache memory with the collection of statistical information on the situation of cache hits and cache misses. 3. Analytical determination of the effectiveness of different cache architectures for a given control algorithm. The ultimate goal of the designer of digital systems based on CMCU with cache memory architecture is the choice of the cache memory module, suitable for a development project. Thus one of the selection criteria is the speed of the circuit control device, to a large extent determined by the value of the probability of cache hits. Thus, the sequential solving of problems in this paper solves the problem of feature selection and type of cache memory architecture for a given firmware. is the speed of access to the system memory. Assuming that generally tc Expression clearly shows that at tUP = tm value of E> 1 for any Ph> 0, then there is a constant gain in speed. Since tm = tUP + ts, the obvious is the desire to reduce ts to come as close to tm tUP. If tUP >> tc, then the Ph> 0,9 have multiple winning E. For example, if tUP = 200 ns, tc = 10 ns and Ph = 0.9 gain is 6.9, which is almost 700%. Based on the above it can be concluded that the increase in performance gain in the system cache memory compared to the system without a cache contribute: - increasing the likelihood of cache hits Ph; - reducing the time loss in the cache miss ts; - reducing the access time to the cache tc. This section describes typical data cache and some instruction caches; TLB can be arranged more difficult, and the instruction cache - easier. The diagram on the right shows the main and cache memory. Each line - a group of memory cells contains data organized in a cache line. The size of each cache line can vary in different processors, but for most x86-processors it is 64 bytes. The size of the cache lines are usually larger than the data to which access is possible from one machine instruction (typical dimensions of 1 to 16 bytes). Each group of data in the memory size of one cache line has a sequence number. For the main memory, this number is the address of the memory with dropped low bits. The cache of each cache line further with the tag, which is the address duplicated in the cache line data in the main memory. By accessing the processor to the memory of checking whether the cache stores the requested data from memory. This is accomplished by comparing the request address with the values ??of all the tags in the cache to which the data can be stored. Coincidence with the tag of a cache line is called a cache hit (born cache hit), the opposite case is called a cache miss (born cache miss). Hit in the cache allows the processor can immediately read or write data in the cache line with the matched tag. The ratio of cache hits to total requests to the memory called hit rating (born hit rate), it is a measure of the effectiveness of the cache for the selected algorithm or program. In the case of a miss, the cache is allocated a new record, which is written to the tag address of the current request, and in itself a cache line - data from memory after they have been read or write data to memory. Slips in reading delay execution because they require data request in the slower main memory. Slips on the record can not give a delay because the data recorded can be immediately stored in the cache, and writing them into the main memory can be performed in the background. Work instruction caches in many ways similar to the above algorithm of data cache, but the instructions are executed only for read requests. Instruction and data caches can be divided to increase the productivity (the principle used in the Harvard architecture) or combined to simplify the hardware implementation. To add data to the cache after a cache miss may require displacement (born evict) previously recorded data. To select the replaced line is used heuristic, called substitution policies (born replacement policy). The main problem of the algorithm is the prediction of what line are not likely to be required for subsequent operations. Qualitative predictions are complex, and hardware caches use simple rules, such as LRU. Mark certain areas of memory as non-cacheable (born non cacheable) improves performance by caching the ban is rarely used data. Such memory lapses do not create a copy of the data in the cache. Figure 3 - Work cache memory Representation of ontologies in the form of a finite state machine with no exits allows you to enter transactions on ontologies. Operations on the machines mean operations on regular languages, which is accepted by these automata. When recording data in the cache must be a certain time when they are stored in main memory. It is now controlled by the policy record (born write policy). For a write-through cache (born write-through), any entry in the cache leads to an immediate write to memory. Another type of cache, write-back English. write-back (sometimes called copy-back) postpones the recording at a later time. In these caches, track the status of cache lines is not yet released to the memory (mark bit "dirty" Eng. Dirty). Storing produced by displacing a similar line from the cache. Thus, a miss in the cache that uses a write-back policy, the two operations may require access to the memory, one to reset the old line and the other - to read new dannyh.Suschestvuyut also mixed policy. The cache may be write-through (English write-through), but to reduce the number of transactions on the bus recording can be temporarily placed in a queue and associate with each other. Data in main memory can be changed not only the processor, but also peripherals using direct memory access, or other processors in a multiprocessor system. Changing the data leads to the obsolescence of their copies in the cache (state stale). In another embodiment, when a processor modifies data in the cache copy of the data in the caches of the other processors will be marked as stale. In order to maintain the contents of a cache up to date using a special cache coherency protocol. An approach to the determination of the optimal characteristics of the module cache microcode for maximum efficiency of its operation in the control unit of the intelligent system based on computer modeling. The analytical expressions for determining the efficiency of the module cache. The basic requirements for computer simulation and analytical model control devices with cache memory. As further research in this area relates to the actual development of the model and the development of the methodology with the help of experimental studies. 1. Баркалов А.А. Синтез устройств управления на программируемых логических устройствах / А.А. Баркалов. — Донецк: ДонНТУ, 2002. — 262 с. 2. Бабаков Р.М. Применение принципов кэширования в композиционных микропрограммных устройствах управления / А.А. Баркалов, С.А. Ковалев, Р.М. Бабаков // Управляющие системы и машины. — 2001. — № 5. — С. 26-33. 3. Бабаков Р.М. Организация композиционных микропрограммных устройств управления с кэш-памятью / С.А. Ковалев, Р.М. Бабаков // Вісник східноукраїнського національного університету ім. Володимира Даля. — 2002. — №8. — С. 123-126. 4. Бабаков Р.М. Кэш-память микрокоманд в композиционных микропрограммных устройствах управления / А.А. Баркалов, С.А. Ковалев, Р.М. Бабаков // Зб. наукових праць ДНТУ. Серія “Інформатика, кібернетика та обчислювальна техніка”. Вип. 70. — Донецьк: ДонДТУ, 2003. — С. 90-97. 5. Бабаков Р.М. Эвристический подход к оптимизации работы кэш-памяти в композиционном микропрограммном устройстве управления / С.А. Ковалев, И.И. Агаркова, Р.М. Бабаков, Д.В. Николаенко // Сборник трудов XVIII международной научно-технической конференции «Машиностроение и техносфера XXI века», г. Севастополь, 12-17 сентября 2011 г. В 4-х томах. — Донецк: ДонНТУ, 2011. Т. 2. — С. 57-61. 6. Агеев М.С. Извлечение значимой информации из web-страниц для индексирования / М.С. Агеев, И.В. Вершинников, Б.В. Добров // «Интернет-Математика-2005»: семинар в рамках Всеросс. науч. конф. RCDL'2005. — 2005. — С. 283-301. 7. Булгаков С.В. Использование кеш памяти для построения инновационных цепочек в системе поддержки инновационной деятельности в регионе / С.В. Булгаков, Ю.А. Загорулько // Труды VI-й Междунар. конференции «Проблемы управления и моделирования в сложных системах». — Самара: Самарский Научный Центр РАН, 2004 — С. 328-333. 8. Овдей О.М. Обзор инструментов инженерии кеш памяти / О.М. Овдей, Г.Ю. Проскудина // Журнал ЭБ. — 2004 — С. 25-26. 9. Бениаминов Е.М. Алгебраические методы в теории баз данных и представлении знаний / Е.М. Бениаминов. — М.: Научный мир, 2003 — 184 с. 10. Бевзов А.Н. Разработка методов автоматического индексирования текстов на естественном языке для информационно-поисковых систем / А.Н. Бевзов // Труды X Всеросс. науч. конф. Электронные библиотеки: перспективные методы и технологии, электронные коллекции — RCDL'2008 — С. 401-404. 11. Королев А.Н. Лингвистическое обеспечение информационно-поисковой системы Excalibur RetrievalWare: Аналитический аспект / А.Н. Королев // материалы конференции «Корпоративные Информационные Системы», 1999. — С.44-47. Important note This master's work is not completed yet. Final completion: December 2013. The full text of the work and materials on the topic can be obtained from the author or his head after this date. 6.Printsip work

(Animation: 9 frames, 15 cycles of repetition, 107 KB) Conclusions

References