Abstract on the topic of graduation work

Contents

- Introduction

- 1. Relevance of the topic

- 2. Purpose of the study

- 3. General provisions

- 3.1 Relevance

- 3.2 Automated translation

- 3.2.1 Statistical machine translation

- 3.2.2 Neural Machine Translation

- 3.3 Overview of existing systems

- 4. Mathematical setting

- 4.1 Choice of architecture

- 4.2 Modes of operation of the recurrent network

- 4.3 Text representation

- 5. Solutions

- 5.1 Recurrent neural networks

- 5.2 LSTM

- 5.3 Digitizing text

- 5.4 Development environment

- List of sources

Introduction

Translation, like language itself, remains the most universal means of communication between people. It is needed in interpersonal contacts, in practical and industrial activity, and in communication between nations, and its importance is especially evident in the dialogue of cultures. The growing globalization of the world economy and the strengthening of international relations encourage more and more companies engaged in international cooperation to improve their efficiency and professionalism in intercultural and business communication. Translation affects many areas of human activity and plays an important role in the development of science and technology, directly contributing to progress by making communication and the transfer of experience possible.

The work of a translator is a linguistic phenomenon with a complex structure. Performing it well requires both practical and theoretical knowledge. Specialists are increasingly expected to keep up with the modern realities of the profession and to apply the latest systems effectively.

A modern translator must not only possess extensive linguistic knowledge, but also be technically savvy.

Due to the globalization of business, the emergence of new types of content and the explosive growth in information consumption, the translation industry, like many others, is undergoing rapid changes. The demand for translation is growing rapidly, and with it the need for technologies that increase the productivity of translation processes. Computer-aided translation systems are now widely used in translation work.

Automated translation draws on a range of scientific disciplines, from linguistics to mathematics and cybernetics. Automated translation tools vary considerably in their structure and working methods. Their common feature is that they improve the working conditions of the translator, often by automatically generating the required part of the text in the target language under the supervision of a specialist, using special rules for translating grammatical structures. The degree of specialist control over these tools during translation can also vary significantly. How effective these systems are in practice depends on the knowledge and professional training of the user, as well as on the ability to learn new software quickly.

Translation of syntactic constructions is one of the main problems and difficulties in translation activities. It is the coherent and equivalent transformation of these structures of different types that represents the main challenge in the work of a specialist. This work is intended to investigate the effectiveness of automated translation as a tool for solving this problem.

1. Relevance of the topic

The relevance of the work stems from the need for a deeper study of automated translation programs and tools and of how they function, and from the significant number of new trends in computational linguistics. The potential benefits of automated translation systems have not been fully explored or integrated into translation practice, while the number of requirements and skills expected of a professional translator grows from year to year.

2. Purpose of the study

The purpose of the research is to study the specifics, processes and mechanisms of automated translation of syntactic structures from English into Russian, and to develop an automated translator for the field of IT.

3. General provisions

3.1 Relevance

The relevance of the project is due to the need for a translator for technical texts. Existing translation systems are focused on spoken language and do not always translate technical texts correctly. The task of the project is to develop a translator specialized exclusively in IT texts.

3.2 Automated translation

Automated translation is the translation of texts with the help of computer technology. [1] Automated translation tools vary considerably in their structure and methods of operation. Their common feature is that they improve the working conditions of the translator, often by automatically generating the required part of the text in the target language under the supervision of a specialist, using special rules for translating grammatical structures.

3.2.1 Statistical machine translation

Statistical machine translation is a type of machine translation where the translation is generated based on statistical models, the parameters of which are derived from the analysis of bilingual text corpora. [2]
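As an illustration of what such a statistical model can look like, the classical noisy-channel formulation is sketched below. It is not taken from the cited source; the symbols $f$ (source sentence) and $e$ (candidate target sentence) are introduced here only for the example:

$$\hat{e} = \arg\max_{e} P(e \mid f) = \arg\max_{e} P(f \mid e)\, P(e)$$

In this formulation, the translation model $P(f \mid e)$ is estimated from a bilingual corpus, and the language model $P(e)$ from text in the target language.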

The developers of machine translation systems introduce some end-to-end rules to improve quality, thereby transforming purely statistical systems into hybrid machine translation systems. Adding such rules somewhat improves the quality of translations, especially when there is not enough input data to build the machine translator's index.

3.2.2 Neural Machine Translation

Neural Machine Translation is an approach to machine translation that uses a large artificial neural network. [3]

NMT models use deep learning and feature learning. They require only a small fraction of the memory needed by traditional statistical machine translation (SMT) systems. In addition, unlike traditional translation systems, all parts of the neural translation model are trained jointly (end to end) to maximize translation performance.

A bidirectional recurrent neural network (RNN), known as the encoder, encodes the source sentence for a second recurrent network, known as the decoder, which predicts words in the target language.

3.3 Overview of existing systems

Google Translate

Google Translate is a Google web service that automatically translates a portion of a text or a web page into another language. [4]

Google uses its own software, which is assumed to be a self-learning machine translation algorithm. In March 2017, Google completely switched the translation engine to neural networks to improve translation quality. Because the choice of variants is controlled by a statistical algorithm, Google Translate may suggest obscene words among the possible variants when translating common words. The output can also be influenced by users who massively suggest a particular, possibly deliberately incorrect, translation. Google Translate offers translation between any pair of supported languages, but in most cases it actually translates through English, and quality sometimes suffers greatly as a result. For example, when translating from Polish into Russian, grammatical cases are usually broken (even when they are the same in Russian and Polish). Some languages go through a two-stage process, first through a closely related language and then through English.

Yandex.Translate

Yandex.Translate is a Yandex web service designed to translate a part of a text or a web page into another language. [5]

The service uses a self-learning statistical machine translation algorithm developed by the company's specialists. The system builds its correspondence dictionaries by analyzing millions of translated texts. It first compares the text to be translated with a database of words and then with a database of language models, trying to determine the meaning of an expression in context. Like other automatic translation tools, Yandex.Translate has its limitations: it aims to help the reader understand the general meaning of a text in a foreign language and does not provide accurate translations. The company continues to work on translation quality and on support for additional languages.

DeepL

DeepL is an online translator powered by machine translation, launched by DeepL GmbH of Cologne in August 2017. [6] The service translates between 72 language pairs across German, English, French, Dutch, Polish, Russian, Italian, Spanish and Portuguese. It uses convolutional neural networks trained on Linguee data. Translations are generated by a 5.1-petaflops supercomputer powered by electricity from a hydroelectric power plant in Iceland. Convolutional neural networks are, as a rule, somewhat better suited to translating long sequential phrases, but competitors have so far preferred recurrent neural networks or statistical translation.

4. Mathematical setting

4.1 Choice of architecture

There are many types of neural network architectures.

Popular ones are:

- Recurrent neural networks (a class of neural networks well suited to modeling sequential data such as time series or natural language); [7]

- Convolutional neural networks (a special architecture of artificial neural networks aimed at efficient pattern recognition; part of deep learning technologies); [8]

- Combined neural networks (networks that can understand what is in an image and describe it and, conversely, draw images from a description).

To build a translator, you need to work with sequences. For this, a recurrent neural network will be used.

4.2 Modes of operation of the recurrent network

A recurrent neural network can operate in various modes:

- One input – one output;

- One input – a sequence of outputs;

- A sequence of inputs – a sequence of outputs;

- A sequence of inputs – one output.

The input of the neural translator can be a single word, a whole sentence, or a longer text. Therefore, both the input and the output of the network, treated here as a black box, must be sequences.

4.3 Text representation

The text must be presented in digital form. There are three ways to encode it:

- Numeric encoding;

- One-hot encoding (binary vector representation);

- Dense vector representation (a vector of arbitrary real numbers).

Since the input will be a sequence, a vector representation must be used. A dense vector representation is advisable, since the resulting vector is smaller.

5. Solutions

5.1 Recurrent neural networks

An important feature of machine translation, as of any other natural language task, is the variable length of the input $X = (x_1, x_2, \ldots, x_T)$ and the output $Y = (y_1, y_2, \ldots, y_{T'})$. In other words, $T$ and $T'$ are not fixed.

To work with variable-length input and output, a recurrent neural network (RNN) is used. Widely used feedforward neural networks, such as convolutional neural networks, do not keep an internal state between inputs; they rely only on the network's own parameters. Each time a data item is fed into a feedforward network, its internal state (that is, the activations of the hidden neurons) is recalculated, unaffected by the state computed for the previous data item. In contrast, an RNN retains its internal state while reading the input sequence of data items, which in our case is a sequence of words. Therefore, an RNN can handle input data of arbitrary length.

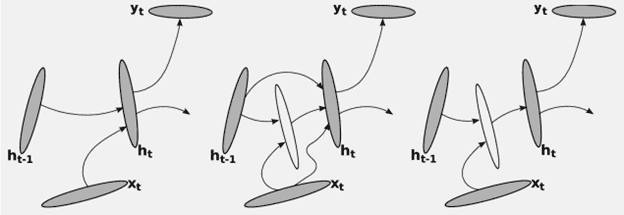

The main idea of the RNN is to use recursion to form a vector of fixed dimension from the input sequence of symbols. Suppose that at step t we have a vector ht – 1 representing the history of all previous symbols. The RNN will calculate a new vector ht (that is, its internal state), which combines all the previous symbols (x1, x2, ..., xt – 1) , as well as a new symbol xt using:

where φΔ is a function parameterized by θ that takes as input a new symbol xt and a history ht – 1 up to the (t – 1) th symbol. Initially, we can safely assume that h0 is a zero vector.

Drawing 1 – Recurrent neural network

The recurrent activation function $\phi_\theta$ is usually implemented as, for example, a simple affine transformation followed by an element-wise nonlinear function $g$ (such as $\tanh$):

$$h_t = g(W x_t + U h_{t-1} + b)$$

This expression contains the following parameters: the input weight matrix $W$, the recurrent weight matrix $U$, and the bias vector $b$. It should be noted that this is not the only option. There is ample room for developing new recurrent activation functions.
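The recurrence described above can be sketched in plain Python. This is a minimal illustration, not the project's implementation: the choice of tanh as the element-wise nonlinearity, the function names `rnn_step` and `encode`, and the toy parameter values are all assumptions made for the example.

```python
import math

def rnn_step(x_t, h_prev, W, U, b):
    """One recurrent step: affine transformation followed by tanh (assumed nonlinearity)."""
    h_t = []
    for i in range(len(b)):
        # Affine part for hidden unit i: (W x_t)_i + (U h_prev)_i + b_i
        s = b[i]
        s += sum(W[i][j] * x_t[j] for j in range(len(x_t)))
        s += sum(U[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(s))
    return h_t

def encode(sequence, W, U, b):
    """Fold a variable-length sequence of input vectors into one fixed-size state."""
    h = [0.0] * len(b)  # h_0 is the zero vector
    for x_t in sequence:
        h = rnn_step(x_t, h, W, U, b)
    return h

# Toy parameters (hypothetical): 2-dimensional inputs, 3-dimensional hidden state
W = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
U = [[0.1, 0.0, 0.2], [0.0, 0.1, -0.1], [0.3, -0.2, 0.1]]
b = [0.0, 0.1, -0.1]

short_seq = [[1.0, 0.0], [0.0, 1.0]]
long_seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]

# Both sequences are reduced to a state of the same dimension
print(len(encode(short_seq, W, U, b)), len(encode(long_seq, W, U, b)))
```

Sequences of two and four items both produce a three-element state, which is the fixed-dimension property the text relies on.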

5.2 LSTM

Long short-term memory (LSTM) is a special kind of recurrent neural network architecture capable of learning long-term dependencies. [9] LSTMs are specifically designed to avoid the long-term dependency problem. Remembering information for long periods of time is their normal behavior, not something they struggle to learn.

The LSTM structure is also chain-like, but its repeating modules look different. Instead of a single neural network layer, each module contains four, and these layers interact in a special way.

Figure 2 – LSTM network

The key component of an LSTM is the cell state, a horizontal line across the top of the diagram.

The state of the cell resembles a conveyor belt. It goes directly through the entire chain, participating in only a few linear transformations. Information can easily flow through it without being subject to change.
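The following sketch shows one step of an LSTM cell in plain Python. It follows the commonly used textbook formulation (forget, input and output gates plus a candidate layer), which is an assumption here since the text does not give the equations; the names `lstm_step`, `affine` and the `params` dictionary keys are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, v, b):
    """Return W v + b for a matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + b_i for row, b_i in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One step of a standard LSTM cell (assumed textbook formulation)."""
    z = h_prev + x_t  # concatenate previous hidden state and current input
    # The four interacting layers of the module
    f = [sigmoid(a) for a in affine(params["Wf"], z, params["bf"])]    # forget gate
    i = [sigmoid(a) for a in affine(params["Wi"], z, params["bi"])]    # input gate
    g = [math.tanh(a) for a in affine(params["Wg"], z, params["bg"])]  # candidate values
    o = [sigmoid(a) for a in affine(params["Wo"], z, params["bo"])]    # output gate
    # Cell state: changed only by element-wise scaling and addition
    c_t = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]
    return h_t, c_t

# Toy setup (hypothetical values): hidden size 2, input size 1.
# A large forget bias and a strongly negative input bias keep the gates
# almost fully "remember" and almost fully "ignore new input".
zeros = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
params = {
    "Wf": zeros, "bf": [10.0, 10.0],
    "Wi": zeros, "bi": [-10.0, -10.0],
    "Wg": zeros, "bg": [0.0, 0.0],
    "Wo": zeros, "bo": [0.0, 0.0],
}

h, c = [0.0, 0.0], [1.0, -2.0]
for x_t in [[0.3], [-0.7], [0.1]]:
    h, c = lstm_step(x_t, h, c, params)
print(c)  # stays very close to the initial cell state [1.0, -2.0]
```

With these gate settings the cell state passes through three steps almost unchanged, which illustrates the conveyor-belt behavior described above.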

5.3 Digitizing text

A neural network can only work with numbers, on which it performs various mathematical operations. Therefore, when we use neural networks to analyze data, the data must be converted into a set of numbers.

An image, for example, is a set of numbers that correspond to pixel intensities from 0 to 255.

When working with structured data (for example, tables), there are two options:

- If the data is numeric, you don't need to do anything with it;

- If the data is categorical (for example, gender: male or female), it is represented as one-hot encoded vectors.

To work with text, you need to break the text into separate parts, each of which will be presented in digital form separately.

You can split the text into:

- Characters (letters, digits, punctuation marks, etc., each represented in numerical form);

- Words (a number or a set of numbers is assigned to whole words rather than to individual characters);

- Sentences (whole sentences are represented as numbers).
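The three levels of splitting listed above can be sketched with the Python standard library. The regular expressions are deliberately naive and are assumptions for this example; a real tokenizer would need to handle abbreviations, numbers and other edge cases.

```python
import re

text = "The server returns a JSON response. The client parses it."

# Character-level tokens: every symbol, including spaces and punctuation
char_tokens = list(text)

# Word-level tokens: runs of word characters, or single punctuation marks
word_tokens = re.findall(r"\w+|[^\w\s]", text)

# Sentence-level tokens: naive split on whitespace that follows ., ! or ?
sentence_tokens = re.split(r"(?<=[.!?])\s+", text)

print(char_tokens[:5])   # ['T', 'h', 'e', ' ', 's']
print(word_tokens)
print(sentence_tokens)   # two sentences
```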

After splitting the text into separate tokens, it is necessary to convert each token to a numeric form.

To convert tokens to numeric form, you can use:

- Numeric encoding: each token is assigned a separate code, for example the frequency with which the token occurs in the text, or a code from a standard encoding (ASCII, UTF-8, etc.) in which each character of the alphabet corresponds to a certain numeric code;

- One-hot encoding: each token corresponds not to one number but to a vector of numbers. The vector contains as many elements as there are possible tokens, and all of them are zero except the one that corresponds to the given token. This encoding is also used at the output of neural networks in classification problems and to represent the correct answers in supervised learning;

- Dense vector representation: each token is again assigned a vector rather than a single number, but its dimension is lower than with one-hot encoding because the vector can contain any numbers, not only zeros and ones.
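The three encodings can be compared on a toy token list. This is a sketch under stated assumptions: codes are assigned in order of first appearance (one possible numeric encoding), and the dense vectors are filled with random values that merely stand in for embeddings a network would learn during training. The names `vocab`, `one_hot` and `embedding_dim` are hypothetical.

```python
import random

tokens = ["the", "client", "sends", "the", "request"]

# Numeric encoding: one integer code per distinct token, in order of first appearance
vocab = {}
for tok in tokens:
    vocab.setdefault(tok, len(vocab))
codes = [vocab[tok] for tok in tokens]

# One-hot encoding: vector length equals the vocabulary size,
# with a single 1 at the position of the token's code
def one_hot(code, size):
    return [1 if k == code else 0 for k in range(size)]

one_hot_vectors = [one_hot(c, len(vocab)) for c in codes]

# Dense representation: a short real-valued vector per token.
# Random values are placeholders for learned embeddings.
rng = random.Random(0)
embedding_dim = 2  # hypothetical; smaller than the vocabulary size of 4
embeddings = {
    code: [rng.uniform(-1.0, 1.0) for _ in range(embedding_dim)]
    for code in vocab.values()
}
dense_vectors = [embeddings[c] for c in codes]

print(codes)               # [0, 1, 2, 0, 3]
print(one_hot_vectors[1])  # [0, 1, 0, 0]
print(len(one_hot_vectors[0]), len(dense_vectors[0]))  # 4 2
```

Even on this tiny vocabulary the dense vector is half the length of the one-hot vector; the gap grows with vocabulary size, since the one-hot length equals the number of distinct tokens.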

5.4 Development environment

The subsystem will be developed in Python using Google Colab, a service that allows you to run Jupyter Notebooks with free access to an Nvidia K80 graphics card.

Jupyter Notebook is an interactive computing environment for Python.

List of sources

- [1] Automated translation [electronic resource] // Internet resource – Access mode: https://dic.academic.ru/dic.nsf/ruwiki/30223

- [2] Statistical machine translation [electronic resource] // Internet resource – Access mode: https://intellect.icu/mashinnyj-perevod-vidy-i-osobennosti-9510

- [3] Neural machine translation [electronic resource] // Internet resource – Access mode: http://ru.wikipedia.org/wiki/Neural_machine_translation

- [4] Google Translate [electronic resource] // Internet resource – Access mode: https://ru.wikipedia.org/wiki/Google_Translator

- [5] Yandex Translator [electronic resource] // Internet resource – Access mode: https://habr.com/ru/company/yandex/blog/576438/

- [6] DeepL [electronic resource] // Internet resource – Access mode: https://www.deepl.com/ru/home

- [7] Recurrent neural networks [electronic resource] // Internet resource – Access mode: https://habr.com/ru/post/487808/

- [8] Convolutional neural networks [electronic resource] // Internet resource – Access mode: https://medium.com/@balovbohdan/convolutional neural networks

- [9] LSTM [electronic resource] // Internet resource – Access mode: https://habr.com/ru/company/wunderfund/blog/331310/