Abstract

Contents

- 1. Problem definition

- 2. Theme urgency

- 3. Goal and tasks of the research

- 4. Review of existing methods for the edge detection of the image

- 5. The proposed method

- Conclusion

- References

1. Problem definition

An image can be defined as a two-dimensional function f(x, y), where x and y are spatial coordinates and the value of f at any point (x, y) is the intensity, or gray level, of the image at that point. If x, y and the values of f are all finite, discrete quantities, the image is called a digital image. Digital image processing is the processing of such images by a digital computer. A digital image consists of a finite number of elements, each of which has a specific location and value. These elements are called picture elements, or pixels [1].

Psychological research has shown that, for recognizing and analyzing objects in an image, the most informative features are the characteristics of their boundaries (contours) rather than the brightness of the objects. In other words, the basic information is contained not in the brightness of individual regions but in their contours. The task of edge detection in image processing is to construct an image of the boundaries of objects and the contours of uniform regions.

An edge in an image is a set of pixels at which the brightness function changes abruptly. Because in digital processing an image is represented as a function of integer arguments, contours are represented by lines at least one pixel wide [2]. The input to this task is an image represented as a set of points (pixels), each characterized by a brightness value within a given range. The result is a set of points that defines the contours in the image.
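The input and output described above can be illustrated with a minimal sketch. The function name `edge_points`, the threshold and the comparison with the right and lower neighbours are assumptions made for illustration only; they are not the algorithm proposed in this work.

```python
def edge_points(image, threshold):
    """Return the set of (row, col) pixels where brightness jumps abruptly.

    `image` is a list of equal-length rows of integer brightness values.
    A pixel is reported when it differs from its right or lower neighbour
    by more than `threshold` (an illustrative criterion, assumed here).
    """
    rows, cols = len(image), len(image[0])
    points = set()
    for r in range(rows):
        for c in range(cols):
            right = abs(image[r][c] - image[r][c + 1]) if c + 1 < cols else 0
            down = abs(image[r][c] - image[r + 1][c]) if r + 1 < rows else 0
            if max(right, down) > threshold:
                points.add((r, c))
    return points


# A dark region (10) next to a bright region (200):
# the jump lies between columns 1 and 2.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
contour = edge_points(image, threshold=50)
```

Here the input is a brightness matrix and the output is a set of pixel coordinates, which matches the problem statement: a set of points goes in, and a set of contour points comes out.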

It is therefore necessary to develop an algorithm for detecting edges in an image. Since the human visual analyzer performs this task efficiently owing to its extremely high selectivity, the algorithm should be based on the structure and principles of the human visual system [3, 4].

2. Theme urgency

The main subject of this work is the detection of the edges of objects in an image. This task arises in many areas of modern life: in manufacturing, in the processing of visual information, in medical diagnostics, and so on. Solving it is therefore a topical problem.

Methods that address this problem already exist. However, in digital processing an image is given as a matrix of points, and not all of them carry useful information, which raises the problem of using resources rationally. We therefore need a method that filters out the uninformative part of the image and concentrates on the useful part. Human perception of visual information is an example of such processing. The aim of this work is to develop an algorithm based on the human visual analyzer.

3. Goal and tasks of the research

The aim of this work is to develop an edge detection algorithm based on the mechanism of the human visual analyzer.

Main tasks of the research:

- to study and analyze the existing approaches to detecting the contours of objects in an image;

- to examine the mechanism of human vision;

- to implement the developed algorithm.

Research object: the process of detecting edges in an image with an algorithm based on the mechanism of light perception by the retina of the human eye.

Research subject: contours of image objects.

4. Review of existing methods for the edge detection of the image

Edge detection is a term from the theory of image processing and computer vision, related in part to object detection and object extraction. It covers algorithms that identify the points of a digital image at which the brightness changes sharply or at which other kinds of non-uniformity occur.

Ideally, the result of edge detection is a set of connected curves that indicate the boundaries of objects, of facets and of markings on a surface, as well as curves that reflect changes in surface orientation. Applying an edge detection filter to an image can therefore significantly reduce the amount of data to be processed, because the part of the image that is filtered out is considered less important while the most important structural properties of the image are preserved. There are many approaches to edge detection, but almost all of them fall into two categories: methods based on finding maxima and methods based on finding zeros.

Methods based on finding maxima detect edges by computing an edge strength, usually a first-derivative expression such as the gradient magnitude, and then searching for local maxima of the edge strength using an estimate of the local contour direction, which is usually perpendicular to the gradient vector.
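The two steps just described can be sketched as follows. This is a simplified illustration, not one of the published detectors: the central-difference gradient, the restriction of the search direction to the dominant gradient axis, and the names `gradient`, `edge_strength`, `strength_maxima` and `min_strength` are all assumptions made here.

```python
import math


def gradient(image, r, c):
    """Central-difference estimate of (gx, gy) at an interior pixel."""
    gx = (image[r][c + 1] - image[r][c - 1]) / 2.0
    gy = (image[r + 1][c] - image[r - 1][c]) / 2.0
    return gx, gy


def edge_strength(image):
    """Gradient magnitude at every interior pixel; border pixels stay 0."""
    rows, cols = len(image), len(image[0])
    strength = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            strength[r][c] = math.hypot(*gradient(image, r, c))
    return strength


def strength_maxima(image, min_strength):
    """Pixels whose edge strength is a local maximum across the contour."""
    rows, cols = len(image), len(image[0])
    strength = edge_strength(image)
    maxima = set()
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            s = strength[r][c]
            if s < min_strength:
                continue
            gx, gy = gradient(image, r, c)
            # Step along the dominant gradient axis, i.e. across the contour.
            dr, dc = (0, 1) if abs(gx) >= abs(gy) else (1, 0)
            if s >= strength[r - dr][c - dc] and s > strength[r + dr][c + dc]:
                maxima.add((r, c))
    return maxima


# A blurred vertical step: brightness rises fastest around column 3.
row = [0, 0, 20, 200, 250, 250, 250]
image = [row[:] for _ in range(5)]
edges = strength_maxima(image, min_strength=30)
```

Although several columns of the blurred step have a non-zero gradient, only the column where the edge strength peaks is kept, which is what keeps the detected contour thin.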

Methods based on finding zeros look for zero crossings of a second-derivative expression, usually the zeros of the Laplacian or of a non-linear differential expression. Image smoothing, usually with a Gaussian filter, is applied as a preprocessing step before edge detection.
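A minimal sketch of this second family is given below. It assumes a 3x3 binomial kernel as a small stand-in for the Gaussian filter and a 4-neighbour discrete Laplacian; the names `smooth`, `laplacian`, `zero_crossings` and the `min_jump` parameter are illustrative choices, not part of any cited method.

```python
def smooth(image):
    """3x3 binomial blur, a small stand-in for a Gaussian filter."""
    kernel = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
    rows, cols = len(image), len(image[0])

    def pixel(r, c):
        # Replicate the border so every pixel has a full neighbourhood.
        return image[min(max(r, 0), rows - 1)][min(max(c, 0), cols - 1)]

    return [
        [
            sum(kernel[i][j] * pixel(r + i - 1, c + j - 1)
                for i in range(3) for j in range(3)) / 16.0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def laplacian(image):
    """4-neighbour discrete Laplacian at interior pixels; borders stay 0."""
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            out[r][c] = (image[r - 1][c] + image[r + 1][c]
                         + image[r][c - 1] + image[r][c + 1]
                         - 4 * image[r][c])
    return out


def zero_crossings(lap, min_jump):
    """Pixels where the Laplacian changes sign towards the right or lower neighbour."""
    rows, cols = len(lap), len(lap[0])
    points = set()
    for r in range(rows - 1):
        for c in range(cols - 1):
            for dr, dc in ((0, 1), (1, 0)):
                a, b = lap[r][c], lap[r + dr][c + dc]
                # min_jump suppresses weak sign changes caused by noise.
                if a * b < 0 and abs(a - b) >= min_jump:
                    points.add((r, c))
    return points


# A vertical step between columns 3 and 4.
row = [10, 10, 10, 10, 200, 200, 200, 200]
image = [row[:] for _ in range(6)]
crossings = zero_crossings(laplacian(smooth(image)), min_jump=10)
```

The Laplacian of the smoothed step is positive on the dark side and negative on the bright side, so the sign change marks the position of the edge.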

Edge detection methods differ in the smoothing filters they apply. Although many methods are based on computing the image gradient, they differ in the types of filters used to estimate the gradients in the x- and y-directions [5].

Methods based on computing the gradient. The best-known methods of this class are those of Sobel, Prewitt and Roberts. All of them rely on one of the basic properties of the luminance signal: discontinuity. The most common way to search for discontinuities is to process the image with a sliding mask, also called a filter kernel, window or template, which is a square matrix that corresponds to a given group of pixels of the original image. The elements of the matrix are called coefficients. Applying such a matrix in a local transformation is called filtering, or spatial filtering [6, 7].
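Spatial filtering with a sliding mask can be sketched as below, using the standard 3x3 Sobel and Prewitt coefficients. The helper name `apply_mask` and the choice to leave border pixels at zero are assumptions of this sketch; the 2x2 Roberts masks are omitted for brevity.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
PREWITT_X = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
PREWITT_Y = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]


def apply_mask(image, mask):
    """Slide a square mask over the image and sum coefficient * pixel products.

    Pixels whose neighbourhood would leave the image are left at 0.
    """
    rows, cols = len(image), len(image[0])
    size = len(mask)
    half = size // 2
    out = [[0] * cols for _ in range(rows)]
    for r in range(half, rows - half):
        for c in range(half, cols - half):
            out[r][c] = sum(
                mask[i][j] * image[r + i - half][c + j - half]
                for i in range(size)
                for j in range(size)
            )
    return out


# A vertical step: only the x-direction masks respond.
image = [[10, 10, 200, 200] for _ in range(4)]
sobel_gx = apply_mask(image, SOBEL_X)
sobel_gy = apply_mask(image, SOBEL_Y)
prewitt_gx = apply_mask(image, PREWITT_X)
```

The two operators differ only in their coefficients: Sobel weights the central row and column more heavily, so its response to the same step is larger than that of Prewitt.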

Methods based on finding zeros. Some edge detection operators use second derivatives of the image brightness instead of the gradient. In the ideal case, detecting the zeros of the second derivative identifies the local maxima of the gradient. The Marr-Hildreth operator is based on computing the zeros of the Laplacian applied to an image smoothed with a Gaussian filter. However, it has been shown that this operator reports false edges in homogeneous regions of the image where the gradient has a local minimum. In addition, it localizes curved edges poorly. The operator is therefore now mainly of historical interest. A more modern second-order approach to edge detection, which also detects edges with pixel precision, is the differential approach of finding the zeros of the second derivative in the direction of the gradient vector [8, 5].
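The two second-order conditions mentioned above can be written out explicitly. The notation is introduced here for illustration and is not taken from the cited sources: f is the image, G_σ is a Gaussian kernel, L is the smoothed image, and subscripts denote partial derivatives.

$$
L = G_\sigma * f, \qquad \nabla^2 L = L_{xx} + L_{yy} = 0
$$

$$
L_x^2 L_{xx} + 2 L_x L_y L_{xy} + L_y^2 L_{yy} = 0
$$

The first condition is the Marr-Hildreth criterion (zeros of the Laplacian). The second is the numerator of the second directional derivative of L along the gradient, so its zeros are the points where the gradient magnitude is stationary in the gradient direction. An additional sign condition on the third derivative in the same direction is normally used to keep the maxima of the gradient and reject the minima.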

5. The proposed method

The solution is divided into separate stages, each of which corresponds to a separate function. Consider the stages in sequence. The input is an original image I of N×M pixels, given by its brightness matrix. The image enters the system, which is an analogue of human perception in which the image falls on the retina of the eye. Each pixel of the image corresponds to a photoreceptor in the fovea of the retina.

Next, each pixel and its surrounding pixels are analyzed in sequence with respect to their brightness (see Fig. 1). This procedure is based on the mechanism of the photoreceptor cells [9]. It is the first stage of contour detection.

Figure 1 – Pixels are analyzed by the characteristic brightness

Here i0 is the brightness of the pixel being analyzed, and i1, i2, i3, i4 are the brightness values of the surrounding pixels. The next step is the analysis of the pixels in the horizontal cell layer, which handles the signals from the neighbouring pixels.
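A minimal sketch of this first stage is shown below. The text does not specify the order of i1..i4 or how the horizontal cell layer combines the neighbouring signals, so the clockwise order starting from the top, the helper names `neighbourhood` and `horizontal_signal`, and the "centre minus mean of the surround" rule are all assumptions made for illustration.

```python
def neighbourhood(image, r, c):
    """Return (i0, [i1, ...]): the centre brightness and its 4-neighbours.

    Neighbours are listed clockwise from the top (an assumed order).
    Neighbours outside the image are skipped, so border pixels
    return fewer than four values.
    """
    rows, cols = len(image), len(image[0])
    i0 = image[r][c]
    around = [
        image[rr][cc]
        for rr, cc in ((r - 1, c), (r, c + 1), (r + 1, c), (r, c - 1))
        if 0 <= rr < rows and 0 <= cc < cols
    ]
    return i0, around


def horizontal_signal(image, r, c):
    """Assumed horizontal-cell analogue: centre minus mean of the surround."""
    i0, around = neighbourhood(image, r, c)
    return i0 - sum(around) / len(around)


image = [
    [10, 10, 10],
    [10, 100, 10],
    [10, 10, 10],
]
centre = neighbourhood(image, 1, 1)
corner = neighbourhood(image, 0, 0)
contrast = horizontal_signal(image, 1, 1)
```

Under these assumptions, a pixel that stands out from its surround produces a large signal, while a pixel in a uniform region produces a signal of zero.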

The results of the previous stages are then passed to the analogue of the bipolar cells in the proposed algorithm. Here the brightness of a pixel is compared with a predetermined value, and the result determines which type of receptive field the pixel belongs to. If the brightness of the pixel is less than the predetermined value, the pixel belongs to an off-center receptive field; otherwise it belongs to an on-center one. On-center fields correspond to a signal of lightening and off-center fields to a signal of darkening [10, 11].
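The comparison described above can be sketched in a few lines. The function name `classify_bipolar`, the string labels and the threshold value used in the example are assumptions for illustration; the text only states that the brightness is compared with a predetermined value.

```python
ON_CENTER = "on"
OFF_CENTER = "off"


def classify_bipolar(image, threshold):
    """Label each pixel by the receptive field type it is assigned to.

    Brightness below `threshold` gives an off-center label,
    anything else an on-center label, as stated in the text.
    """
    return [
        [OFF_CENTER if value < threshold else ON_CENTER for value in row]
        for row in image
    ]


image = [
    [10, 200],
    [120, 128],
]
labels = classify_bipolar(image, threshold=128)
```

Note that a pixel exactly equal to the threshold falls into the "otherwise" branch and is therefore labelled on-center.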

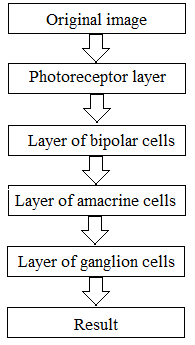

This gives the layer of bipolar cells. Based on its result, the pixels belonging to the amacrine layer are analyzed next. These cells determine the ganglion cell for the pixel under consideration. The final step is to compare the source pixels with a parameter that depends on the original image: the average brightness of its pixels. This determines whether a pixel belongs to a contour or to the object itself. The following figure shows the proposed algorithm schematically.
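Only the final comparison is concrete enough to sketch here; the amacrine and ganglion stages are not specified in enough detail to reproduce. The code below computes the average brightness and then applies a decision rule that is an assumption of this sketch, not the rule of the proposed method: a pixel is treated as a contour pixel when it lies on the opposite side of the average from at least one of its 4-neighbours. The names `mean_brightness` and `contour_pixels` are hypothetical.

```python
def mean_brightness(image):
    """Average brightness over all pixels of the image."""
    return sum(sum(row) for row in image) / (len(image) * len(image[0]))


def contour_pixels(image):
    """Pixels that differ from a 4-neighbour in their relation to the mean.

    This decision rule is an illustrative assumption: it marks a band
    on both sides of every boundary between above-mean and below-mean pixels.
    """
    rows, cols = len(image), len(image[0])
    mean = mean_brightness(image)
    bright = [[value >= mean for value in row] for row in image]
    contour = set()
    for r in range(rows):
        for c in range(cols):
            for rr, cc in ((r - 1, c), (r, c + 1), (r + 1, c), (r, c - 1)):
                inside = 0 <= rr < rows and 0 <= cc < cols
                if inside and bright[rr][cc] != bright[r][c]:
                    contour.add((r, c))
                    break
    return contour


# A bright 3x3 object on a dark 5x5 background.
image = [
    [10, 10, 10, 10, 10],
    [10, 200, 200, 200, 10],
    [10, 200, 200, 200, 10],
    [10, 200, 200, 200, 10],
    [10, 10, 10, 10, 10],
]
contour = contour_pixels(image)
```

In this example the centre pixel of the object is surrounded only by bright pixels, so it is classified as part of the object, while the pixels along the boundary are classified as contour pixels.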

Figure 2 – Flowchart of the retinal layers used to detect image contours

Conclusion

We have analyzed methods for detecting the contours of objects in an image and have considered an approach based on the mechanism of light perception by the retina of the human eye. It is believed that preprocessing of the global signal (which forms the image) takes place in the human retina. The proposed method models the main layers of the human retina (the layers of photoreceptors, bipolar cells, amacrine cells and ganglion cells). In the future, we plan to implement these layers using neural networks.

Note

This master's work is not yet complete. Final completion: February 2015. The full text of the work and materials on the topic can be obtained from the author or his supervisor after this date.

References

- Гонсалес Р. С., Вудс Р. Э. Цифровая обработка изображений. - М.: Техносфера, 2006. - 1072 с.

- Сойфер В. А. Компьютерная обработка изображений. - М.: Физматлит, 1996. - 784 с.

- Боюн В.П. Зоровий аналізатор людини як прототип для побудови сімейства проблемно-орієнтованих систем технічного зору / В.П. Боюн // Материалы Международной научно-технической конференции «Искусственный интеллект. Интеллектуальные системы. ИИ-2010». – 2010. – Т. 1. – С. 21-26.

- Boycott B.B. Organization of the Primate Retina: Light Microscopy / B.B. Boycott, J.E. Dowling // Philosophical Transactions of the Royal Society of London. – Series B, Biological Sciences. – vol. 255, is. 799. – 1969. – P. 109-184.

- Ramadevi Y., Sridevi T., Poornima B. Segmentation and Object Recognition using Edge Detection Techniques / Y. Ramadevi, T. Sridevi, B. Poornima, B. Kalyani // International Journal of Computer Science and Information Technology. – 2010. – vol. 2, No 6. – P. 153-161.

- Алгоритмы выделения контуров изображений // Electronic source. Access mode: http://habrahabr.ru/post/114452/

- Кудрявцев Л.В. Краткий курс математического анализа – M.: Наука, 1989 – 736с

- Differential Operators for Edge Detection // Electronic source. Access mode: http://dspace.mit.edu/bitstream/handle/1721.1/41198/ai_wp_252.pdf?sequence=4

- Сетчатка // Электронный журнал «Биология и медицина». // Electronic source. Access mode: http://medbiol.ru/medbiol/phus_ner/0008db2b.htm#0004a91e.htm

- Вовк О.Л. Учет особенностей строения сетчатки глаза человека для выделения контуров объектов изображений / О.Л. Вовк // Наукові праці Донецького національного технічного університету: “Інформатика, кібернетика та обчислювальна техніка”. – ДНТУ, Донецьк. – 2013. – Випуск 17 (205). – С.48-52.

- Gollisch T. Eye Smarter than Scientists Believed: Neural Computations in Circuits of the Retina / T. Gollisch, M. Meister // Neuron. – vol. 65, is. 2. – 2010. – P. 150-164.