Abstract

Contents

- Objective

- Tasks

- Relevance of the work

- Expected scientific novelty and practical significance of the work

- Planned practical results

- 1 Overview of Research and Development

- 1.1 Review of international developments

- 1.2 Review of national developments

- 1.3 Review of local developments

- 2 The architecture of the CMU Sphinx system

- 3 Preliminary results

- Conclusion

- References

Objective

To study the CMU Sphinx toolkit and, on its basis, to develop a speech interface for intelligent input and output of program source code.

Tasks

- Review of existing methods of speech recognition.

- Review of existing speech recognition systems.

- Formulation of the task of intelligent input and output of program code.

- Selection of tools for implementing automatic speech recognition.

- Development of the architecture of a speech interface for input and output of program code.

- Investigation of the automatic speech recognition processes in CMU Sphinx.

- Development of acoustic and language models.

- Evaluation of the efficiency of the developed models for voice input and output of program text.

- Development of a Java application.

Relevance of the work

At present, program source code is typed manually on the keyboard. This requires good typing skills and close attention, and it strains the eyes. This input method is time-consuming and not very comfortable [14]. The drawback can be eliminated by solving the problem of automatic speech recognition. Intelligent input and output of program source code is therefore a pressing task, and it could make work easier for both experienced developers and beginners.

Expected scientific novelty and practical significance of the work

The scientific novelty of this work lies in improving the efficiency of computer speech recognition with the CMU Sphinx toolkit, which in turn makes it possible to develop a speech interface for entering program code.

The practical significance of the work lies in creating a system for voice input and output of program code based on CMU Sphinx.

Planned practical results

- Development of a technology for building speech recognition systems with the CMU Sphinx tools.

- Building a system for voice input and text output of programs in the Pascal programming language.

- Assessment of the speech recognition quality of CMU Sphinx.

1 Overview of Research and Development

There are three basic methods of speech recognition:

- hidden Markov model;

- dynamic programming;

- neural networks.

These methods are often combined, and some software products use several of them at once.
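The first two methods are closely linked: decoding with a hidden Markov model is itself performed by dynamic programming, using the Viterbi algorithm. Below is a minimal Java sketch of Viterbi decoding. It assumes a toy HMM with a small set of discrete observation symbols. The class name `ViterbiSketch` and all probabilities are hypothetical. Real recognizers such as Sphinx score acoustic feature vectors with Gaussian mixture densities rather than a lookup table.

```java
import java.util.Arrays;

/**
 * Minimal Viterbi decoder for a discrete-observation HMM (illustrative toy).
 * Scores are kept in log space to avoid numerical underflow on long inputs.
 */
final class ViterbiSketch {

    /** Returns the most probable hidden-state sequence for the observation indices. */
    static int[] decode(double[] start, double[][] trans, double[][] emit, int[] obs) {
        int n = start.length;   // number of hidden states
        int t = obs.length;     // number of observations (frames)
        double[][] score = new double[t][n];
        int[][] back = new int[t][n];

        // Initialisation: log P(state) + log P(first observation | state)
        for (int s = 0; s < n; s++) {
            score[0][s] = Math.log(start[s]) + Math.log(emit[s][obs[0]]);
        }
        // Recursion: for each state keep only the best predecessor (dynamic programming)
        for (int i = 1; i < t; i++) {
            for (int s = 0; s < n; s++) {
                double best = Double.NEGATIVE_INFINITY;
                int arg = 0;
                for (int p = 0; p < n; p++) {
                    double cand = score[i - 1][p] + Math.log(trans[p][s]);
                    if (cand > best) {
                        best = cand;
                        arg = p;
                    }
                }
                score[i][s] = best + Math.log(emit[s][obs[i]]);
                back[i][s] = arg;
            }
        }
        // Termination: pick the best final state, then follow back-pointers
        int last = 0;
        for (int s = 1; s < n; s++) {
            if (score[t - 1][s] > score[t - 1][last]) {
                last = s;
            }
        }
        int[] path = new int[t];
        path[t - 1] = last;
        for (int i = t - 1; i > 0; i--) {
            path[i - 1] = back[i][path[i]];
        }
        return path;
    }

    public static void main(String[] args) {
        double[] start = {0.6, 0.4};
        double[][] trans = {{0.7, 0.3}, {0.4, 0.6}};
        double[][] emit = {{0.5, 0.4, 0.1}, {0.1, 0.3, 0.6}};
        System.out.println(Arrays.toString(decode(start, trans, emit, new int[] {0, 1, 2})));
    }
}
```

With the values in `main`, the decoder prints `[0, 0, 1]`. Only the best predecessor per state is kept at each step, so the cost is proportional to the number of frames times the square of the number of states. An exhaustive search over all paths would instead grow exponentially.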

1.1 Review of international developments

Combining the linguistic characteristics of human speech with additional articulatory data makes it possible to identify the speaker more accurately and to split the sound wave automatically into separate fragments [13]. A key issue in speech recognition is a database that covers all the words and their pronunciations.

Several open-source and closed-source speech recognition systems are reviewed below.

Sphinx is a speaker-independent continuous speech recognizer that uses hidden Markov models and an n-gram statistical language model. Sphinx can recognize continuous speech in a speaker-independent manner with a very large vocabulary [5]. Sphinx4 is a complete rewrite of the Sphinx speech engine. Its main purpose is to provide a flexible framework for speech recognition research. Sphinx4 is written entirely in the Java programming language [2]. Sun Microsystems made a major contribution to the development of Sphinx4 and helped review the project, which explains the choice of Java as the system's implementation language.
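To illustrate what an n-gram statistical language model estimates, here is a minimal bigram (n = 2) sketch in Java. It uses plain maximum-likelihood counts. Production models, including those used with Sphinx, add smoothing and back-off for unseen word pairs, and these are omitted here. The class name `BigramModel` and the Pascal-token training sentences are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy bigram language model: P(w | prev) = count(prev w) / count(prev). No smoothing. */
final class BigramModel {
    private final Map<String, Integer> historyCounts = new HashMap<>();
    private final Map<String, Integer> pairCounts = new HashMap<>();

    /** Adds one training sentence, padded with the sentence markers <s> and </s>. */
    void train(List<String> sentence) {
        String prev = "<s>";
        for (String word : sentence) {
            count(prev, word);
            prev = word;
        }
        count(prev, "</s>");
    }

    private void count(String prev, String word) {
        historyCounts.merge(prev, 1, Integer::sum);
        pairCounts.merge(prev + " " + word, 1, Integer::sum);
    }

    /** Maximum-likelihood estimate of P(word | prev); 0 for an unseen history. */
    double probability(String prev, String word) {
        int history = historyCounts.getOrDefault(prev, 0);
        if (history == 0) {
            return 0.0;
        }
        return pairCounts.getOrDefault(prev + " " + word, 0) / (double) history;
    }

    public static void main(String[] args) {
        BigramModel lm = new BigramModel();
        lm.train(Arrays.asList("begin", "writeln", "end"));
        lm.train(Arrays.asList("begin", "readln", "end"));
        lm.train(Arrays.asList("begin", "writeln", "writeln", "end"));
        // 2 of the 3 occurrences of "begin" are followed by "writeln"
        System.out.println(lm.probability("begin", "writeln"));
    }
}
```

During decoding, such probabilities are combined with acoustic scores, so word sequences that are frequent in the training text are preferred over acoustically similar but unlikely ones.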

Ongoing development includes:

- Training of new acoustic models.

- Implementation of speaker adaptation.

- Improved configuration management.

- Implementation of ConfDesigner, a graphical tool for designing system configurations.

Julius is a high-performance large vocabulary continuous speech recognition (LVCSR) decoder for speech research and related development. It can decode almost in real time on most current computers with a 60,000-word vocabulary using context-dependent hidden Markov models. The main feature of the project is that it is fully embeddable. It is also modular and independent of the model structure, and it supports various types of hidden Markov models, including shared-state triphones and tied-mixture models with any number of mixtures, states and phones [11].

RWTH ASR is an open-source speech recognition toolkit that provides the technology needed to build automatic speech recognition systems. It is developed by the Human Language Technology and Pattern Recognition Group at RWTH Aachen University. RWTH ASR includes tools for developing acoustic models and decoders, as well as components for speaker adaptation, speaker adaptive training, unsupervised training, discriminative training and lattice processing [10].

Google Speech API is a Google product that enables voice search using speech recognition technology. The technology is integrated into mobile phones and computers, where information can be entered by voice. On personal computers it is supported only in the Google Chrome browser. Android phones also offer voice control for entering voice commands. To use the speech recognition service, it is sufficient to register a Google Developers account, after which the system can be used within the terms of its license [8].

The Yandex speech technology suite includes speech recognition and synthesis, voice activation and extraction of semantic entities from spoken text. A major advantage is a cross-platform library for using Yandex speech technologies in mobile applications, together with a cloud service that gives any program or device access to them. The disadvantages are documentation that is difficult to understand and a limit of 10,000 requests per day [9].

1.2 Review of national developments

Apart from the International Research and Training Center for Information Technologies and Systems, no established companies are known that build software products based on speech recognition or synthesis. Apparently, such companies simply do not exist. There are only scientific institutions that conduct research in speech recognition and synthesis, or individual developers [1].

Information about the leader in speech recognition and synthesis in Ukraine is given below. Besides the leader, others also work on recognition and synthesis.

In Donetsk, the Speech Recognition Department of the State Institute of Artificial Intelligence works on speech recognition.

There is also an individual developer working on recognition and synthesis: Anatoly Black from Kharkov, with his alternative intelligence project. One of his developments is the synthesizer Rozmovlyalka.

Recently, a program for reading Ukrainian texts aloud has appeared. It supports three modes of use: reading electronic books in a special format, dictation (of texts selected from a collection of dictations or of an arbitrary text), and proofreading text by listening. The author uses his own speech synthesizer.

The Sound Image Recognition Department of the International Research and Training Center for Information Technologies and Systems is the leader in speech technologies in Ukraine. Since the late 1960s, work on speech recognition in the department (then part of the Institute of Cybernetics) has been led by T. K. Vintsyuk [1].

The department currently works in the following areas of speech recognition:

- recognition on portable devices;

- speaker-independent recognition;

- recognition with extra-large vocabularies;

- keyword recognition;

- recognition over telephone channels.

1.3 Review of local developments

At Donetsk National Technical University, the following master's students work on speech recognition under the supervision of O. Fedyaev, Associate Professor of Applied Mathematics and Computer Science:

- Bondarenko Ivan (Integration of visual and speech methods for controlling the input and editing of textual information);

- Verenich Ivan (Analysis of methods for building speech recognition systems based on hybrid hidden Markov models and neural networks);

- Nesterenko Dmitry (Automatic recognition of isolated Russian words based on wavelet analysis);

- Savkova Daria (Speech interface for intelligent input of programming language texts).

The main DonNTU articles on speech recognition are listed in Table 1.

Table 1 — Publications on topics related to speech recognition

| Article title | Source | Co-authors |
|---|---|---|
| Segment-Holistic Speech Recognition System Based on Model of Brain Hemispheric Interaction in Speech Perception | Proceedings of the 11th International Conference on Pattern Recognition and Information Processing PRIP'2011. — Minsk: Belarusian State University of Informatics and Radioelectronics, 2011. — pp. 226–230. | Bondarenko I., Fedyaev O. |
| Isolated Speech Word Recognition Based on Fuzzy Pattern Matching with Optimal Temporal Alignment | In Proc. of the 13th International Conference "Speech and Computer" SPECOM 2009, St. Petersburg, Russia, 2009. — pp. 454–457. | Bondarenko I., Fedyaev O. |
| Usage of a vocal component in interfaces of programmed systems | Interactive Systems: The Problems of Human-Computer Interaction. Proceedings of the International Conference, 23–27 September 2001. — Ulyanovsk: UISTU, 2001. — pp. 26–28. | Gladunov S., Fedyaev O. |

2 The architecture of the CMU Sphinx system

Sphinx4 was used as the development environment for the speech-based system for intelligent input and output of program code.

CMU Sphinx is a suite that includes several systems, of which Sphinx 4 and PocketSphinx are the most popular today. Each Sphinx system consists of two components [3]: a trainer and a decoder. The trainer creates an acoustic model adapted to specific needs, and the decoder performs the actual recognition. Note that the Sphinx trainer builds an acoustic model but does not adapt it to the speech characteristics of an individual speaker. The trainer is intended for developers who understand how speech recognition works, whereas an ordinary user should be able to interact with the system without any preparation [12]. This mode of operation is very useful for public services, such as automated telephone services.

Sphinx 4 makes use of the Java Speech API, although it does not implement that API's standard Recognizer interface. To demonstrate the system's capabilities, the developers provide small dictionaries intended for specific applications.

The top-level architecture of Sphinx4 is relatively simple and consists of three blocks: the Front End, the Knowledge Base and the Decoder.

The Front End is responsible for collecting, annotating and processing the input data. It also extracts features from the input data, which are then read by the decoder.
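The first Front End stages can be sketched in plain Java: pre-emphasis, splitting the signal into overlapping Hamming-windowed frames, and a simple per-frame feature (log energy). This sketch assumes 16 kHz audio, 25 ms frames (400 samples), a 10 ms shift (160 samples) and a pre-emphasis factor of 0.97. These are typical values, not necessarily the Sphinx4 defaults. The class `FrontEndSketch` is hypothetical, and it stops before the FFT, mel filter bank and DCT stages that produce the MFCC features Sphinx4 actually uses.

```java
/** Toy front-end stages: pre-emphasis, Hamming-windowed framing, log energy. */
final class FrontEndSketch {

    /** y[n] = x[n] - alpha * x[n-1]; boosts high frequencies before spectral analysis. */
    static double[] preEmphasize(double[] x, double alpha) {
        double[] y = new double[x.length];
        for (int n = 0; n < x.length; n++) {
            y[n] = x[n] - (n > 0 ? alpha * x[n - 1] : 0.0);
        }
        return y;
    }

    /** Cuts the signal into overlapping frames of `size` samples, `shift` samples apart. */
    static double[][] frames(double[] x, int size, int shift) {
        if (size < 2 || shift < 1) {
            throw new IllegalArgumentException("size must be >= 2 and shift >= 1");
        }
        if (x.length < size) {
            return new double[0][];
        }
        int count = 1 + (x.length - size) / shift;
        double[][] out = new double[count][size];
        for (int f = 0; f < count; f++) {
            for (int i = 0; i < size; i++) {
                // Hamming window tapers frame edges to reduce spectral leakage
                double window = 0.54 - 0.46 * Math.cos(2 * Math.PI * i / (size - 1));
                out[f][i] = x[f * shift + i] * window;
            }
        }
        return out;
    }

    /** Log energy of one frame; a small constant avoids log(0) on digital silence. */
    static double logEnergy(double[] frame) {
        double energy = 0.0;
        for (double v : frame) {
            energy += v * v;
        }
        return Math.log(energy + 1e-10);
    }

    public static void main(String[] args) {
        int rate = 16000;                    // assumed sample rate, Hz
        double[] signal = new double[rate];  // one second of audio
        for (int n = 0; n < signal.length; n++) {
            signal[n] = Math.sin(2 * Math.PI * 440 * n / rate);  // 440 Hz test tone
        }
        double[][] fr = frames(preEmphasize(signal, 0.97), 400, 160);
        System.out.println(fr.length + " frames, first log energy " + logEnergy(fr[0]));
    }
}
```

One second of 16 kHz audio yields 98 frames with these settings. The decoder then works on one feature vector per frame instead of on raw samples.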

The Knowledge Base contains the information the decoder needs, including the acoustic model and the language model. It can also receive feedback from the decoder, which allows it to modify itself dynamically based on search results. Such modifications may include switching acoustic and/or language models and updating parameters such as the mean and variance transforms of the acoustic models.

The Decoder performs most of the work. It reads features from the Front End, matches them against data from the Knowledge Base and the application, and searches for the most probable word sequence that the sequence of features could represent.

Unlike many speech recognition architectures, Sphinx4 allows the application to control many features of the speech engine. During decoding, the application can receive data from the decoder while the search is still in progress. These data let the application monitor the decoding process and influence it before it completes. In addition, the application can update the knowledge base at any time. Figure 1 shows a diagram of a speech recognition system based on CMU Sphinx.

Figure 1 — Control of a speech recognition system based on CMU Sphinx


Sphinx-4 is flexible and modular. Each module can be replaced, which lets developers experiment with different module implementations without changing other parts of the system. Sphinx-4 has a large number of configurable parameters, each of which can be tuned to improve system performance. These parameters can be set through the API or in an XML configuration file. The Sphinx-4 configuration system loads and configures modules dynamically at run time, which makes the system flexible and easy to customize. Sphinx also provides a number of tools for monitoring recognition quality and gathering statistics. Like the rest of the system, these tools are configurable, allowing developers to perform detailed analysis [6].
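The division of labour between the Front End, Knowledge Base and Decoder can be illustrated with a deliberately tiny Java sketch. So can the application's ability to watch decoding and change the knowledge base while it runs. All names here (`PipelineSketch`, `KnowledgeBase`, `decode`) are hypothetical and are not part of the Sphinx4 API. The "acoustic model" is just one reference feature vector per word, and each incoming vector is treated as one word. The sketch therefore shows only the data flow and the callback, not realistic recognition.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiConsumer;

final class PipelineSketch {

    /** Knowledge Base stand-in: one reference feature vector per word (hypothetical toy model). */
    static final class KnowledgeBase {
        final Map<String, double[]> models = new LinkedHashMap<>();

        /** Returns the word whose reference vector is closest in squared Euclidean distance. */
        String bestMatch(double[] feature) {
            String best = null;
            double bestDist = Double.POSITIVE_INFINITY;
            for (Map.Entry<String, double[]> e : models.entrySet()) {
                double d = 0.0;
                for (int i = 0; i < feature.length; i++) {
                    double diff = feature[i] - e.getValue()[i];
                    d += diff * diff;
                }
                if (d < bestDist) {
                    bestDist = d;
                    best = e.getKey();
                }
            }
            return best;
        }
    }

    /**
     * Decoder stand-in: pulls features from the front end (any Iterator, so the source is
     * replaceable), consults the knowledge base, and passes each partial hypothesis to the
     * application, which may modify the knowledge base before the next feature is processed.
     */
    static List<String> decode(Iterator<double[]> frontEnd, KnowledgeBase kb,
                               BiConsumer<List<String>, KnowledgeBase> onPartial) {
        List<String> words = new ArrayList<>();
        while (frontEnd.hasNext()) {
            words.add(kb.bestMatch(frontEnd.next()));
            onPartial.accept(new ArrayList<>(words), kb);
        }
        return words;
    }

    public static void main(String[] args) {
        KnowledgeBase kb = new KnowledgeBase();
        kb.models.put("begin", new double[] {0, 0});
        kb.models.put("end", new double[] {10, 10});
        List<double[]> features = List.of(new double[] {1, 1}, new double[] {9, 9});
        List<String> result = decode(features.iterator(), kb,
                (partial, base) -> System.out.println("partial: " + partial));
        System.out.println("final: " + result);
    }
}
```

Because the front end is only an `Iterator`, a microphone reader, a file reader or a test list can be swapped in without touching the decoder. This mirrors, on a small scale, the replaceable modules described above.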

3 Preliminary results

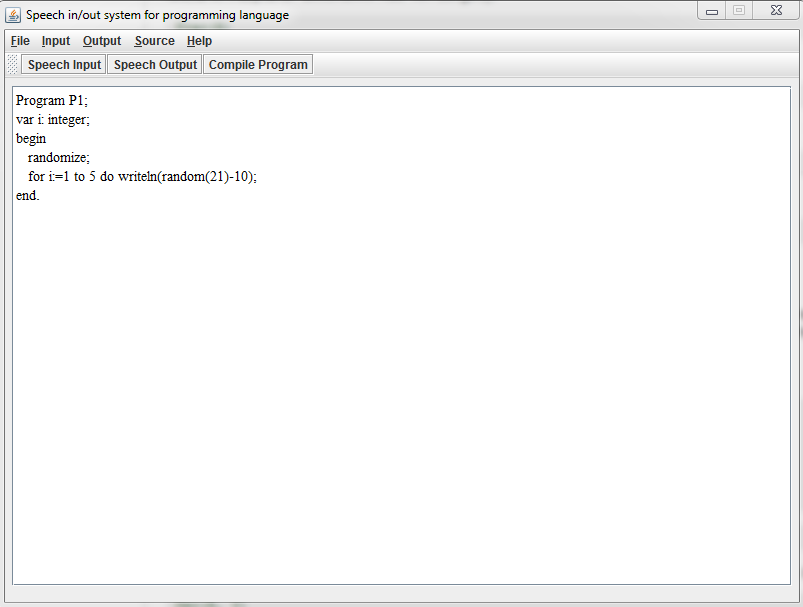

The first study assessed the quality of the model. For a series of experiments, dictionaries of 10, 20 and 168 words were created. They contained English words that are tokens of the Pascal programming language. A speaker-independent acoustic model was used. The experiments covered recognition of both isolated words without a grammar and phrases. Preliminary results of applying CMU Sphinx to this task are shown in Figure 2.

Figure 2 — Sphinx program window with voice input results

Experiments with different dictionaries showed that recognition quality degrades as the dictionary grows. The highest accuracy was obtained with the simplest, 10-word dictionary. Recognition quality can be improved by constructing the dictionary specifically for the task and building a trigram model for it. This approach makes it possible to recognize stable constructs rather than isolated words. Better accuracy also requires adjusting the system to the working environment, i.e. tuning the configuration file settings for the hardware and microphone. Work on improving the recognition rate is ongoing.
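The text does not state which accuracy measure was used. A common way to compare dictionaries of different sizes is the word error rate (WER): the word-level edit distance between the reference transcript and the recognizer output, divided by the number of reference words. Below is a minimal Java sketch of WER. It is an independent illustration, not Sphinx code, and the class name and Pascal-token strings are hypothetical.

```java
/** Word error rate: (substitutions + deletions + insertions) / number of reference words. */
final class WordErrorRate {

    /** Word-level Levenshtein distance computed by dynamic programming. */
    static int editDistance(String[] ref, String[] hyp) {
        int[][] d = new int[ref.length + 1][hyp.length + 1];
        for (int i = 0; i <= ref.length; i++) {
            d[i][0] = i;  // i deletions
        }
        for (int j = 0; j <= hyp.length; j++) {
            d[0][j] = j;  // j insertions
        }
        for (int i = 1; i <= ref.length; i++) {
            for (int j = 1; j <= hyp.length; j++) {
                int substitute = d[i - 1][j - 1] + (ref[i - 1].equals(hyp[j - 1]) ? 0 : 1);
                int delete = d[i - 1][j] + 1;
                int insert = d[i][j - 1] + 1;
                d[i][j] = Math.min(substitute, Math.min(delete, insert));
            }
        }
        return d[ref.length][hyp.length];
    }

    /** Splits on whitespace; an empty or blank string gives no tokens. */
    private static String[] tokens(String text) {
        String t = text.trim();
        return t.isEmpty() ? new String[0] : t.split("\\s+");
    }

    static double wer(String reference, String hypothesis) {
        String[] ref = tokens(reference);
        if (ref.length == 0) {
            throw new IllegalArgumentException("reference must contain at least one word");
        }
        return editDistance(ref, tokens(hypothesis)) / (double) ref.length;
    }

    public static void main(String[] args) {
        // one substitution ("readln" for "writeln") in three reference words
        System.out.println(wer("begin writeln end", "begin readln end"));
    }
}
```

Measuring WER on the same test utterances for the 10-, 20- and 168-word dictionaries would turn the qualitative observation above into comparable numbers.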

Conclusion

The review of articles and current developments showed that speech recognition is as relevant today as ever. The absence of similar publicly available systems for entering program code by voice confirms the scientific novelty and relevance of this work.

Evaluation of the system built so far shows that both the dictionaries and the acoustic models need refinement. Better recognition results also require adjustment to the speaker and the equipment. Further work will be carried out in these directions.

References

- Website on speech recognition and synthesis in Ukraine [Electronic source]. — Access Mode: http://speech.com.ua.

- CMU Sphinx Open Source Toolkit For Speech Recognition Evaluation [Electronic source]. — Access Mode: http://cmusphinx.sourceforge.net/.

- Sphinx-4: A Flexible Open Source Framework for Speech Recognition [Electronic source]. — Access Mode: http://twiki.di.uniroma1.it/pub/NLP/WebHome/Sphinx4Whitepaper.pdf.

- Fedyaev O. I., Savkova D. G., Bakalenko V. S. Speech interface for intelligent input of program source code // 15th International Scientific Conference named after T. A. Taran "Intelligent Analysis of Information" (IAI–2015), Kyiv, May 20–21, 2015. — Kyiv: Prosvita, 2015. — pp. 21–28.

- Rabiner L. R. Hidden Markov models and their application in selected speech recognition applications // TIIER. — 1984. — Vol. 72, No. 2. — pp. 86–120.

- Savkova D. G., Bondarenko I. Yu. Experience in applying the Sphinx tool system to recognizing voice commands for controlling computer systems // Proceedings of the 3rd All-Ukrainian Scientific and Practical Conference "Information Control Systems and Computer Monitoring" (IUS KM–2012). — Donetsk: DonNTU, 2012. — pp. 111–117.

- Welcome — Russian Evaluation [Electronic source]. — Access Mode: http://www.voxforge.org/ru.

- Using Google Speech API to control a computer [Electronic source]. — Access Mode: http://habrahabr.ru/post/144535/.

- SpeechKit speech technologies [Electronic source]. — Access Mode: https://tech.yandex.ru/speechkit/.

- RWTH ASR — The RWTH Aachen University Speech Recognition System [Electronic source]. — Access Mode: http://www-i6.informatik.rwth-aachen.de/rwth-asr/.

- Open-Source Large Vocabulary CSR Engine Julius [Electronic source]. — Access Mode: http://julius.osdn.jp/en_index.php.

- Example of the Baum–Welch Algorithm [Electronic source]. — Access Mode: http://www.indiana.edu/~iulg/moss/hmmcalculations.pdf.

- Vintsyuk T. K. Analysis, recognition and interpretation of speech signals. — Kyiv: Naukova Dumka, 1987. — 264 p.

- Chistovich L. A., Ventsov A. V., Granstrem M. P. et al. Physiology of speech. Human speech perception. — Leningrad: Nauka, 1976.